LCEL Demo 6

In this lesson, we dive into advanced LCEL patterns by injecting custom logic into your pipelines and inspecting their internal structure. You’ll learn how to:

- Build a basic LCEL chain.
- Wrap Python functions as RunnableLambda components.
- Debug and compute additional metrics.
- Visualize and explore your chain graph with grandalf.

1. Creating a Basic LCEL Chain

Start by defining a simple chain that generates a one-line description for any topic using OpenAI’s Chat API:

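from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI
from langchain_core.output_parsers import StrOutputParser

# Template with a single input variable, {topic}
prompt = ChatPromptTemplate.from_template("Give me a one-line description of {topic}")
# ChatOpenAI reads the API key from the OPENAI_API_KEY environment variable
model = ChatOpenAI()
# Extracts the text content of the model's reply as a plain string
output_parser = StrOutputParser()

# The | operator pipes each component's output into the next
chain = prompt | model | output_parser

result = chain.invoke({"topic": "AI"})
print(result)
# 'AI is the simulation of human intelligence processes by machines, especially computer systems.'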

| Component | Purpose | Example |
|---|---|---|
| ChatPromptTemplate | Build the prompt from a template | from_template("Give me a one-line...") |
| ChatOpenAI | Call the OpenAI chat model | ChatOpenAI() |
| StrOutputParser | Parse the raw LLM output | StrOutputParser() |
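To build intuition for what the pipe in prompt | model | output_parser is doing, here is a toy sketch in plain Python. It is not LangChain's implementation: the Step class and the three fake stages are hypothetical stand-ins, and no model is called. It only shows the general idea that a | b can produce a new step that runs a and then feeds the result to b.

```python
from typing import Any, Callable


class Step:
    """Toy stand-in for a runnable: wraps a one-argument function."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new step that runs a, then passes its result to b.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Hypothetical stand-ins for the prompt, model, and parser stages.
fake_prompt = Step(lambda inputs: f"Give me a one-line description of {inputs['topic']}")
fake_model = Step(lambda text: {"content": f"(model reply to: {text})"})
fake_parser = Step(lambda message: message["content"])

toy_chain = fake_prompt | fake_model | fake_parser
toy_result = toy_chain.invoke({"topic": "AI"})
print(toy_result)
# (model reply to: Give me a one-line description of AI)
```

Each stage only needs to accept the previous stage's output, which is why the chain's stages can be swapped or extended independently.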

2. Adding Custom Transformations

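You can insert your own Python functions into the chain by wrapping them in RunnableLambda. The wrapped function receives the previous step's output as its argument.

from langchain_core.runnables import RunnableLambda

def to_titlecase(text: str) -> str:
    # str.title() uppercases the first letter of each word and lowercases the rest
    return text.title()

chain = prompt | model | output_parser | RunnableLambda(to_titlecase)

titlecased = chain.invoke({"topic": "AI"})
print(titlecased)
# 'Ai Is The Simulation Of Human Intelligence Processes By Machines, Especially Computer Systems.'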

Note

Use RunnableLambda to wrap any Python function that takes a single input and insert it at any stage of your chain.
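Because the wrapped function is ordinary Python, you can check its behavior on its own before adding it to a chain. The sketch below does that for to_titlecase and shows why the output above reads 'Ai': str.title() lowercases everything after the first letter of each word and also capitalizes after apostrophes and hyphens. The to_capwords helper is a hypothetical alternative built on the standard library's string.capwords, not something the lesson uses.

```python
import string


def to_titlecase(text: str) -> str:
    return text.title()


def to_capwords(text: str) -> str:
    # string.capwords splits on whitespace only, so apostrophes and
    # hyphens inside a word do not start a new capital letter.
    return string.capwords(text)


sample = "it's a one-line description of AI"
titled = to_titlecase(sample)
capped = to_capwords(sample)
print(titled)  # It'S A One-Line Description Of Ai
print(capped)  # It's A One-line Description Of Ai
```

Neither function preserves the acronym, so if casing of terms like AI matters, the transformation would need its own handling for them.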

3. Computing Metrics with Custom Logic

You can further extend the pipeline to compute metrics like text length. Here’s an example that also prints the intermediate value for debugging:

def get_len(text: str) -> int:
    print(text)  # debug print
    return len(text)

chain = (
    prompt
    | model
    | output_parser
    | RunnableLambda(to_titlecase)
    | RunnableLambda(get_len)
)

length = chain.invoke({"topic": "AI"})
# Prints: 'Ai Is The Simulation Of Human Intelligence Processes By Machines, Especially Computer Systems.'
print(length)
# 94

Warning

Remember to remove or disable debug print statements in production to avoid cluttering your logs.
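One way to follow this advice without deleting the debug line is to route it through Python's standard logging module, so the message can be switched off by configuration. This is a minimal sketch under that assumption; the logger name lcel_demo is hypothetical, and the function is called directly here rather than through a chain.

```python
import logging

logger = logging.getLogger("lcel_demo")  # hypothetical logger name


def get_len(text: str) -> int:
    # Emitted only when logging is configured at DEBUG level.
    logger.debug("get_len input: %s", text)
    return len(text)


# DEBUG shows the message; use logging.INFO or higher to silence it.
logging.basicConfig(level=logging.DEBUG)

length = get_len("Ai Is The Simulation")
print(length)  # 20
```

The function keeps the same signature, so it can be wrapped with RunnableLambda in the same way as the print-based version.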

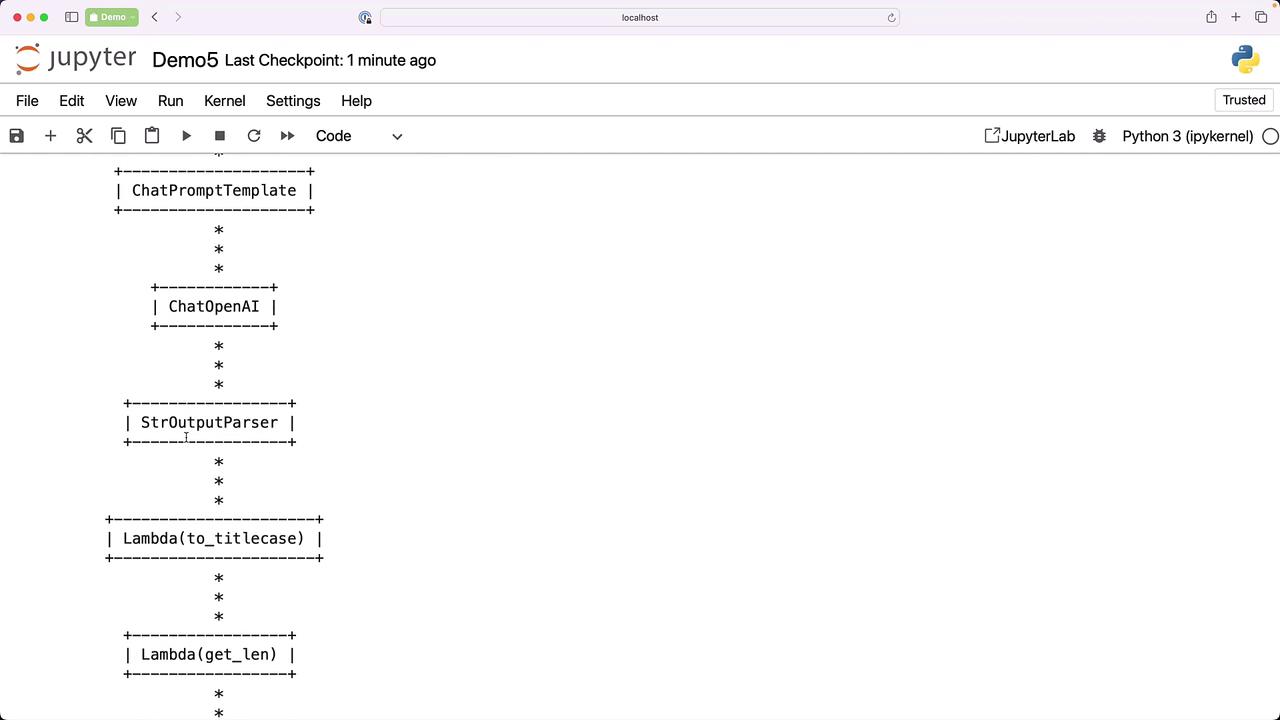

4. Inspecting Your Chain with Grandalf

Every LCEL chain exposes its internal structure as a graph. Rendering that graph as ASCII art depends on the grandalf package, so install it first:

pip install grandalf

Retrieve and print the raw graph:

graph = chain.get_graph()

print(graph)

For a clearer hierarchical view, render it as ASCII art:

chain.get_graph().print_ascii()

+------------------------------+
|         PromptInput          |
+------------------------------+
               *
+------------------------------+
|      ChatPromptTemplate      |
+------------------------------+
               *
+------------------------------+
|          ChatOpenAI          |
+------------------------------+
               *
+------------------------------+
|       StrOutputParser        |
+------------------------------+
               *
+------------------------------+
| RunnableLambda(to_titlecase) |
+------------------------------+
               *
+------------------------------+
|   RunnableLambda(get_len)    |
+------------------------------+

This ASCII diagram illustrates the data flow from prompt generation through model invocation, parsing, custom transformations, and final metric calculation.

5. Next Steps

With custom runnables and introspection in your toolkit, you can combine multiple chains, integrate external APIs, and build complex, maintainable workflows in LCEL.
