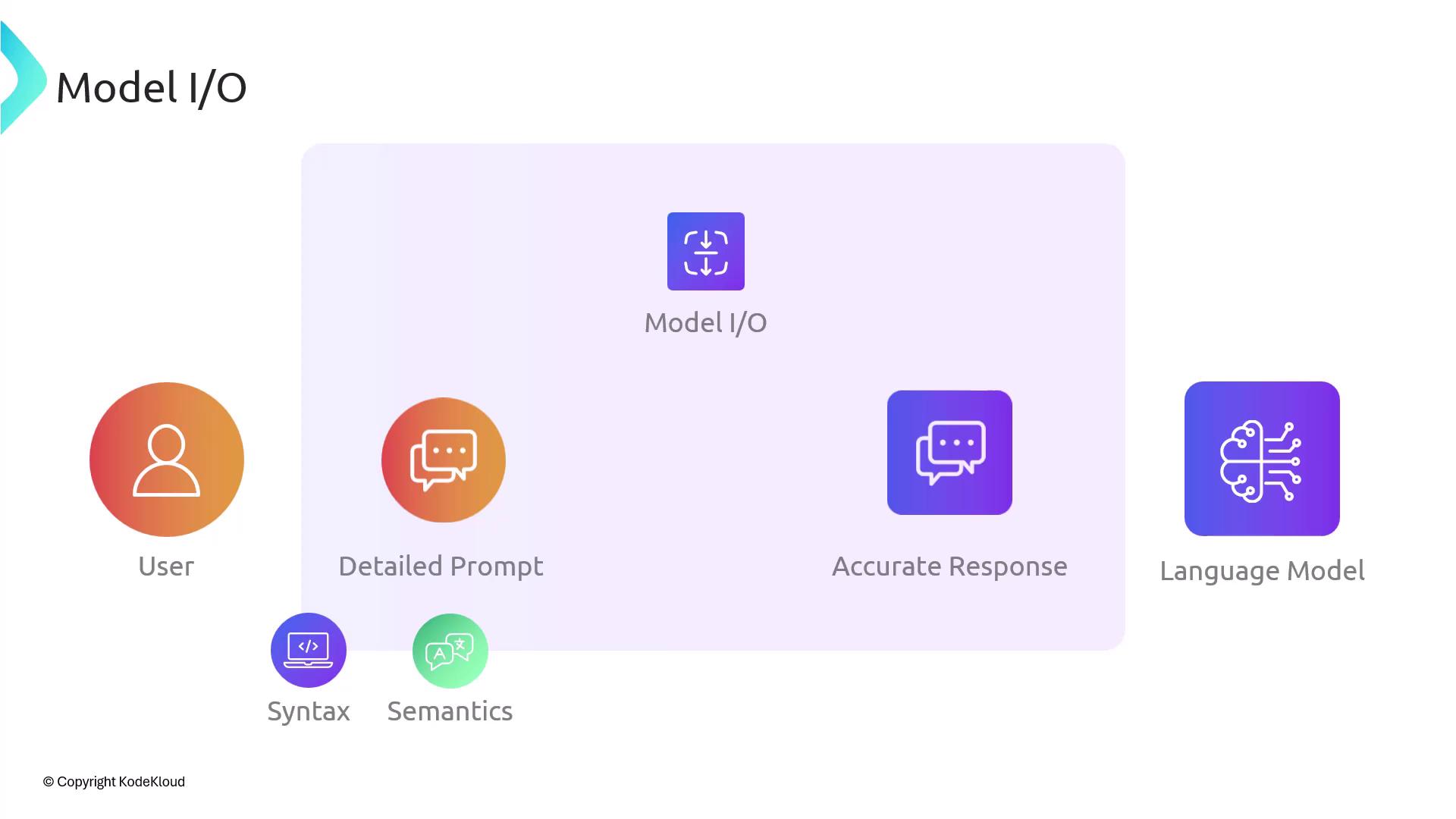

Why Model I/O Matters

Prompt engineering and response parsing are two sides of the same coin. A generic, one-line prompt often produces ambiguous or incomplete outputs. Conversely, a carefully crafted prompt written in the LLM’s preferred syntax and semantics leads to more accurate, relevant responses. High-quality prompts can significantly reduce hallucinations and improve the reliability of your language-model applications.

- Structure Prompts: Prepare and format input text (using templates, variables, and conditional logic) so the LLM understands your intent.
- Parse Responses: Extract, validate, and convert the LLM’s raw output into schemas or data structures your application can consume.
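
As a rough illustration of structuring a prompt with a template, variables, and a small piece of conditional logic, here is a minimal sketch using only Python's standard library. The template wording, the `build_prompt` helper, and the `want_json` flag are hypothetical names chosen for this example; they are not part of any particular LLM framework.

```python
from string import Template

# Hypothetical template: the wording and placeholder names are illustrative only.
REVIEW_PROMPT = Template(
    "You are a support assistant.\n"
    "Classify the sentiment of the review below as positive, negative, or neutral.\n"
    "${format_hint}"
    "Review: ${review}"
)


def build_prompt(review: str, want_json: bool = False) -> str:
    """Fill the template, adding a format instruction only when requested."""
    # Conditional logic: include an output-format hint only if the caller wants JSON.
    format_hint = (
        'Respond with JSON like {"sentiment": "...", "confidence": 0.0}.\n'
        if want_json
        else ""
    )
    return REVIEW_PROMPT.substitute(review=review.strip(), format_hint=format_hint)


prompt = build_prompt("  The battery died after two days.  ", want_json=True)
print(prompt)
```

Keeping the template separate from the values that fill it makes the prompt easier to review and reuse, and the optional format hint shows how one template can serve both free-text and structured-output use cases.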

Core Model I/O Tasks

| Task | Purpose |
|---|---|
| Prompt Engineering | Design templates, inject context, and apply best practices to guide the LLM’s output. |
| Response Parsing | Use validators, regex, and type schemas to transform model text into reliable data formats. |
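
To make the response-parsing task more concrete, the sketch below uses a regex, a validator, and a typed schema from Python's standard library. It assumes the model was asked to reply with a JSON object containing `sentiment` and `confidence` fields; those field names, the `SentimentResult` dataclass, and the sample model output are all hypothetical and only serve the illustration.

```python
import json
import re
from dataclasses import dataclass

# Assumed label set for this example.
ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}


@dataclass
class SentimentResult:
    sentiment: str
    confidence: float


def parse_response(raw: str) -> SentimentResult:
    """Extract a JSON object from raw model text and validate its fields."""
    # Regex step: grab everything from the first "{" to the last "}",
    # which tolerates chatty text before or after the JSON payload.
    match = re.search(r"\{.*\}", raw, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in model output")

    # json.JSONDecodeError is a subclass of ValueError, so callers
    # can catch ValueError for every failure mode here.
    data = json.loads(match.group(0))

    # Validation step: normalise and check each field before building the result.
    sentiment = str(data.get("sentiment", "")).lower()
    if sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment: {sentiment!r}")

    confidence = float(data.get("confidence", 0.0))
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence out of range: {confidence}")

    return SentimentResult(sentiment=sentiment, confidence=confidence)


# Hypothetical raw output, including the kind of preamble models often add.
raw_output = 'Sure! Here is the result:\n{"sentiment": "Negative", "confidence": 0.92}'
result = parse_response(raw_output)
print(result)
```

Raising an error on malformed or out-of-range output, rather than passing it through, lets the calling code decide whether to retry the request or fall back to a default.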