Resources

Before diving in, note that LangChain evolves quickly alongside generative AI. This guide covers versions 0.1.10 and 0.1.11. To match the examples below, install one of these versions:

pip install langchain==0.1.11

# or

pip install langchain==0.1.10

Warning

Always pin your LangChain version to ensure compatibility with course notebooks and examples.
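To confirm that a pinned install actually took effect, a small check like the one below can help. It is a minimal sketch that uses only the standard library; the helper names (`is_supported_version`, `installed_langchain_version`) are hypothetical and not part of LangChain.

```python
from importlib import metadata
from typing import Optional

# Versions this guide targets (taken from the text above).
SUPPORTED_VERSIONS = {"0.1.10", "0.1.11"}


def is_supported_version(version: str) -> bool:
    """Return True if the version string is one this guide covers."""
    return version.strip() in SUPPORTED_VERSIONS


def installed_langchain_version() -> Optional[str]:
    """Return the installed langchain version, or None if it is not installed."""
    try:
        return metadata.version("langchain")
    except metadata.PackageNotFoundError:
        return None


found = installed_langchain_version()
if found is None:
    print("langchain is not installed")
elif is_supported_version(found):
    print(f"langchain {found} matches this guide")
else:
    print(f"langchain {found} is installed; the examples may behave differently")
```

Running this at the top of a notebook gives an early signal of a version mismatch before any example fails.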

Official Website and Documentation

Start your LangChain journey by browsing the official site and documentation:

- Website: https://langchain.com

- Docs: https://langchain.readthedocs.io

You can also follow the LangChain blog for tutorials and release notes, and explore the Oracle Cloud Infrastructure Python SDK docs:

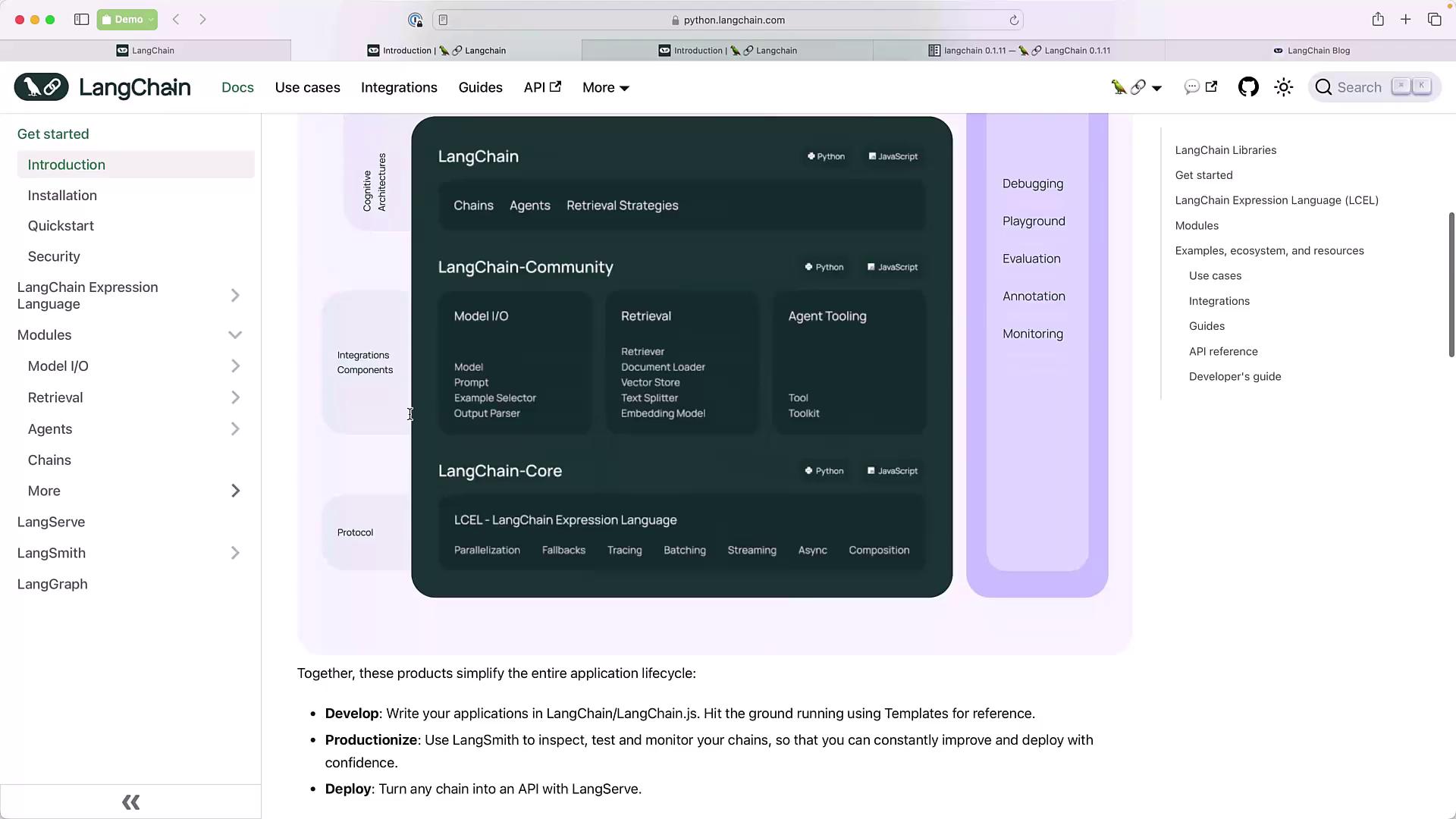

Documentation Overview

LangChain’s documentation covers everything from basic concepts to advanced modules.

Below is a quick reference for core modules aligned with this course:

| Module | Description |
|---|---|
| Model I/O | Formatting, predicting, and parsing LLM requests |
| Prompt Engineering | Building and testing templates |
| Chat Models | Conversational interfaces |
| Output Parsers | Structured data extraction |
| Retrieval Agents | Querying external knowledge |
| Chains | Orchestrating multi-step processes |
| Memory | Context management between interactions |

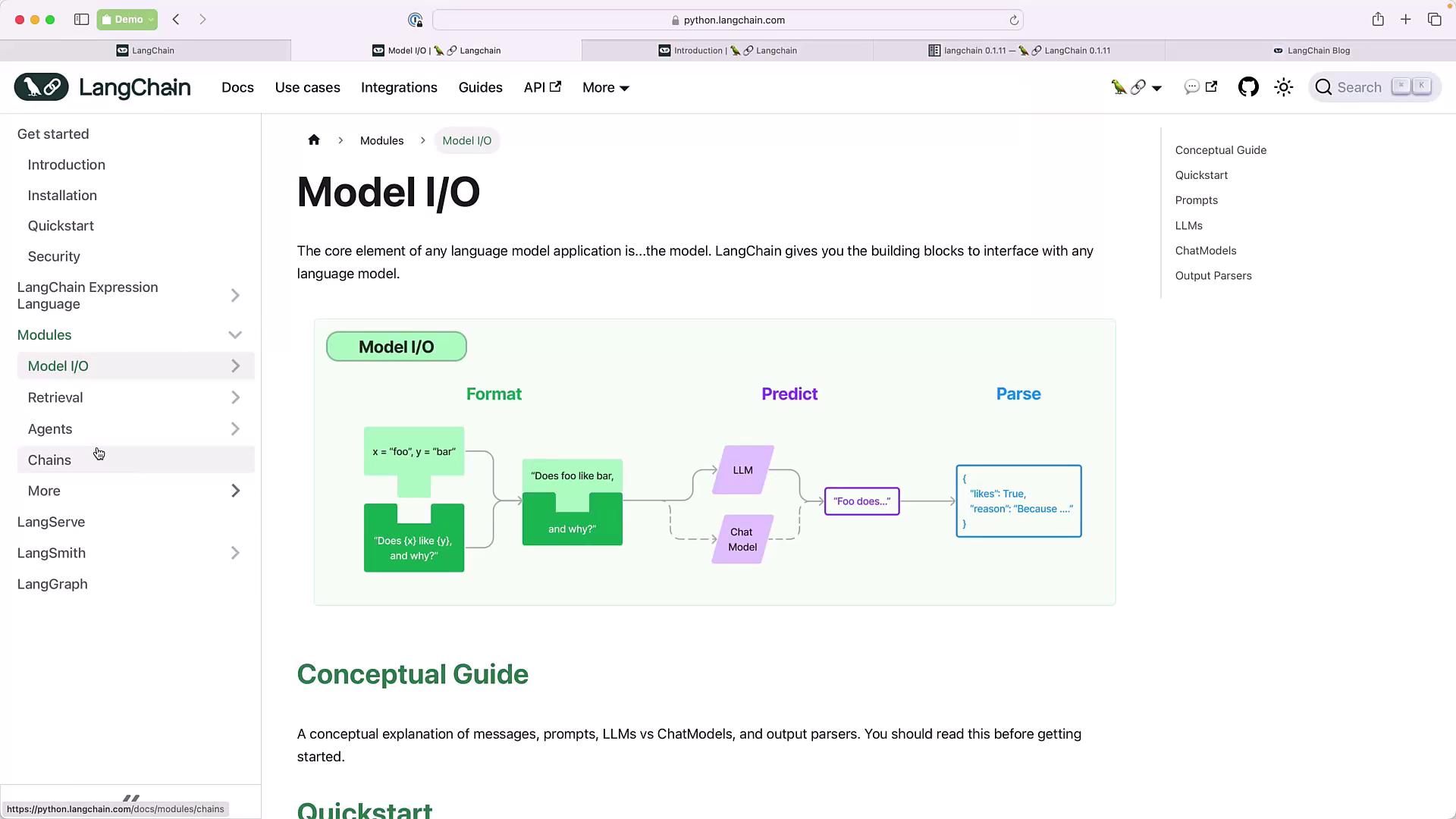

Core Modules and Model I/O

LangChain’s core sections include Model I/O, prompt engineering, chat models, output parsers, retrieval agents, chains, and memory. Here’s a representative flowchart for Model I/O:

Note

New modules such as LangServe, LangSmith, and LangGraph are under active development and not covered in this guide. Apply for early access if you’d like to experiment.

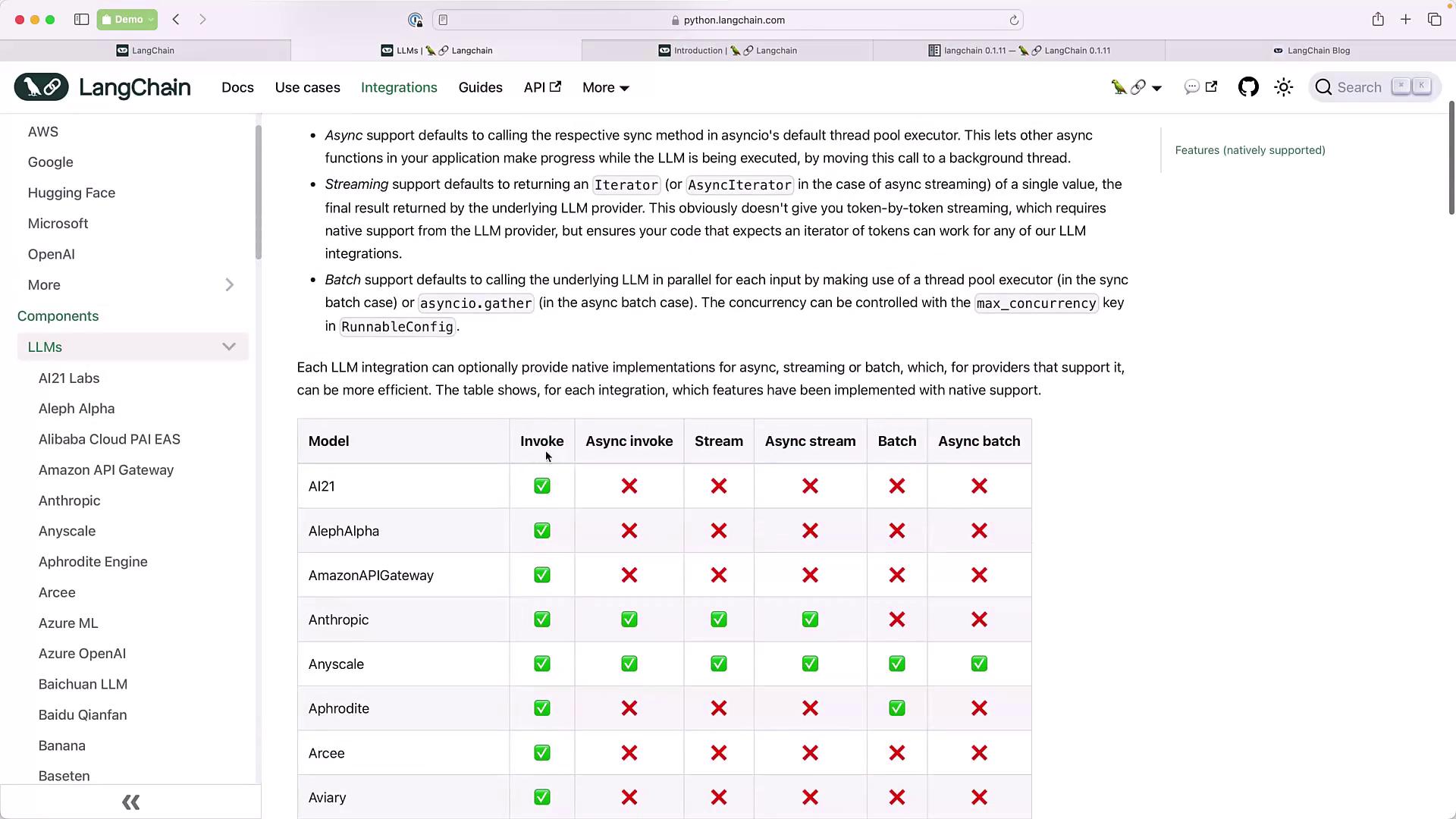
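The three Model I/O stages (format, predict, parse) can be illustrated in plain Python. This is a sketch of the idea only and does not use LangChain's classes: `fake_model` is a hypothetical stand-in for a real LLM call, and every function name here is invented for illustration.

```python
import json
from typing import Callable


def format_prompt(template: str, **variables: str) -> str:
    """Format stage: fill the template's placeholders."""
    return template.format(**variables)


def fake_model(prompt: str) -> str:
    """Predict stage: stand-in for a real LLM call; returns canned JSON text."""
    return json.dumps({"prompt_chars": len(prompt), "answer": "stub response"})


def parse_output(raw: str) -> dict:
    """Parse stage: turn the raw model text into structured data."""
    return json.loads(raw)


def run_model_io(template: str, model: Callable[[str], str], **variables: str) -> dict:
    """Run format -> predict -> parse in order."""
    return parse_output(model(format_prompt(template, **variables)))


result = run_model_io("Tell me a {adjective} joke", fake_model, adjective="funny")
print(result["answer"])
```

Swapping `fake_model` for a function that calls a real provider would leave the other two stages unchanged, which is the point of separating them.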

Third-Party Integrations

LangChain integrates with dozens of LLM providers, embedding models, and vector stores. You can filter integrations based on support for invoke, async, streaming, batch, and more.

Embedding models and databases follow a similar pattern; check the Integrations section for your preferred vendor.
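To make the capability names concrete, here is a toy class that exposes the four call styles. The method names `invoke`, `batch`, `stream`, and `ainvoke` mirror those on LangChain's runnable interface, but `EchoModel` itself is hypothetical: it only upper-cases its input and calls no provider.

```python
import asyncio
from typing import Iterator, List


class EchoModel:
    """Hypothetical stand-in for an integration; it only upper-cases its input."""

    def invoke(self, prompt: str) -> str:
        """Single synchronous call."""
        return prompt.upper()

    def batch(self, prompts: List[str]) -> List[str]:
        """Several inputs in one call; results keep the input order."""
        return [self.invoke(p) for p in prompts]

    def stream(self, prompt: str) -> Iterator[str]:
        """Yield the response piece by piece (here, word by word)."""
        for word in self.invoke(prompt).split():
            yield word

    async def ainvoke(self, prompt: str) -> str:
        """Async variant of invoke; yields control to the event loop once."""
        await asyncio.sleep(0)
        return self.invoke(prompt)


model = EchoModel()
print(model.invoke("hello world"))
print(model.batch(["first", "second"]))
print(list(model.stream("hello world")))
print(asyncio.run(model.ainvoke("hello")))
```

An integration that lacks native streaming or async support may still offer these methods through a fallback, so the filter on the Integrations page is the place to check what a given provider supports natively.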

Python SDK and API Reference

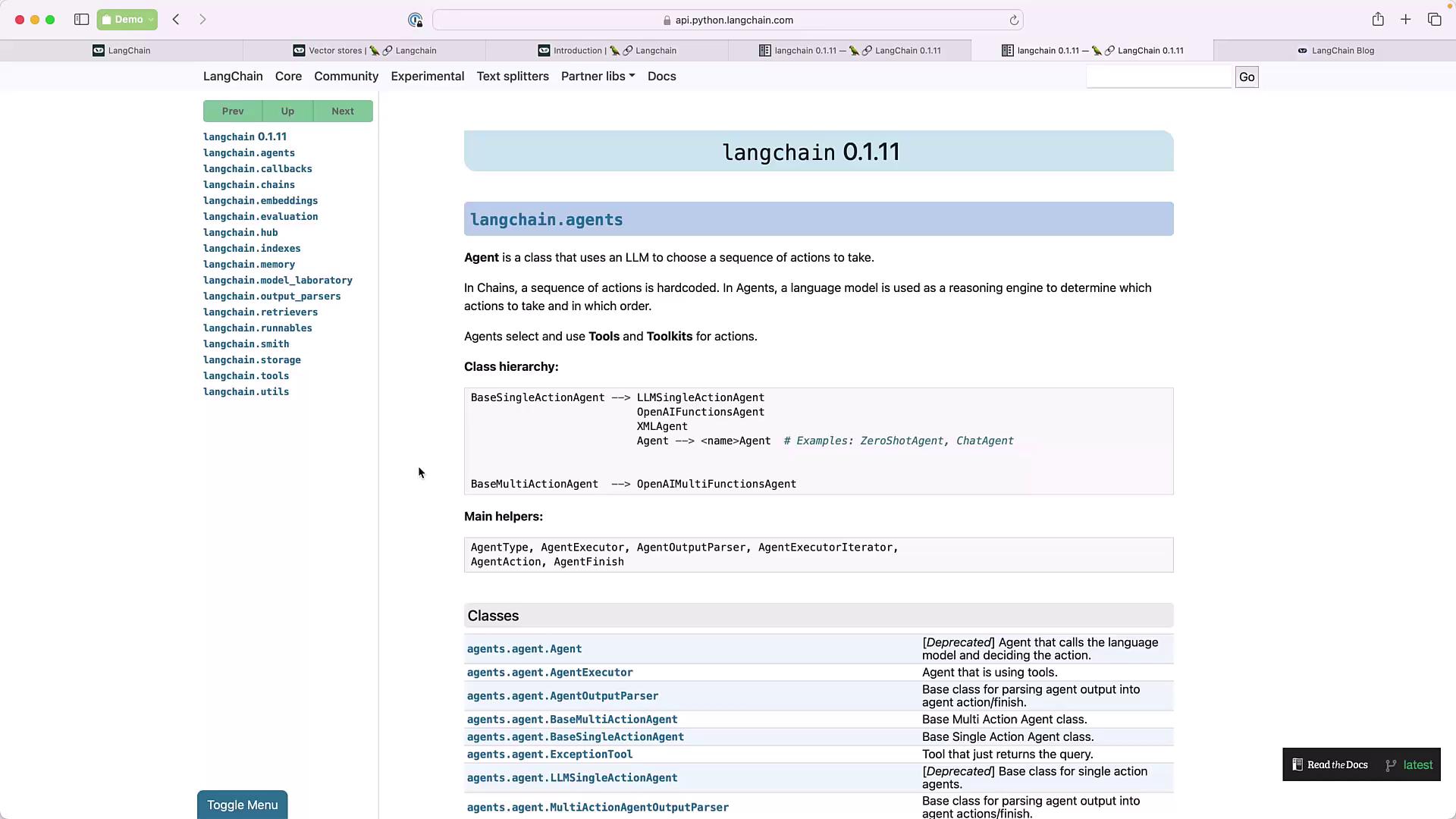

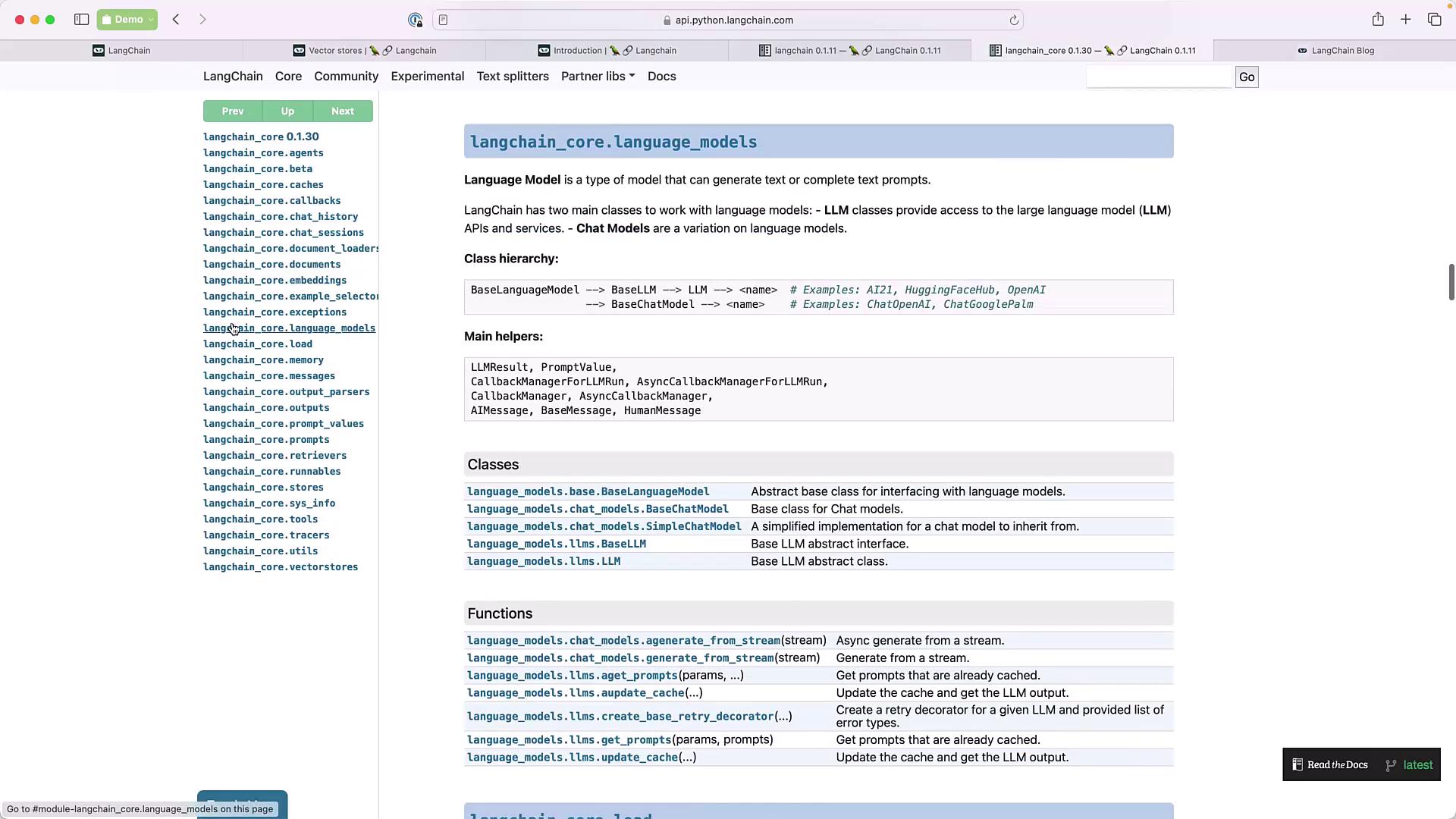

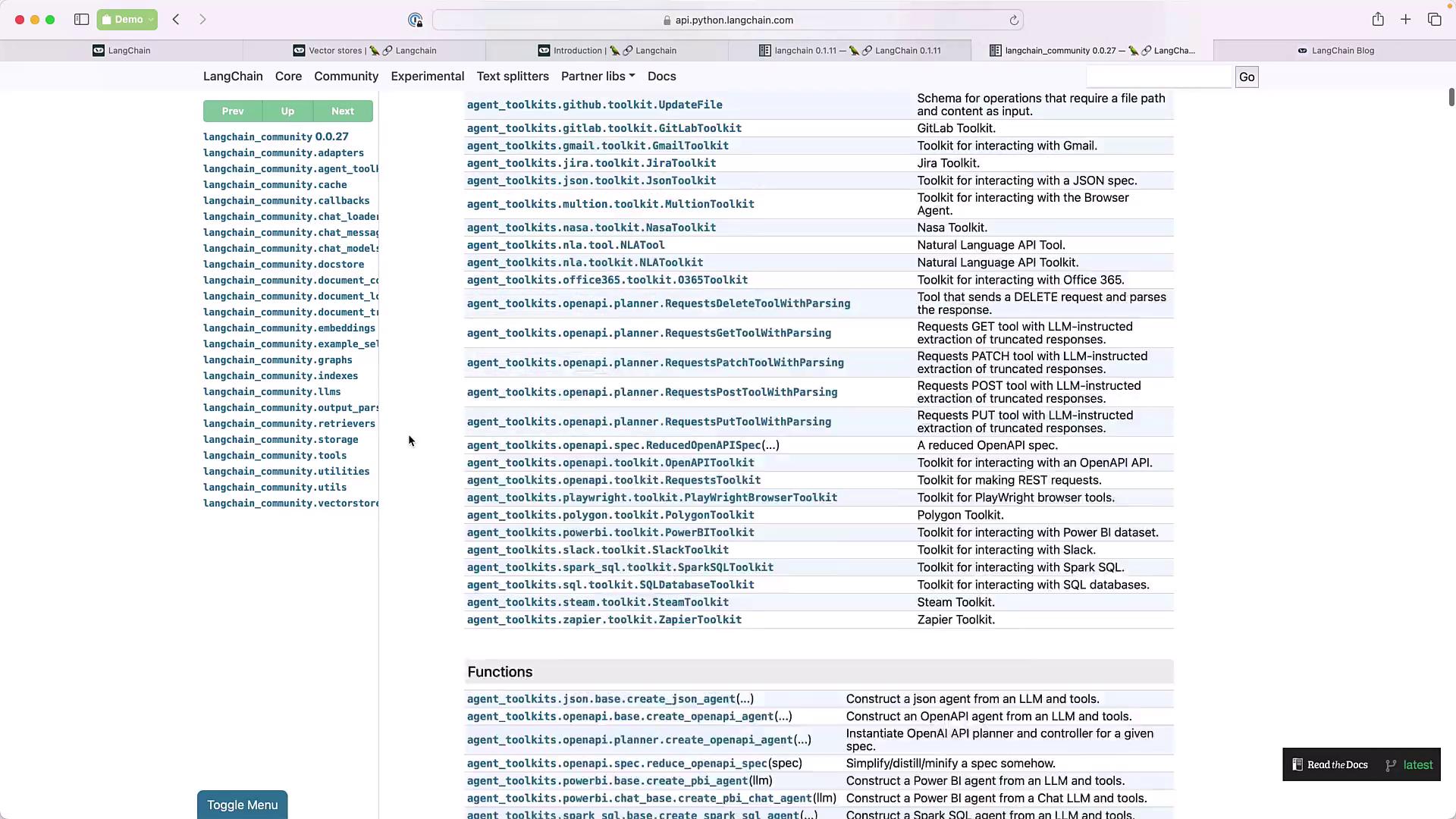

Every LangChain component is documented under the API reference. You’ll find details for agents, language models, chains, toolkits, and community modules.

Agents

Language Models

Agent Toolkits

A variety of toolkits help you build and customize agents:

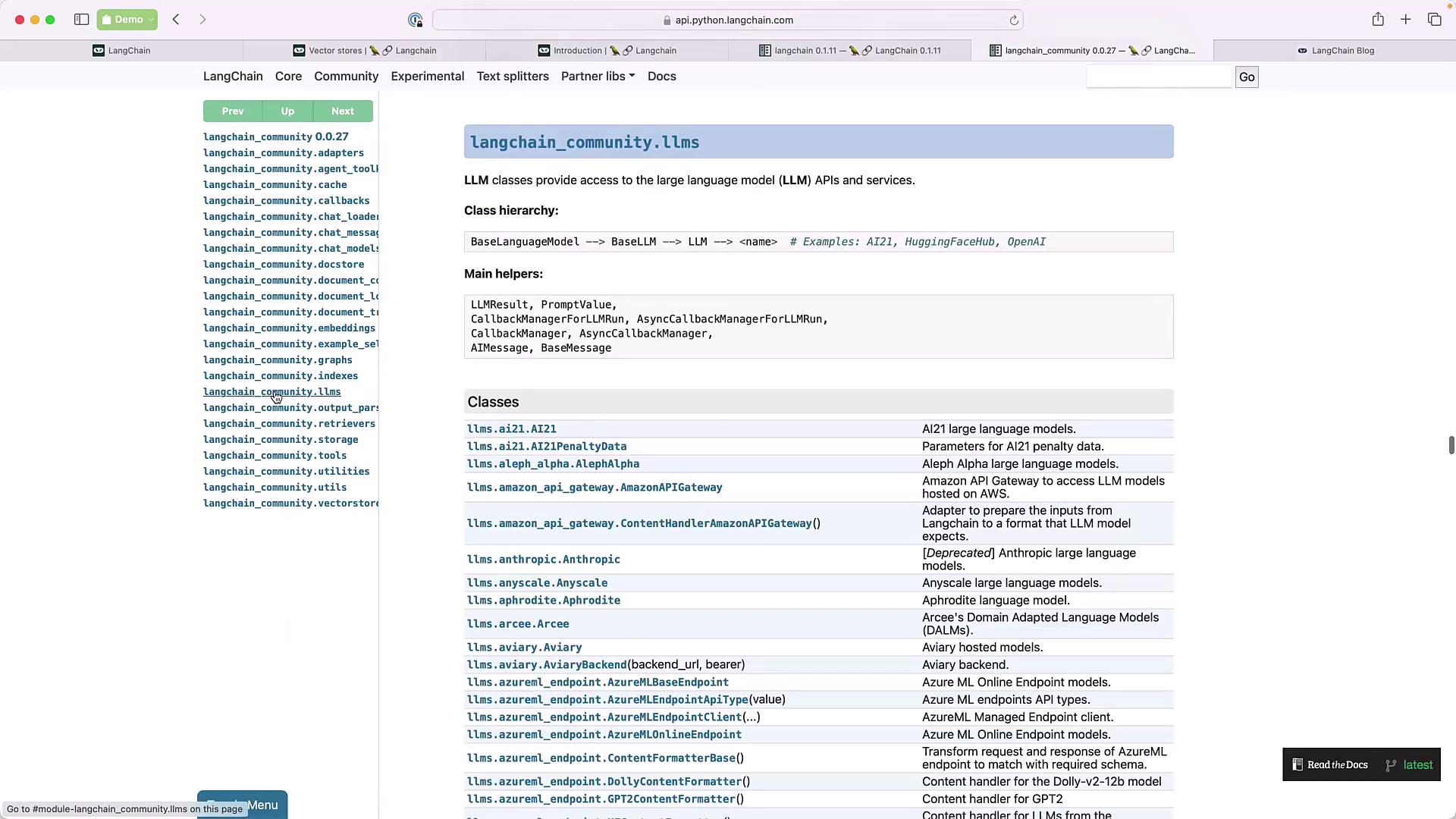

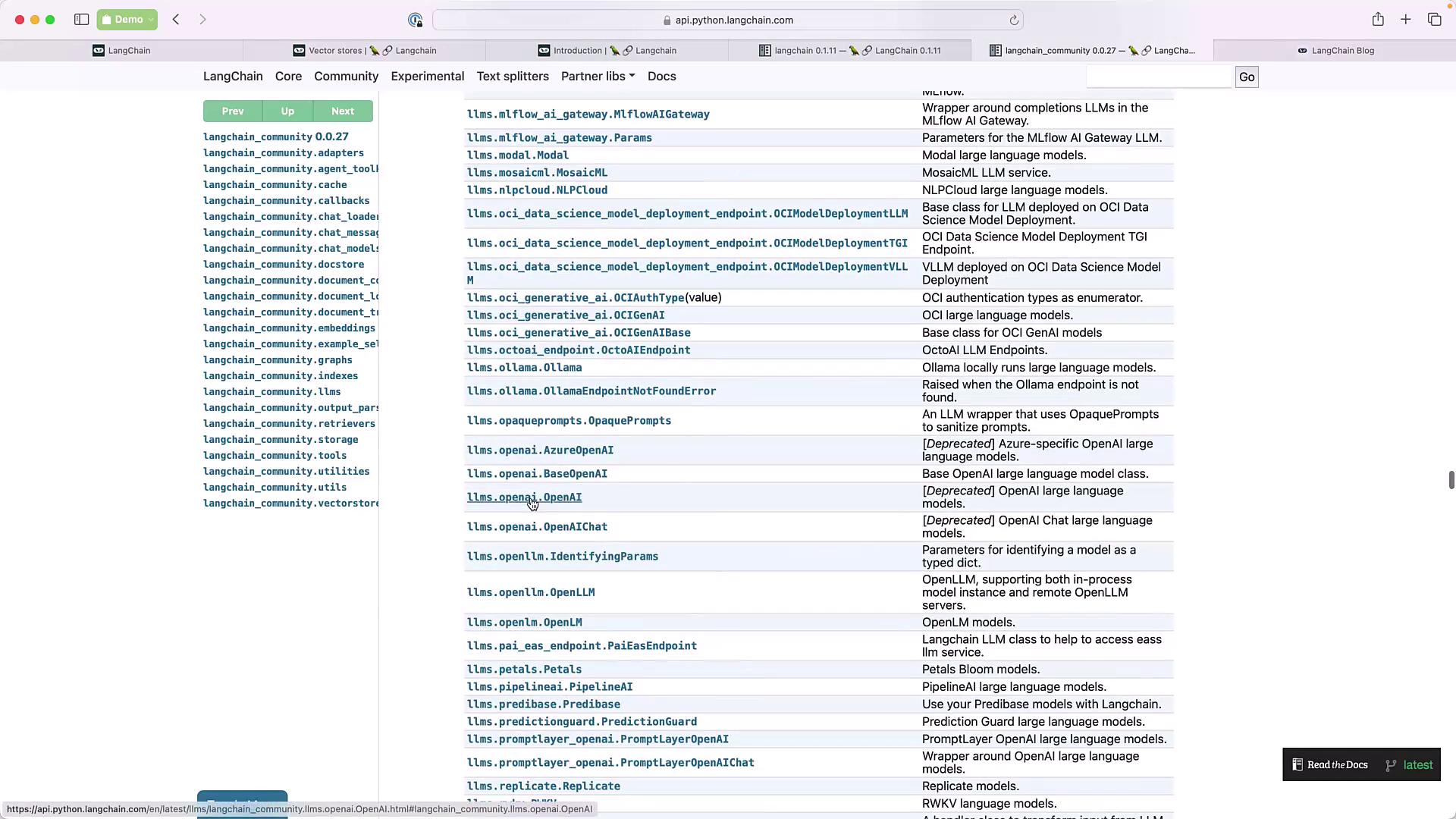

Community LLMs and Modules

Community contributions extend core functionality. Browse the community LLM implementations and modules in the API reference.

Code Examples

Initializing an OpenAI Chat Model

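# Requires the openai package and an OPENAI_API_KEY environment variable.
from langchain_community.llms import OpenAIChat

# Wrap the gpt-3.5-turbo chat model.
openai_chat = OpenAIChat(model_name="gpt-3.5-turbo")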

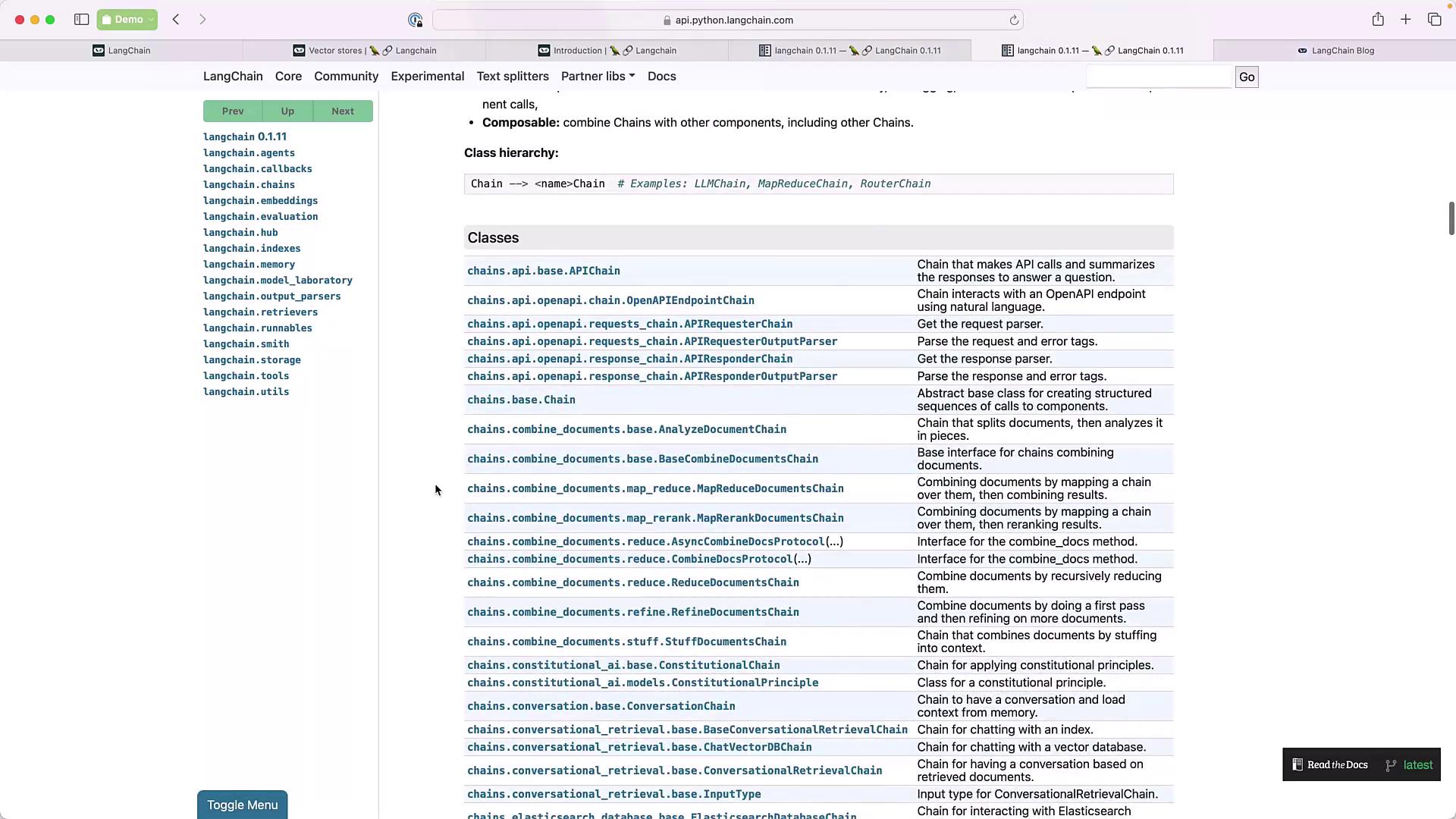

Creating an LLMChain

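from langchain.chains import LLMChain
from langchain_community.llms import OpenAI
from langchain_core.prompts import PromptTemplate

# The template has one placeholder, {adjective}, filled in at run time.
prompt = PromptTemplate(
    input_variables=["adjective"],
    template="Tell me a {adjective} joke",
)

# OpenAI() reads the API key from the OPENAI_API_KEY environment variable.
llm_chain = LLMChain(llm=OpenAI(), prompt=prompt)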
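Before running a chain, it can be useful to check that the inputs you plan to pass cover every placeholder in the template. The sketch below does this with the standard library only, so it needs no API key. The helpers `template_variables` and `missing_inputs` are hypothetical names and are not LangChain functions.

```python
from string import Formatter
from typing import Any, Dict, List


def template_variables(template: str) -> List[str]:
    """Return the sorted placeholder names found in a {name}-style template."""
    return sorted({name for _, name, _, _ in Formatter().parse(template) if name})


def missing_inputs(template: str, inputs: Dict[str, Any]) -> List[str]:
    """Return the placeholders in the template that have no matching input."""
    return [name for name in template_variables(template) if name not in inputs]


template = "Tell me a {adjective} joke"
inputs = {"adjective": "funny"}

print(template_variables(template))
print(missing_inputs(template, inputs))
print(template.format(**inputs))
```

The last line previews the text that would be sent to the model once the placeholder is filled in.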

Exploring Chains

Discover all chain implementations in the API reference.

Blog and Updates

Follow the LangChain blog for the latest tutorials, release notes, and community announcements.
