Mastering Generative AI with OpenAI

Understanding Prompt Engineering

Prompt Engineering in Generative AI

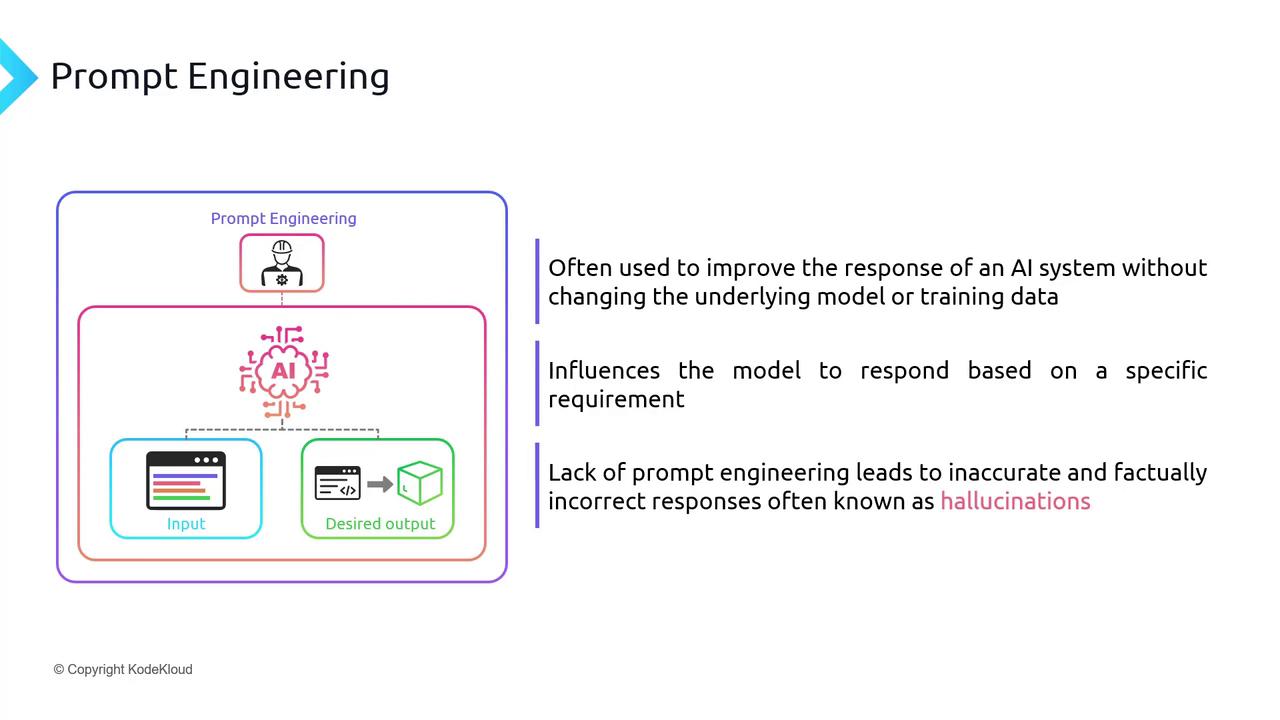

Prompt engineering is the art and science of crafting inputs (prompts) that guide large language models (LLMs) like GPT toward accurate, relevant, and controlled outputs—without changing the model weights or retraining on new data.

Note

Think of your prompt as code: precise instructions lead to reliable results. Combining technical understanding of model behavior with creative phrasing is key to reducing errors and maximizing output quality.

Key Benefits of Prompt Engineering

| Benefit | Description | Example Prompt |
|---|---|---|
| Control Outputs | Shape the tone, style, and structure of the response | “Translate this paragraph to French, preserving a formal register.” |
| Reduce Hallucinations | Supply clear context and constraints so the model does not invent details | “Summarize the following news article factually without adding details.” |
| Enable Complex Tasks | Chain multi-step instructions for workflow automation | “First outline the plot, then draft the dialogue for Scene 2 in screenplay format.” |
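The “Enable Complex Tasks” row describes prompt chaining: you split a job into steps and feed each step's output into the next prompt. The Python sketch below shows only that control flow. `run_chain` and the `{previous}` placeholder are hypothetical names chosen for illustration. `call_model` stands for any function you supply that sends a prompt to a model and returns its text, such as a thin wrapper around an OpenAI API call. A fake model stands in so the example runs offline.

```python
from typing import Callable, List

# Hypothetical type: any function that takes a prompt string and returns the model's reply.
# In practice this could wrap an OpenAI API call; here it is left abstract.
ModelFn = Callable[[str], str]


def run_chain(steps: List[str], call_model: ModelFn) -> List[str]:
    """Run prompt templates in order, feeding each reply into the next step.

    A template may contain the placeholder "{previous}", which is replaced
    with the previous step's output (an empty string for the first step).
    """
    outputs: List[str] = []
    previous = ""
    for template in steps:
        # str.replace instead of str.format, so other braces in a prompt are left alone
        prompt = template.replace("{previous}", previous)
        previous = call_model(prompt)
        outputs.append(previous)
    return outputs


if __name__ == "__main__":
    steps = [
        "Outline the plot of a short heist film in five bullet points.",
        "Using this outline:\n{previous}\n\nDraft the dialogue for Scene 2 in screenplay format.",
    ]

    # Stand-in model for offline runs: reports the length of the prompt it received.
    def fake_model(prompt: str) -> str:
        return f"[reply to a {len(prompt)}-character prompt]"

    for number, output in enumerate(run_chain(steps, fake_model), start=1):
        print(f"Step {number}: {output}")
```

Keeping each step small makes failures easier to locate. If the outline from step 1 is wrong, you can fix that prompt without touching step 2.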

Why Clear Prompts Matter

A prompt works much like a program written in natural language: the model can act only on what the prompt actually says. Vague or underspecified prompts often cause:

- Off-topic or irrelevant responses

- Fabricated facts and inaccuracies (hallucinations)

- Inconsistent formatting or style

Well-structured prompts help you:

- Achieve precise outputs (summaries, code, translations)

- Maintain factual accuracy across tasks

- Optimize results without fine-tuning the model
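A small example makes the difference between a vague prompt and a well-structured one concrete. The sketch below assumes the chat message format used by OpenAI's Chat Completions API, which is a list of `{"role": ..., "content": ...}` dictionaries. `PromptSpec` and `to_messages` are hypothetical helpers, not part of any library. The sketch keeps role, task, source text, rules, and output format separate, aiming at the three failure modes listed above: off-topic answers, invented facts, and inconsistent formatting.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a well-structured prompt."""
    role: str                                             # who the model should act as
    task: str                                             # one explicit instruction
    context: str = ""                                     # source text the answer must rely on
    constraints: List[str] = field(default_factory=list)  # rules to follow
    output_format: str = ""                               # e.g. "three bullet points"


def to_messages(spec: PromptSpec) -> List[Dict[str, str]]:
    """Render a PromptSpec as a Chat Completions-style list of messages."""
    system = f"You are {spec.role}."
    if spec.constraints:
        rules = "\n".join(f"- {rule}" for rule in spec.constraints)
        system += "\nFollow these rules:\n" + rules

    user_parts = [spec.task]
    if spec.context:
        # Triple-quote delimiters mark exactly which text is source material.
        user_parts.append('Text:\n"""\n' + spec.context + '\n"""')
    if spec.output_format:
        user_parts.append("Output format: " + spec.output_format)

    return [
        {"role": "system", "content": system},
        {"role": "user", "content": "\n\n".join(user_parts)},
    ]


if __name__ == "__main__":
    spec = PromptSpec(
        role="a careful news editor",
        task="Summarize the article below factually, without adding details.",
        context="(paste the article text here)",
        constraints=[
            "Use only facts stated in the text.",
            "If the text does not say something, write 'not stated' instead of guessing.",
        ],
        output_format="three bullet points, each under 25 words",
    )
    for message in to_messages(spec):
        print(f"[{message['role']}]\n{message['content']}\n")
```

With the official `openai` Python package (v1 or later), you could pass the resulting list as `client.chat.completions.create(model=..., messages=messages)`. The model name is left open here. Compare this with a bare prompt such as “Tell me about this article,” which leaves scope, sources, and format entirely up to the model.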

Next, we’ll dive into practical techniques—such as prompt templates, role prompting, and chain-of-thought—to maximize the reliability and relevance of your Generative AI workflows.
