| Prompt Type | Purpose | Example Use Case |

|---|---|---|

| Explicit | Clearly defines format & content | Story writing, summaries |

| Conversational | Simulates chat with follow-ups | Interactive Q&A, customer support |

| Instructional | Provides structured requirements | Blog posts, reports |

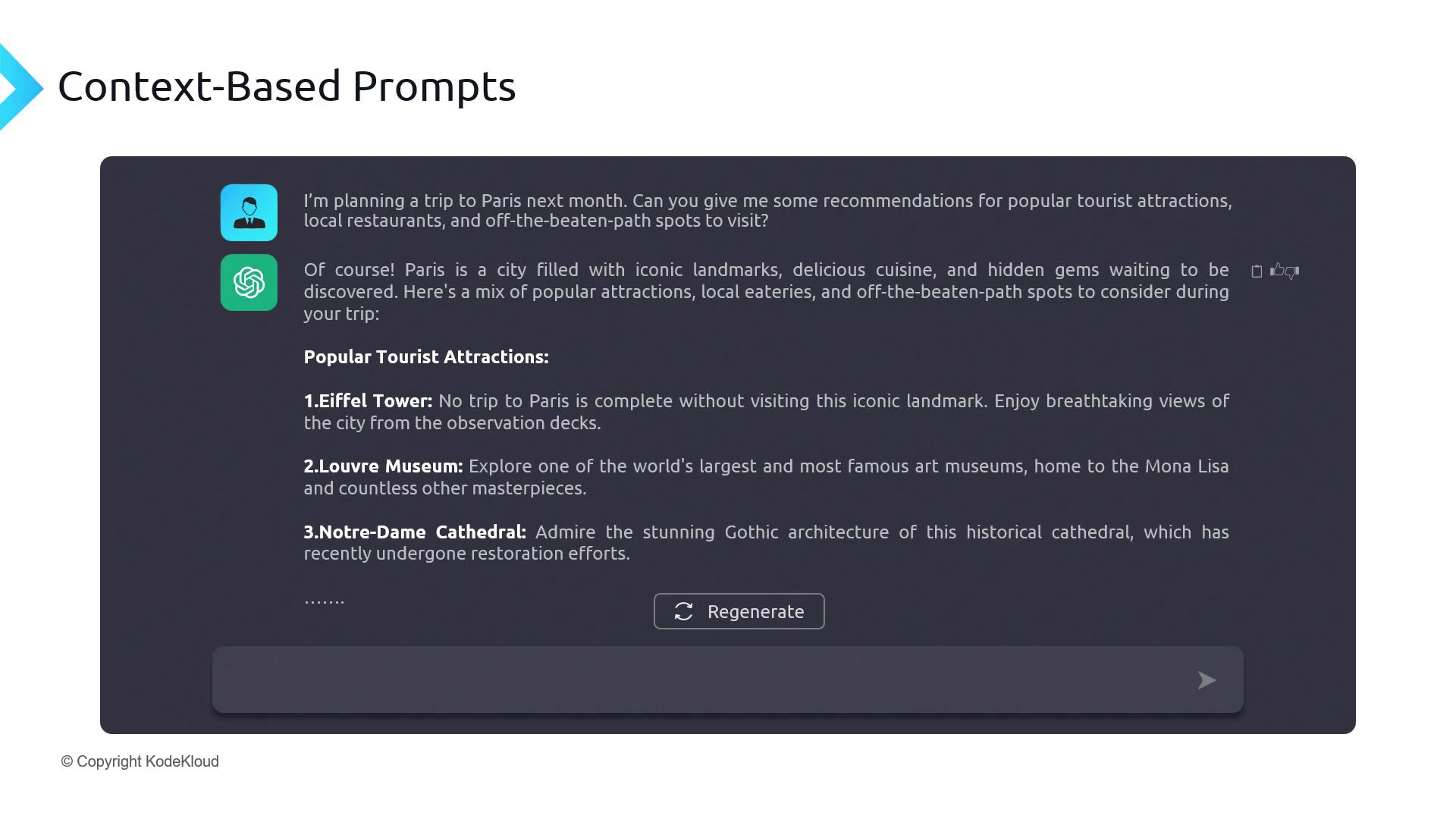

| Context-Based | Supplies background then asks a question | Travel plans, project planning |

| Open-Ended | Broad queries for creative or comprehensive answers | Opinion pieces, research overviews |

| Bias-Mitigating | Directs neutrality and factual balance | Sensitive topics, policy analysis |

| Code-Generation | Generates programming snippets | Utility functions, scripts |

1. Explicit Prompts

Explicit prompts specify the exact format, style, and content you need. The more detail you provide, the more closely the output tends to match your expectations.

Example: “Write a short story about a young girl who discovers a magical key that unlocks a hidden door to another world.”

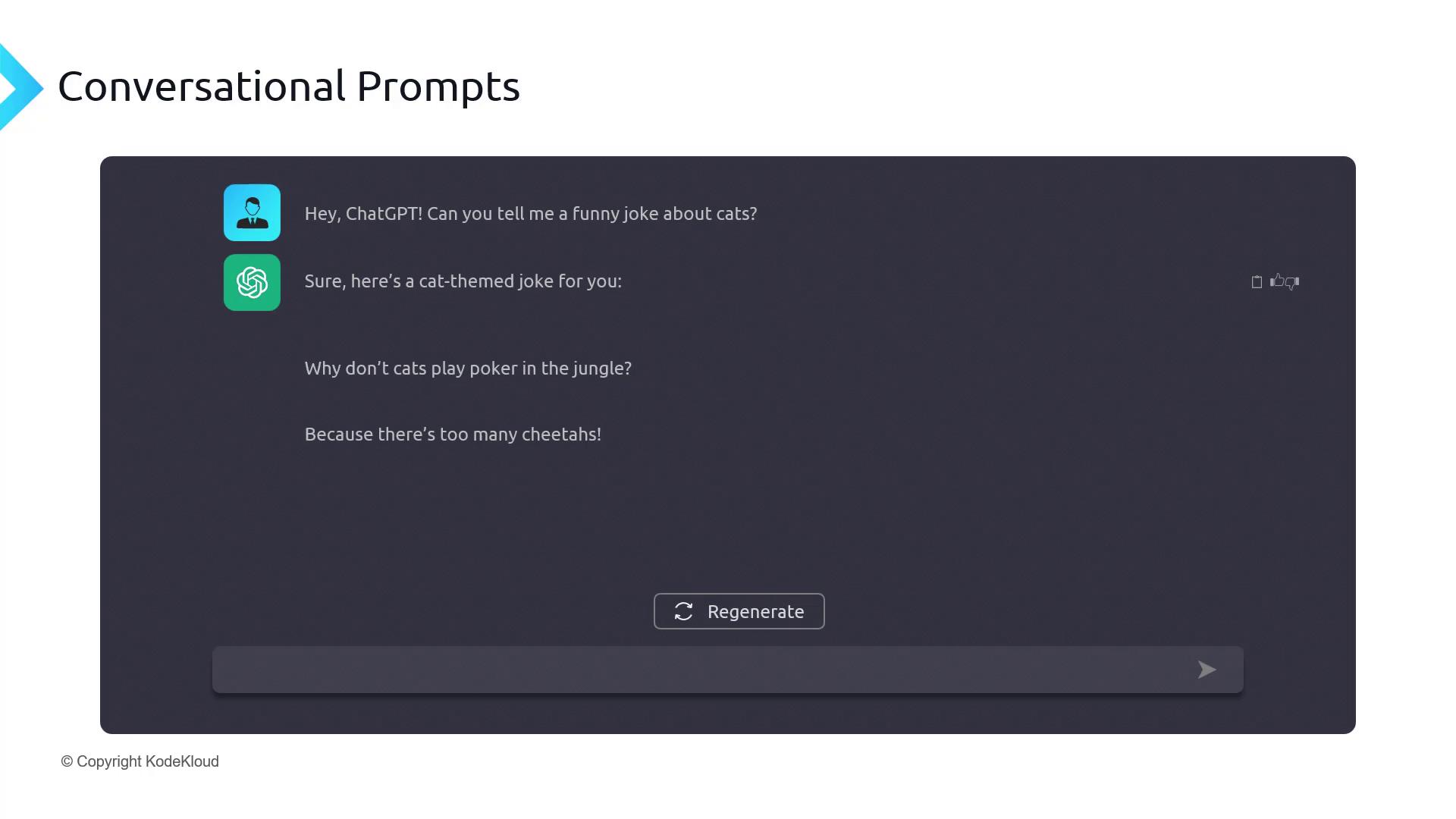

2. Conversational Prompts

These prompts mimic a chat dialogue, allowing follow-up questions, clarifications, or variations, just like talking to an assistant.

User: “Can you tell me a funny joke about cats?”

A: “Why did the cat sit on the computer? Because there were too many cheetahs!”
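As a rough illustration of how a chat dialogue can be carried from turn to turn, the sketch below keeps the exchange as a list of turns. The `role`/`content` dictionary layout, the helper names `add_turn` and `render`, and the follow-up question about dogs are assumptions made for this example, not a specific vendor's API.

```python
# Hypothetical helpers: the role/content layout is an assumed convention.
def add_turn(history, role, content):
    """Return a new history list with one more turn appended."""
    if role not in ("user", "assistant"):
        raise ValueError(f"unknown role: {role!r}")
    return history + [{"role": role, "content": content}]


def render(history):
    """Flatten the history into a plain-text transcript."""
    return "\n".join(
        f"{turn['role'].capitalize()}: {turn['content']}" for turn in history
    )


history = []
history = add_turn(history, "user", "Can you tell me a funny joke about cats?")
history = add_turn(
    history,
    "assistant",
    "Why did the cat sit on the computer? Because there were too many cheetahs!",
)
# Illustrative follow-up: it only makes sense when the earlier turns
# are passed along with it.
history = add_turn(history, "user", "Can you make it about dogs instead?")

print(render(history))
```

Keeping every earlier turn in the list is what lets a short follow-up such as “make it about dogs instead” be interpreted correctly.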

3. Instructional Prompts

Also known as prescriptive prompts, these guide the model with explicit structure: sections, tone, length, or formatting rules.

Example: “Write a detailed blog post discussing the benefits and drawbacks of renewable energy. Structure it with an introduction, a benefits section, a drawbacks section, and a conclusion.”

4. Context-Based Prompts

Start by supplying background information or a scenario, then pose a targeted question. This combination yields richer, more relevant responses.

Context:

“I’m planning a week-long trip to Paris next month.”

Prompt:

“Suggest must-visit tourist attractions and local restaurants based on my itinerary.”
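The context-then-question pattern can be sketched as a small template function. The name `build_context_prompt` and the exact layout of the combined string are assumptions for illustration; the "Context:" and "Prompt:" labels simply mirror the example above.

```python
def build_context_prompt(context, question):
    """Combine background information and a targeted question into one prompt.

    Hypothetical helper: the layout of the returned string is an assumption.
    """
    context = context.strip()
    question = question.strip()
    if not context or not question:
        raise ValueError("both context and question are required")
    return f"Context:\n{context}\n\nPrompt:\n{question}"


prompt = build_context_prompt(
    "I'm planning a week-long trip to Paris next month.",
    "Suggest must-visit tourist attractions and local restaurants "
    "based on my itinerary.",
)
print(prompt)
```

Keeping the context and the question as separate arguments makes it easy to reuse the same background with several different questions.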

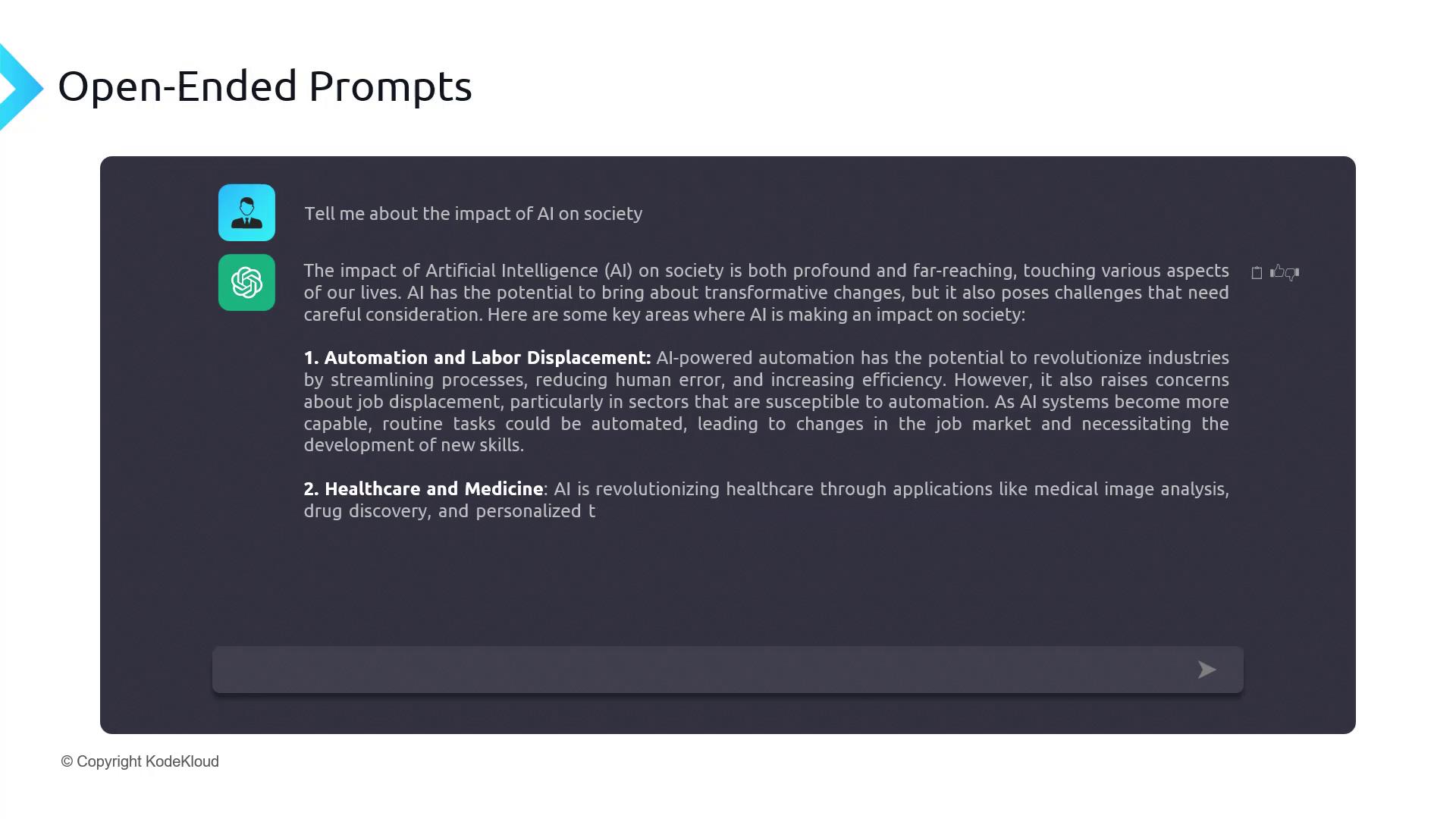

5. Open-Ended Prompts

Open-ended prompts are intentionally broad, inviting creativity or comprehensive exploration without tight constraints. Example: “What is the impact of AI on society?”

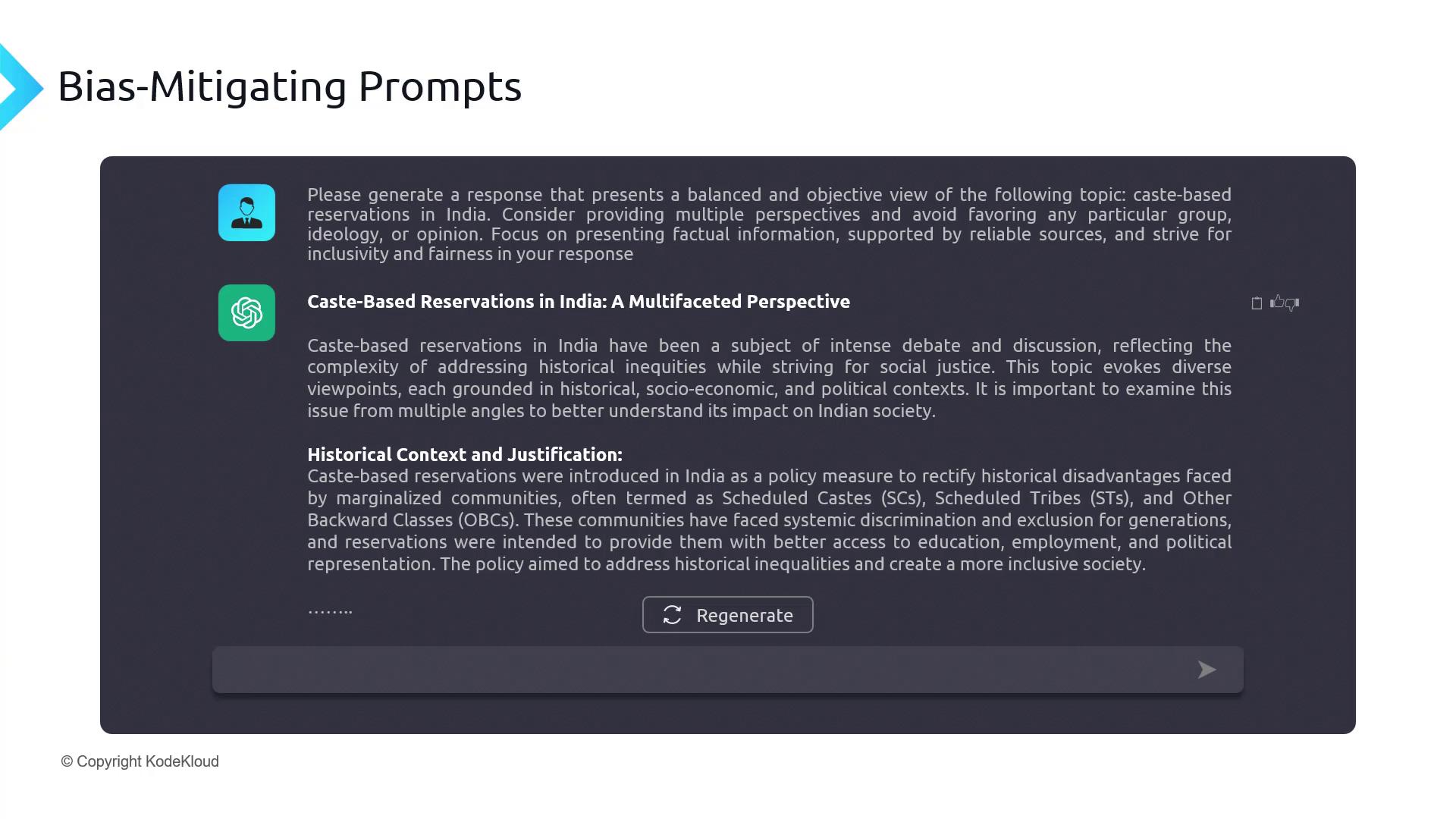

6. Bias-Mitigating Prompts

To counteract biases in training data, explicitly instruct the model to present balanced, fact-based information and avoid partisanship.

When covering sensitive or controversial topics, always ensure your prompt requests neutrality and evidence-based responses to minimize unintended bias.
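One possible way to apply this consistently is to attach a standing neutrality instruction to every question on a sensitive topic. In the sketch below, the wording of `NEUTRALITY_INSTRUCTIONS`, the helper name `with_neutrality`, and the sample question are all illustrative assumptions.

```python
# Assumed wording; adjust it to the topic and audience.
NEUTRALITY_INSTRUCTIONS = (
    "Present the main viewpoints in a balanced way, "
    "base claims on evidence, and avoid partisan language."
)


def with_neutrality(question):
    """Append the neutrality instruction to a question (hypothetical helper)."""
    question = question.strip()
    if not question:
        raise ValueError("question must not be empty")
    return f"{question}\n\n{NEUTRALITY_INSTRUCTIONS}"


# Illustrative question, not taken from the article.
print(with_neutrality("Summarize the arguments for and against a carbon tax."))
```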

7. Code-Generation Prompts

LLMs can produce code snippets, algorithms, or scripts from a plain-language description. Describe the functionality you need, including inputs, outputs, and the target language, then test and refine the output.

Always review and test generated code for edge cases, performance, and security considerations before using it in production.
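To make the review step concrete, here is a minimal sketch. The prompt, the first-draft function, and the refined version are all hypothetical, written for this example and not actual model output. It shows how a quick edge-case check can expose a gap that a casual read might miss.

```python
# Hypothetical prompt:
#   "Write a Python function that returns the average of a list of numbers."

# Hypothetical first draft, as it might come back from a model.
def average_draft(numbers):
    return sum(numbers) / len(numbers)  # divides by zero on an empty list


# Refined version after review: the empty-list case is handled explicitly.
def average(numbers):
    if not numbers:
        raise ValueError("average() requires at least one number")
    return sum(numbers) / len(numbers)


def check_average():
    """Small edge-case checks to run before trusting the function."""
    assert average([2, 4, 6]) == 4
    assert average([5]) == 5
    try:
        average([])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for an empty list")


check_average()
```

The draft and the refined version agree on ordinary input; they differ only on the empty list, which is exactly the kind of case that needs a deliberate test.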