Mastering Generative AI with OpenAI

Understanding Tokens and API Parameters

What are Tokens

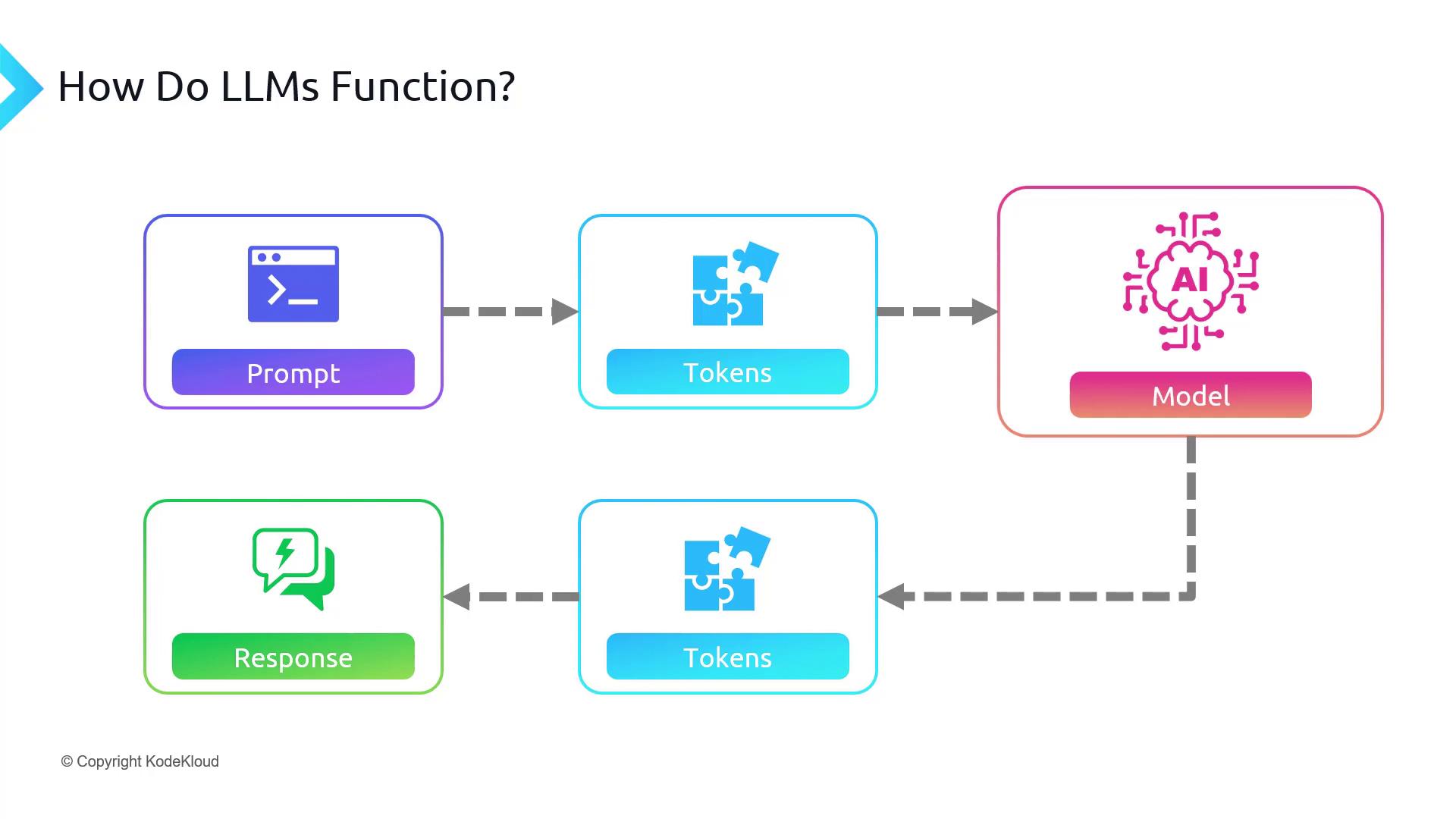

In this guide, we’ll look at how large language models (LLMs) such as GPT-3 and ChatGPT process text as tokens: chunks of text that are each mapped to an integer ID. Rather than reading words or sentences directly, these models operate on tokens. When you submit a prompt, it is first converted into tokens; when the model replies, it generates tokens that are decoded back into human-readable text.

Note

Tokens are often sub-word units rather than whole words: a common word may be a single token, while a rarer word is split into several pieces. Breaking text into these pieces is called tokenization.
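To make the idea concrete, here is a toy sub-word tokenizer. It is not OpenAI's tokenizer: GPT models use byte pair encoding (BPE) with a learned vocabulary. This sketch instead uses a tiny, hypothetical hand-written vocabulary and splits text by greedy longest match. It only illustrates that text becomes a list of integer IDs that can be decoded back into the same text.

```python
# Toy greedy longest-match sub-word tokenizer (illustration only, not BPE).
# The vocabulary is hypothetical and hand-picked for this example.
VOCAB = ["token", "iz", "ation", "un", "break", "able", " "]


def build_vocab(pieces: list[str]) -> dict[str, int]:
    """Map each sub-word piece to an integer ID (its position in the list)."""
    return {piece: i for i, piece in enumerate(pieces)}


def tokenize(text: str, vocab: dict[str, int]) -> list[int]:
    """Split text into the longest matching vocabulary pieces, left to right."""
    ids = []
    pos = 0
    max_len = max(len(piece) for piece in vocab)
    while pos < len(text):
        # Try the longest candidate first, then shrink until a piece matches.
        for size in range(min(max_len, len(text) - pos), 0, -1):
            piece = text[pos:pos + size]
            if piece in vocab:
                ids.append(vocab[piece])
                pos += size
                break
        else:
            raise ValueError(f"No vocabulary piece matches at position {pos}")
    return ids


def detokenize(ids: list[int], vocab: dict[str, int]) -> str:
    """Invert the mapping and join the pieces back into text."""
    id_to_piece = {i: piece for piece, i in vocab.items()}
    return "".join(id_to_piece[i] for i in ids)


vocab = build_vocab(VOCAB)
ids = tokenize("tokenization unbreakable", vocab)
print(ids)                     # [0, 1, 2, 6, 3, 4, 5]
print(detokenize(ids, vocab))  # tokenization unbreakable
```

Two words became seven tokens here, which is why token counts usually exceed word counts.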

Tokenization in OpenAI Models

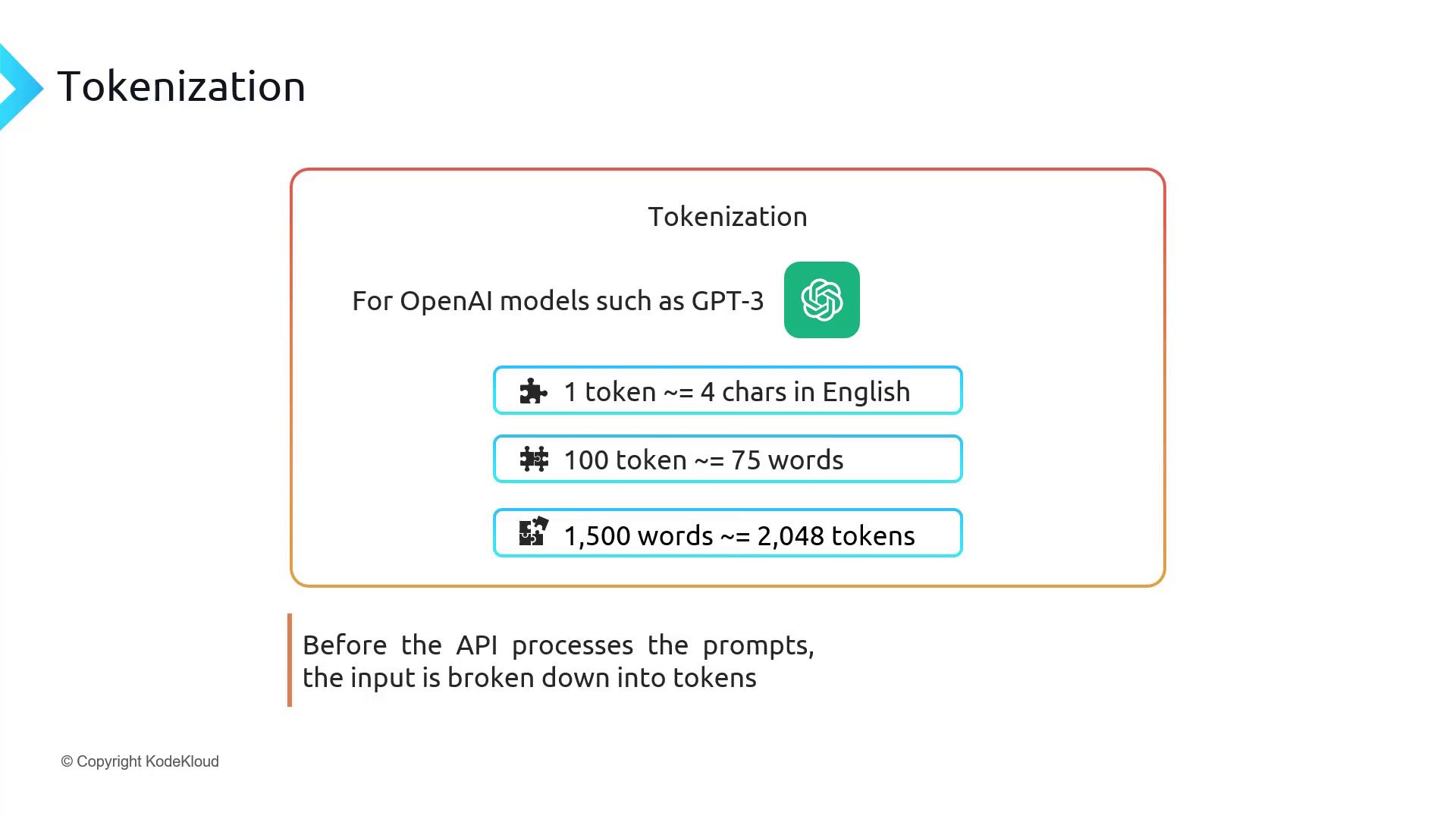

For OpenAI’s GPT-3 family, one token typically equals about four English characters. In practical terms:

| Metric | Approximate Equivalence |
|---|---|
| 1 token | ~4 characters |
| 100 tokens | ~75 words |
| 2,048 tokens | ~1,500 words |

Note

These are averages; actual token counts vary with language, punctuation, and formatting.
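The rules of thumb in the table can be turned into quick estimators for when no tokenizer is at hand. This is a minimal sketch assuming English text; the function names are hypothetical. For exact counts, use a real tokenizer such as tiktoken.

```python
def estimate_tokens_from_chars(text: str) -> int:
    """Rule of thumb: about 4 English characters per token."""
    return round(len(text) / 4)


def estimate_tokens_from_words(text: str) -> int:
    """Rule of thumb: 100 tokens is about 75 words, i.e. ~4/3 tokens per word."""
    return round(len(text.split()) * 100 / 75)


sample = "word " * 75  # 375 characters, 75 words
print(estimate_tokens_from_chars(sample))  # 94
print(estimate_tokens_from_words(sample))  # 100
```

The two heuristics disagree on the same input. That disagreement is a reminder that both are only averages.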

Tools for Exploring Tokenization

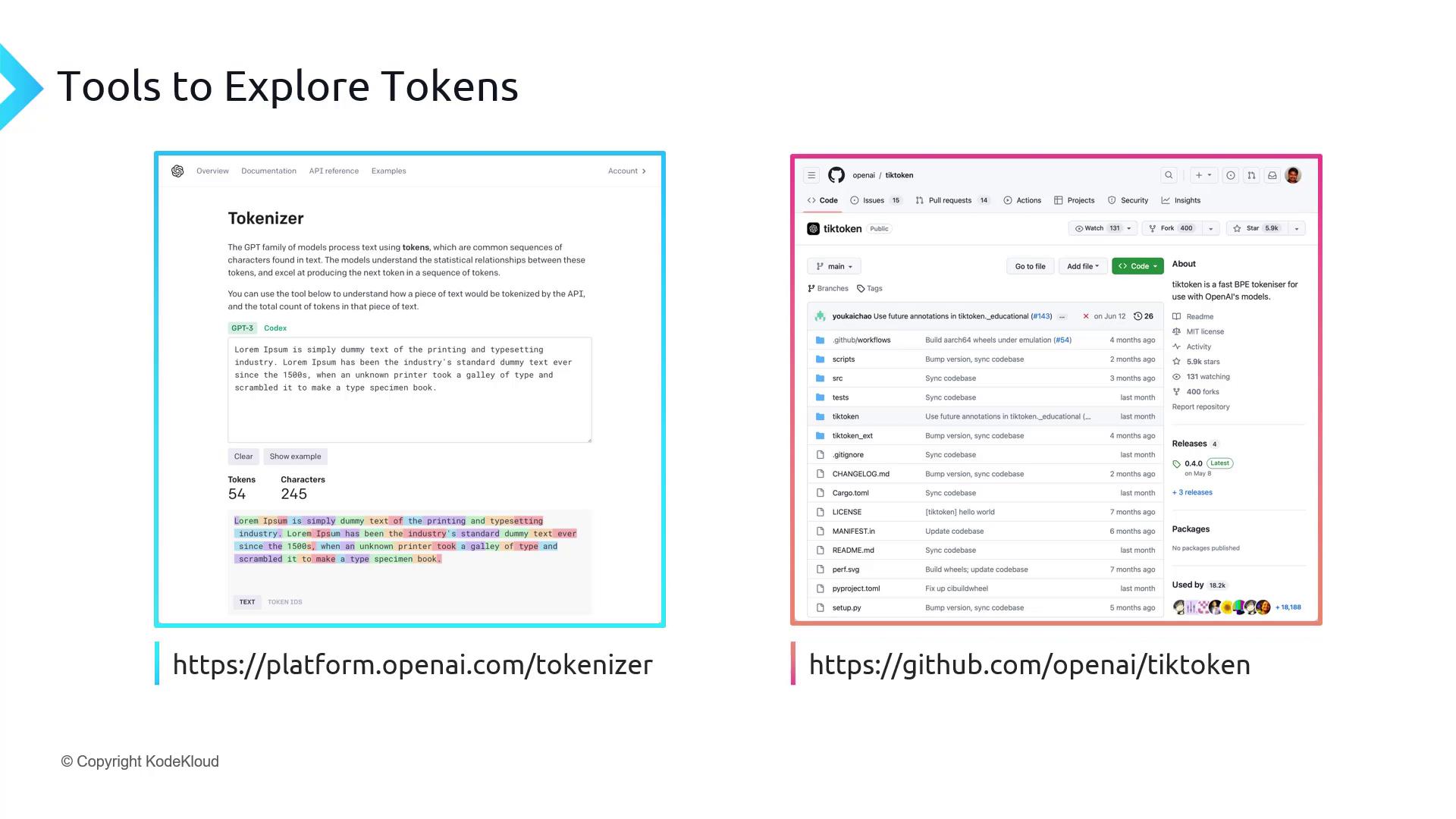

To inspect how your text is split into tokens, try these:

| Tool | Description |
|---|---|
| OpenAI Tokenizer | Interactive web app to visualize token boundaries: https://platform.openai.com/tokenizer |
| Tiktoken | Open-source Python library for encoding/decoding tokens: https://github.com/openai/tiktoken |

Context Length and Token Limits

Every LLM enforces a maximum context length: a cap on the combined number of input (prompt) and output (completion) tokens in a single request. A request whose prompt exceeds the limit is rejected with an error, and a response is cut off if it reaches the limit.

- Limit for earlier OpenAI models such as text-davinci-003: 4,097 tokens (newer models support larger context windows)

- Example: If your prompt consumes 4,000 tokens, you have only 97 tokens left for the model’s answer.
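The example above is a subtraction against a shared window. Here is a minimal sketch, assuming the 4,097-token limit as the default; the function name is hypothetical. The result is the largest value you could pass as the API's `max_tokens` parameter for that prompt.

```python
def remaining_output_tokens(prompt_tokens: int, context_length: int = 4097) -> int:
    """Tokens left for the model's answer after the prompt is counted.

    The window is shared: prompt_tokens + output_tokens <= context_length.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    if prompt_tokens > context_length:
        raise ValueError("prompt alone exceeds the context length")
    return context_length - prompt_tokens


print(remaining_output_tokens(4000))  # 97
```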

To work with texts or conversations longer than the limit, split the text into chunks that each fit within the window, or pass earlier model outputs back in as input to the next request.
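Chunking works best at the token level, so that each chunk is guaranteed to fit. This sketch assumes you already have token IDs (for example from tiktoken's `encode`). Each chunk could then be turned back into text with `decode` and sent as a separate request. The function name and the `overlap` option are illustrative choices and are not part of any OpenAI API.

```python
def chunk_token_ids(token_ids: list[int], max_tokens: int, overlap: int = 0) -> list[list[int]]:
    """Split a token sequence into windows of at most max_tokens tokens.

    Consecutive chunks share `overlap` tokens, so some context carries over.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(token_ids), step):
        chunks.append(token_ids[start:start + max_tokens])
        # Stop once this window reaches the end, to avoid a redundant tail chunk.
        if start + max_tokens >= len(token_ids):
            break
    return chunks


print(chunk_token_ids(list(range(10)), max_tokens=4, overlap=1))
# [[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]
```

In practice, you would size `max_tokens` to leave room for the instructions and the model's answer in each request.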

Token Cost Impact

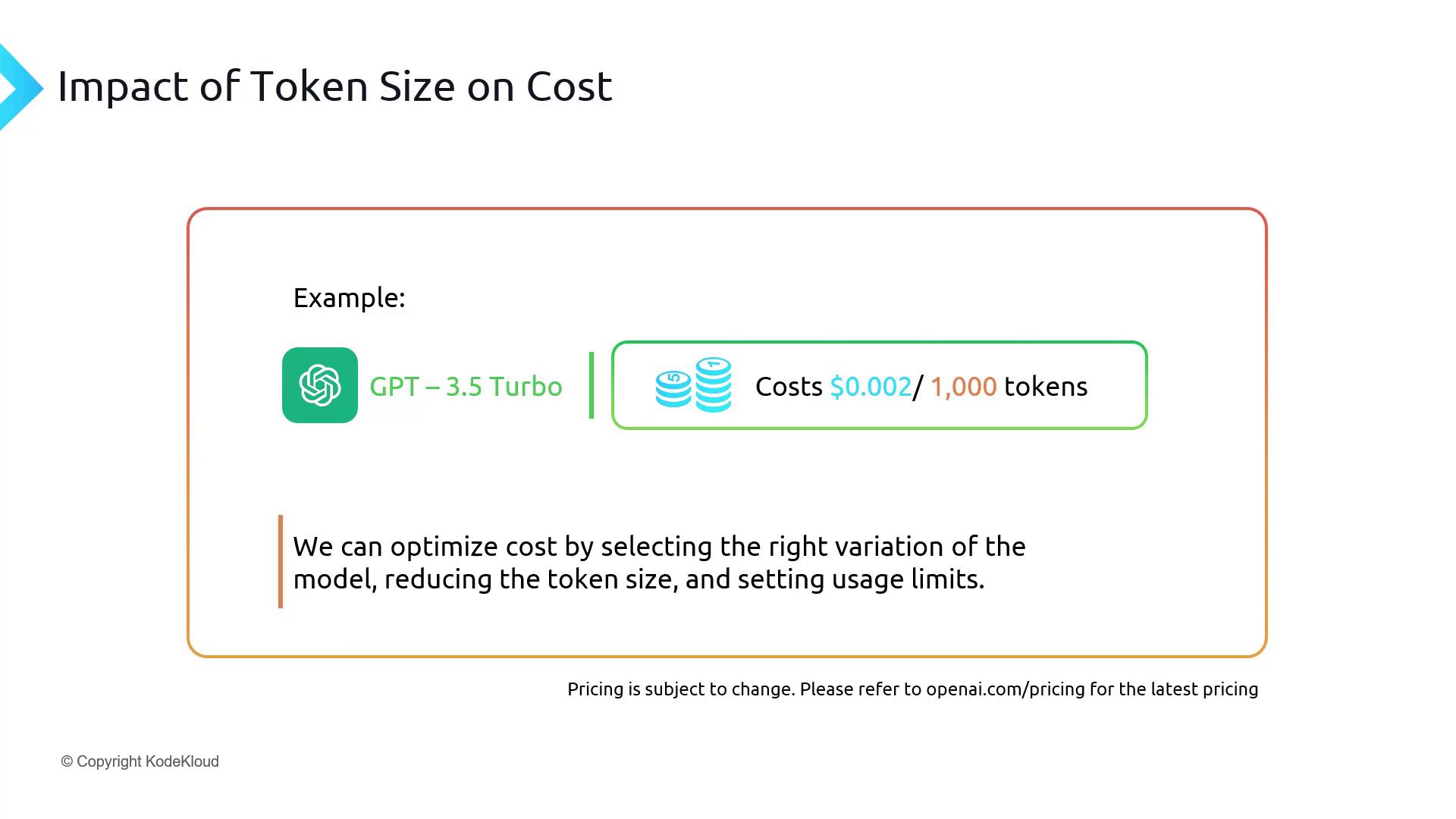

OpenAI bills by the number of tokens processed, counting both prompt and completion tokens. For example, GPT-3.5 Turbo was priced at $0.002 per 1,000 tokens at the time of writing; check OpenAI’s pricing page for current rates.
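Cost is proportional to tokens. Here is a minimal sketch using the $0.002 per 1,000 tokens example rate quoted above; the function name is hypothetical. `Decimal` avoids binary floating-point rounding in currency arithmetic.

```python
from decimal import Decimal


def estimate_cost(total_tokens: int, price_per_1k: Decimal) -> Decimal:
    """Dollar cost for a number of tokens at a per-1,000-token price."""
    return Decimal(total_tokens) / Decimal(1000) * price_per_1k


# Example rate from the text; substitute the current price for your model.
print(estimate_cost(1500, Decimal("0.002")))  # 0.0030
```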

Monitoring token usage helps you:

- Optimize prompt length to fit within context limits

- Manage monthly spending by choosing shorter prompts or more efficient models
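Monitoring can be as simple as summing the token counts the API reports. OpenAI completion responses include a `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens`. This sketch assumes you pass that object in as a plain dict. The `UsageTracker` class is hypothetical, and it assumes a single flat price per 1,000 tokens, even though some models price input and output tokens differently.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class UsageTracker:
    """Accumulates token usage across requests and estimates total spend."""

    price_per_1k: Decimal  # assumed flat price per 1,000 tokens
    prompt_tokens: int = 0
    completion_tokens: int = 0

    def record(self, usage: dict) -> None:
        """Add one response's usage counts to the running totals."""
        self.prompt_tokens += usage["prompt_tokens"]
        self.completion_tokens += usage["completion_tokens"]

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    @property
    def estimated_cost(self) -> Decimal:
        return Decimal(self.total_tokens) / 1000 * self.price_per_1k


tracker = UsageTracker(price_per_1k=Decimal("0.002"))
# Hypothetical usage values standing in for real API responses.
tracker.record({"prompt_tokens": 19, "completion_tokens": 12})
tracker.record({"prompt_tokens": 250, "completion_tokens": 719})
print(tracker.total_tokens, tracker.estimated_cost)  # 1000 0.002
```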

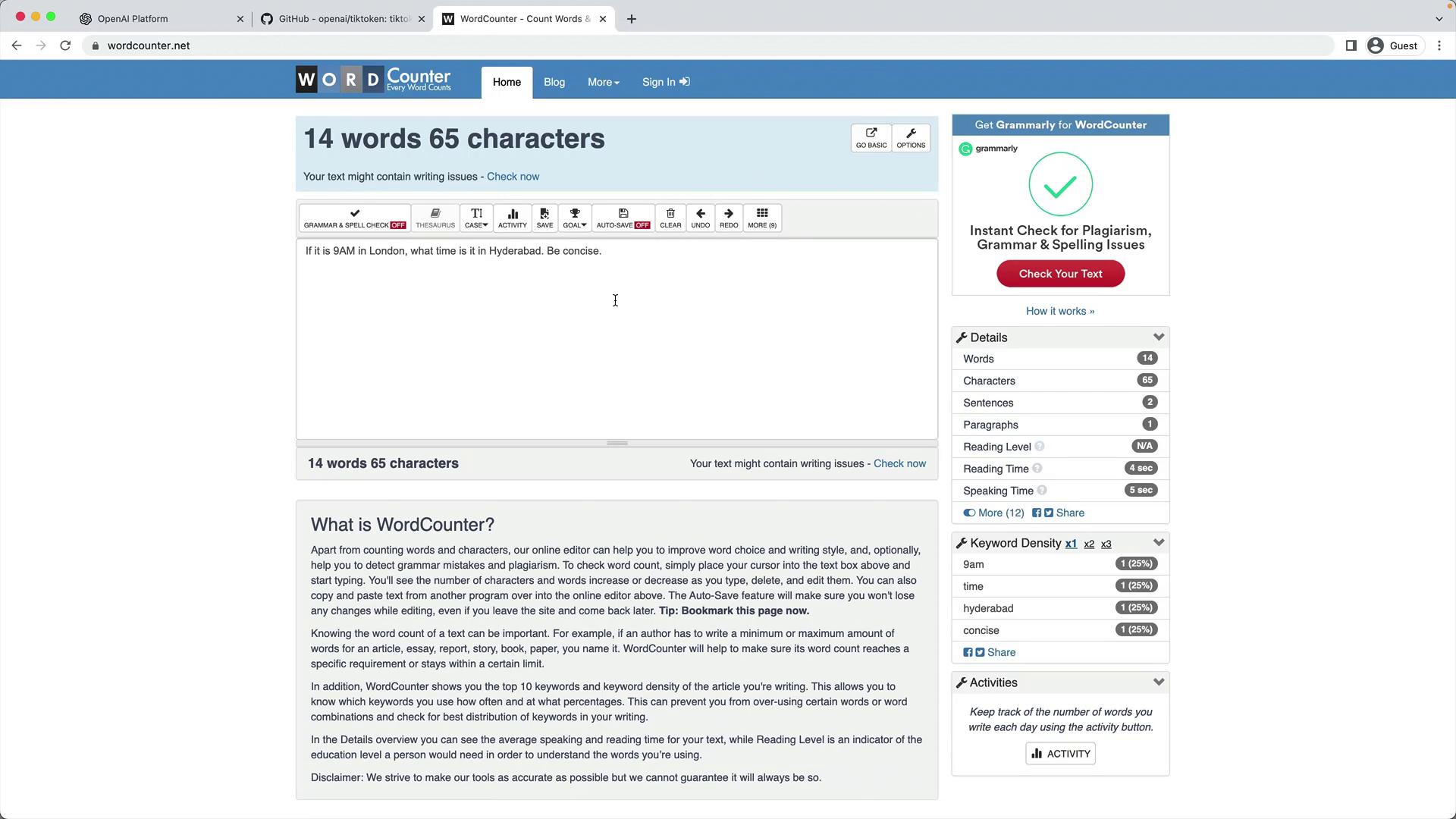

Interactive Tokenizer Demo

Try the OpenAI Tokenizer with this prompt:

If it is 9AM in London, what time is it in Hyderabad. Be concise.

- Characters: 65

- Words: 14

- Tokens: 19

The tokenizer can also display the raw token IDs, a list of integers, which is useful for debugging.
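You can check the character and word counts with plain Python. The token count has to come from a tokenizer, so this snippet hardcodes the demo's figure of 19. Dividing the two shows that this prompt averages about 3.4 characters per token, below the ~4-character rule of thumb. The ratio varies with content.

```python
prompt = "If it is 9AM in London, what time is it in Hyderabad. Be concise."

characters = len(prompt)     # 65
words = len(prompt.split())  # 14
reported_tokens = 19         # count shown by the web tokenizer in the demo

# Characters per token for this prompt, to compare with the ~4 average.
print(characters, words, round(characters / reported_tokens, 2))  # 65 14 3.42
```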

Programmatic Tokenization with Tiktoken

Install the library:

```shell
pip install tiktoken
```

Use it in Python:

```python
import tiktoken

# Choose the encoding that matches your model (cl100k_base for gpt-3.5-turbo)
encoding = tiktoken.encoding_for_model("gpt-3.5-turbo")

prompt = "If it is 9AM in London, what time is it in Hyderabad. Be concise."

# Encode text → list of token IDs
tokens = encoding.encode(prompt)
print(tokens)

# Count tokens; the total can differ from the web tokenizer if it uses another encoding
print(len(tokens))

# Decode back to text (round-trips to the original string)
print(encoding.decode(tokens))
# If it is 9AM in London, what time is it in Hyderabad. Be concise.
```
