Running Local LLMs With Ollama

Getting Started With Ollama

Demo: Essential Ollama CLI Commands

This guide walks through the most common Ollama CLI commands with practical examples. Whether you’re running a model, inspecting its metadata, or managing the models stored on your machine, these commands underpin any workflow built on Ollama.

Table of Contents

- List All Commands

- Run a Model Interactively

- Stop a Running Model

- View Local Models

- Remove a Model

- Download Models Without Running

- Show Model Details

- Monitor Active Models


1. List All Commands

To discover every available Ollama subcommand, simply run:

ollama

You’ll see output similar to:

Available Commands:
  serve       Start Ollama model server
  create      Create a model from a Modelfile
  show        Show information for a model
  run         Run a model
  stop        Stop a running model
  pull        Pull a model from a registry
  push        Push a model to a registry
  list        List local models
  ps          List running models
  cp          Copy a model
  rm          Remove a model
  help        Help about any command

Flags:
  -h, --help      Help for ollama
  -v, --version   Show version information

Tip

Append --help to any command to view detailed usage information, for example:

ollama run --help

2. Run a Model Interactively

Launch an interactive chat session with a model (e.g., llama3.2):

ollama run llama3.2

Once running, type your queries:

>>> What is the time?

I'm not sure what time you are referring to, as I'm a large language model without real-time access...

To exit the chat, enter:

>>> /bye
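For scripting, ollama run can also take the prompt as an argument, in which case it prints a single response and exits instead of opening the >>> prompt. The following is a minimal sketch of that pattern; the function name ask_once is a hypothetical helper, not part of the Ollama CLI.

```shell
# ask_once MODEL PROMPT
# Sends one prompt to a model and prints the reply.
# `ask_once` is an illustrative name, not an Ollama command.
ask_once() {
  local model="$1" prompt="$2"
  if [ -z "$model" ] || [ -z "$prompt" ]; then
    echo "usage: ask_once MODEL PROMPT" >&2
    return 2
  fi
  # Passing the prompt as an argument skips the interactive session.
  ollama run "$model" "$prompt"
}
```

For example, ask_once llama3.2 "Explain what a Modelfile is in one sentence." prints the answer and returns you to the shell.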

3. Stop a Running Model

A model stays loaded in memory after you exit the chat interface (by default for about five minutes, which is why ollama ps shows an UNTIL time). To unload it immediately:

ollama stop llama3.2

Warning

Leaving unused models running can consume memory and GPU resources. Always stop models you’re no longer using.

4. View Local Models

List all models downloaded on your machine:

ollama list

| NAME | ID | SIZE | MODIFIED |
|---|---|---|---|
| llava:latest | 8dd30f6b0cb1 | 4.7 GB | 48 minutes ago |
| llama3.2:latest | a80c4f17acd5 | 2.0 GB | About an hour ago |
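When a script needs to know whether a model is already downloaded, the listing can be parsed. This sketch assumes the first whitespace-separated column of ollama list is the model name, as in the output above; installed_models and has_model are hypothetical helper names.

```shell
# installed_models: print one model name per line, skipping the header row.
installed_models() {
  ollama list | awk 'NR > 1 && NF > 0 { print $1 }'
}

# has_model NAME: succeed if NAME appears in the local model list.
# A bare name such as "llama3.2" is treated as "llama3.2:latest",
# because the NAME column always includes the tag.
has_model() {
  local name="$1"
  case "$name" in
    *:*) ;;                      # a tag was given explicitly
    *)   name="$name:latest" ;;  # no tag: assume :latest
  esac
  installed_models | grep -Fxq -- "$name"
}
```

A typical use is has_model llama3.2 && echo "already downloaded".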

5. Remove a Model

Free up disk space by deleting a model:

ollama rm llava

Confirm removal:

ollama list

| NAME | ID | SIZE | MODIFIED |
|---|---|---|---|
| llama3.2:latest | a80c4f17acd5 | 2.0 GB | About an hour ago |

6. Download Models Without Running

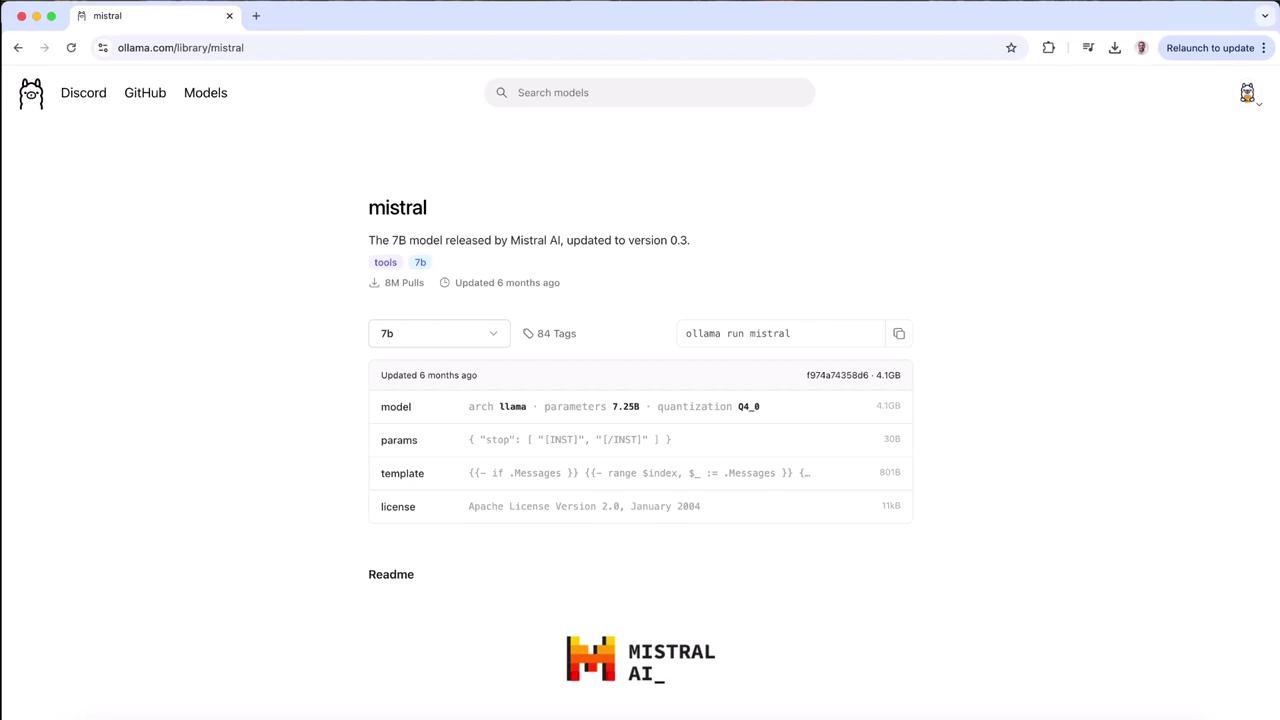

Use ollama pull to fetch a model without immediately launching it. For example, to pull Mistral 7B:

ollama pull mistral

You’ll see progress bars like:

pulling manifest

pulling ff82381e2bea... 28%

Note

Press Ctrl+C at any point to abort the download.
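To prepare a machine with several models in one go, ollama pull can be wrapped in a loop. This is a minimal sketch; pull_all is a hypothetical helper name, and it relies only on ollama pull returning a non-zero exit status when a download fails.

```shell
# pull_all MODEL...: pull each model in turn without running it.
# Returns non-zero if any pull failed and lists the failures on stderr.
pull_all() {
  local failed="" model
  for model in "$@"; do
    echo "Pulling $model ..."
    if ! ollama pull "$model"; then
      failed="$failed $model"
    fi
  done
  if [ -n "$failed" ]; then
    echo "Failed to pull:$failed" >&2
    return 1
  fi
}
```

For example, pull_all mistral llama3.2 downloads both models and reports at the end if either one could not be fetched.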

7. Show Model Details

Inspect model metadata, architecture, and licensing:

ollama show llama3.2

Model
  architecture        llama
  parameters          3.2B
  context length      131072
  embedding length    3072
  quantization        Q4_K_M

Parameters
  stop    "<|start_header_id|>"
  stop    "<|end_header_id|>"
  stop    "<|eot_id|>"

License

LLAMA 3.2 COMMUNITY LICENSE AGREEMENT

Release Date: September 25, 2024

Review the license before building on a model so that you know which usage restrictions apply.
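Individual values from this output, such as the context length, can be extracted for use in scripts. The sketch below assumes each metadata line has the form "label, whitespace, value" as in the sample above; the exact layout may differ between Ollama versions, and model_field is a hypothetical helper name.

```shell
# model_field MODEL LABEL: print the value that follows LABEL in the
# `ollama show` output, e.g. "context length" -> 131072.
# Assumes lines look like "<label><spaces><value>", as in the sample output.
model_field() {
  local model="$1" label="$2"
  ollama show "$model" | awk -v label="$label" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)               # drop leading indentation
      if (index(line, label) == 1) {          # line starts with the label
        value = substr(line, length(label) + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", value)    # trim surrounding whitespace
        print value
        exit                                  # first match only
      }
    }'
}
```

For example, model_field llama3.2 "context length" prints only the number, which a script could use to decide how much text to send in one prompt.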

8. Monitor Active Models

Similar to Docker’s ps, this command lists all currently running models:

ollama ps

After launching a model in one terminal:

ollama run llama3.2

Open another terminal and run:

ollama ps

| NAME | ID | SIZE | PROCESSOR | UNTIL |
|---|---|---|---|---|
| llama3.2:latest | a80c4f17acd5 | 4.0 GB | 100% GPU | 4 minutes from now |

To stop the model:

ollama stop llama3.2

Verify no active models remain:

ollama ps
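The stop-and-verify steps above can be combined into one helper that stops whatever is currently loaded. This is a minimal sketch, assuming the first column of ollama ps is the model name as in the table above; stop_all_models is a hypothetical helper name.

```shell
# stop_all_models: stop every model that `ollama ps` reports as running.
stop_all_models() {
  # The first column of each non-header row is the model name.
  ollama ps | awk 'NR > 1 && NF > 0 { print $1 }' | while read -r name; do
    echo "Stopping $name"
    # Redirect stdin so the command cannot consume the remaining names.
    ollama stop "$name" </dev/null
  done
}
```

Running stop_all_models followed by ollama ps should then show only the header row.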
