Running Local LLMs With Ollama

Getting Started With Ollama

Ollama Introduction

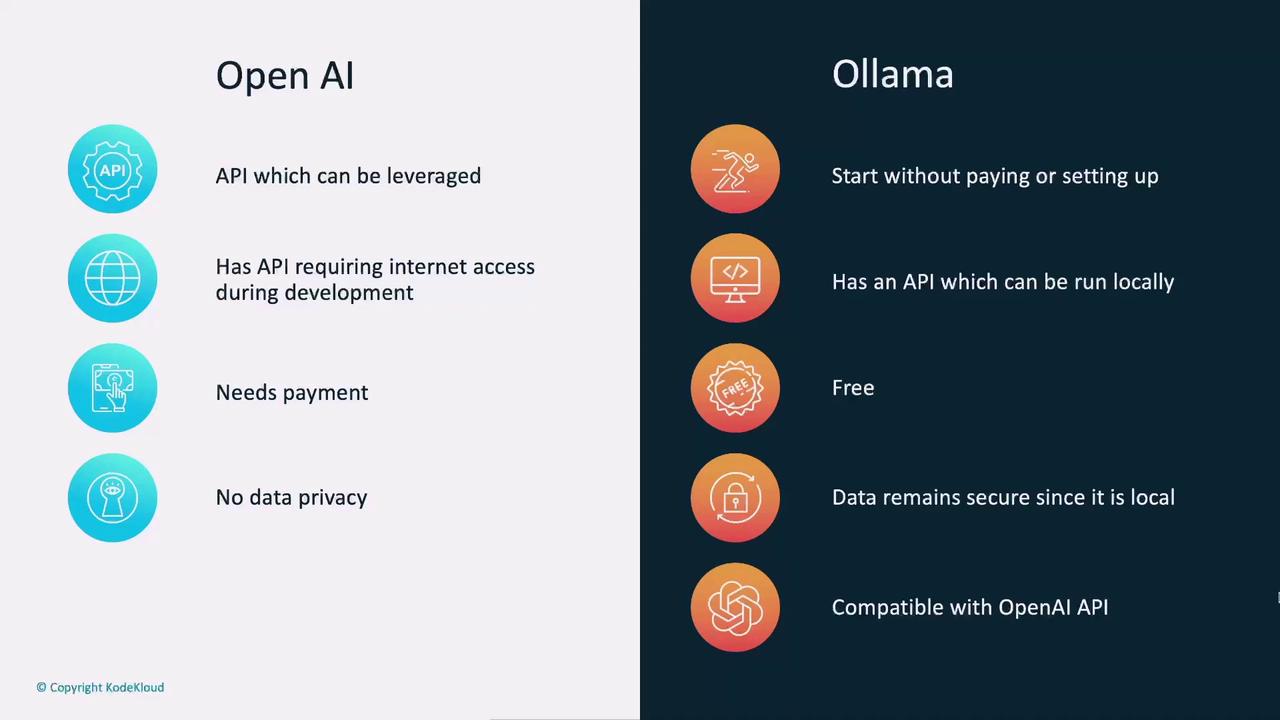

Welcome to this guide on Ollama, an open-source tool for running and building with large language models (LLMs) locally. We’ll start by examining the challenges in AI development today, then see how Ollama addresses them without vendor lock-in or high cloud costs.

Current AI Development Challenges

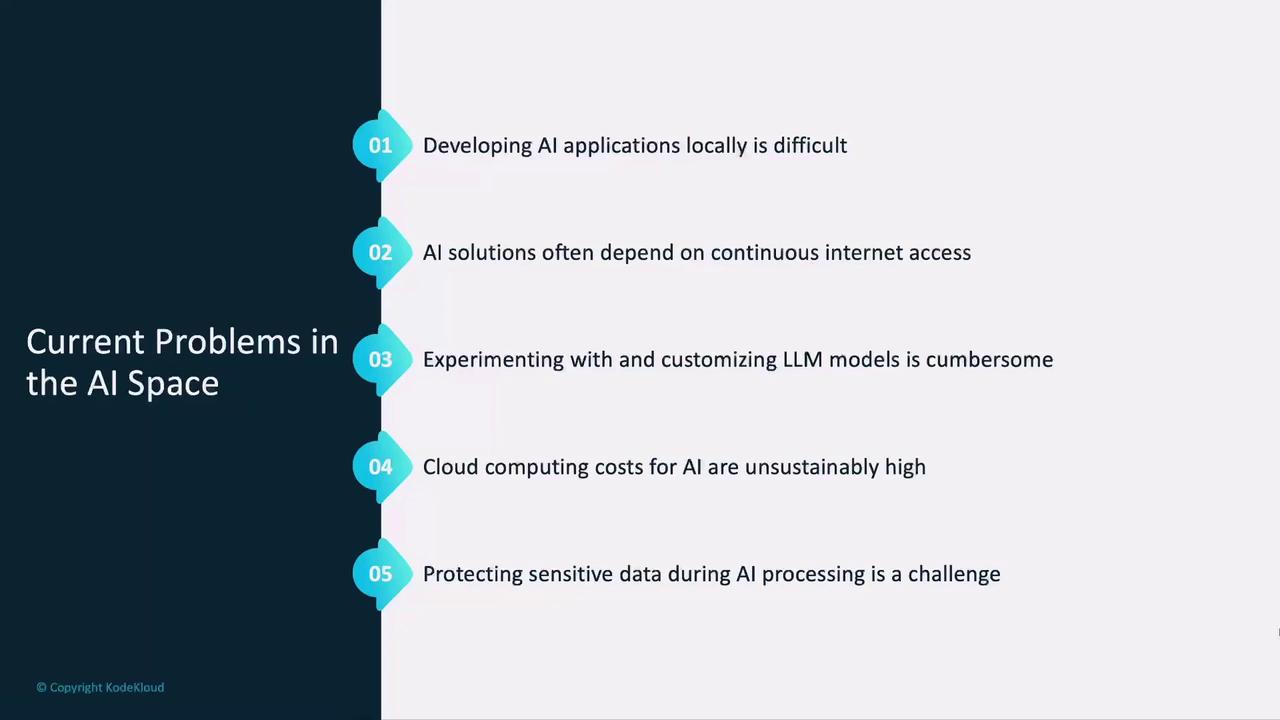

As AI adoption grows, developers face multiple hurdles when building and testing LLM-powered applications:

Complex local setup

With a traditional app, you can spin up a local database for development, but most LLMs are accessed on remote servers, which complicates offline development.

Internet dependency & vendor lock-in

Relying on an external LLM service means you need constant connectivity, must share billing information with the provider, and have limited flexibility to adopt new models as they appear.

DIY cloud infrastructure is costly

Hosting your own GPU-backed servers requires significant time, expertise, and budget.

High compute costs & compliance risks

Cloud GPUs rack up bills quickly, and sending sensitive data externally can conflict with GDPR or HIPAA requirements.

Now that these pain points are clear, let’s explore how Ollama provides a seamless local LLM workflow.

What Is Ollama?

Ollama is an open-source tool, with both a CLI and a local REST API, that lets you run, experiment with, and customize LLMs on your own machine. It runs on macOS, Windows, and Linux, and is also available as a Docker image:

- Access models from various vendors—no single-source lock-in

- Interact via an OpenAI-compatible API for easy integration

- Leverage a growing community of plugins and integrations
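To make the OpenAI-compatible API concrete, here is a minimal sketch using only Python's standard library. It assumes Ollama is serving on its default address, `http://localhost:11434`, with the OpenAI-style routes under `/v1`, and that a model named `llama3.2` (an example; substitute any model you have pulled) is available locally.

```python
import json
import urllib.request

# Assumption: Ollama's default address, with OpenAI-style routes under /v1.
OLLAMA_V1_URL = "http://localhost:11434/v1"


def build_chat_request(model: str, prompt: str,
                       base_url: str = OLLAMA_V1_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt)) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]


# Example (requires a running server and a pulled model):
# print(chat("llama3.2", "Explain vendor lock-in in one sentence."))
```

Because the request shape matches OpenAI's, existing OpenAI client code can usually be pointed at the local server by changing only the base URL.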

In short, Ollama replaces costly cloud services or DIY infrastructure with a local, secure, and flexible environment for AI development.

Use Cases

1. Developing AI Applications

Build and test AI features entirely offline, free from API charges and data egress:

- No upfront payment or account setup

- Full data privacy—everything runs on your device

- Smooth production transition via OpenAI-compatible endpoints
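One way to get that smooth transition is to keep the endpoint in configuration rather than in code. The sketch below uses hypothetical environment variable names (`LLM_BASE_URL`, `LLM_API_KEY`, `LLM_MODEL`) and defaults to a local Ollama server; pointing the same variables at a hosted OpenAI-compatible provider would switch the app without code changes.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class LLMConfig:
    base_url: str
    api_key: str
    model: str


def load_llm_config(env: Optional[Mapping[str, str]] = None) -> LLMConfig:
    """Read endpoint settings, defaulting to a local Ollama server.

    LLM_BASE_URL, LLM_API_KEY and LLM_MODEL are hypothetical names chosen
    for this sketch.
    """
    env = os.environ if env is None else env
    return LLMConfig(
        base_url=env.get("LLM_BASE_URL", "http://localhost:11434/v1"),
        # Ollama ignores the key, but OpenAI-style clients expect one.
        api_key=env.get("LLM_API_KEY", "ollama"),
        # "llama3.2" is an example local model name.
        model=env.get("LLM_MODEL", "llama3.2"),
    )
```

The placeholder key exists only because many OpenAI client libraries refuse an empty one; Ollama itself does not check it.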

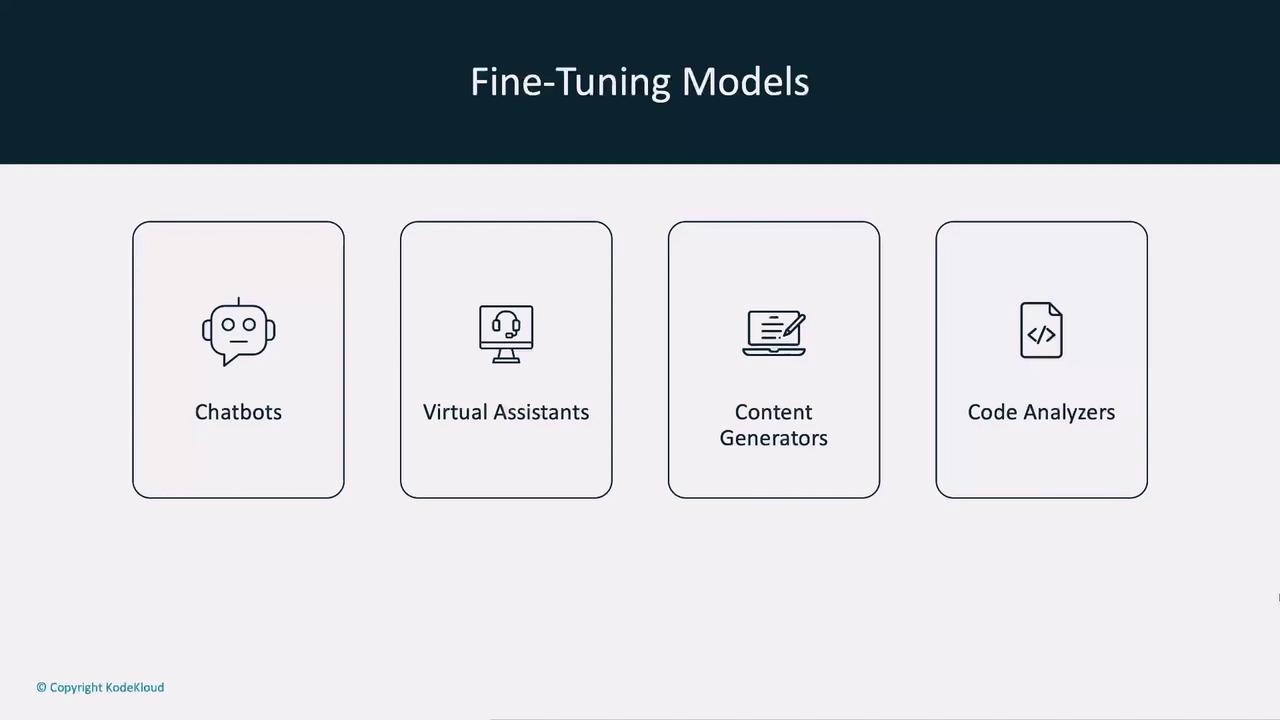

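Ollama also lets you customize models, for example by adding a system prompt and parameters to a base model in a Modelfile or by importing adapters fine-tuned elsewhere, for use cases such as: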

| Use Case | Description |
|---|---|
| Chatbots | Domain-specific conversational AI |
| Virtual Assistants | Task automation and scheduling |
| Content Generators | Blog posts, marketing copy, and more |
| Code Analyzers | Static analysis, code completion |
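Much of this customization needs no retraining. A Modelfile can layer a system prompt and sampling parameters on top of a base model. The helper below is a hypothetical sketch that produces such a file for a domain-specific chatbot; the base model `llama3.2` and the prompt text are placeholders.

```python
def build_modelfile(base_model: str, system_prompt: str,
                    temperature: float = 0.7) -> str:
    """Return Modelfile text that customizes base_model via prompt and params.

    This is prompt/parameter customization only; no weights are changed.
    """
    if '"""' in system_prompt:
        # The SYSTEM value is wrapped in triple quotes below.
        raise ValueError("system_prompt must not contain triple quotes")
    return "\n".join([
        f"FROM {base_model}",
        f"PARAMETER temperature {temperature}",
        f'SYSTEM """{system_prompt}"""',
    ]) + "\n"


# Hypothetical example: a help-desk chatbot on an example base model.
print(build_modelfile(
    "llama3.2",
    "You are a support assistant for an internal IT help desk. "
    "Answer briefly and ask for a ticket number when relevant.",
    temperature=0.3,
))
```

Save the output as `Modelfile`, then run `ollama create support-bot -f Modelfile` followed by `ollama run support-bot` (the name `support-bot` is illustrative). This changes behavior through prompting and parameters; it does not update the model's weights.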

Note

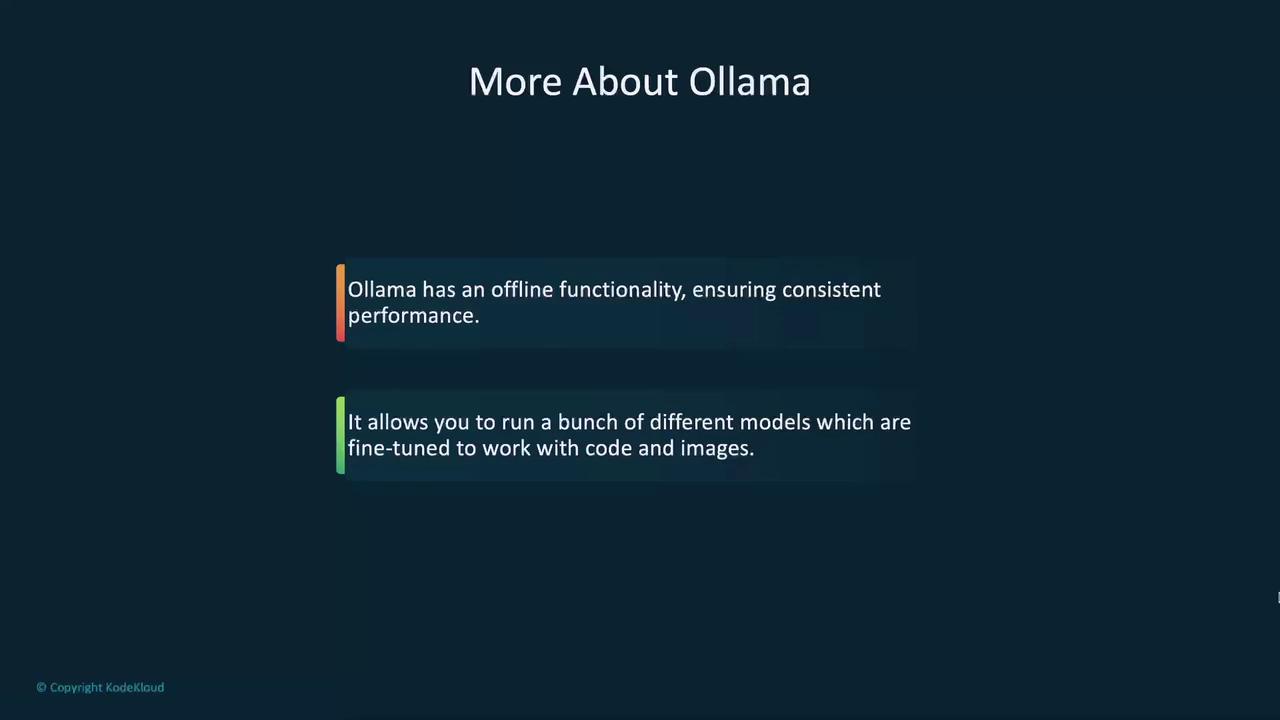

Because Ollama runs models locally, your app’s performance no longer depends on a flaky internet connection once the models are downloaded. You can also switch models on the fly, from code-focused to image-capable, to find the best fit.
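Switching models amounts to changing the model name in a request. As a sketch, the code below reads the locally installed models from Ollama's `/api/tags` endpoint and picks the first one that matches an ordered preference list; the helper names and the example model names (`qwen2.5-coder`, `codellama`, `llava`) are illustrative assumptions.

```python
import json
import urllib.request
from typing import Iterable, List, Optional


def list_local_models(base_url: str = "http://localhost:11434") -> List[str]:
    """Return installed model names, e.g. ["llama3.2:latest"]."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]


def pick_model(installed: Iterable[str],
               preferences: Iterable[str]) -> Optional[str]:
    """Return the first installed model matching the preference order."""
    installed = list(installed)
    for wanted in preferences:
        for name in installed:
            # Installed names carry a tag ("codellama:7b"), so also match
            # on the part before the colon.
            if name == wanted or name.split(":", 1)[0] == wanted:
                return name
    return None


# Example: prefer a code model, fall back to a general one.
# model = pick_model(list_local_models(), ["qwen2.5-coder", "codellama", "llama3.2"])
```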

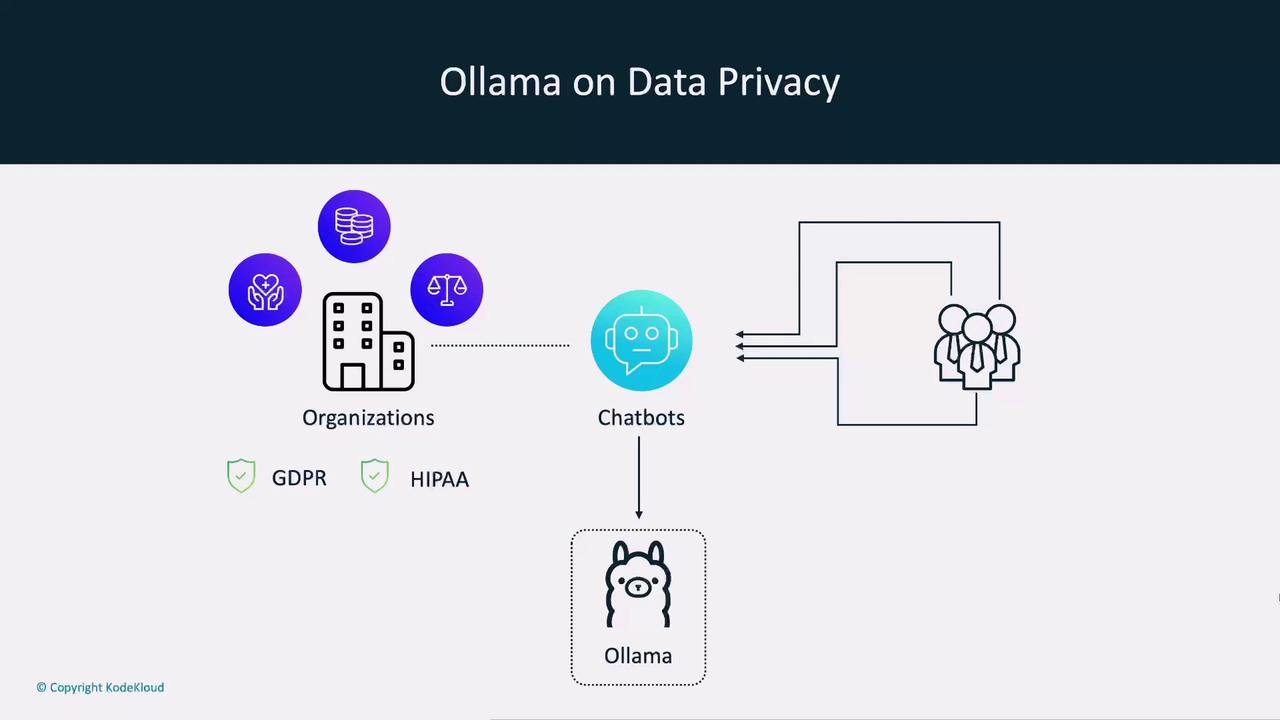

2. Privacy-Centric Platforms

Organizations like Growmore handle highly sensitive data and require in-house AI solutions. Ollama enables:

- Local or on-prem deployment

- Support for GDPR & HIPAA compliance by keeping data within your own infrastructure

- Secure employee-facing chatbots without external API calls
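A complementary safeguard is to check, before sending any sensitive text, that the endpoint really is internal. This is a hypothetical helper, not part of Ollama: it accepts an allow-list of hostnames (the `llm.internal.example` entry is a placeholder) plus loopback and private IP addresses, and treats everything else as external.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical allow-list; replace with your own internal hostnames.
ALLOWED_HOSTS = frozenset({"localhost", "llm.internal.example"})


def is_internal_endpoint(url: str, allowed_hosts=ALLOWED_HOSTS) -> bool:
    """True if the URL's host is allow-listed, loopback, or a private IP."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host in allowed_hosts:
        return True
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # Any other hostname is treated as external.
        return False
    return ip.is_loopback or ip.is_private


def require_internal(url: str) -> str:
    """Raise before any data is sent to a non-internal endpoint."""
    if not is_internal_endpoint(url):
        raise ValueError(f"Refusing to send data to external endpoint: {url}")
    return url
```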

3. Exploring AI Advancements

Stay ahead of the curve by testing new models as they emerge:

- Benchmark performance across architectures

- Fine-tune for niche tasks and industries

- Compare behavior side by side to pick the ideal model
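A rough side-by-side comparison can be scripted against Ollama's native `/api/generate` endpoint. With `"stream": false`, the reply is a single JSON object whose `eval_count` (generated tokens) and `eval_duration` (nanoseconds) fields give a generation speed. The sketch assumes the default local address and that the listed models are already pulled; the model names are examples, and the numbers are indicative only, since they vary with hardware, quantization, and prompt.

```python
import json
import urllib.request

# Assumption: Ollama's native API on the default local address.
OLLAMA_URL = "http://localhost:11434"


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> dict:
    """Run one non-streaming completion and return the parsed JSON reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def tokens_per_second(result: dict) -> float:
    """Generation speed from eval_count (tokens) and eval_duration (ns)."""
    duration_ns = result.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return result.get("eval_count", 0) * 1e9 / duration_ns


def compare(models: list, prompt: str) -> list:
    """Send the same prompt to each model; return (model, tok/s, text) rows."""
    rows = []
    for model in models:
        result = generate(model, prompt)
        rows.append((model, tokens_per_second(result), result["response"]))
    return rows


# Example (requires a running server and pulled models):
# for name, speed, text in compare(["llama3.2", "mistral"], "Summarize GDPR in one line."):
#     print(f"{name}: {speed:.1f} tok/s\n{text}\n")
```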

Benefits of Ollama

| Benefit | Why It Matters |
|---|---|
| Secure | Keeps all data and inference on your local machine |
| Cost-effective | Free, open source, and no hidden cloud charges |
| Efficient | Quick setup, rapid model swaps, and no vendor lock-in |

Get Started

To begin running LLMs locally with Ollama, download the installer for your platform at ollama.com and follow the setup guide. In the next section, we’ll walk through installing Ollama and launching your first local model. Happy coding!
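Once Ollama is installed, a quick way to confirm the local server is up is to request its root URL, which responds with the text `Ollama is running`. This minimal sketch assumes the default address `http://localhost:11434`; the function name is our own.

```python
import http.client
import urllib.request


def ollama_is_running(base_url: str = "http://localhost:11434",
                      timeout: float = 2.0) -> bool:
    """Return True if the server at base_url answers like Ollama."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200 and b"Ollama is running" in resp.read()
    except (OSError, http.client.HTTPException):
        # Connection refused, timeouts and HTTP errors all mean "not ready".
        return False
```

If it returns `False`, start the desktop app or run `ollama serve`, then try again.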
