Installing Ollama

This guide walks you through downloading, installing, and verifying Ollama on your local machine so you can run large language models (LLMs) like Llama 3.2 straight from your terminal.

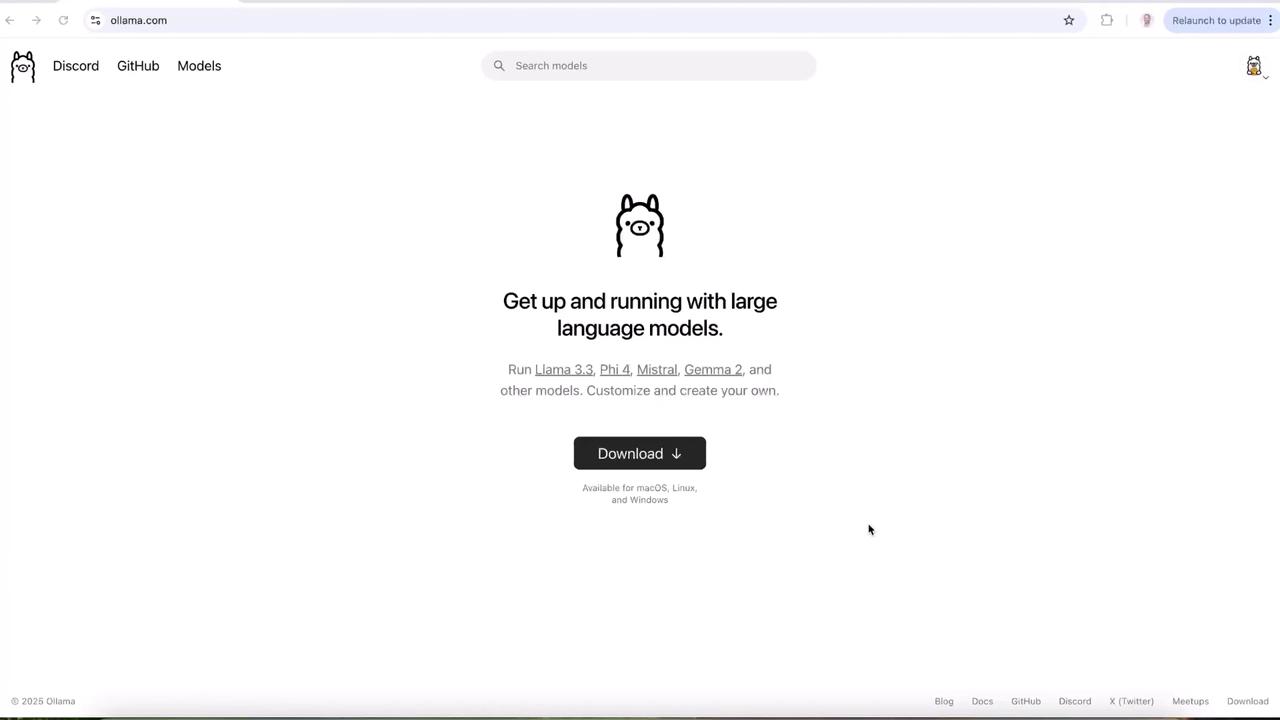

1. Downloading Ollama

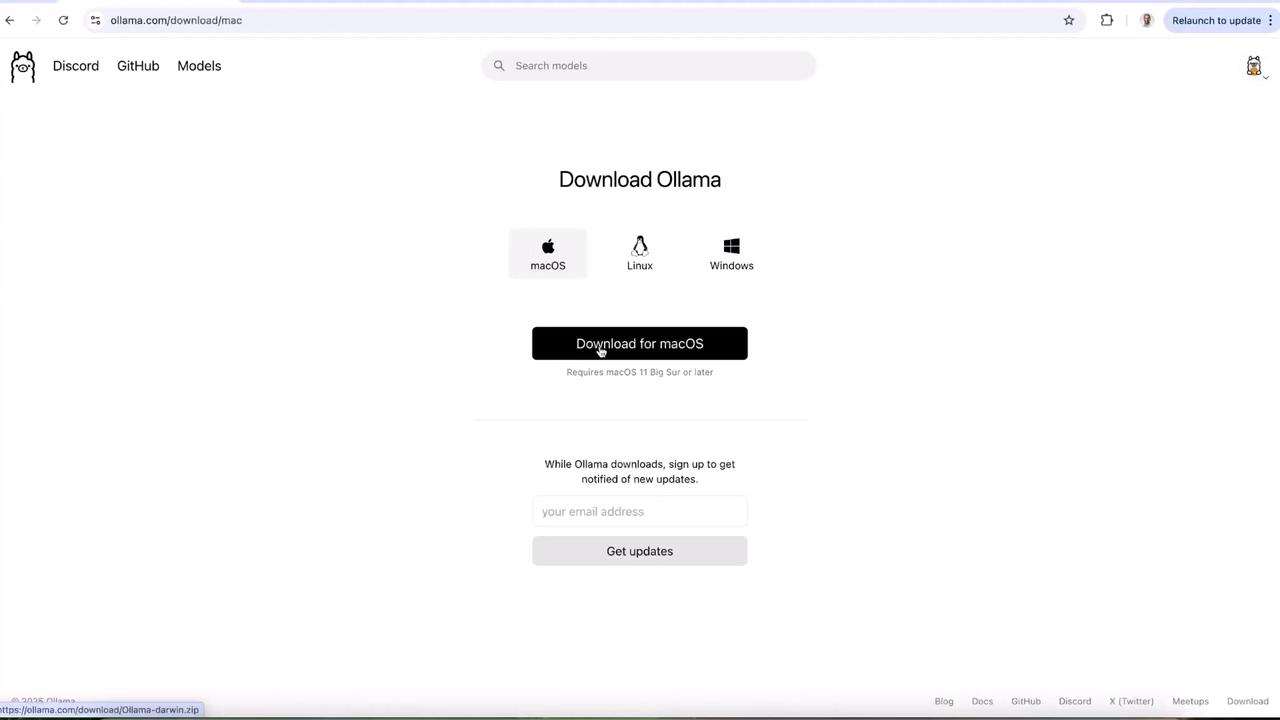

Visit the Ollama website and choose your operating system. Below is a quick reference for each platform:

| Operating System | File Format | Action |
|---|---|---|
| macOS | .zip | Download, unzip, and move to Applications |
| Linux | .tar.gz | Download and extract |
| Windows | .exe | Download and run installer |
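As a rough illustration of the table above, the following shell sketch maps the kernel name reported by `uname -s` to the matching download format. The function name `ollama_package_for` is hypothetical, and the mapping only mirrors the table; it is not something Ollama itself provides.

```shell
# Hypothetical helper: map a kernel name (as printed by `uname -s`)
# to the download format listed in the table above.
ollama_package_for() {
  case "$1" in
    Darwin)               echo ".zip" ;;
    Linux)                echo ".tar.gz" ;;
    # Windows reports these names only under Git Bash, MSYS2, or Cygwin.
    MINGW*|MSYS*|CYGWIN*) echo ".exe" ;;
    *)                    echo "unknown"; return 1 ;;
  esac
}

# Report the format for the machine this script runs on.
echo "Download format for this machine: $(ollama_package_for "$(uname -s)")"
```

Passing the kernel name as an argument, rather than calling `uname` inside the function, keeps the mapping easy to check for platforms other than the one you are on.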

Select the download button for your OS, then you’ll land on a page like this:

2. Installing on macOS

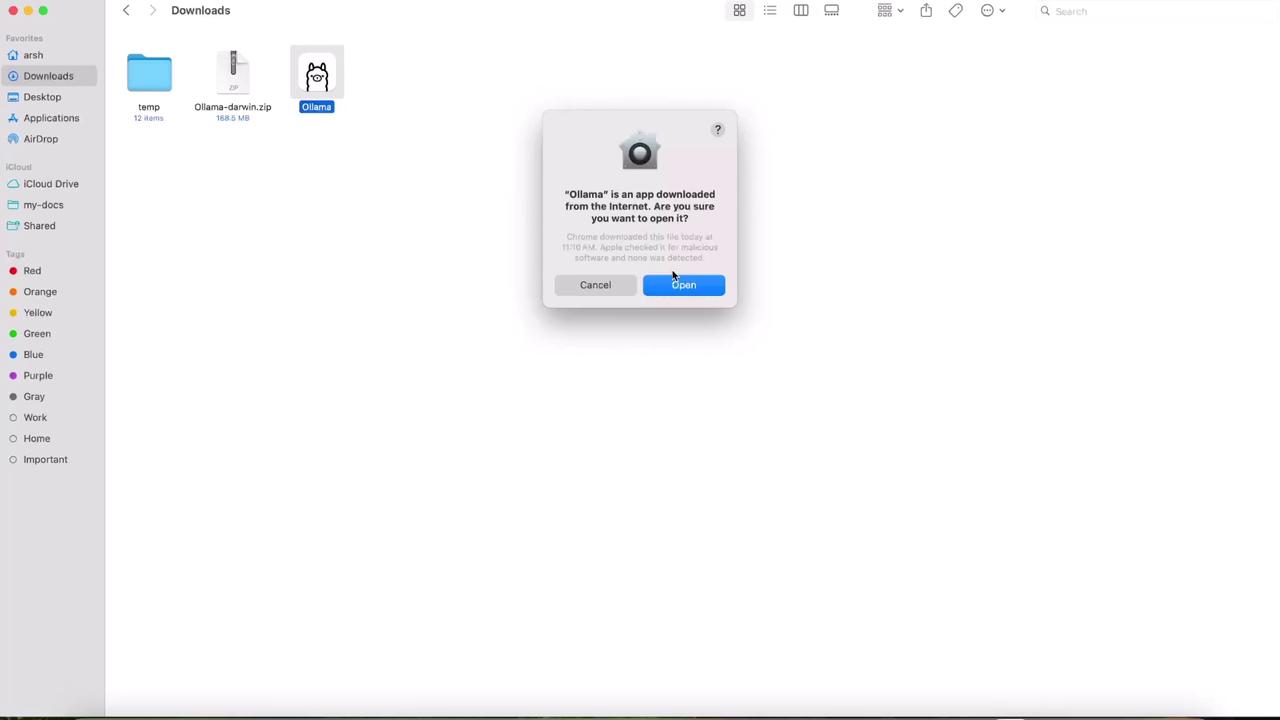

Once the .zip file has finished downloading:

- Open Finder and navigate to your Downloads folder.

- Unzip the archive and double-click Ollama.app.

- When prompted, click Open.

- macOS will ask to move the app to Applications—confirm to complete the install.

Note

If you see a security prompt about an unidentified developer, go to System Preferences > Security & Privacy (System Settings > Privacy & Security on macOS 13 and later) and allow the app to run.
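For readers who prefer the terminal, the Finder steps above can be approximated from a shell. This is only a sketch: the function name `install_ollama_app` is hypothetical, the archive path in the example call is an assumption (use whatever file name your browser saved), and the code assumes the archive contains `Ollama.app` at its top level.

```shell
# Hypothetical helper mirroring the Finder steps: unzip the download
# and move Ollama.app into the Applications folder.
# $1 = path to the downloaded .zip, $2 = destination (default /Applications)
install_ollama_app() {
  archive="$1"
  dest="${2:-/Applications}"

  if [ ! -f "$archive" ]; then
    echo "archive not found: $archive" >&2
    return 1
  fi

  # Unpack into a scratch directory so the Downloads folder stays untouched.
  workdir="$(mktemp -d)" || return 1
  unzip -q "$archive" -d "$workdir" || return 1

  # Assumption: the archive holds Ollama.app at its top level.
  mv "$workdir/Ollama.app" "$dest/" || return 1
  rm -rf "$workdir"
  echo "Installed $dest/Ollama.app"
}

# Example call (the file name is an assumption; adjust to your download):
#   install_ollama_app "$HOME/Downloads/Ollama-darwin.zip"
#   open /Applications/Ollama.app
```

The function stops at the first failing step, so a missing or corrupt archive never results in a half-moved app bundle.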

3. Verifying the CLI

Launch your terminal. On first run, you may be asked to install the Ollama CLI—type yes to proceed. Once installed, confirm everything is set up by running:

    ollama

You should see output similar to:

    Usage:
      ollama [flags]
      ollama [command]

    Available Commands:
      serve       Start ollama
      create      Create a model from a Modelfile
      show        Show information for a model
      run         Run a model
      stop        Stop a running model
      pull        Pull a model from a registry
      push        Push a model to a registry
      list        List models
      ps          List running models
      cp          Copy a model
      rm          Remove a model
      help        Help about any command

    Flags:
      -h, --help      help for ollama
      -v, --version   Show version information

    Use "ollama [command] --help" for more information about a command.

Note

If you don’t see the above output, ensure your PATH includes the Ollama binary or restart your terminal session.
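One way to check the PATH condition mentioned in the note is to ask the shell where it finds the binary before calling it. The sketch below uses a hypothetical helper named `find_on_path`; the `--version` flag comes from the usage listing above.

```shell
# Hypothetical helper: report where a command lives on PATH,
# or explain that it was not found.
find_on_path() {
  if location="$(command -v "$1")"; then
    echo "$1 found at $location"
  else
    echo "$1 not found on PATH" >&2
    return 1
  fi
}

# Print the installed version only when the binary is reachable.
if find_on_path ollama; then
  ollama --version
fi
```

If the lookup fails, appending the directory that contains the binary to PATH is a common fix, for example `export PATH="$PATH:/usr/local/bin"`. That directory is an assumption and depends on how Ollama was installed on your machine.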

Congratulations! You now have Ollama installed and can run LLMs locally.

4. Next Steps

To get started, try running Meta's Llama 3.2 model:

    ollama run llama3.2

Explore the full set of commands with ollama help or check out the official documentation to learn how to create and manage your own models.
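As a starting point for that exploration, the sketch below chains three of the subcommands from the usage listing (`pull`, `list`, `run`) into one hypothetical helper, `try_model`. The model tag `llama3.2` is assumed to be the library name for Llama 3.2, and the default prompt is purely illustrative.

```shell
# Hypothetical helper: download a model, confirm it is stored locally,
# and send it a single prompt.
# $1 = model tag (default llama3.2), $2 = prompt text
try_model() {
  model="${1:-llama3.2}"
  prompt="${2:-Explain what a large language model is in one sentence.}"

  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama is not installed or not on PATH" >&2
    return 1
  fi

  ollama pull "$model" || return 1   # fetch the model from the registry
  ollama list                        # the model should now appear here
  ollama run "$model" "$prompt"      # one-shot prompt instead of a chat session
}

# Example call:
#   try_model llama3.2 "Why is the sky blue?"
```

Passing the prompt as an argument to `ollama run` returns a single answer and exits, which suits scripts better than the interactive session you get when the prompt is omitted.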
