AI-900: Microsoft Certified Azure AI Fundamentals

Fundamentals of Machine Learning

Deep Learning

Deep learning is an advanced subfield of machine learning inspired by the structure and function of the human brain. Just as billions of neurons in our brain communicate through electrochemical signals to help us think, see, and make decisions, artificial neural networks in deep learning loosely imitate this behavior: interconnected artificial neurons process data and identify patterns in it.

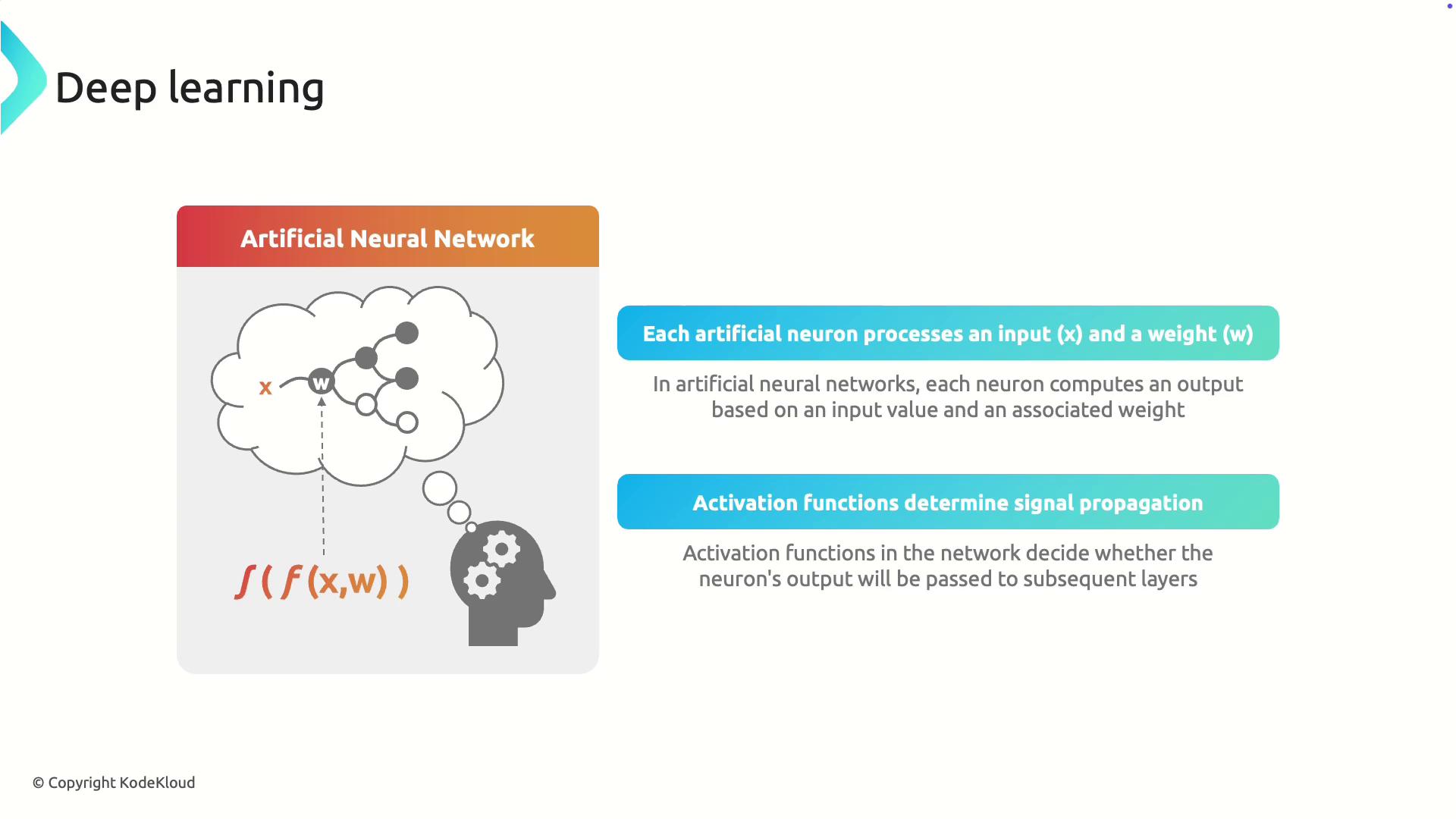

Artificial Neural Networks

Artificial neural networks loosely model biological neurons by receiving input, processing it, and transmitting output. Each artificial neuron multiplies every input by a weight that reflects the importance of that feature, then sums the results (usually together with a bias term). For instance, when predicting house prices, the number of rooms may be weighted differently than the room size. An activation function then transforms this weighted sum and determines how strongly the neuron’s output is passed to the next layer, which also lets the network capture non-linear relationships.
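To make the weighted-sum idea concrete, here is a minimal sketch of a single artificial neuron. The feature values, weights, bias, and the choice of ReLU as the activation function are illustrative assumptions, not values from the course.

```python
def relu(z):
    """ReLU activation: passes positive values through, outputs 0 otherwise."""
    return max(0.0, z)


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(z)


# Hypothetical house features: [number of rooms, average room size in m^2]
features = [3.0, 20.0]
weights = [0.5, 0.1]  # illustrative values, not learned from data
bias = -1.0

output = neuron(features, weights, bias)
print(output)
```

A larger weight means the corresponding feature has more influence on the neuron's output. During training these weights are learned rather than set by hand.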

Deep learning models, also known as deep neural networks (DNNs), consist of multiple layers that progressively refine the data. This hierarchical structure allows these models to learn complex patterns gradually from raw input data.

Note

In image recognition tasks, early layers may detect edges, mid-layers capture shapes, and deeper layers recognize complete objects, leading to highly effective classification and prediction.

Example: Classifying Animal Sounds

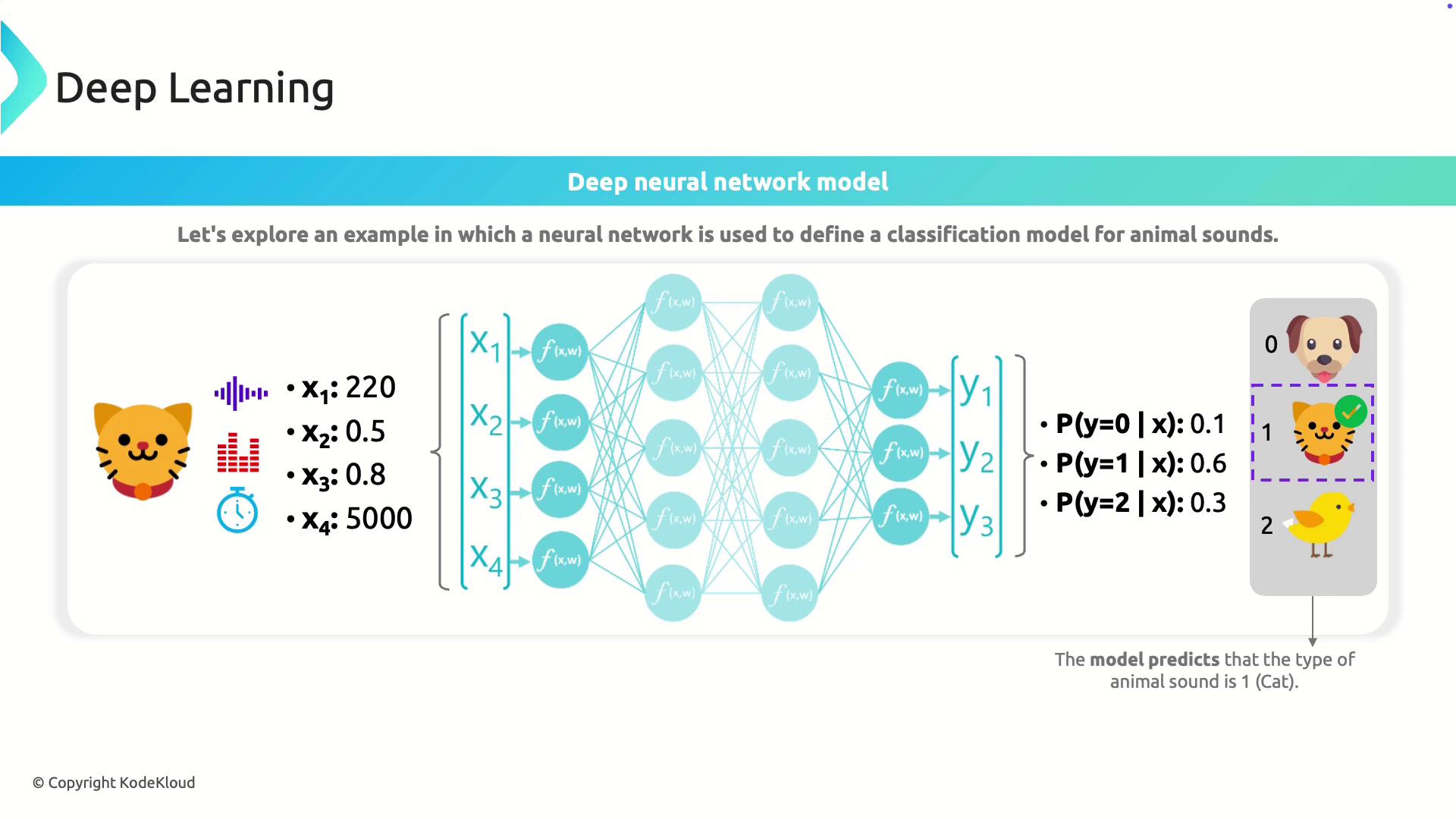

This section explains how a deep learning model can classify animal sounds using a step-by-step process. The model processes a feature vector X, which includes characteristics such as pitch, duration, amplitude, and frequency. Each feature is processed by a corresponding neuron in the input layer.

1. Input Layer and Feature Extraction

Each neuron in the input layer receives one feature from X. Every feature is multiplied by its own weight (W), and the weighted values are summed as they are passed on to the next layer. These weights are adjusted iteratively during training, enabling the model to learn the contribution of each feature to the final prediction.

2. Hidden Layers

The output from the input layer is sent through one or more hidden layers. In these layers, every neuron connects to all neurons in the subsequent layer, allowing the model to refine and abstract features iteratively. This process helps uncover complex patterns essential for accurate predictions.
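The sketch below shows one way a fully connected hidden layer could be written, with two such layers applied in sequence. The feature values, layer sizes, weights, and the `tanh` activation are all assumptions chosen for illustration.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input value."""
    return [
        math.tanh(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical, already normalized sound features:
# pitch, duration, amplitude, frequency
x = [0.8, 0.2, 0.5, 0.9]

# Illustrative (untrained) parameters: 4 inputs -> 3 neurons -> 2 neurons
w1 = [[0.1, -0.2, 0.3, 0.4], [0.5, 0.1, -0.3, 0.2], [-0.4, 0.3, 0.2, 0.1]]
b1 = [0.0, 0.1, -0.1]
w2 = [[0.3, -0.1, 0.2], [-0.2, 0.4, 0.1]]
b2 = [0.0, 0.0]

h1 = dense(x, w1, b1)   # first hidden layer
h2 = dense(h1, w2, b2)  # second hidden layer builds on the first
print(h1, h2)
```

Each layer takes the previous layer's outputs as its inputs, which is how features get refined step by step.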

3. Output Layer and Prediction

In the final output layer, the network computes probabilities for each possible class. For example, a probability distribution might be 0.1 for dog, 0.6 for cat, and 0.3 for bird. The model then selects the class with the highest probability as its prediction.
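The text does not say how the output layer turns raw scores into probabilities. A common choice is the softmax function, which is assumed in this sketch. The raw scores (`logits`) are hypothetical and were picked so that the result matches the example distribution above.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the max improves numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


classes = ["dog", "cat", "bird"]

# Hypothetical raw scores chosen to reproduce the 0.1 / 0.6 / 0.3 example
logits = [math.log(0.1), math.log(0.6), math.log(0.3)]

probs = softmax(logits)

# Pick the class with the highest probability
predicted = classes[probs.index(max(probs))]
print(predicted)
```

Here `predicted` is `"cat"`, because 0.6 is the largest of the three probabilities.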

The classification process can be summarized as:

- Start with a feature vector describing the sound.

- Process the features in the input layer with weighted summation.

- Pass the data through multiple hidden layers for iterative feature refinement.

- Compute output probabilities in the final layer and choose the class with the maximum probability.

Learning Through Iterative Training

The power of deep learning lies in its ability to learn from repeated exposure to data. During training, the model performs the following steps:

- Propagates training data forward through the network to generate predicted probabilities.

- Compares these predictions with actual labels (ground truth) to determine the loss, which measures the error.

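- Adjusts the weights to minimize the loss: backpropagation calculates how much each weight contributed to the error, and an optimization algorithm such as gradient descent updates the weights accordingly.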

For example, if the correct label for a sound is [0, 1, 0] (indicating a cat) and the model outputs [0.1, 0.6, 0.3], the network will update its weights to reduce the error.
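As a rough illustration of how this error can be measured, the sketch below computes a cross-entropy loss for the example above. Cross-entropy is a common loss for classification, but it is an assumption here, since the text does not name a specific loss function.

```python
import math


def cross_entropy(probs, one_hot):
    """Cross-entropy between predicted probabilities and a one-hot label."""
    return -sum(y * math.log(p) for p, y in zip(probs, one_hot))


target = [0, 1, 0]           # correct label: cat
predicted = [0.1, 0.6, 0.3]  # model output from the example

loss = cross_entropy(predicted, target)  # equals -ln(0.6)

# A more confident correct prediction yields a smaller loss
better_loss = cross_entropy([0.05, 0.9, 0.05], target)
print(loss, better_loss)
```

With a one-hot label, only the probability assigned to the correct class affects the loss, so training pushes the 0.6 for cat toward 1.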

Note

Repeating this cycle of forward propagation, loss calculation, and weight adjustment over many passes through the training data is what allows deep learning models to steadily improve their predictions.
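The training cycle can be sketched as a small loop. To keep it short, this example uses a single layer with a softmax output, a cross-entropy loss, and plain gradient descent. The toy data, learning rate, and number of epochs are hypothetical. In a deeper network, backpropagation would carry the same error signal backward through the hidden layers.

```python
import math


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, weights, biases):
    """Forward pass: weighted sums per class, then softmax probabilities."""
    logits = [sum(xj * wj for xj, wj in zip(x, row)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)


def mean_loss(data, weights, biases):
    """Average cross-entropy loss over the dataset."""
    return sum(-math.log(forward(x, weights, biases)[label])
               for x, label in data) / len(data)


# Hypothetical toy data: ([pitch, duration], class index), 0=dog 1=cat 2=bird
data = [([0.2, 0.9], 0), ([0.6, 0.4], 1), ([0.9, 0.1], 2)]

weights = [[0.0, 0.0] for _ in range(3)]
biases = [0.0, 0.0, 0.0]
learning_rate = 0.5

initial_loss = mean_loss(data, weights, biases)

for epoch in range(200):
    grad_w = [[0.0, 0.0] for _ in range(3)]
    grad_b = [0.0, 0.0, 0.0]
    for x, label in data:
        probs = forward(x, weights, biases)
        for k in range(3):
            # Gradient of the loss w.r.t. the raw score: prediction - target
            error = probs[k] - (1.0 if k == label else 0.0)
            grad_b[k] += error / len(data)
            for j, xj in enumerate(x):
                grad_w[k][j] += error * xj / len(data)
    # Gradient descent step: move each parameter against its gradient
    for k in range(3):
        biases[k] -= learning_rate * grad_b[k]
        for j in range(2):
            weights[k][j] -= learning_rate * grad_w[k][j]

final_loss = mean_loss(data, weights, biases)
print(initial_loss, final_loss)
```

The loss after training is lower than the initial loss, which reflects the model's predictions moving closer to the labels with each pass.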

Summary

Deep learning models draw inspiration from the human brain to learn complex patterns from data by processing inputs through multiple layers. The essential aspects include:

- Feature extraction in the input layer.

- Progressive abstraction in hidden layers.

- Final prediction in the output layer using probabilities.

These principles apply across many AI workloads, and services such as Azure Machine Learning can be used to train and deploy deep learning models built on them.

By understanding and applying these deep learning principles, you can leverage powerful techniques for applications in image recognition, natural language processing, and beyond.
