AWS Certified AI Practitioner

Applications of Foundation Models

Design Considerations for Foundation Model Applications

Welcome to this detailed lesson on designing applications with foundation models. In this guide, we will explore essential design considerations that directly impact performance, scalability, and cost efficiency. Whether you are building real-time applications or complex AI solutions, understanding these trade-offs is key to success.

Model Selection and Cost Considerations

When choosing a foundation model, it is crucial to balance cost, accuracy, latency, and precision. Consider these important questions:

- Was the model pre-trained on a massive third-party dataset?

- What are the cost implications associated with using this model?

- How do its accuracy and inference speed compare when pitted against simpler alternatives?

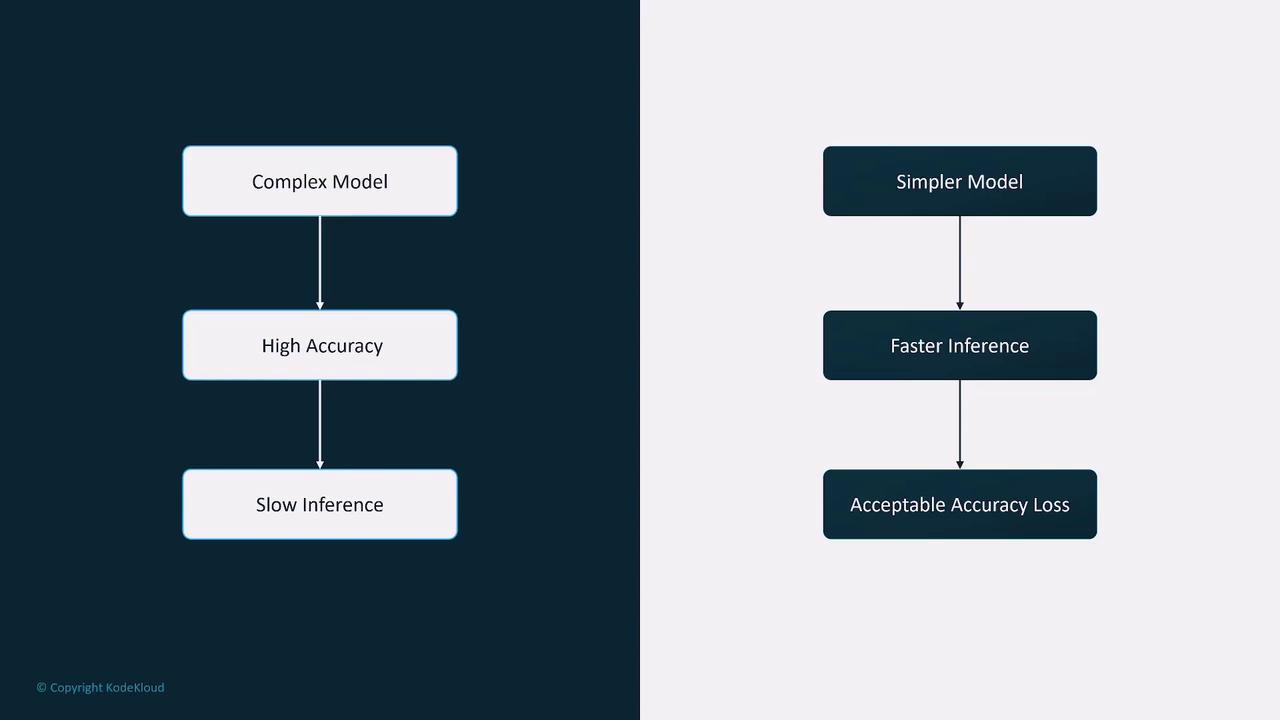

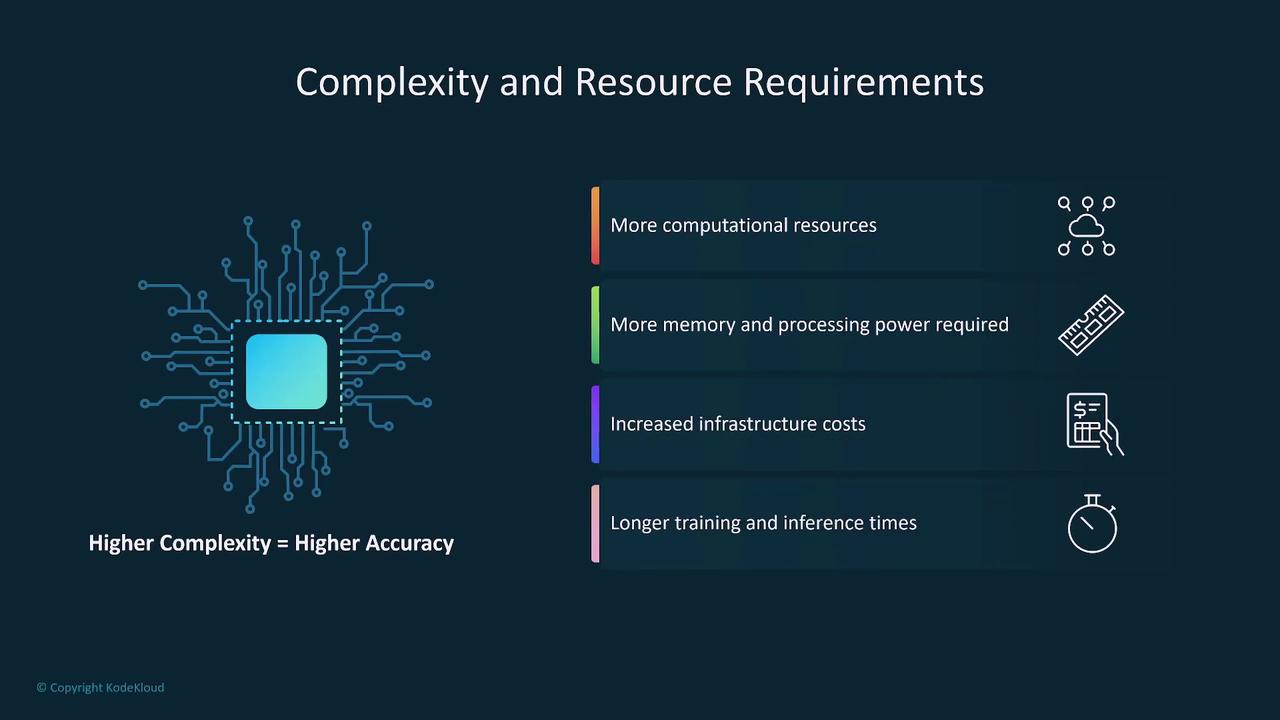

Complex models generally provide higher accuracy but cost more and run inference more slowly. Simpler models, on the other hand, are faster and cheaper to run, though they may be slightly less accurate.

For instance, suppose Model A achieves 98% accuracy and costs $500,000, while Model B achieves 97% accuracy and costs less than half as much. Model A then costs more than $250,000 extra for a single percentage point of accuracy, so you must analyze whether that marginal gain justifies the additional cost.
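The trade-off becomes concrete if you compute the extra cost per additional percentage point of accuracy. This is a minimal sketch; the lesson does not give Model B's exact cost, so the $240,000 figure below is a hypothetical value chosen to satisfy "less than half as much", and the `ModelOption` and `marginal_cost_per_point` names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ModelOption:
    name: str
    accuracy_pct: float  # accuracy as a percentage, e.g. 98.0
    cost_usd: float      # total cost in US dollars


def marginal_cost_per_point(better: ModelOption, cheaper: ModelOption) -> float:
    """Extra dollars paid for each additional percentage point of accuracy."""
    accuracy_gain = better.accuracy_pct - cheaper.accuracy_pct
    if accuracy_gain <= 0:
        raise ValueError("the 'better' model must be more accurate")
    return (better.cost_usd - cheaper.cost_usd) / accuracy_gain


model_a = ModelOption("Model A", accuracy_pct=98.0, cost_usd=500_000)
# Hypothetical cost: the lesson only says Model B costs "less than half as much".
model_b = ModelOption("Model B", accuracy_pct=97.0, cost_usd=240_000)

extra = marginal_cost_per_point(model_a, model_b)
print(f"Each extra accuracy point costs ${extra:,.0f}")  # Each extra accuracy point costs $260,000
```

Whether $260,000 per point is worth paying depends on what a wrong prediction costs in your particular application.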

Latency is another pivotal factor. In applications such as real-time translation or self-driving vehicles, the model's inference speed is critical. While highly complex models may provide superior accuracy, they might not be suitable if they cannot meet real-time processing demands.
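One way to apply a latency requirement is as a hard filter: discard every model whose measured inference time exceeds the budget, then choose the most accurate of the remaining candidates. The sketch below assumes you have already benchmarked each candidate; the model names, accuracy values, and latency figures are all hypothetical.

```python
from typing import NamedTuple, Optional


class Candidate(NamedTuple):
    name: str
    accuracy: float        # fraction between 0 and 1
    p95_latency_ms: float  # measured 95th-percentile inference latency


def pick_model(candidates: list[Candidate], latency_budget_ms: float) -> Optional[Candidate]:
    """Return the most accurate candidate that meets the latency budget, or None."""
    feasible = [c for c in candidates if c.p95_latency_ms <= latency_budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda c: c.accuracy)


# Hypothetical benchmark results.
candidates = [
    Candidate("large-model", accuracy=0.98, p95_latency_ms=450.0),
    Candidate("medium-model", accuracy=0.96, p95_latency_ms=80.0),
    Candidate("small-model", accuracy=0.91, p95_latency_ms=15.0),
]

# A real-time use case with a 100 ms budget rules out the most accurate model.
choice = pick_model(candidates, latency_budget_ms=100.0)
print(choice.name)  # medium-model
```

Treating latency as a constraint rather than as one more score to trade off reflects the point above: a model that cannot respond in time is unusable, however accurate it is.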

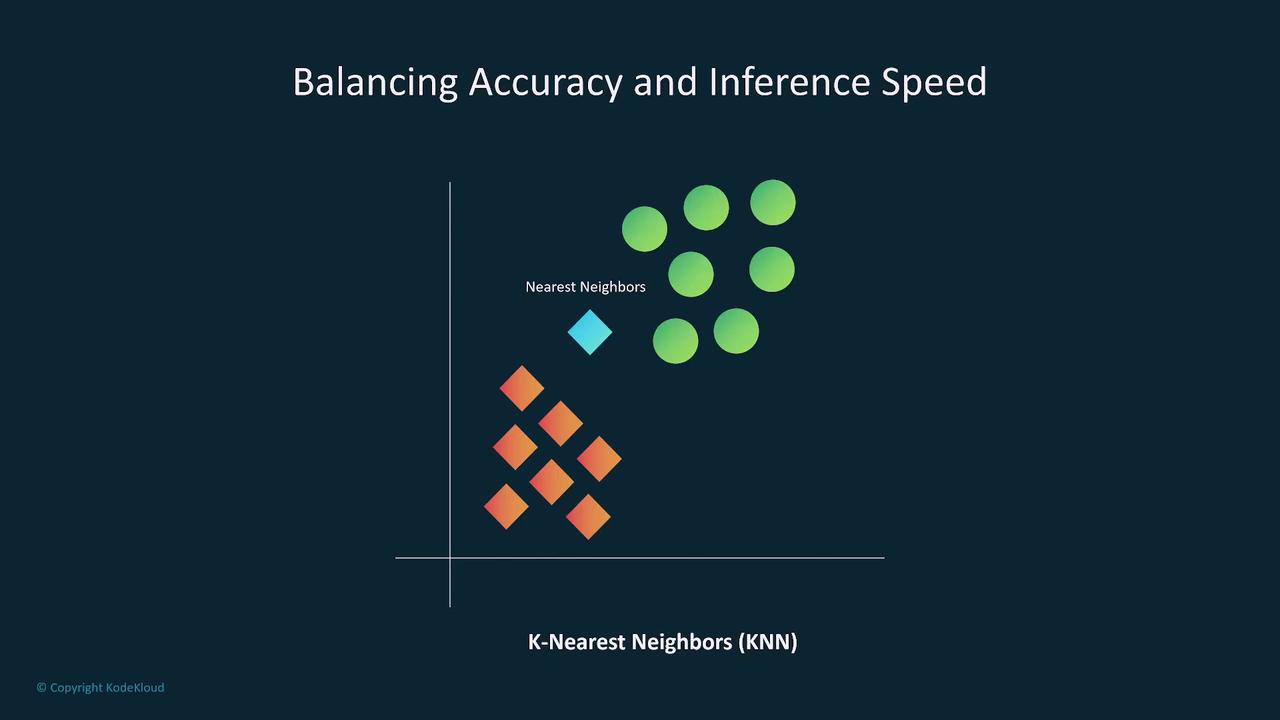

Model Complexity and Inference

Consider a K-Nearest Neighbors (KNN) model in a self-driving vehicle system. KNN does almost no work during training; instead, it performs most of its computation at inference time, comparing each new input against the stored training data. This makes every prediction computationally expensive, especially with large, high-dimensional data, so KNN is poorly suited to real-time decision-making. In such cases, a more complex model that does its heavy lifting during training may be necessary, because it can deliver the faster inference the application requires.
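Why KNN shifts its cost to inference is easy to see in a naive implementation: "training" only stores the data, while every prediction computes the distance from the query to every stored point, on the order of n·d operations for n points with d dimensions. This is a minimal, unoptimized sketch for illustration only; the `NaiveKNN` class and the toy sensor data are hypothetical.

```python
import math
from collections import Counter


class NaiveKNN:
    def __init__(self, k: int = 3):
        self.k = k
        self.points: list[list[float]] = []
        self.labels: list[str] = []

    def fit(self, points, labels):
        # "Training" just memorizes the data; no real computation happens here.
        self.points = [list(p) for p in points]
        self.labels = list(labels)
        return self

    def predict(self, query) -> str:
        # All the work happens at inference: one distance per stored point.
        distances = [
            (math.dist(query, p), label) for p, label in zip(self.points, self.labels)
        ]
        distances.sort(key=lambda pair: pair[0])
        nearest = [label for _, label in distances[: self.k]]
        # Majority vote among the k nearest neighbors.
        return Counter(nearest).most_common(1)[0][0]


# Hypothetical 2-D sensor features, each labeled with a driving action.
model = NaiveKNN(k=3).fit(
    points=[[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 0.9], [0.85, 0.95]],
    labels=["continue", "continue", "brake", "brake", "brake"],
)
print(model.predict([0.88, 0.85]))  # brake
```

Doubling the stored data doubles the work per prediction, which is exactly the behavior a real-time system cannot afford.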

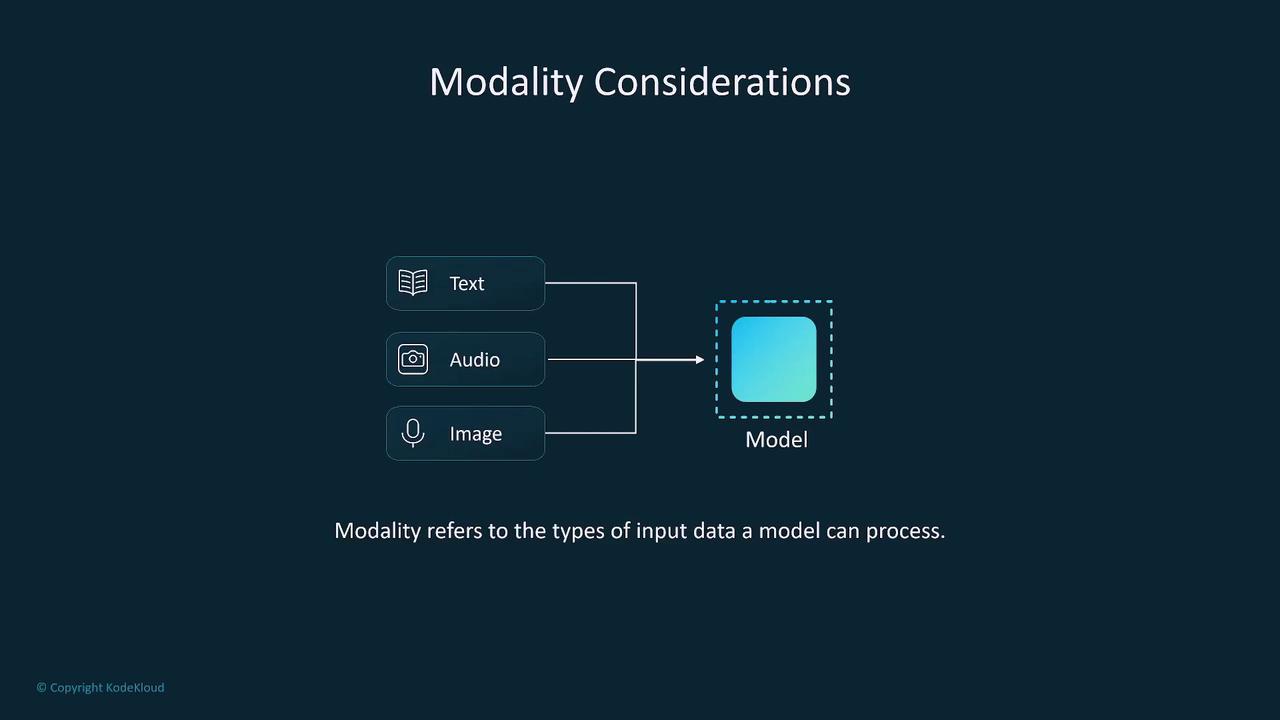

Modality and Data Input Considerations

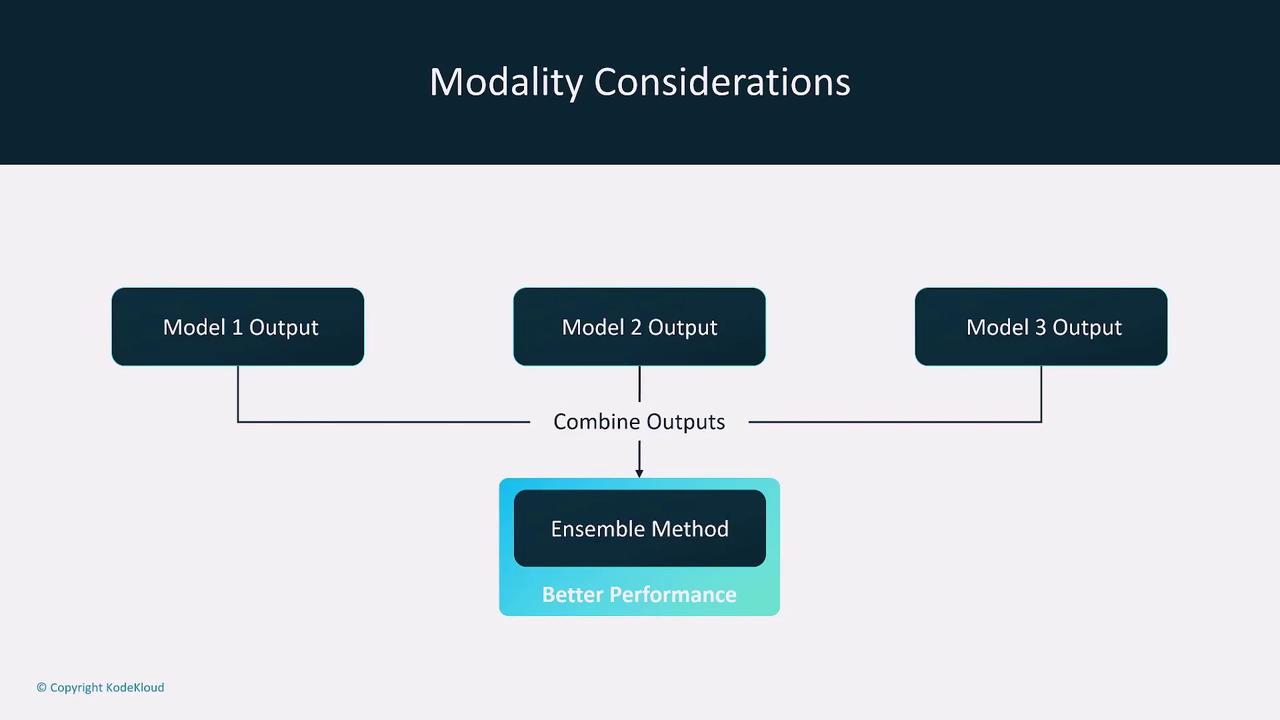

Modality refers to the type of input data a model can handle, such as text, images, or audio. Many simpler models are limited to one or two modalities, whereas multimodal models can process several types of input together. If you are working with single-modality models, consider using ensemble methods to combine the outputs of several specialized models.

Using ensemble methods not only broadens the types of supported data but often enhances the overall performance.
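A common way to combine single-input (single-modality) models is late fusion: each model scores the input independently, and the ensemble takes a weighted average of their class probabilities. This sketch assumes each specialized model returns a probability per class; the model outputs, class names, and weights are hypothetical placeholders, not outputs from a real service.

```python
def weighted_average_ensemble(
    predictions: list[dict[str, float]], weights: list[float]
) -> dict[str, float]:
    """Combine per-class probabilities from several models by weighted average."""
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per model")
    total_weight = sum(weights)
    classes = set().union(*predictions)  # every class any model reported
    return {
        cls: sum(w * p.get(cls, 0.0) for p, w in zip(predictions, weights)) / total_weight
        for cls in classes
    }


# Hypothetical outputs for one product listing, classified as "safe" or "unsafe".
text_model_output = {"safe": 0.70, "unsafe": 0.30}   # from a text-only model
image_model_output = {"safe": 0.20, "unsafe": 0.80}  # from an image-only model

combined = weighted_average_ensemble(
    [text_model_output, image_model_output], weights=[0.4, 0.6]
)
label = max(combined, key=combined.get)
print(label, round(combined[label], 2))  # unsafe 0.6
```

The weights let you trust the stronger modality more; in practice they would be tuned on validation data.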

For global applications, assess whether incorporating multilingual capabilities is necessary, especially in scenarios like real-time translation.

Choosing the Right Model Architecture

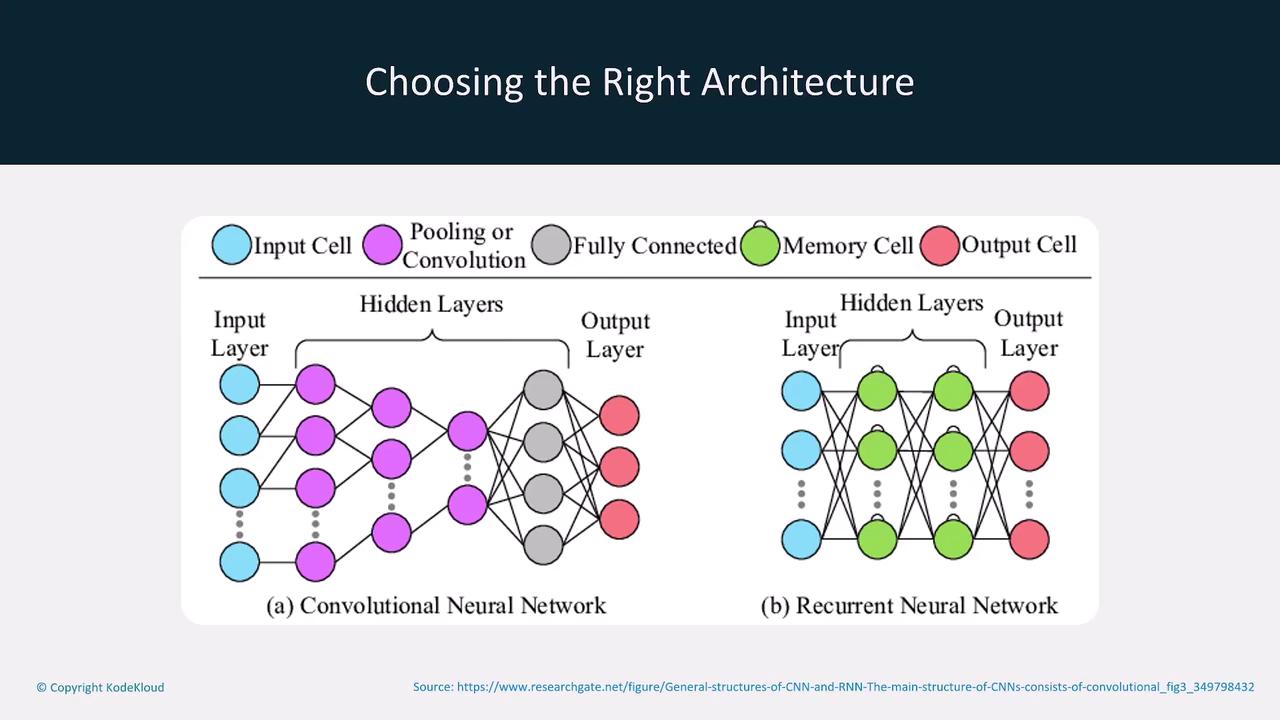

Different tasks require specific model architectures. For example:

- Convolutional Neural Networks (CNNs): Ideal for image recognition tasks.

- Recurrent Neural Networks (RNNs): Designed for sequential data such as text, which makes them better suited for natural language processing (NLP).

Selecting the right architecture is a core component of MLOps and should align with your business problem and data characteristics.

Even if the technical specifics of CNNs and RNNs extend beyond this lesson, understanding their fundamental differences helps in evaluating their impact on infrastructure costs, training time, and inference efficiency.

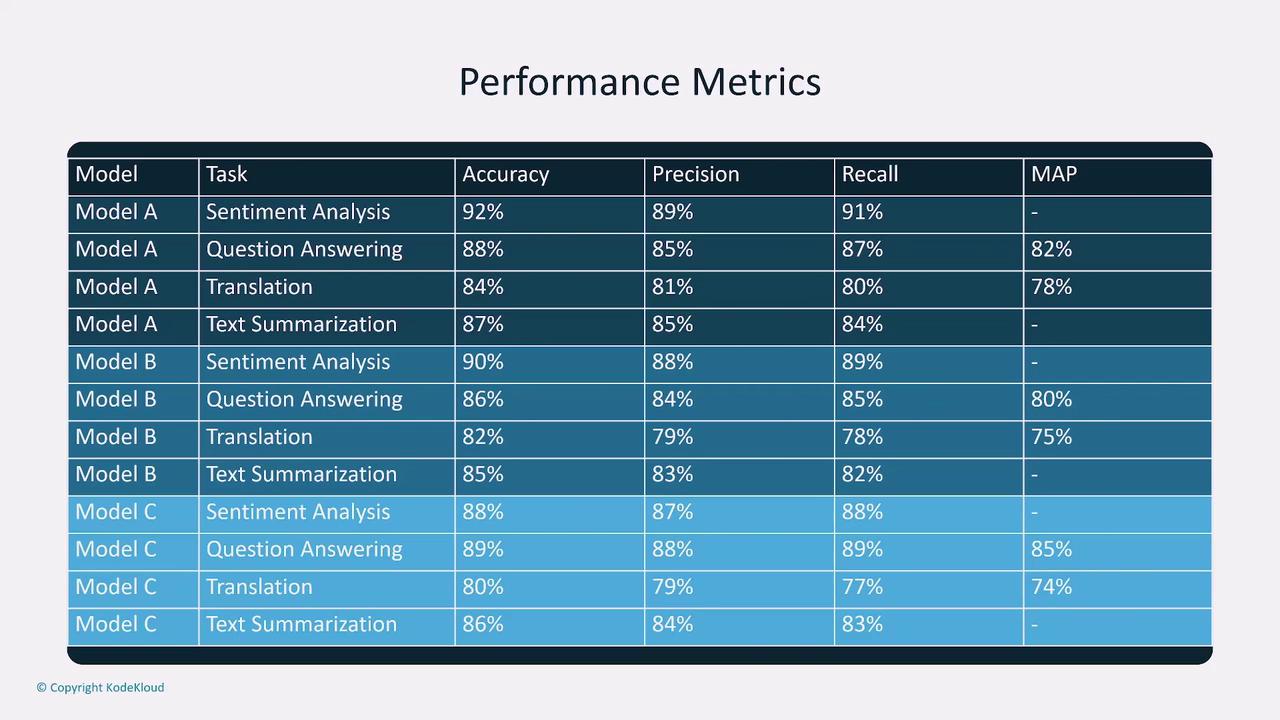

Performance Metrics

Performance metrics are critical for evaluating model effectiveness. Some key metrics include:

- Accuracy: How often the model makes correct predictions, measured as the number of correct predictions divided by the total number of predictions.

- Precision: The quality of positive predictions, measured as the proportion of true positives among all positive predictions.

- Recall: The model's ability to capture all relevant instances, measured as the proportion of true positives among all actual positive instances.

- F1 Score: The harmonic mean of precision and recall, especially useful for imbalanced datasets.
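These definitions reduce to simple arithmetic on the counts of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN). A minimal sketch, using made-up counts for illustration:

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Precision: of everything predicted positive, how much was actually positive?
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of everything actually positive, how much did the model find?
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts from evaluating a classifier on 100 examples.
metrics = classification_metrics(tp=40, fp=10, fn=20, tn=30)
print(metrics)
```

With these counts, accuracy is 0.70 and precision is 0.80, but recall is only about 0.67, which pulls F1 down to about 0.73.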

For ranking and retrieval tasks, mean average precision (MAP) averages a precision-based score across multiple queries. Evaluation criteria vary by application: for instance, BLEU scores are popular in translation tasks, while sentiment analysis, as a classification task, is usually evaluated with metrics such as those listed above.
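Average precision (AP) scores a single ranked result list by averaging the precision at each rank where a relevant item appears; MAP is then the mean of AP across queries. A minimal sketch, assuming binary relevance judgments; by default it normalizes AP by the number of relevant items found in the list, and you can pass `total_relevant` to also penalize relevant items that were never retrieved. The relevance judgments are hypothetical.

```python
from typing import Optional


def average_precision(relevance: list[bool], total_relevant: Optional[int] = None) -> float:
    """AP for one ranked list; relevance[i] is True if the item at rank i+1 is relevant.

    total_relevant is the number of relevant items that exist for the query.
    If omitted, it defaults to the number of relevant items found in the list.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this cut-off
    denominator = total_relevant if total_relevant is not None else hits
    return precision_sum / denominator if denominator else 0.0


def mean_average_precision(ranked_lists: list[list[bool]]) -> float:
    """MAP: the mean of per-query average precision."""
    return sum(average_precision(r) for r in ranked_lists) / len(ranked_lists)


# Hypothetical relevance judgments for the top 4 results of two queries.
query_1 = [True, False, True, False]   # AP = (1/1 + 2/3) / 2
query_2 = [False, True, False, False]  # AP = (1/2) / 1
print(round(mean_average_precision([query_1, query_2]), 4))  # 0.6667
```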

When dealing with imbalanced datasets, high accuracy might be misleading. In mission-critical areas such as medical diagnosis, false negatives could have severe consequences. Always evaluate metrics within the context of the specific application.
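A small example shows why accuracy can mislead on imbalanced data. Suppose, hypothetically, that 10 of 1,000 patients have a condition and a trivial model predicts "healthy" for everyone:

```python
# Hypothetical screening data: 1 = has the condition, 0 = healthy.
actual = [1] * 10 + [0] * 990
predicted = [0] * 1000  # a trivial model that always predicts "healthy"

correct = sum(a == p for a, p in zip(actual, predicted))
accuracy = correct / len(actual)

true_positives = sum(a == 1 and p == 1 for a, p in zip(actual, predicted))
recall = true_positives / sum(actual)

print(f"accuracy = {accuracy:.1%}")  # accuracy = 99.0%
print(f"recall   = {recall:.1%}")    # recall   = 0.0%
```

The model looks 99% accurate yet misses every patient who has the condition: all 10 positive cases are false negatives.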

Customizing Models

Customization can be achieved through different approaches:

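- Fine-Tuning: Further training an existing pre-trained model on new, task-specific data, adjusting its weights without retraining it from scratch. Lighter-weight adjustments, such as adding a system prompt, can also steer the model's behavior without changing its weights at all.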

- Full Retraining: Offers complete control and specialization, but with higher costs and longer training durations.

Fine-tuning generally incurs lower costs while still improving model specificity.

Always analyze these trade-offs holistically, considering model complexity, performance metrics, and cost implications together.

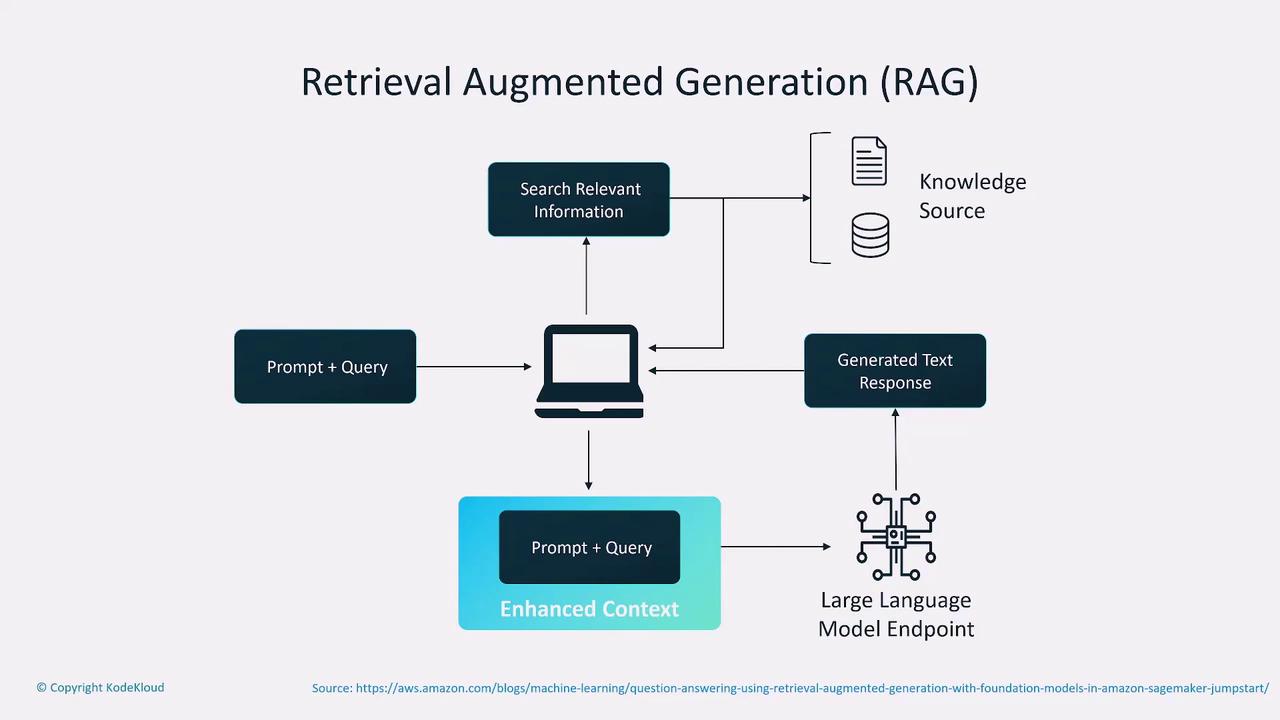

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) enhances model responses by retrieving additional, relevant documents during the query process. This technique merges retrieval-based methods with generative models, thereby improving the quality of answers in customer-facing applications. The process involves:

- Receiving a prompt.

- Retrieving pertinent data from a knowledge base.

- Combining this information with the initial query.

- Sending the enriched prompt to a large language model to generate the final response.
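The four steps can be sketched in a few lines. This is a minimal illustration under stated assumptions: the knowledge base is a hypothetical in-memory list, retrieval uses simple word overlap instead of embeddings, and `call_llm` is a hypothetical stand-in for whatever model endpoint your application uses, not a real API.

```python
import re

# Hypothetical knowledge base of support articles.
KNOWLEDGE_BASE = [
    "Refunds are issued within 5 business days of receiving a returned item.",
    "Standard shipping takes 3 to 7 business days within the continental US.",
    "Premium members get free expedited shipping on all orders.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[str]:
    """Step 2: return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    scored = sorted(
        KNOWLEDGE_BASE,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return scored[:k]


def build_prompt(query: str, documents: list[str]) -> str:
    """Step 3: combine the retrieved context with the original question."""
    context = "\n".join(f"- {doc}" for doc in documents)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def call_llm(prompt: str) -> str:
    """Step 4 placeholder (hypothetical): replace with a real model invocation."""
    return f"[model response to a {len(prompt)}-character prompt]"


def answer(query: str) -> str:
    # Step 1: receive the prompt, then run steps 2 to 4.
    documents = retrieve(query)
    return call_llm(build_prompt(query, documents))


question = "How long does shipping take?"
print(build_prompt(question, retrieve(question)))
print(answer(question))
```

In a production system, the word-overlap retriever would typically be replaced by an embedding-based search against a vector database.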

Implementing RAG can add complexity and cost due to the need for managing external knowledge sources, but the improved contextual accuracy can be a significant benefit.

Storing Embeddings in Vector Databases

Embeddings are numerical vector representations of data, such as tokens, sentences, or document chunks, that capture semantic meaning. Storing these embeddings in vector databases enables efficient semantic (similarity-based) retrieval, which is especially useful when working with large, predefined knowledge bases. Options for vector databases include:

| Database Technology | Description | Best Suited For |
|---|---|---|
| DocumentDB | Document-oriented storage and querying | JSON document workloads |
| Neptune | Graph database that captures relationships | Highly connected data |
| RDS with Postgres | Managed relational database; vector search via the pgvector extension | General-purpose applications |
| Aurora with Postgres | Scalable, managed relational database; vector search via the pgvector extension | High-performance, scalable workloads |
| OpenSearch | Search engine with vector support | Semantic search and retrieval |
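Under the hood, semantic retrieval from a vector store amounts to finding the stored embeddings closest to the query embedding, commonly measured by cosine similarity. The sketch below performs an exact, brute-force search over an in-memory dictionary. The 3-dimensional vectors and document IDs are hypothetical stand-ins: real embeddings come from an embedding model and typically have hundreds or thousands of dimensions, and managed vector databases such as those listed above can use approximate nearest-neighbor indexes to scale beyond a brute-force scan.

```python
import math

# Hypothetical document embeddings (real ones come from an embedding model).
VECTOR_STORE = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.2],
    "premium-shipping": [0.2, 0.8, 0.5],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector: list[float], k: int = 2) -> list[tuple[str, float]]:
    """Exact (brute-force) nearest-neighbor search by cosine similarity."""
    scores = [
        (doc_id, cosine_similarity(query_vector, vector))
        for doc_id, vector in VECTOR_STORE.items()
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical embedding of the query "How long does delivery take?"
for doc_id, score in top_k([0.15, 0.85, 0.3]):
    print(f"{doc_id}: {score:.3f}")
```

Note that the query never mentions "shipping", yet both shipping documents rank highest: similarity is computed on meaning-bearing vectors, not on shared keywords.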

Conclusion

Balancing cost, latency, and model complexity is paramount when designing AI solutions with foundation models. By carefully evaluating performance metrics, customizing models appropriately, and employing techniques like RAG and vector databases, you can tailor your solution to meet specific business objectives with efficiency and precision.

Note

For more insights and detailed documentation on model evaluation and deployment best practices, explore our MLOps Guidelines.

Thank you for reading this lesson. Stay tuned for future posts covering advanced topics like RAG, vector databases, and more in-depth model customization strategies.
