AWS Certified AI Practitioner

Fundamentals of Generative AI

Cost Considerations for AWS Gen AI Services: Redundancy, Availability, Performance, and More

Welcome back. In this article, we explore essential cost considerations for AWS generative AI services, focusing on redundancy, availability, performance, and more. When developing on AWS, it’s vital to balance your technical requirements against their cost. As with running your own data center, every gain in performance or availability comes at a price. The difference is that AWS offers flexible pricing models that let you optimize costs while still meeting your performance and availability needs.

Redundancy Considerations

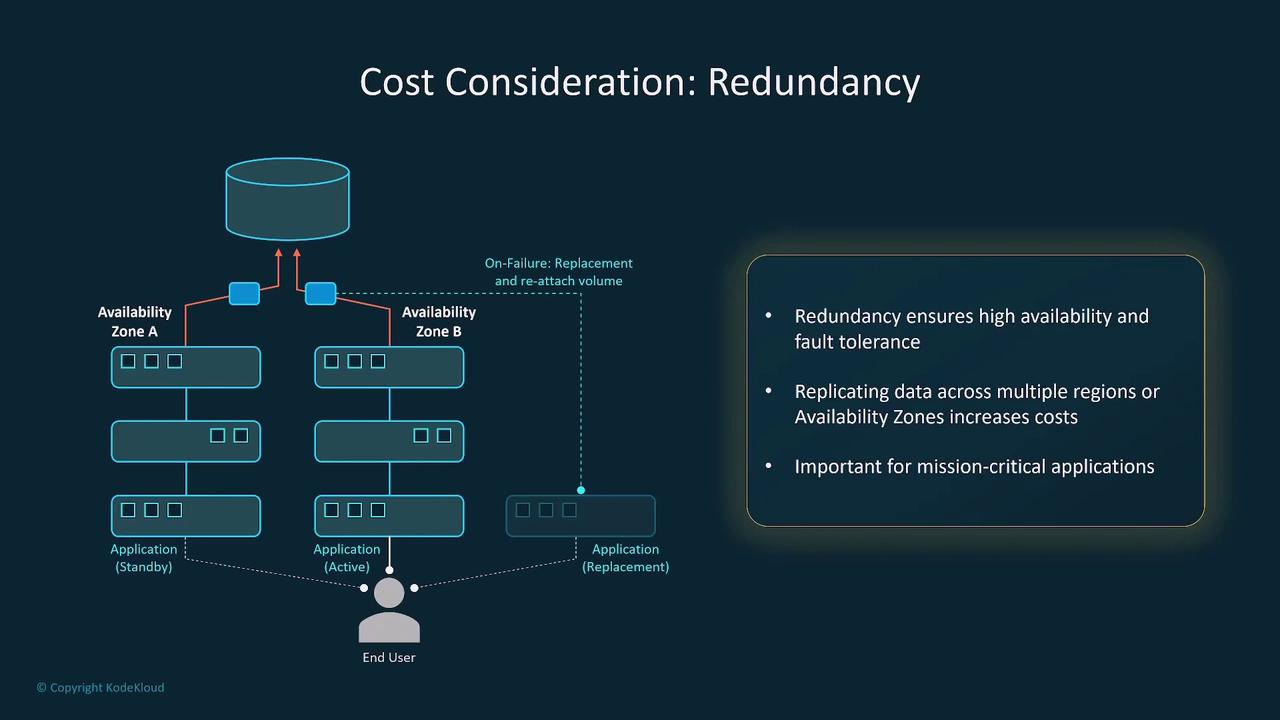

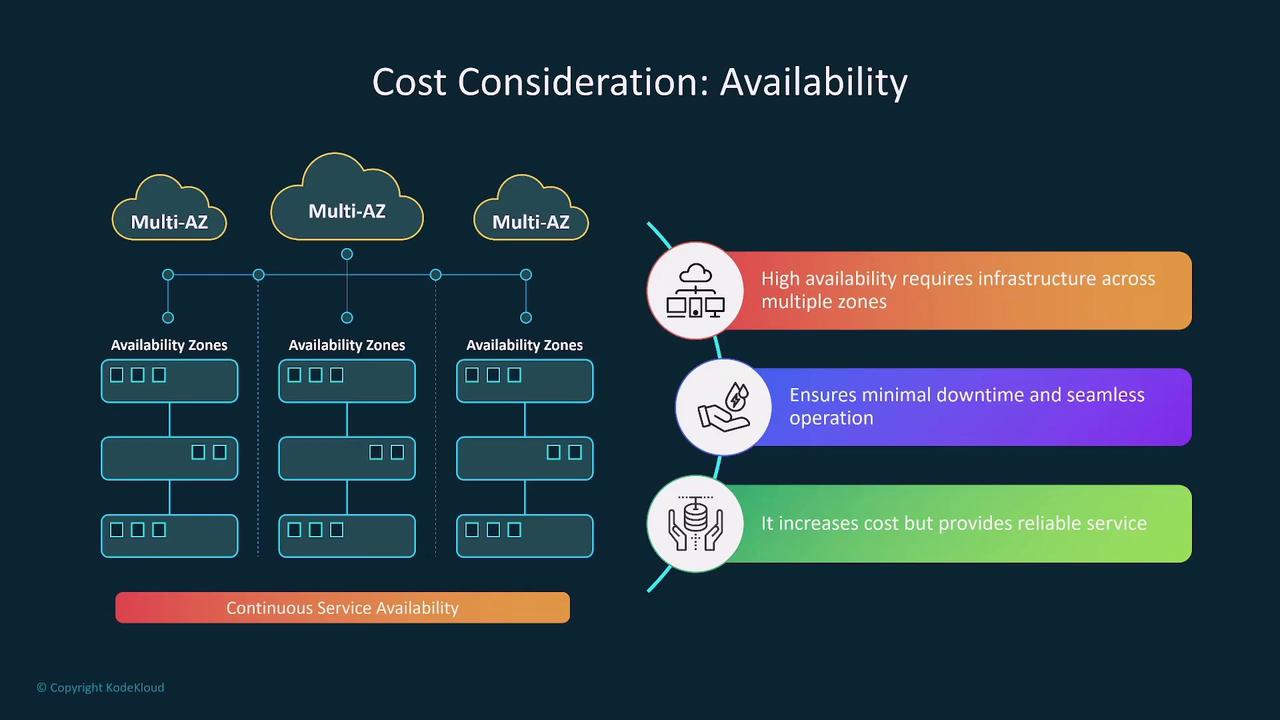

Redundancy is a critical factor. For example, you can make your model’s storage both redundant and highly available by distributing it across multiple Availability Zones within a Region. Additional redundancy increases costs, but it is a necessity when data loss cannot be tolerated or when your users expect high uptime.

Beyond local redundancy, evaluate the need for global redundancy. Running your application across multiple Regions reduces downtime and improves fault tolerance in mission-critical scenarios.
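The following minimal Python sketch makes the redundancy trade-off concrete by estimating the combined availability of redundant deployments. It assumes failures in each copy are independent. That assumption is optimistic, because real outages can be correlated. The 99.9% per-Region availability and the linear cost multiplier are hypothetical figures, not AWS SLAs.

```python
# Minimal sketch: combined availability of N redundant copies.
# ASSUMPTION: failures are independent (optimistic; real outages can be correlated).
# The 99.9% figure and the "one extra copy = one extra 1x cost" rule are hypothetical.

HOURS_PER_YEAR = 8760


def combined_availability(single: float, copies: int) -> float:
    """Probability that at least one of `copies` independent replicas is up."""
    if not 0.0 <= single <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("need at least one copy")
    return 1.0 - (1.0 - single) ** copies


def yearly_downtime_hours(availability: float) -> float:
    """Expected downtime per year for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    for regions in (1, 2, 3):
        a = combined_availability(0.999, regions)
        print(f"{regions} Region(s): availability={a:.9f}, "
              f"downtime={yearly_downtime_hours(a):.4f} h/yr, cost~{regions}x")
```

Each added copy cuts expected downtime by orders of magnitude while the cost grows roughly linearly. That is why global redundancy tends to be reserved for workloads where downtime is genuinely expensive.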

Performance and Compute Options

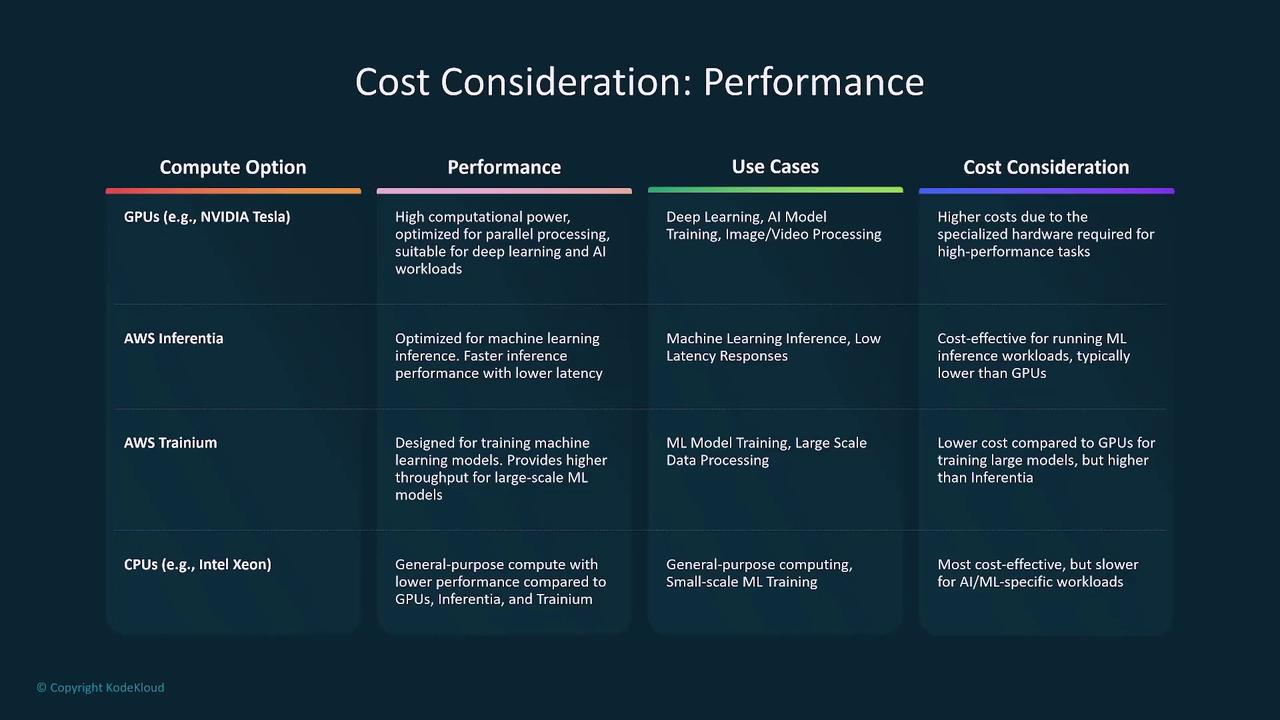

Performance is a significant cost factor, particularly when choosing between GPUs, AWS Inferentia, AWS Trainium, and standard CPUs. GPUs can greatly accelerate inference for machine learning applications. AWS Inferentia is a purpose-built accelerator designed for cost-effective, high-throughput inference. For training, AWS Trainium is a purpose-built accelerator that AWS positions as a lower-cost alternative to comparable GPU-based instances.

Consider whether your workload is batch or real-time and how mission-critical it is when selecting the appropriate compute option.

Additionally, token-based pricing models, in which you pay per word, character, or token processed instead of reserving capacity, can be more economical for variable and low-to-medium throughput workloads. For sustained high usage, however, it may be more prudent to evaluate committed-capacity options such as SageMaker Savings Plans or Amazon Bedrock Provisioned Throughput.
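You can locate the break-even point between pay-per-token and reserved capacity with simple arithmetic. In the minimal Python example below, every price is a hypothetical placeholder rather than a real AWS rate. The helper names such as `cheaper_option` are illustrative. The model also ignores whether one reserved unit could actually serve the heavier traffic.

```python
# Minimal sketch: pay-per-token vs. reserved (provisioned) capacity.
# ALL PRICES ARE HYPOTHETICAL placeholders, not real AWS rates.

HOURS_PER_MONTH = 730


def on_demand_monthly_cost(input_tokens: int, output_tokens: int,
                           price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Pay only for the tokens actually processed."""
    return (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k


def provisioned_monthly_cost(hourly_rate: float, units: int = 1) -> float:
    """Pay for reserved capacity every hour, whether it is used or not."""
    return hourly_rate * units * HOURS_PER_MONTH


def cheaper_option(input_tokens, output_tokens, price_in, price_out, hourly_rate, units=1):
    """Return (choice, on_demand_cost, provisioned_cost).
    ASSUMPTION: `units` reserved units can actually handle the traffic volume."""
    od = on_demand_monthly_cost(input_tokens, output_tokens, price_in, price_out)
    pt = provisioned_monthly_cost(hourly_rate, units)
    return ("on-demand" if od <= pt else "provisioned"), od, pt


if __name__ == "__main__":
    # Hypothetical: $0.003 / 1K input tokens, $0.015 / 1K output tokens, $20/hour per unit.
    for monthly_in, monthly_out in [(10_000_000, 2_000_000), (4_000_000_000, 1_000_000_000)]:
        choice, od, pt = cheaper_option(monthly_in, monthly_out, 0.003, 0.015, 20.0)
        print(f"{monthly_in:,} in / {monthly_out:,} out tokens: "
              f"on-demand ${od:,.2f} vs provisioned ${pt:,.2f} -> {choice}")
```

To see roughly where the crossover sits for your own workload, re-run the comparison with your token volumes and the currently published prices.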

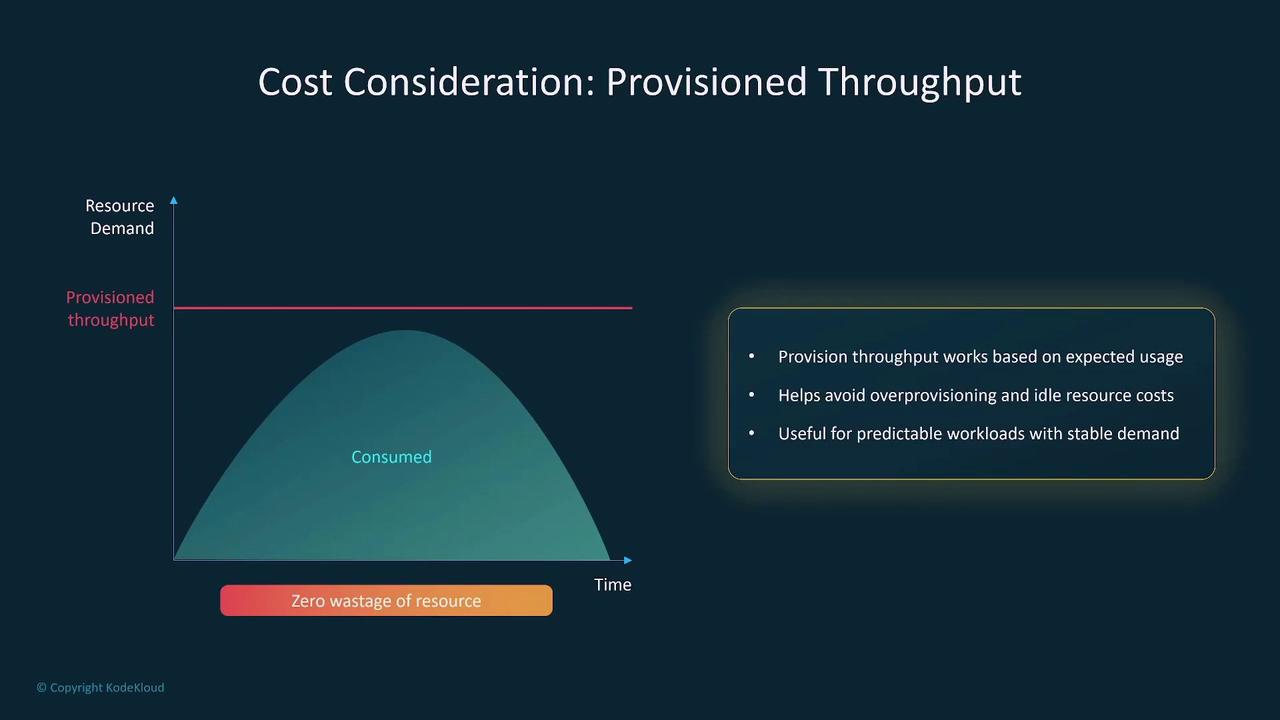

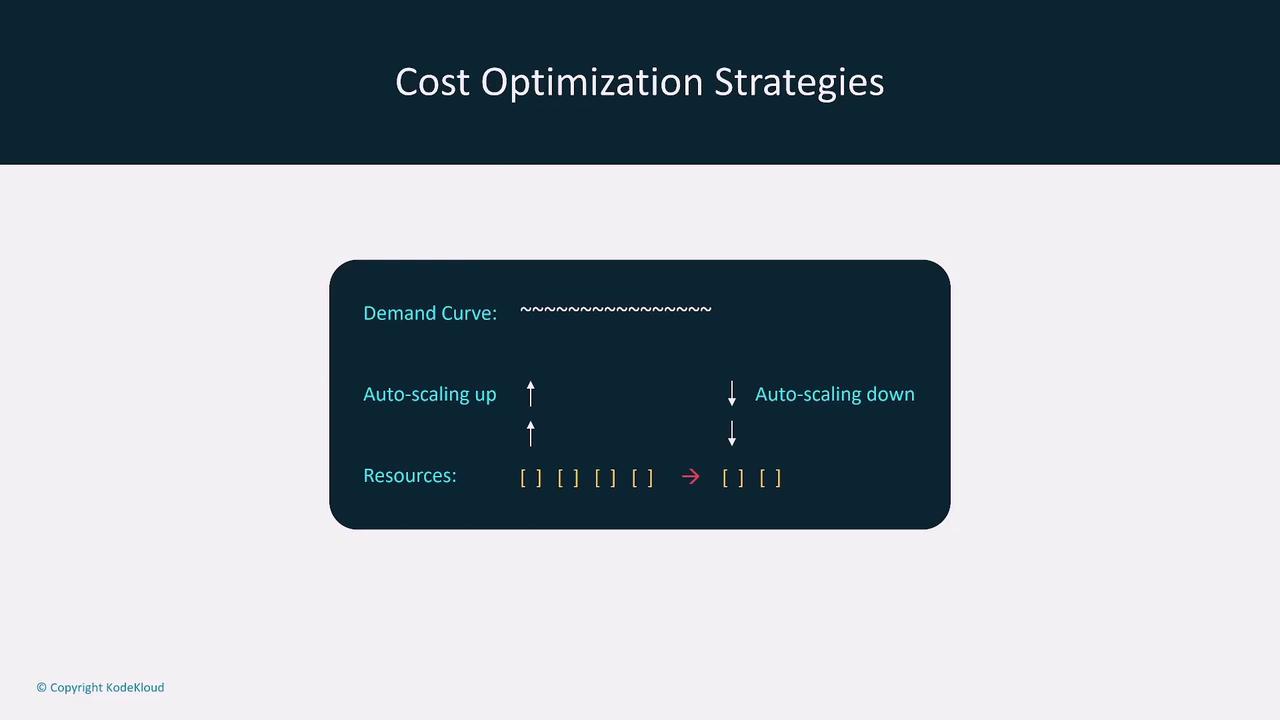

Provisioned Throughput Versus Auto Scaling

Provisioning throughput at a fixed level ensures consistent performance, which makes it effective for predictable workloads. If usage varies, however, the capacity that sits idle during quiet periods is wasted spend. Auto scaling, by contrast, adjusts resources dynamically based on demand, reducing waste for fluctuating workloads.
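One way to illustrate the waste from fixed provisioning is to count unit-hours. The Python sketch below uses hypothetical hourly demand and per-unit capacity. It models an idealized auto scaler that always matches demand exactly. Real scaling policies react with some lag, so actual savings will be smaller.

```python
# Minimal sketch: unit-hours consumed by peak-sized fixed capacity vs. an
# idealized auto scaler. Demand and per-unit capacity are HYPOTHETICAL.
import math


def fixed_capacity_units(demand, capacity_per_unit):
    """Provision for the peak hour and keep that many units for every hour."""
    return [math.ceil(max(demand) / capacity_per_unit)] * len(demand)


def autoscaled_units(demand, capacity_per_unit, minimum=1):
    """Idealized scaler: just enough units each hour (real scalers lag and overshoot)."""
    return [max(minimum, math.ceil(d / capacity_per_unit)) for d in demand]


def unit_hours(units):
    """Total unit-hours, a proxy for cost when every unit has the same hourly price."""
    return sum(units)


if __name__ == "__main__":
    # Hypothetical requests per hour over a 6-hour window; each unit serves 100 req/h.
    demand = [50, 80, 400, 420, 120, 60]
    print("fixed unit-hours:", unit_hours(fixed_capacity_units(demand, 100)))
    print("auto-scaled unit-hours:", unit_hours(autoscaled_units(demand, 100)))
```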

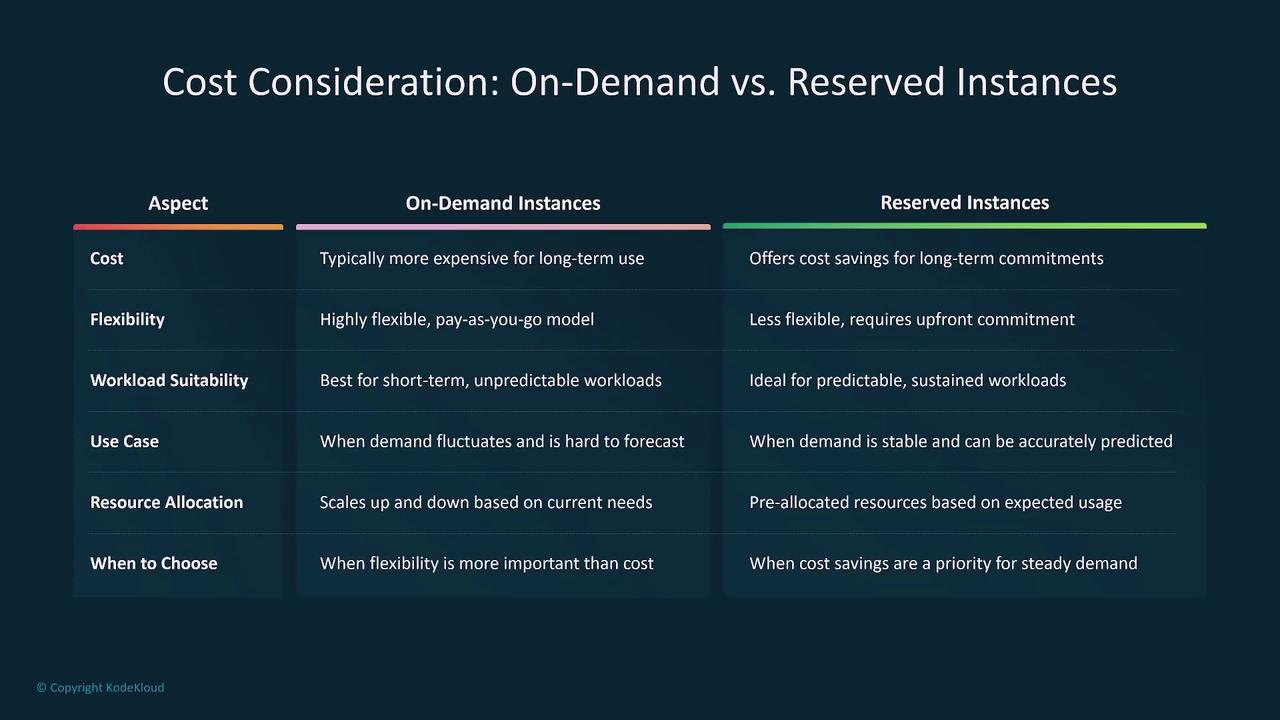

Cost optimization strategies may also mix On-Demand and Reserved Instances. On-Demand Instances are highly flexible, which makes them ideal for short-term or unpredictable workloads. Reserved Instances require a one- or three-year commitment, and in return they offer substantial savings for stable, predictable workloads.
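Whether a commitment pays off depends on how many hours the instance actually runs. On-Demand charges only for running hours. A reservation charges for every hour of the term. The minimal Python sketch below computes the utilization at which the two cost the same. The hourly rates are hypothetical, and real discounts vary by instance type, term, and payment option.

```python
# Minimal sketch: break-even utilization between On-Demand and a reserved commitment.
# Rates are HYPOTHETICAL; real discounts depend on instance type, term, and payment option.

HOURS_PER_YEAR = 8760


def annual_on_demand_cost(hourly_rate, hours_used):
    """On-Demand: pay only for the hours the instance actually runs."""
    return hourly_rate * hours_used


def annual_reserved_cost(effective_hourly_rate):
    """Reserved: pay for every hour of the term, whether used or not."""
    return effective_hourly_rate * HOURS_PER_YEAR


def break_even_utilization(on_demand_rate, reserved_rate):
    """Fraction of the year the instance must run for the reservation to be cheaper."""
    return reserved_rate / on_demand_rate


if __name__ == "__main__":
    od_rate, ri_rate = 1.00, 0.60  # hypothetical $/hour
    print(f"break-even utilization: {break_even_utilization(od_rate, ri_rate):.0%}")
    for utilization in (0.3, 0.9):
        hours = utilization * HOURS_PER_YEAR
        print(f"{utilization:.0%} utilization: on-demand ${annual_on_demand_cost(od_rate, hours):,.0f} "
              f"vs reserved ${annual_reserved_cost(ri_rate):,.0f}")
```

Below the break-even utilization, On-Demand is cheaper despite its higher hourly rate. Above it, the commitment wins.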

Custom vs. Pre-Trained Models

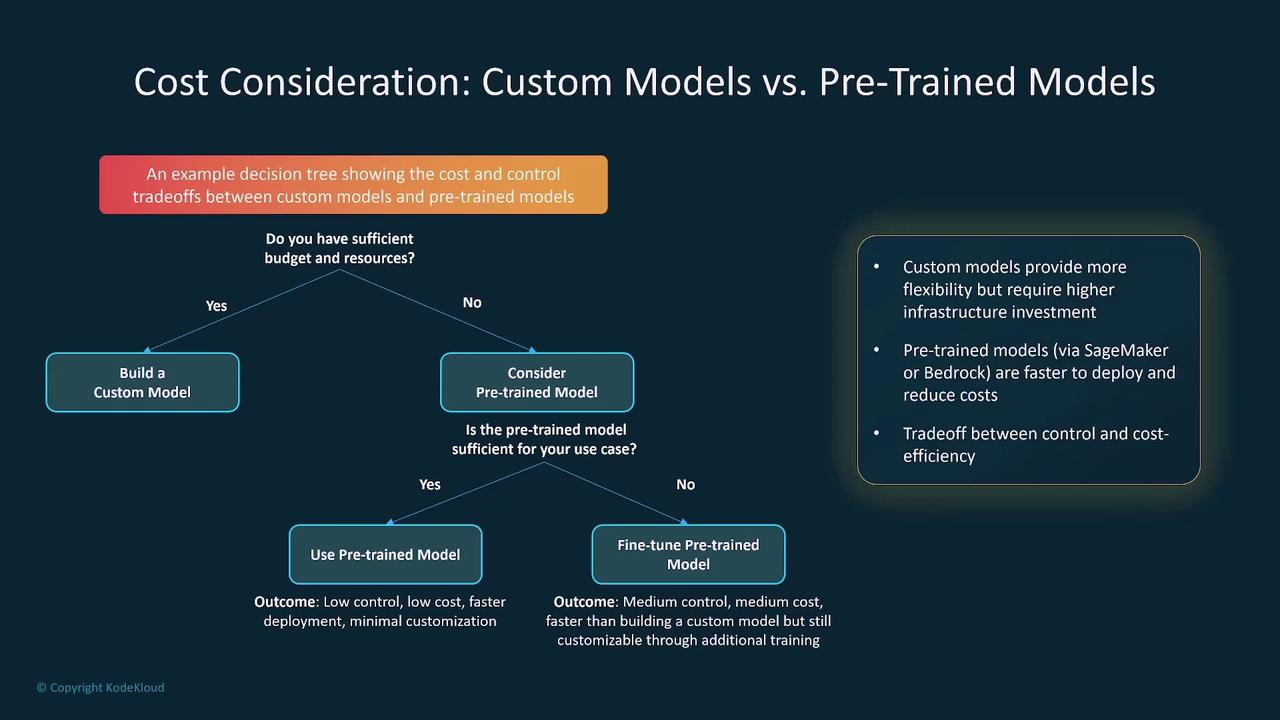

Deciding between custom and pre-trained models often comes down to efficiency and cost. Pre-trained models can be fine-tuned quickly for specific use cases, at lower cost and with easier deployment. As a rough rule of thumb, they are a suitable choice for the large majority of use cases, around 80%. Custom models offer greater control but typically require a much larger investment.

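With Amazon Bedrock foundation models, for example, on-demand pricing is charged per token, so costs rise in step with consumption. At sustained high volume, committed capacity such as Bedrock Provisioned Throughput, or savings plans for services that offer them, can be a better way to manage costs at scale.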

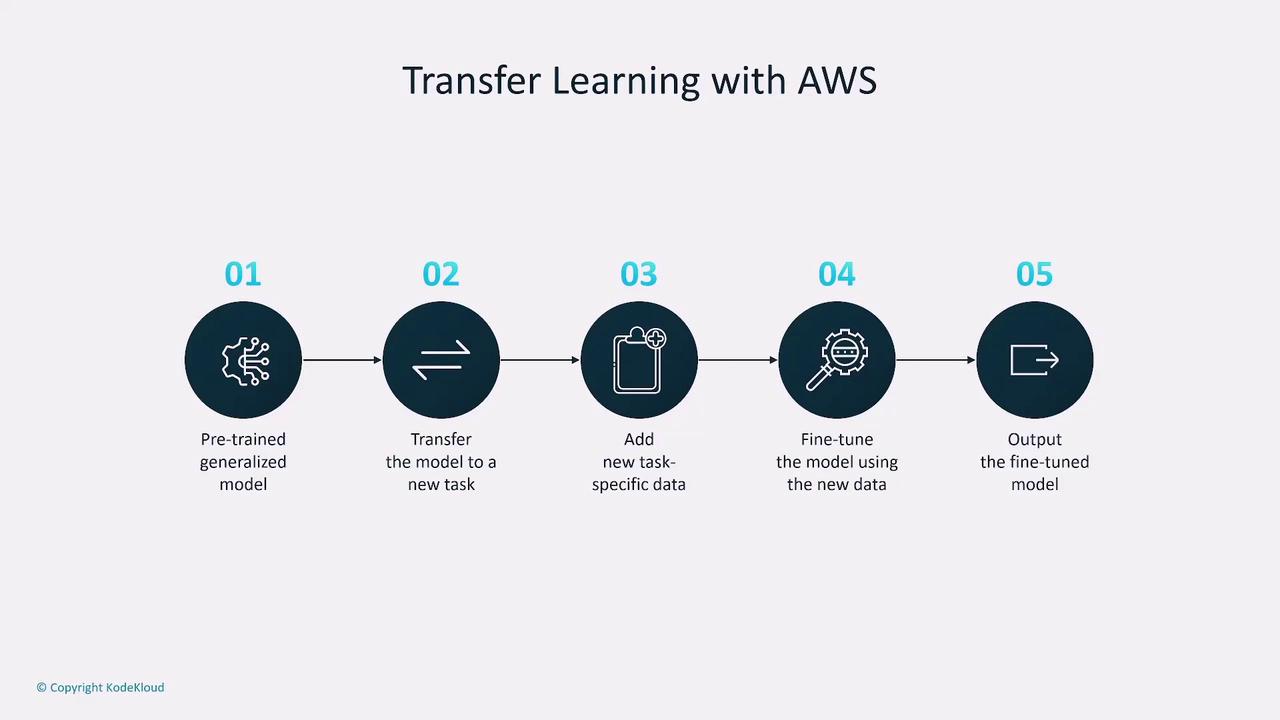

Transfer learning is another effective strategy. By fine-tuning a pre-trained model on a specific dataset, you can reduce training time and data requirements significantly, avoiding the need to start from scratch.

Additional Cost Considerations

Low latency is crucial for many applications, but improving performance by adding capacity generally increases costs. Weigh the performance gain against client expectations and budget constraints.
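Little’s law helps explain why extra performance costs more. It states that the average number of requests in flight equals the arrival rate multiplied by the time each request spends in the system. The Python sketch below turns that relationship into an instance count. The per-instance concurrency, target utilization, and price are hypothetical. Keeping utilization lower to leave headroom for latency spikes is a common heuristic rather than an AWS rule.

```python
# Minimal sketch: sizing capacity with Little's law (L = lambda * W).
# Per-instance concurrency, target utilization, and price are HYPOTHETICAL.
import math


def instances_needed(requests_per_sec, latency_sec, concurrency_per_instance,
                     target_utilization=0.7):
    """Instances required so each runs at or below `target_utilization` of its concurrency."""
    in_flight = requests_per_sec * latency_sec  # Little's law: average requests in the system
    return math.ceil(in_flight / (concurrency_per_instance * target_utilization))


if __name__ == "__main__":
    hourly_price = 1.50  # hypothetical $/instance-hour
    for target_util in (0.8, 0.5):  # lower utilization = more headroom for latency spikes
        n = instances_needed(requests_per_sec=40, latency_sec=2.0,
                             concurrency_per_instance=8, target_utilization=target_util)
        print(f"target utilization {target_util:.0%}: {n} instances, ${n * hourly_price:.2f}/hour")
```

Leaving more headroom keeps queues short and latency low, but the same traffic then needs more instances, which is the cost of the performance gain.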

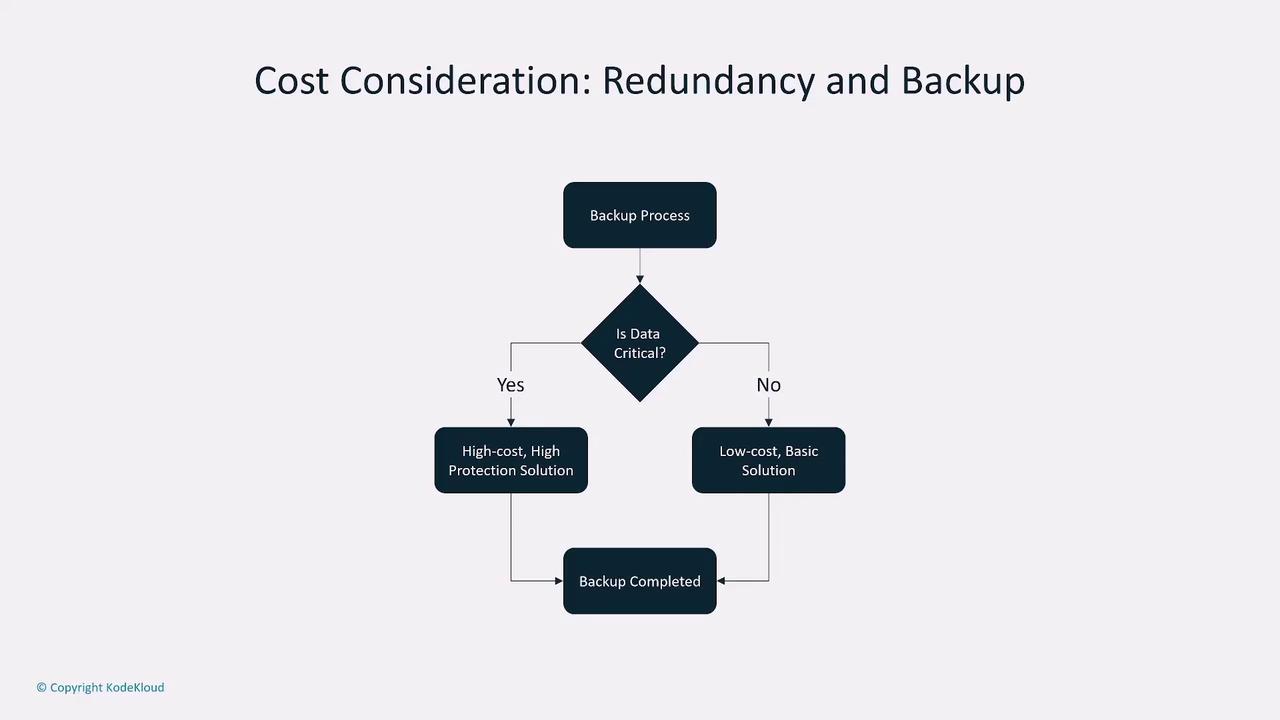

Backup strategies are also important, especially for critical data such as vector databases. Consider how often to back up, how granular your recovery points need to be, and whether additional redundancy is necessary to support disaster recovery and business continuity. Depending on your data’s criticality, you can use AWS Backup or more granular backup solutions.
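Backup frequency and retention determine both the worst-case data loss and the storage bill. The Python sketch below relates the two. The index size and per-GB price are hypothetical. The storage figure is an upper bound that treats every recovery point as a full copy. Incremental backups, which many AWS services use for snapshots, are usually smaller.

```python
# Minimal sketch: backup frequency/retention vs. worst-case data loss and storage.
# Sizes and prices are HYPOTHETICAL; storage is an upper bound assuming full copies.


def backup_plan_summary(interval_hours: int, retention_days: int,
                        snapshot_gb: float, price_per_gb_month: float) -> dict:
    """Summarize a simple fixed-interval backup schedule."""
    retained_points = (retention_days * 24) // interval_hours
    storage_gb = retained_points * snapshot_gb  # upper bound: every point is a full copy
    return {
        "retained_points": retained_points,
        # Worst case: a failure just before the next backup loses one full interval.
        "worst_case_data_loss_hours": interval_hours,
        "storage_gb_upper_bound": storage_gb,
        "monthly_cost_upper_bound": storage_gb * price_per_gb_month,
    }


if __name__ == "__main__":
    # Hypothetical 50 GB vector index, $0.05 per GB-month of backup storage.
    print("daily, 30-day retention:", backup_plan_summary(24, 30, 50, 0.05))
    print("hourly, 7-day retention:", backup_plan_summary(1, 7, 50, 0.05))
```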

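For further cost optimization, use auto scaling to match resource usage to demand, and use Spot Instances for non-critical workloads. Auto scaling prevents over-provisioning by scaling resources down during periods of lower demand. Spot Instances run on spare EC2 capacity at a significant discount but can be interrupted, so they suit only workloads that tolerate interruption.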
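Spot savings are not free, because interruptions can force work to be redone. The Python sketch below folds that rework into the comparison. The discount, rework fraction, and job size are hypothetical assumptions. Actual Spot prices and interruption rates vary by instance type, Region, and time.

```python
# Minimal sketch: expected cost of an interruptible batch job on Spot vs. On-Demand.
# Discount, rework fraction, and job size are HYPOTHETICAL assumptions.


def on_demand_job_cost(job_hours, on_demand_rate):
    """On-Demand: the job runs uninterrupted at the full rate."""
    return job_hours * on_demand_rate


def spot_job_cost(job_hours, on_demand_rate, spot_discount, rework_fraction):
    """Spot: cheaper rate, but extra hours are spent redoing work lost to interruptions."""
    spot_rate = on_demand_rate * (1 - spot_discount)
    effective_hours = job_hours * (1 + rework_fraction)
    return effective_hours * spot_rate


if __name__ == "__main__":
    hours, rate = 100, 3.00  # hypothetical 100-hour batch job at $3.00/hour On-Demand
    print("on-demand:", on_demand_job_cost(hours, rate))
    # Assume a 70% discount and 20% extra work from restarting after interruptions.
    print("spot:", round(spot_job_cost(hours, rate, 0.70, 0.20), 2))
```

Checkpointing keeps the rework fraction small. For a latency-sensitive, mission-critical endpoint, the real cost of an interruption is usually far higher than lost compute hours.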

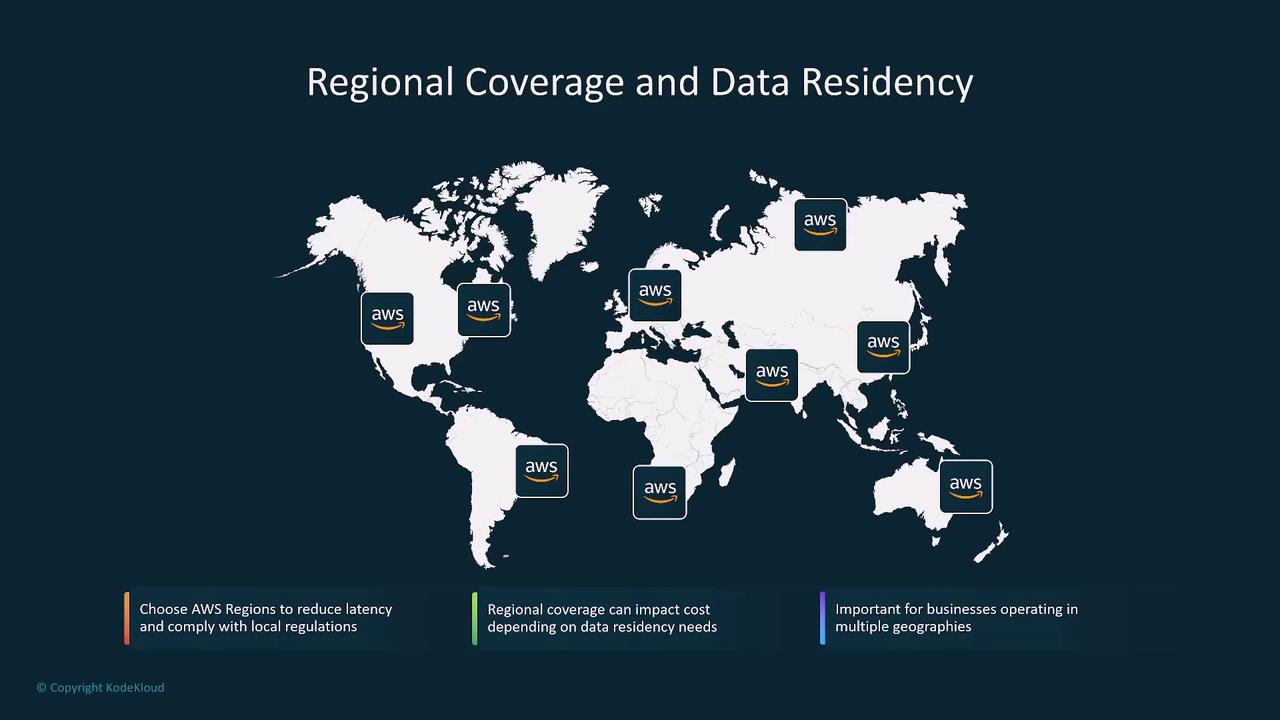

Regulatory requirements such as GDPR also make regional coverage and data residency important. Balancing compliance, low latency, and global operations is key to managing costs while meeting business objectives.
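One way to balance these concerns is to treat data residency as a hard constraint and latency and cost as trade-offs. The Python sketch below filters candidate Regions by an allowed jurisdiction and a latency ceiling, then picks the cheapest. The latency and relative-cost figures are hypothetical. The EU-only hosting policy is an illustrative assumption, since GDPR itself permits some transfers outside the EU under specific safeguards.

```python
# Minimal sketch: compliance first, then a latency ceiling, then lowest cost.
# Latency and relative-cost values are HYPOTHETICAL; the EU-only policy is an assumption.
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class RegionOption:
    name: str             # AWS Region code
    jurisdiction: str     # label used by your (hypothetical) compliance policy
    latency_ms: float     # hypothetical measured latency to your users
    relative_cost: float  # hypothetical cost index (1.0 = baseline)


def pick_region(options: Iterable[RegionOption], allowed_jurisdictions: set,
                max_latency_ms: float) -> Optional[RegionOption]:
    """Return the cheapest compliant Region within the latency budget, or None."""
    compliant = [o for o in options
                 if o.jurisdiction in allowed_jurisdictions and o.latency_ms <= max_latency_ms]
    if not compliant:
        return None  # no Region satisfies both constraints; revisit the requirements
    return min(compliant, key=lambda o: (o.relative_cost, o.latency_ms))


CANDIDATES = [
    RegionOption("eu-central-1", "EU", 25.0, 1.05),
    RegionOption("eu-west-1", "EU", 35.0, 1.00),
    RegionOption("us-east-1", "US", 90.0, 0.95),
]

if __name__ == "__main__":
    print(pick_region(CANDIDATES, {"EU"}, max_latency_ms=50).name)  # looser latency budget
    print(pick_region(CANDIDATES, {"EU"}, max_latency_ms=30).name)  # tighter latency budget
```

The cheapest Region overall is never considered, because it fails the residency filter. Tightening the latency budget then pushes the choice toward a more expensive compliant Region.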

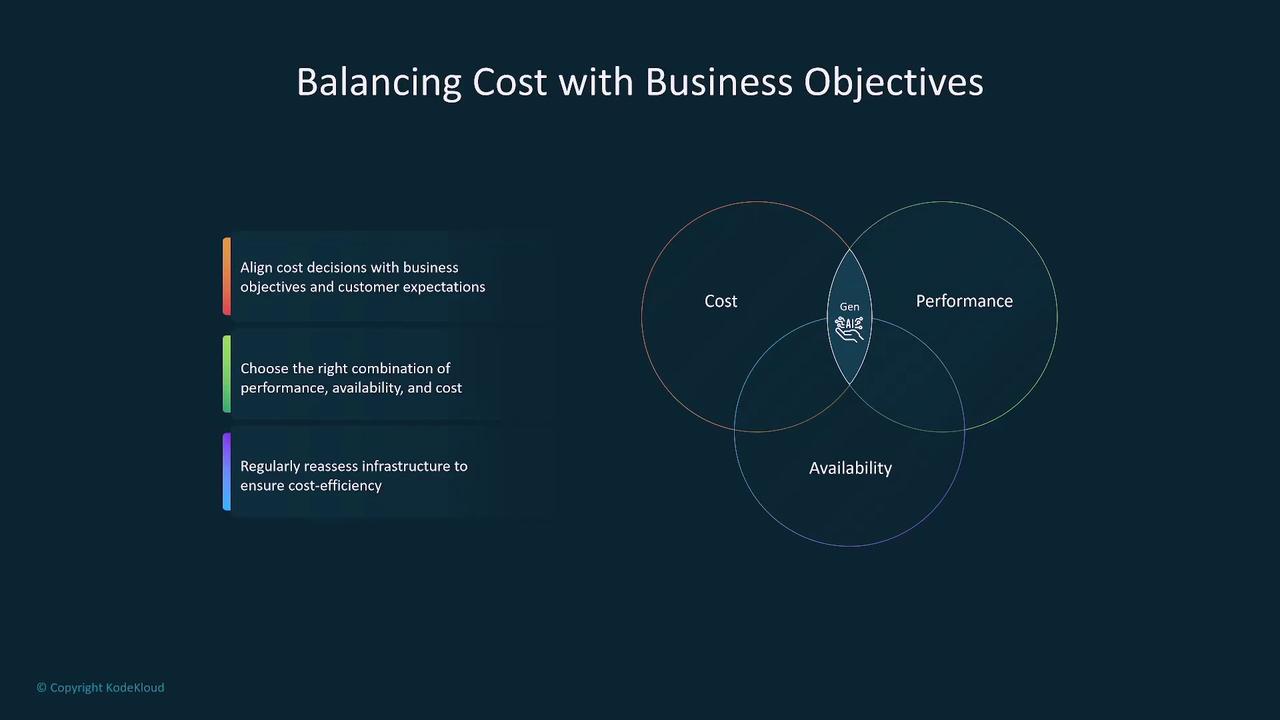

Balancing Cost, Performance, and Business Objectives

Ultimately, successful cost optimization on AWS involves continuously assessing your infrastructure’s performance, availability, and redundancy in line with your business objectives. AWS offers various tools to help monitor and manage costs, including AWS Budgets, Cost Explorer, the Cost and Usage Report, Trusted Advisor, and Compute Optimizer.
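As one example of feeding these tools into regular reviews, the Python sketch below builds a Cost Explorer request for monthly cost grouped by service and totals a response of that shape. It only constructs the request dictionary. Sending it requires boto3 and credentials, for instance `boto3.client("ce").get_cost_and_usage(**request)`. The sample response is hand-written with made-up amounts, and the service label in it is illustrative.

```python
# Minimal sketch: build a Cost Explorer GetCostAndUsage request and total a response.
# Only the request dict is built here; the sample response below is hand-written.
from collections import defaultdict
from datetime import date


def monthly_cost_by_service_request(start: date, end: date) -> dict:
    """Parameters for get_cost_and_usage; Cost Explorer treats End as exclusive."""
    return {
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "DIMENSION", "Key": "SERVICE"}],
    }


def total_by_service(response: dict) -> dict:
    """Sum UnblendedCost per service across all returned time periods."""
    totals = defaultdict(float)
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            totals[service] += float(group["Metrics"]["UnblendedCost"]["Amount"])
    return dict(totals)


# Hand-written sample shaped like a get_cost_and_usage response (amounts are made up).
SAMPLE_RESPONSE = {"ResultsByTime": [
    {"Groups": [{"Keys": ["Amazon Bedrock"],
                 "Metrics": {"UnblendedCost": {"Amount": "12.50", "Unit": "USD"}}}]},
    {"Groups": [{"Keys": ["Amazon Bedrock"],
                 "Metrics": {"UnblendedCost": {"Amount": "7.25", "Unit": "USD"}}}]},
]}

if __name__ == "__main__":
    print(monthly_cost_by_service_request(date(2024, 1, 1), date(2024, 4, 1)))
    print(total_by_service(SAMPLE_RESPONSE))
```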

Conclusion

In summary, AWS provides a variety of flexible pricing models tailored to meet different performance, availability, and redundancy requirements. Regular monitoring and evaluation of your infrastructure are crucial to ensure that your cost optimization strategies align with both technical demands and business goals. By selecting the right mix of on-demand versus reserved capacity, custom versus pre-trained models, and leveraging auto scaling, you can establish a cost-effective setup for your generative AI applications on AWS.

Key Takeaway

Using a balanced approach to cost, performance, and availability is essential for optimizing your AWS infrastructure while meeting both technical needs and business objectives.

Thank you for reading. We look forward to bringing you more insights in the next article.
