AWS Certified AI Practitioner

Fundamentals of Generative AI

AWS Infrastructure for bulding Gen AI Applications

In this article, we explore how AWS’s global infrastructure and comprehensive suite of AI services empower businesses to design, deploy, and scale generative AI applications with ease. A solid understanding of AWS’s data centers, managed services, and flexible pricing models is essential for cloud practitioners and AI developers alike.

AWS Global Infrastructure and AI Services

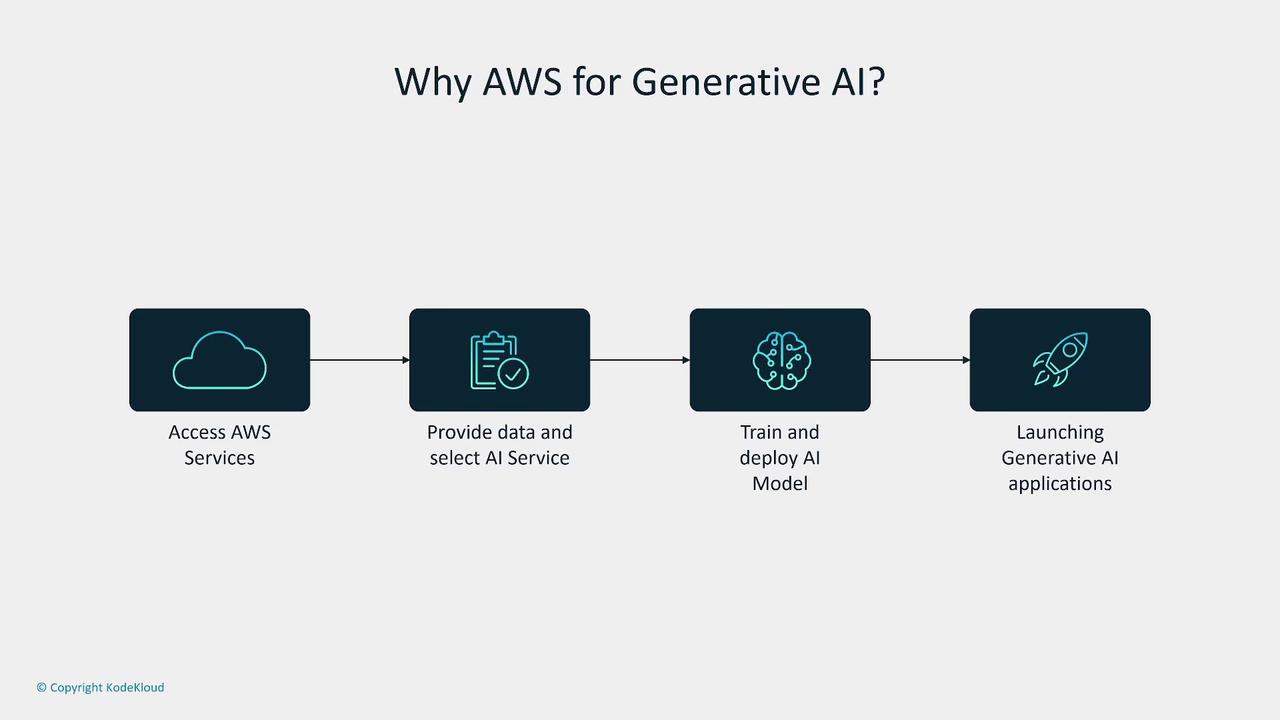

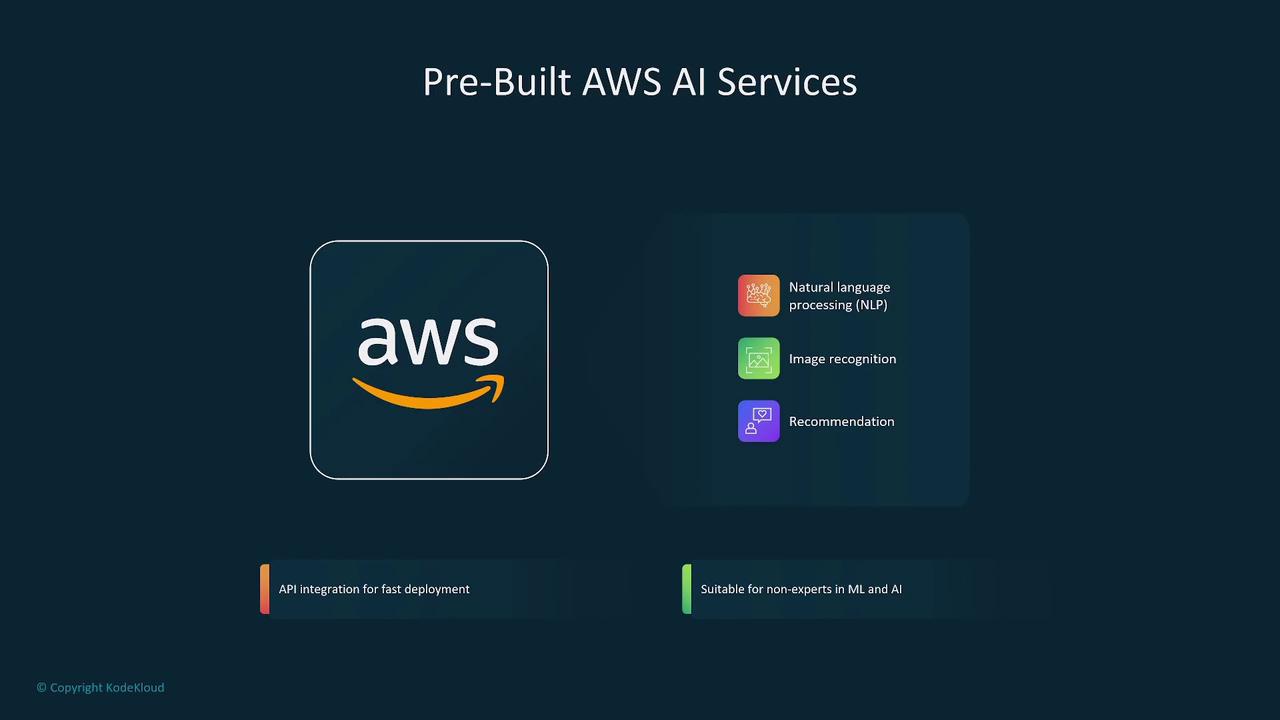

AWS offers a robust global infrastructure that is essential for modern generative AI applications. To build these applications effectively, you need to be familiar with how AWS services are accessed, how data is ingested, and how AI models are selected, trained, and deployed. AWS streamlines every step—from selecting pre-built models to rapid deployment—ensuring business objectives are met with speed and efficiency.

AWS reduces barriers to entry with cost-effective and scalable solutions tailored to accelerate time-to-market. By leveraging pre-trained foundation models and pre-built datasets, businesses can significantly cut down on development time and customization overhead.

Integrated Model Lifecycle with SageMaker and Beyond

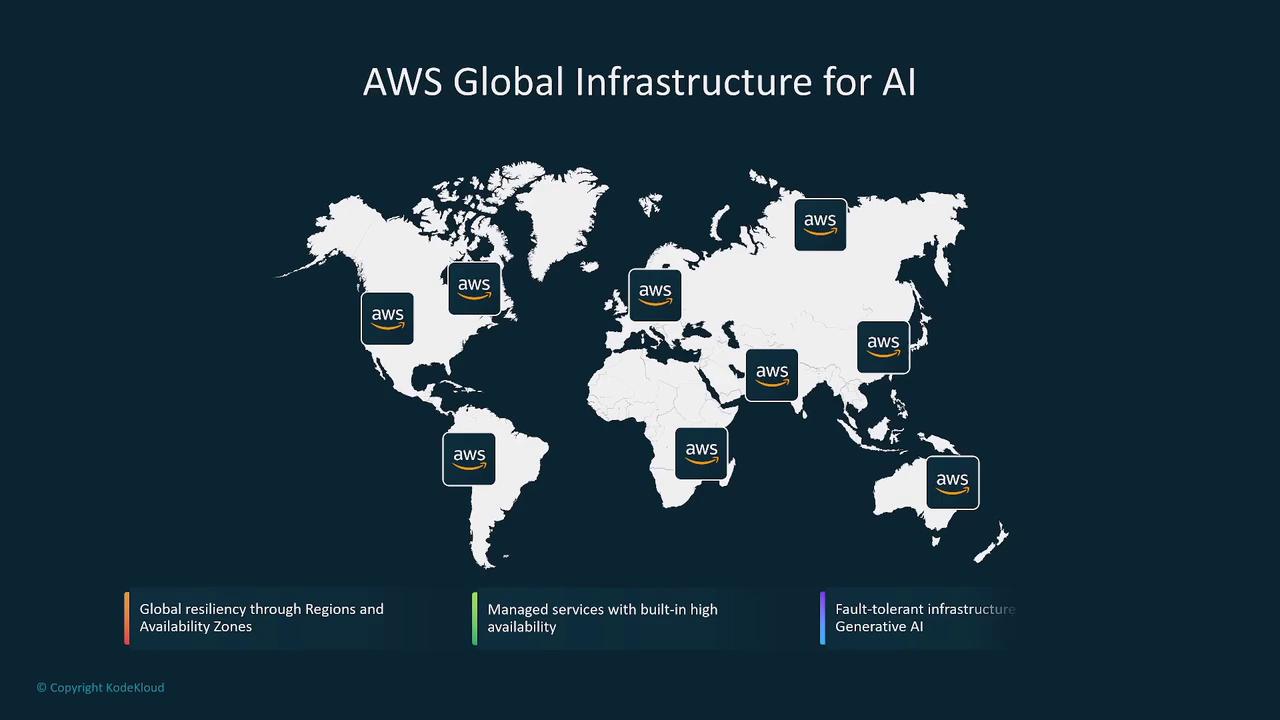

AWS supports the entire AI lifecycle—from model selection and data preparation to training, tuning, evaluation, and deployment—with services like SageMaker and Bedrock. This comprehensive approach is backed by a global network of data centers spanning over 30 regions, along with local zones and a vast edge network, ensuring high availability and fault tolerance.

Cost-Effectiveness and Scalable Pricing Models

A key advantage of AWS is its flexible, pay-as-you-go pricing model. Token-based pricing means you only pay for the data processed, making it an ideal solution for workloads with variable usage. This approach ensures optimum cost-efficiency without sacrificing performance.

For high-volume users, dedicated services might prove more cost-effective. However, for most organizations, the scalable pricing model provides a balanced solution that adapts to fluctuating workloads.

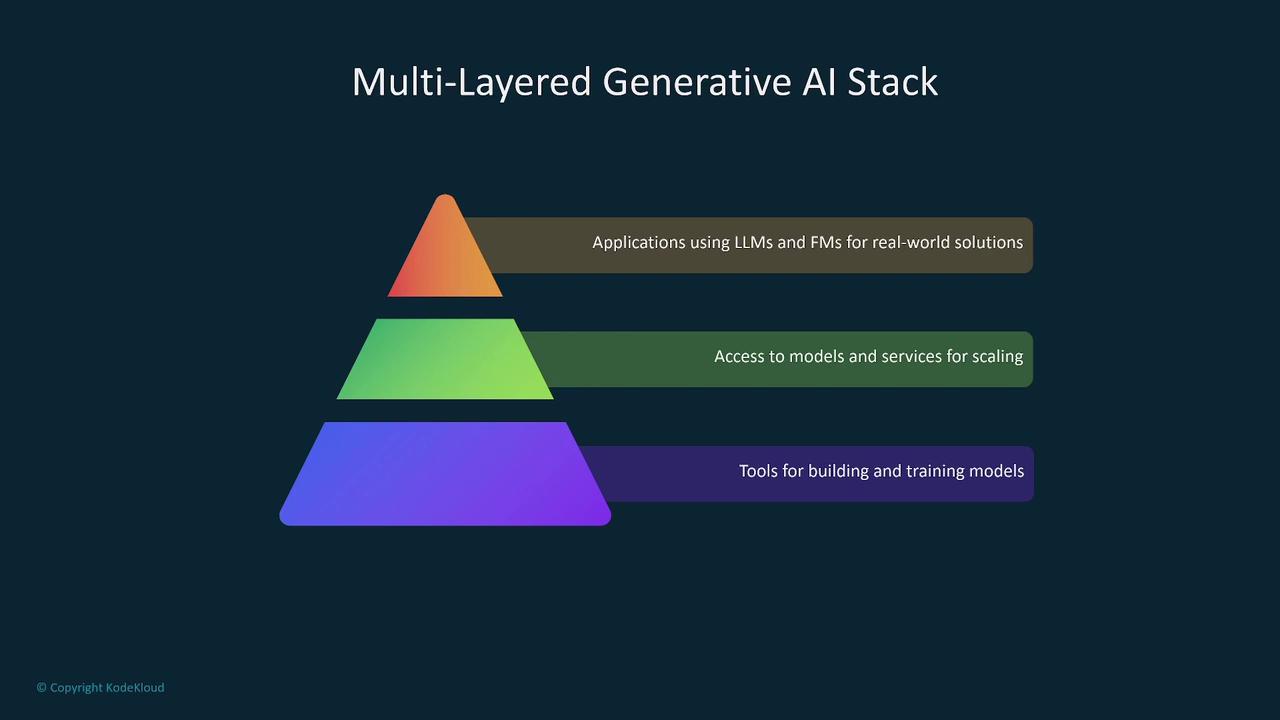

The Generative AI Stack on AWS

AWS’s generative AI stack integrates a variety of tools and services that cover the entire spectrum from data ingestion and model training to deployment and scaling. This stack encompasses:

- Hardware and infrastructure management

- Pre-trained models and foundational AI services

- Advanced machine learning platforms for applications such as chatbots and content generation

These components are built on a lower-level framework designed for rapid deployment and seamless customization.

SageMaker JumpStart

AWS SageMaker JumpStart offers a catalog of pre-built machine learning models, datasets, and algorithms to facilitate rapid deployment of AI projects. This service incorporates industry best practices, enabling you to quickly customize foundation models to meet specific business needs.

Managed Services: Amazon Bedrock and PartyRock

AWS provides specialized managed services to enhance your generative AI projects:

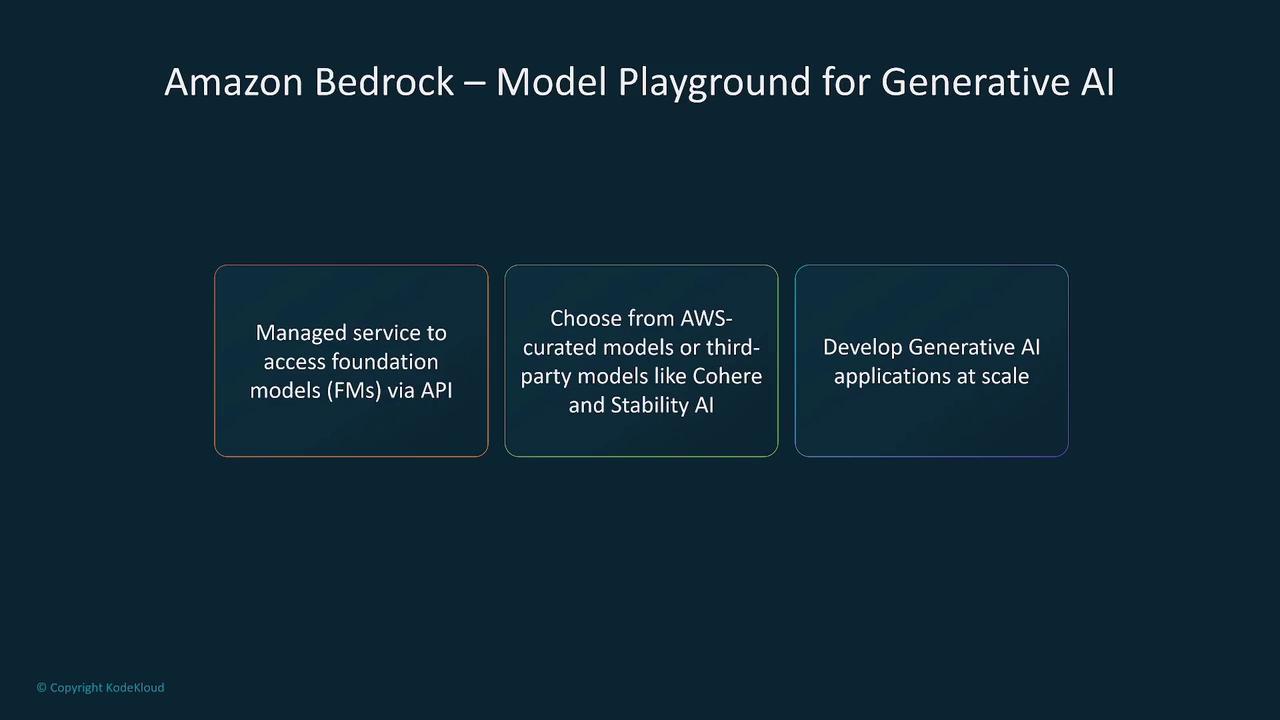

- Amazon Bedrock: This service lets you interact with multiple foundation models via simple APIs, supporting tasks such as text generation, image generation, code generation, and summarization. With built-in guardrails and agents, Bedrock simplifies the process of scaling AI applications.

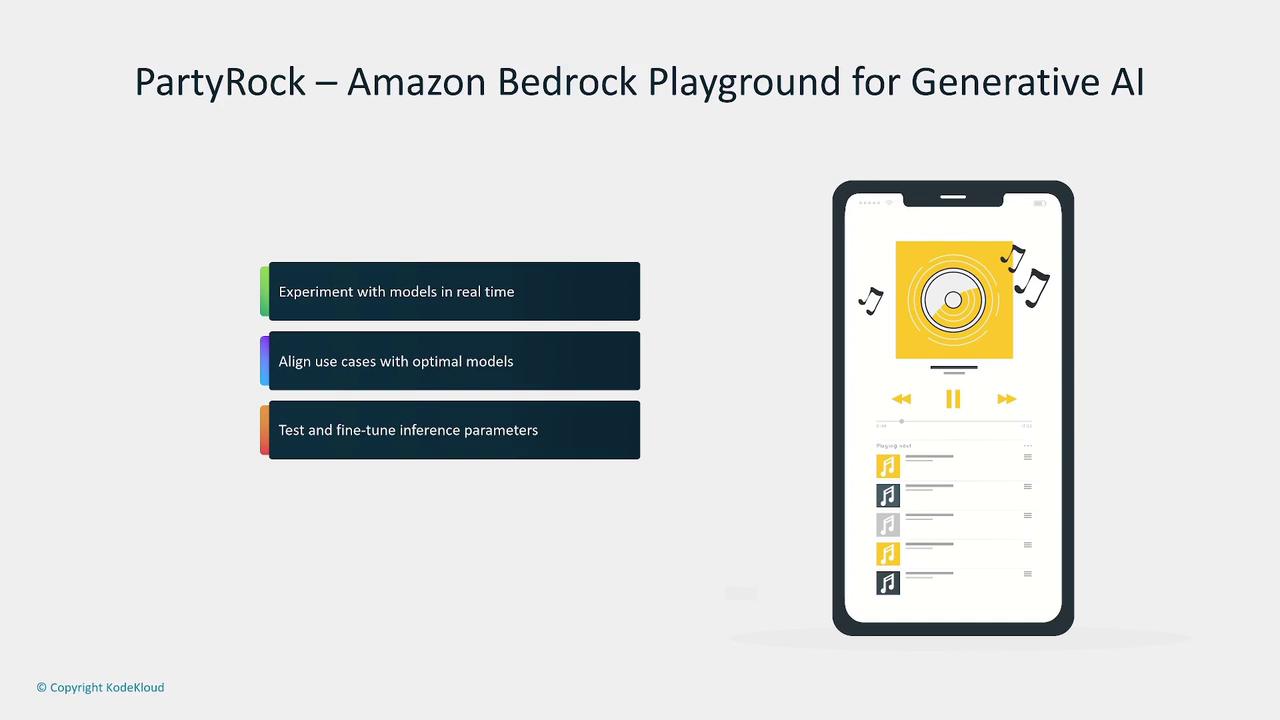

- PartyRock: Designed as a sandbox for experimentation, PartyRock allows you to test, fine-tune, and align use cases in an interactive environment—a complementary offering to Bedrock’s production-optimized capabilities.

Additionally, Amazon Q offers a range of AI services—including solutions for developers, chat interfaces, and data visualization with QuickSight—leveraging both AWS-curated and third-party foundation models from providers like Cohere, OpenAI, and Claude.

Underlying Technologies: Vector Databases and Security

Data Management with Vector Databases

Efficient data retrieval in AI applications often requires the use of vector embeddings. AWS supports a variety of databases—including RDS, Aurora, DocumentDB, and Neptune—that facilitate vector-based search and context-aware data management, which is vital for natural language processing and data-driven AI models.

Enhanced Security for AI Workloads

Security is a top priority for AI applications. AWS implements robust security measures including the AWS Nitro System, isolation of workloads, and support for GPU and specialized ML accelerators like Inferentia and Trainium. AWS further fortifies applications with IAM policies, Inspector, multi-factor authentication, continuous monitoring, encryption, and detailed logging through CloudWatch and CloudTrail.

Note

It is crucial to design AI systems with security in mind to protect against vulnerabilities, including potential prompt injection attacks.

Achieving Business Objectives with AWS

AWS's full spectrum of AI tools and managed services is designed to boost efficiency, accessibility, and security for your generative AI applications. By combining rapid deployment with pre-built models and scalable infrastructure, AWS helps you achieve business goals in a cost-effective way—whether managing high-level services or low-level virtual machines and containers.

Final Thoughts

Harnessing AWS for generative AI applications can dramatically accelerate your innovation cycle while enhancing scalability and security. Explore AWS’s offerings to find the solutions that best meet your business and technical needs.

Thank you for reading. We look forward to sharing more insights in our next article.

Watch Video

Watch video content