Amazon OpenSearch

Amazon OpenSearch Service, which originated as a fork of Elasticsearch, is a widely used search and analytics service that can also act as a vector database. It delivers high-performance vector similarity search, which makes it well suited to finding semantically related items. The serverless option scales automatically with demand to support generative AI workloads, and the service also powers interactive log analytics, real-time website search, and application monitoring. A key feature is k-Nearest Neighbor (k-NN) search, which finds the stored vectors closest to a query vector and so surfaces semantically related content. OpenSearch integrates with Amazon Bedrock and Amazon SageMaker and supports real-time data ingestion and indexing for dynamic applications.
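To make the k-NN feature more concrete, the sketch below builds the two JSON bodies typically involved: an index definition with a `knn_vector` field and a search body that asks for the `k` nearest vectors. The field name `embedding`, the dimension of 3, and the helper function names are illustrative assumptions rather than anything prescribed by the service. The code only constructs the request bodies and does not contact a cluster.

```python
import json


def knn_index_body(field: str, dimension: int) -> dict:
    """Index settings and mapping that enable k-NN on one vector field."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                # The dimension must match the embedding model's output size.
                field: {"type": "knn_vector", "dimension": dimension}
            }
        },
    }


def knn_query_body(field: str, query_vector: list, k: int) -> dict:
    """Search body asking for the k stored vectors nearest to query_vector."""
    return {
        "size": k,
        "query": {"knn": {field: {"vector": query_vector, "k": k}}},
    }


# Hypothetical field name and toy 3-dimensional vector, for illustration only.
print(json.dumps(knn_index_body("embedding", 3), indent=2))
print(json.dumps(knn_query_body("embedding", [0.1, 0.2, 0.3], k=2), indent=2))
```

These bodies would be sent to the index-creation and `_search` endpoints respectively, for example through an OpenSearch client library of your choice.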

Use Cases for OpenSearch

OpenSearch is well suited for:

- Recommendation engines leveraging continuous vector-based insights.

- Semantic search for real-time text and image processing.

- Enhanced conversational AI via rapid contextual retrieval.

- Log analytics for real-time application monitoring and website search.

Amazon Aurora with pgvector Extension

Another powerful option is Amazon Aurora PostgreSQL-Compatible Edition with the pgvector extension. pgvector lets you store vector embeddings generated by machine learning models directly in the database, alongside your relational data, and index them for similarity search. This makes it a good fit for storing and semantically searching embeddings produced by large language models, for example in recommendation systems and catalog searches.
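As a rough illustration of what this looks like in practice, the sketch below holds the SQL for enabling the extension, creating a table with a vector column, and running a nearest-neighbor query. The `products` table, its columns, and the 3-dimensional embeddings are hypothetical. The Python helpers only prepare the statements and parameters. Executing them would require a PostgreSQL driver and a database connection, which are assumed and not shown.

```python
def to_vector_literal(values):
    """Format a sequence of numbers as a pgvector text literal, e.g. '[0.1,0.2,0.3]'."""
    return "[" + ",".join(str(float(v)) for v in values) + "]"


# Hypothetical schema: a product catalog with one embedding per row.
SETUP_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS products (
    id bigserial PRIMARY KEY,
    name text,
    embedding vector(3)  -- dimension must match the embedding model
);
"""

# <=> is pgvector's cosine-distance operator; <-> would use Euclidean distance.
# The %s placeholders follow the DB-API style used by common PostgreSQL drivers.
SEARCH_SQL = """
SELECT id, name
FROM products
ORDER BY embedding <=> %s::vector
LIMIT %s;
"""


def search_params(query_embedding, limit=5):
    """Parameters to pass alongside SEARCH_SQL."""
    return (to_vector_literal(query_embedding), limit)


print(search_params([0.1, 0.2, 0.3], limit=2))
```

Because the embedding lives in an ordinary table, the same query can be combined with regular `WHERE` filters on relational columns.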

Amazon Neptune ML

Amazon Neptune ML combines traditional graph database capabilities with machine learning. Using graph neural networks (GNNs), Neptune ML improves predictions by analyzing complex relationships within the data. It is integrated with the Deep Graph Library (DGL), which simplifies model selection and training, making it a good fit for use cases where relationships between data points are critical.

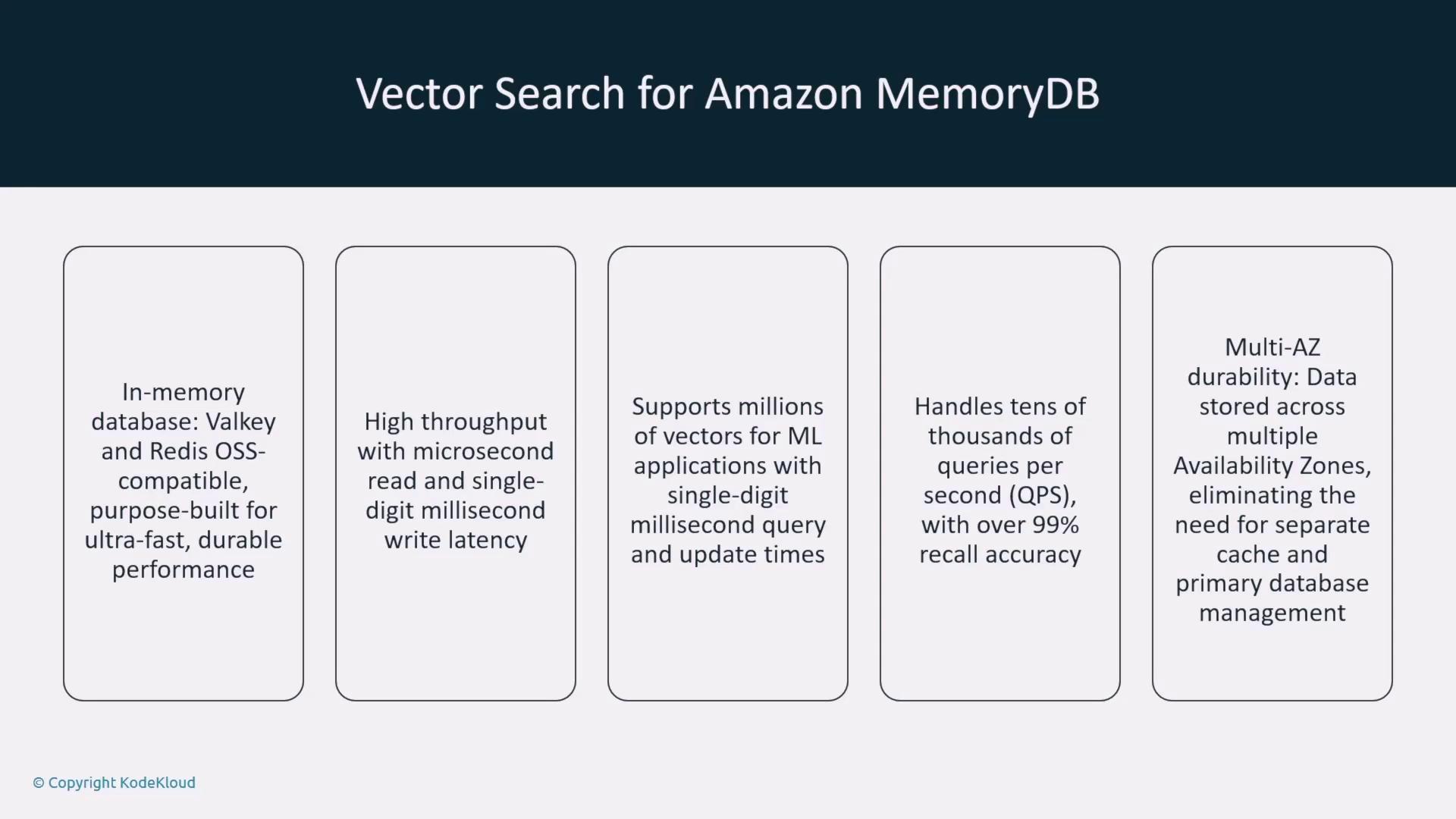

Amazon MemoryDB

Amazon MemoryDB, an in-memory database service, offers robust vector search capabilities. With support for the Valkey and Redis OSS engines, MemoryDB delivers high-throughput vector searches with single-digit millisecond latencies. It handles millions of vectors and high query volumes with high recall, and Multi-AZ configurations provide reliability. This makes MemoryDB suitable as both a caching layer and a primary database with built-in backup support.

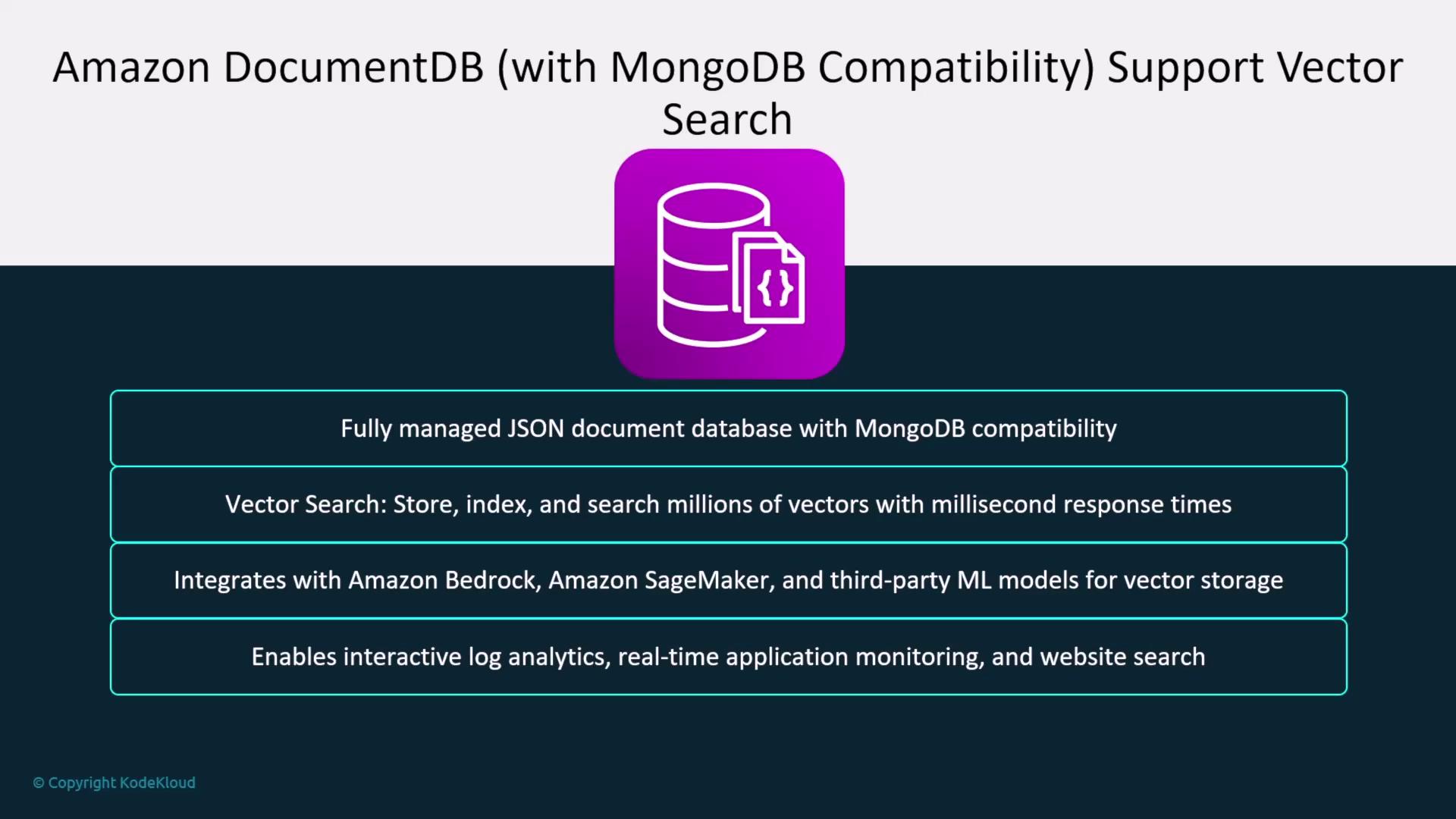

Amazon DocumentDB

Amazon DocumentDB, compatible with MongoDB, provides similar vector search capabilities. This document database allows for efficient storing, indexing, and searching of vector embeddings. It integrates easily with major generative AI and machine learning services and supports custom model deployments. In addition, DocumentDB facilitates log analytics, application monitoring, and website search.

Retrieval-Augmented Generation with Amazon Bedrock

Amazon Bedrock supports the creation of custom knowledge bases for Retrieval-Augmented Generation (RAG). RAG retrieves up-to-date, domain-specific information at query time and adds it to the prompt, augmenting what the generative AI model already knows. This is especially useful for adapting models to specialized domains without retraining or fine-tuning them, an essential concept for your exam preparation. Be sure to familiarize yourself with how these services integrate with Amazon Bedrock, as these integrations are crucial for building scalable, intelligent applications.
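The RAG pattern itself can be sketched in a few lines, independent of any particular service. The example below is a minimal, self-contained illustration: it ranks a toy in-memory store by cosine similarity, keeps the top passages, and places them in the prompt. The passages, the hand-written 3-dimensional embeddings, and the function names are all made up for illustration. With a Bedrock knowledge base, the embedding, retrieval, and generation steps are handled by the managed service and a vector store such as those described above.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, store, k=2):
    """Return the k passages whose embeddings are most similar to query_vec."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vec, item["embedding"]),
        reverse=True,
    )
    return [item["text"] for item in ranked[:k]]


def build_prompt(question, passages):
    """Augment the user's question with the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Toy knowledge base: texts and embeddings are invented for this example.
STORE = [
    {"text": "Refunds are processed within 5 business days.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "Our office is closed on public holidays.", "embedding": [0.0, 0.2, 0.9]},
    {"text": "Refund requests require an order number.", "embedding": [0.8, 0.3, 0.1]},
]

query_vec = [1.0, 0.2, 0.0]  # stand-in for the embedded question
passages = retrieve(query_vec, STORE, k=2)
prompt = build_prompt("How long do refunds take?", passages)
print(prompt)
```

The resulting prompt would then be sent to a foundation model. The model's weights are never changed, which is what distinguishes RAG from fine-tuning.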

Summary

The vector database services on AWS include:

- Amazon OpenSearch

- Amazon Aurora PostgreSQL-Compatible Edition with pgvector

- Amazon Neptune ML

- Amazon MemoryDB

- Amazon DocumentDB