AWS Certified AI Practitioner

Guidelines for Responsible AI

Features of Responsible AI

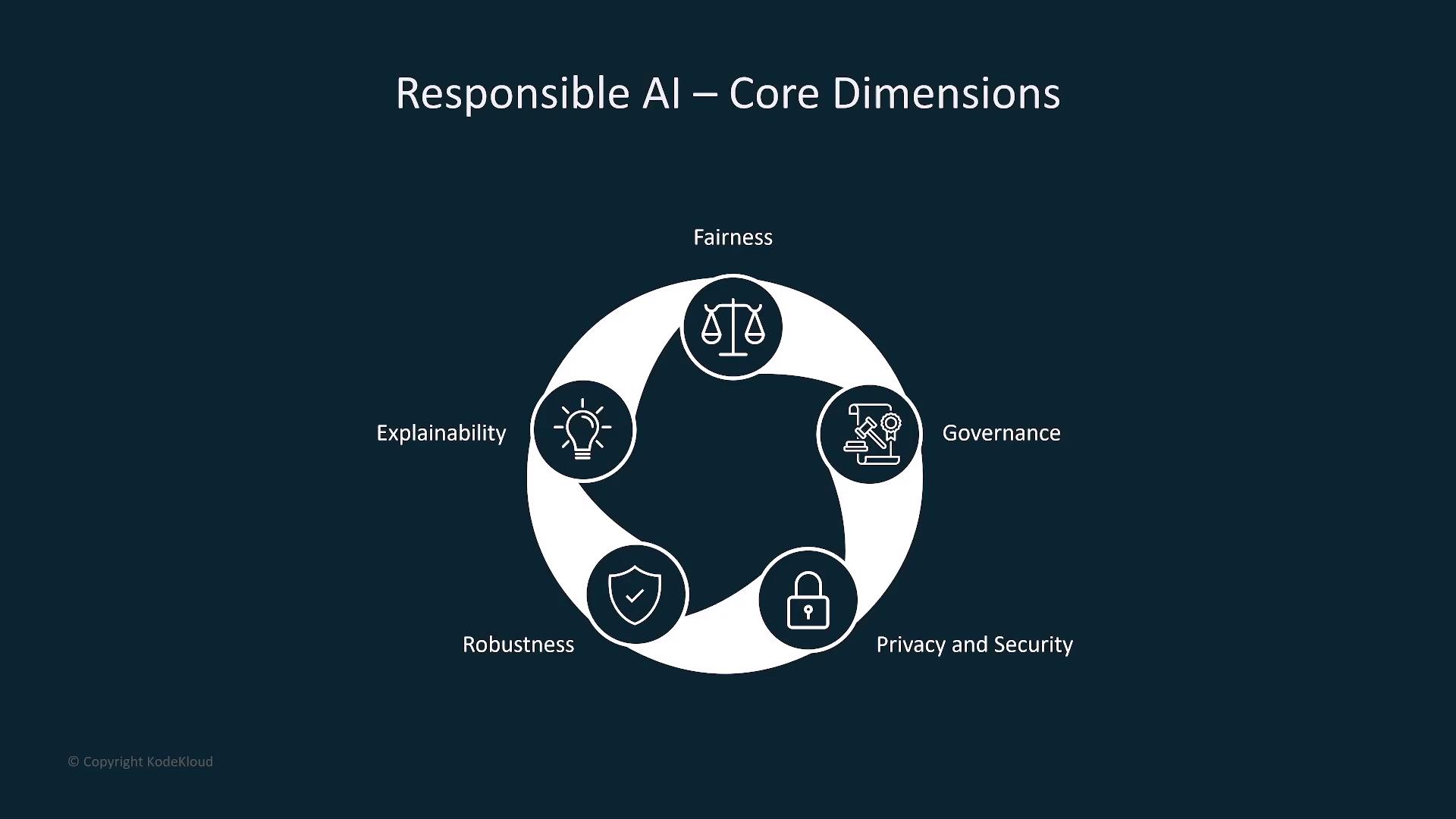

Welcome to this lesson on the key features of a responsible AI system. I’m Michael Forrester, and in this lesson, we will explore the essential dimensions that make AI systems ethical, transparent, and trustworthy. As industries such as healthcare, finance, and law embrace AI, it becomes imperative to design systems that operate safely and equitably. A responsible AI framework helps mitigate biases, ensuring fair treatment and avoiding societal harm. For instance, AI systems used in loan processing or medical diagnostics must be fair, explainable, and robust to safeguard user trust and ensure reliable outcomes.

Responsible AI is underpinned by a set of guidelines and principles that help design systems aligned with societal values while also meeting regulatory standards. The core dimensions covered in this lesson are fairness, explainability, robustness, privacy and security, governance, and transparency.

Fairness

Fairness is the cornerstone of responsible AI. It means that AI models should not discriminate based on attributes such as age, gender, or race. For example, a credit scoring model should evaluate individuals based on their financial history rather than on extraneous attributes like gender or ethnicity. Without this emphasis, AI systems may reinforce societal biases and erode public trust.

A common challenge in developing fair AI is addressing bias that may arise from imbalanced training data. When certain groups are underrepresented, the system may struggle to assess them accurately. For instance, if a medical AI model is trained predominantly on male patient data, it might underdiagnose conditions in women. Addressing these imbalances is essential to achieve fairness and ensure reliable outcomes for diverse populations.
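One way to surface the kind of imbalance described above is to compare how a model behaves for each group. The following is a minimal sketch, not a complete fairness audit: the sample records, the group labels, and the `group_metrics` helper are all hypothetical and chosen only to illustrate the idea. It reports how many examples each group has, how often the model flags that group, and the recall (the share of real cases the model catches) per group.

```python
from collections import defaultdict

def group_metrics(records):
    """Return per-group count, selection rate, and recall.

    Each record is a (group, y_true, y_pred) tuple with 0/1 labels.
    """
    stats = defaultdict(lambda: {"n": 0, "pred_pos": 0, "actual_pos": 0, "true_pos": 0})
    for group, y_true, y_pred in records:
        s = stats[group]
        s["n"] += 1
        s["pred_pos"] += y_pred
        s["actual_pos"] += y_true
        s["true_pos"] += 1 if (y_true == 1 and y_pred == 1) else 0

    report = {}
    for group, s in stats.items():
        report[group] = {
            "count": s["n"],
            "selection_rate": s["pred_pos"] / s["n"],
            # Recall is undefined when a group has no positive cases.
            "recall": s["true_pos"] / s["actual_pos"] if s["actual_pos"] else None,
        }
    return report

# Hypothetical diagnostic predictions: (group, has_condition, model_flagged)
records = [
    ("male", 1, 1), ("male", 1, 1), ("male", 0, 0), ("male", 1, 1),
    ("male", 0, 0), ("male", 1, 0),
    ("female", 1, 0), ("female", 1, 1), ("female", 0, 0),
]

report = group_metrics(records)
for group, metrics in report.items():
    print(group, metrics)

# A large gap suggests the model misses more real cases in one group.
recall_gap = report["male"]["recall"] - report["female"]["recall"]
print("recall gap:", recall_gap)
```

In this made-up sample, the underrepresented group has both fewer examples and lower recall, which is the pattern that would prompt collecting more data or rebalancing the training set.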

Explainability

Explainability helps foster accountability by making AI decision processes understandable for users. For example, if a loan application is rejected, the system should indicate whether the decision was influenced by factors such as inadequate credit history or low income. Transparent explanations reinforce user trust by ensuring that decisions are clear and justifiable.
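The loan example above can be sketched with a simple linear score, where each feature's contribution is easy to read off. This is an illustrative sketch only: the weights, baseline values, threshold, and the `explain_decision` helper are assumptions made for the example and do not represent a real credit model. More complex models generally need dedicated explanation techniques.

```python
# Hypothetical weights for a simple linear credit score; not a real scoring model.
WEIGHTS = {"credit_history_years": 0.5, "annual_income_k": 0.05, "missed_payments": -1.0}
# Hypothetical "typical applicant" used as the reference point.
BASELINE = {"credit_history_years": 8.0, "annual_income_k": 60.0, "missed_payments": 0.0}
APPROVAL_THRESHOLD = 0.0

def explain_decision(applicant, top_n=2):
    """Score an applicant relative to the baseline and list the factors that hurt most."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }
    score = sum(contributions.values())
    approved = score >= APPROVAL_THRESHOLD
    # Most negative contributions first: these are the main reasons for a rejection.
    negative = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],
    )
    reasons = [name for name, _ in negative[:top_n]]
    return {"approved": approved, "score": score, "reasons": reasons}

applicant = {"credit_history_years": 2.0, "annual_income_k": 40.0, "missed_payments": 0.0}
decision = explain_decision(applicant)
print(decision)
```

Here the rejection can be traced to a short credit history and a below-baseline income, which is the kind of reason a user could be shown alongside the decision.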

Robustness

Robustness refers to the ability of an AI system to handle unexpected scenarios—such as missing data or unusual inputs—without crashing or producing inaccurate results. Whether it's deployed in autonomous vehicles or healthcare robots, a robust AI system can minimize faults and prevent errors that could lead to adverse outcomes.
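A common way to handle missing or unusual inputs is to validate them before the model runs and to fall back to human review rather than fail. The sketch below assumes hypothetical field names, value ranges, and a stand-in `toy_model`; it shows the pattern, not a production safeguard.

```python
import math

# Hypothetical plausible ranges for two vital-sign inputs.
VALID_RANGES = {"heart_rate": (20.0, 250.0), "temperature_c": (30.0, 45.0)}

def validate_reading(reading):
    """Return (clean_values, problems) without raising on bad input."""
    clean, problems = {}, []
    for field, (low, high) in VALID_RANGES.items():
        value = reading.get(field)
        if value is None:
            problems.append(f"{field}: missing")
            continue
        try:
            number = float(value)
        except (TypeError, ValueError):
            problems.append(f"{field}: not a number")
            continue
        if math.isnan(number) or not (low <= number <= high):
            problems.append(f"{field}: out of range")
            continue
        clean[field] = number
    return clean, problems

def safe_predict(model, reading):
    """Run the model only on valid input; otherwise defer to human review."""
    clean, problems = validate_reading(reading)
    if problems:
        return {"status": "needs_review", "problems": problems}
    return {"status": "ok", "prediction": model(clean)}

# Stand-in model for illustration only.
def toy_model(values):
    return "elevated" if values["heart_rate"] > 100 else "normal"

print(safe_predict(toy_model, {"heart_rate": 72, "temperature_c": 36.8}))
print(safe_predict(toy_model, {"heart_rate": "n/a"}))
```

The second call neither crashes nor returns a guess: it reports which inputs were unusable, so the case can be escalated instead of silently mishandled.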

Privacy and Security

In fields that handle sensitive data, such as healthcare and finance, privacy and security are non-negotiable. Robust data protection mechanisms help prevent unauthorized access and ensure compliance with regulations like GDPR. This focus not only maintains user trust but also reduces legal risks for organizations.
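As one small example of a data protection mechanism, direct identifiers can be pseudonymized or masked before records reach analytics or logs. The sketch below uses Python's standard `hmac` and `hashlib` modules; the record fields, the helper names, and the placeholder key are assumptions for illustration. Pseudonymization alone does not make data anonymous, and under GDPR pseudonymized data is still personal data, so this would be only one layer among access controls, encryption, and retention policies.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed hash so records can be linked without exposing it."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

def protect_record(record, secret_key):
    """Return a copy that is safer to use in analytics or logs."""
    return {
        "patient_ref": pseudonymize(record["patient_id"], secret_key),
        "email": mask_email(record["email"]),
        "diagnosis_code": record["diagnosis_code"],
    }

# Placeholder key for illustration; a real key would come from a secrets manager.
SECRET_KEY = b"example-key-do-not-use"

record = {"patient_id": "P-1001", "email": "jane.doe@example.com", "diagnosis_code": "E11"}
protected = protect_record(record, SECRET_KEY)
print(protected)
```

Because the hash is keyed, the same patient always maps to the same reference, but someone without the key cannot rebuild the mapping simply by hashing guessed IDs.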

Governance

Governance integrates all other dimensions by ensuring that AI systems adhere to legal standards and industry best practices. Effective governance includes risk assessment, incident reporting, and structured response protocols to manage and mitigate potential issues.

Transparency

Transparency is vital for clarifying both the capabilities and the limitations of AI systems. Organizations should communicate a system's benefits as well as its risks, including the possibility of occasional hallucinated or otherwise inaccurate outputs, so users understand that the tool should not be the sole basis for critical decisions. This openness helps prevent misuse and strengthens overall trust in AI.

Note

Understanding these key features is not only vital for designing ethical and reliable AI systems but is also a crucial part of our certification exam.

Conclusion

In this lesson, we have reviewed the main features of responsible AI: fairness, explainability, robustness, privacy and security, governance, and transparency. Incorporating these principles helps create AI systems that meet societal standards, adhere to legal regulations, and generate reliable outcomes. As you move forward in your AI journey, keep these core dimensions in mind to support the development of ethical and trustworthy systems.

Thank you for participating in this lesson. I look forward to our next discussion on advancing ethical AI practices.
