AWS Certified AI Practitioner

Security, Compliance, and Governance for AI Solutions

Regulatory Compliance Standards for AI Systems

Welcome to this comprehensive guide on regulatory compliance standards for AI systems. This article delves into the key aspects of compliance that every professional should know, especially those preparing for certification exams.

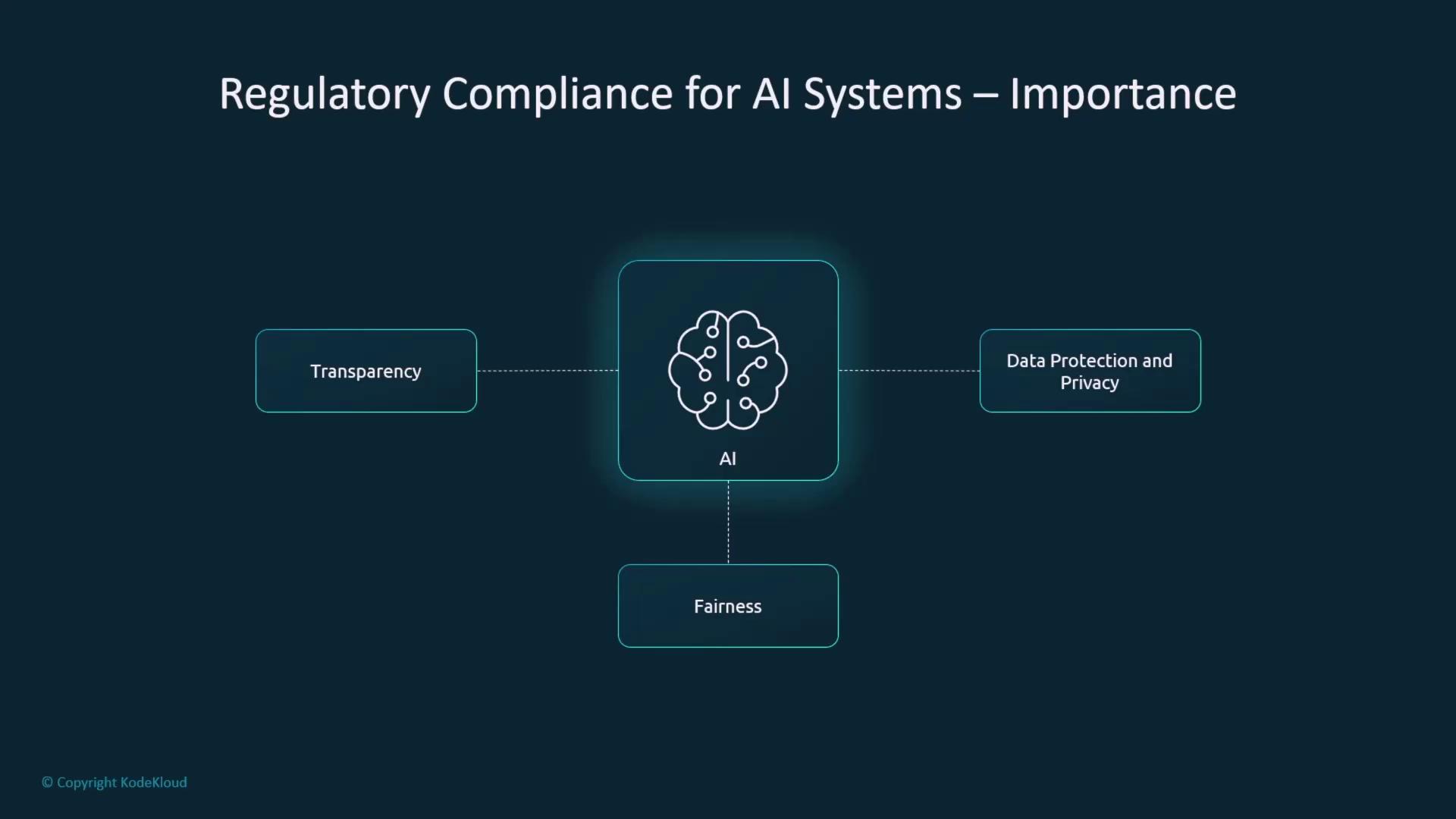

Key Aspects of Regulatory Compliance in AI

When managing AI systems, ensuring regulatory compliance is vital to safeguard both businesses and consumers. The main areas include:

Data Protection and Privacy

Ensure that all data processed by generative AI models, especially private or sensitive data, is handled with the highest level of care and protection.

Fairness and Bias

Continuously monitor AI systems to identify and mitigate unintended bias. Fair decision-making processes help prevent discriminatory outcomes.

Transparency

Focus on two critical components: interpretability and explainability. Transparent AI decision-making processes are essential, especially when outcomes must be justified under scrutiny.

In addition to protecting business interests, adhering to compliance standards also secures consumer rights.
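Continuous bias monitoring can start by comparing outcome rates across groups. The sketch below computes per-group selection rates and a disparate impact ratio, which is the lowest group rate divided by the highest. It then flags the batch when the ratio drops below 0.8, the "four-fifths rule" from U.S. employment-selection guidance. The threshold, function names, and sample data are assumptions for illustration. None of the frameworks in this guide prescribes them.

```python
from collections import defaultdict

def selection_rates(outcomes, groups):
    """Return the fraction of positive outcomes (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(outcomes, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives positive outcomes; treat as parity
    return min(rates.values()) / highest

def flag_possible_bias(outcomes, groups, threshold=0.8):
    """Flag the batch when the ratio falls below the assumed threshold."""
    return disparate_impact_ratio(outcomes, groups) < threshold

# Hypothetical batch of model decisions (1 = approved) and applicant groups
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
applicant_groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(decisions, applicant_groups))
print(disparate_impact_ratio(decisions, applicant_groups))
print(flag_possible_bias(decisions, applicant_groups))
```

A flag like this does not prove discrimination. It is a trigger for human review of the model and its training data.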

International Regulatory Frameworks

Regulatory compliance for AI extends beyond local rules to include several influential international frameworks:

ISO Standards for AI Systems

International standards such as ISO/IEC 42001 and ISO/IEC 23894 offer guidance on managing risks and promoting responsible AI practices. ISO/IEC 42001 defines requirements for an AI management system and takes a non-prescriptive approach to risk management. ISO/IEC 23894 provides guidance on managing AI-specific risks, including ethical responsibility and interoperability.

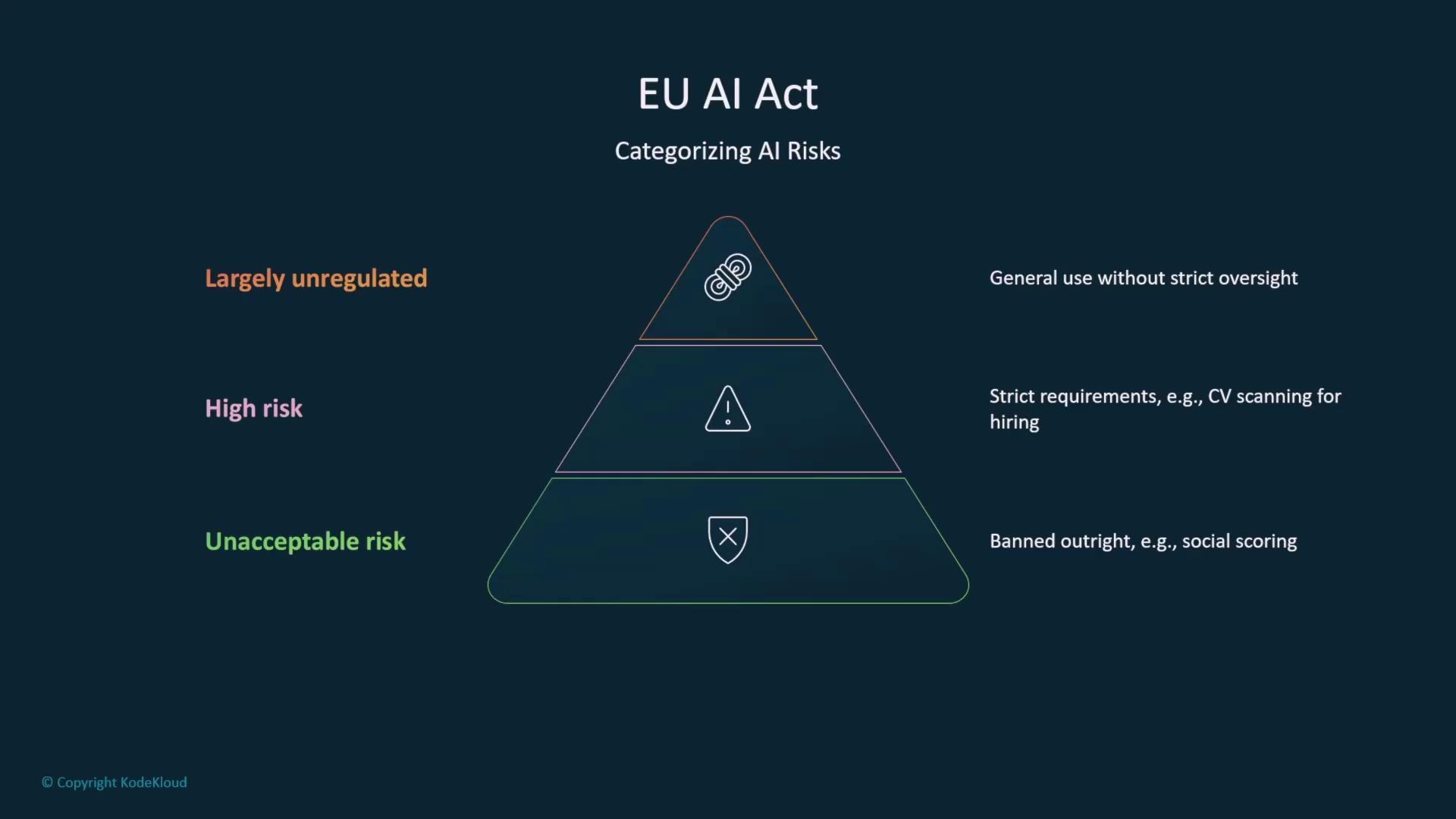

EU AI Act

The EU AI Act categorizes AI systems by risk level. Practices with unacceptable risk are banned. High-risk systems must meet strict requirements. Limited-risk systems carry transparency obligations, and minimal-risk systems are largely unregulated. The higher the risk, the stricter the rules a system must follow.

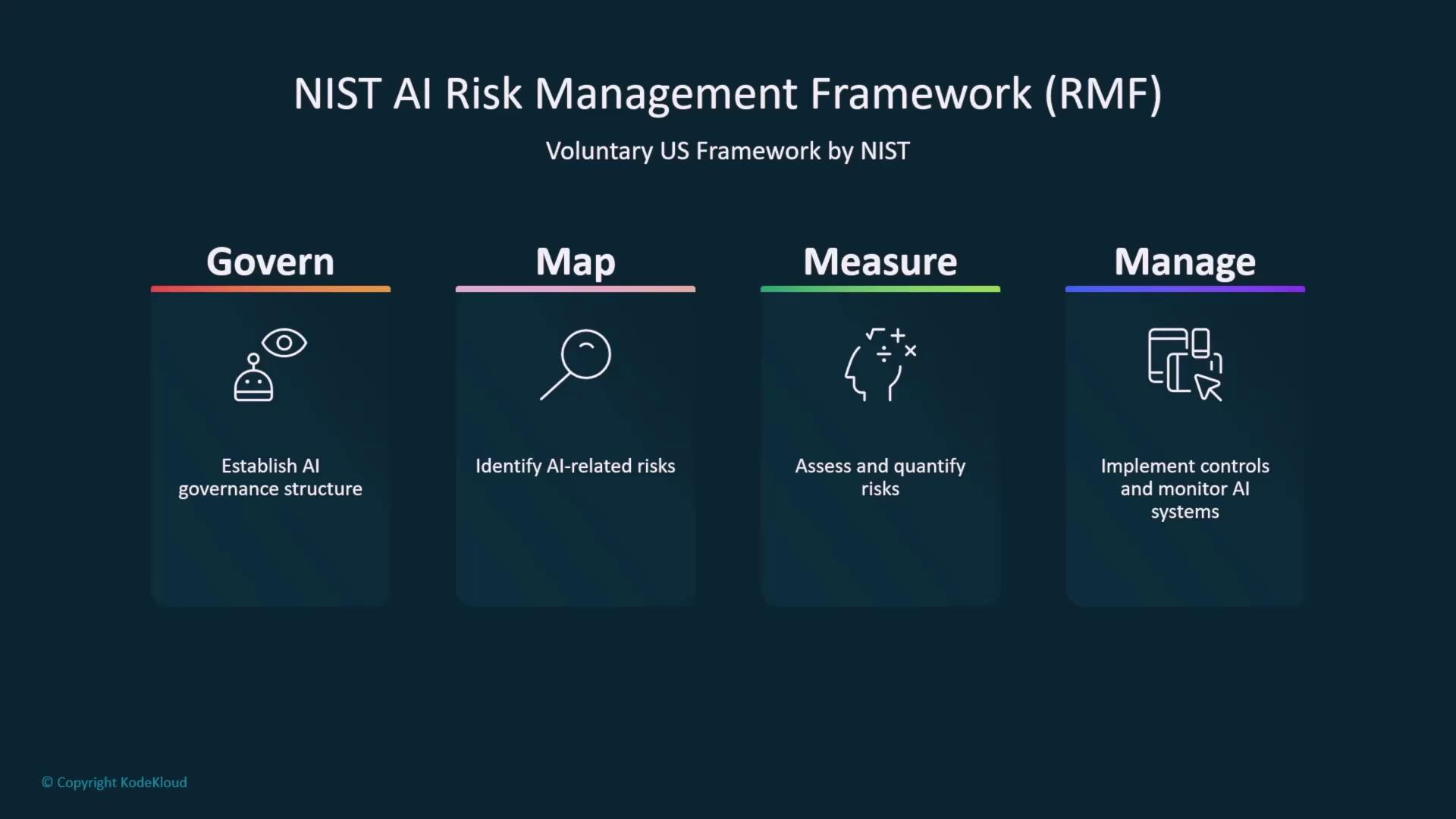
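An internal AI inventory can record these categories explicitly. The sketch below uses the four tiers in the Act's final text: unacceptable, high, limited, and minimal. It maps a few commonly cited example use cases to those tiers. The mapping, names, and lookup function are hypothetical simplifications. Real classification depends on the Act's articles and annexes and on legal review. For that reason, unknown use cases raise an error instead of falling back to a default tier.

```python
from enum import Enum

class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements before and after deployment"
    LIMITED = "transparency obligations"
    MINIMAL = "no specific obligations"

# Hypothetical, simplified mapping of example use cases to tiers.
EXAMPLE_USE_CASES = {
    "social scoring of individuals": RiskTier.UNACCEPTABLE,
    "cv screening for hiring": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "email spam filter": RiskTier.MINIMAL,
}

def classify(use_case):
    """Look up a use case; unknown cases are escalated, not defaulted."""
    tier = EXAMPLE_USE_CASES.get(use_case.strip().lower())
    if tier is None:
        raise KeyError(f"No tier recorded for {use_case!r}; escalate for legal review")
    return tier

for use_case in EXAMPLE_USE_CASES:
    tier = classify(use_case)
    print(f"{use_case}: {tier.name} ({tier.value})")
```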

NIST AI Risk Management Framework (RMF)

The voluntary NIST AI RMF is designed to guide organizations in establishing robust controls around AI risks. It focuses on four key areas: govern, map, measure, and manage risks.
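The four functions become concrete in a simple risk register that tags each action with the function it supports. The register can then show which functions have no coverage yet. NIST does not prescribe a data format. The class names, fields, and example entries below are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum

class RmfFunction(Enum):
    GOVERN = "govern"
    MAP = "map"
    MEASURE = "measure"
    MANAGE = "manage"

@dataclass
class RiskEntry:
    description: str
    function: RmfFunction
    owner: str
    done: bool = False

@dataclass
class RiskRegister:
    entries: list = field(default_factory=list)

    def add(self, description, function, owner):
        self.entries.append(RiskEntry(description, function, owner))

    def open_items(self, function):
        """Descriptions of unfinished items for one RMF function."""
        return [e.description for e in self.entries
                if e.function is function and not e.done]

    def coverage_gaps(self):
        """RMF functions that have no entries at all."""
        covered = {e.function for e in self.entries}
        return [f for f in RmfFunction if f not in covered]

register = RiskRegister()
register.add("Assign accountability for model approvals", RmfFunction.GOVERN, "risk-office")
register.add("Document intended use and affected users", RmfFunction.MAP, "product-team")
register.add("Track accuracy and bias metrics per release", RmfFunction.MEASURE, "ml-team")
print([f.name for f in register.coverage_gaps()])  # ['MANAGE']
```

In this example, no entry addresses how identified risks are prioritized and treated. The register therefore reports the Manage function as a gap.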

Algorithmic Accountability Act

Introduced in the U.S. Congress but not enacted, this proposed legislation aims to increase transparency by requiring organizations to provide interpretability and explainability for AI decision-making processes. It would also impose accountability measures to trace the origin and rationale of AI decisions.
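To trace the origin and rationale of a decision, an organization needs a tamper-evident record of each one. The sketch below shows one possible design, not a format specified by any legislation. Each entry stores the model version, inputs, output, and a human-readable rationale, plus the SHA-256 hash of the previous entry. As a result, any later edit to an entry breaks the chain. The model names, fields, and thresholds are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

def _digest(record):
    """Hash a record deterministically (sorted keys) with SHA-256."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

class DecisionLog:
    """Append-only log; each entry stores the hash of the previous entry."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, output, rationale):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "rationale": rationale,
            "prev_hash": self.entries[-1]["hash"] if self.entries else None,
        }
        entry["hash"] = _digest(entry)  # hash covers every field except itself
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry has been altered, removed, or reordered."""
        prev_hash = None
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = DecisionLog()
log.record("credit-model-v3", {"income": 52000}, "approved", "score 0.81 above 0.75 threshold")
log.record("credit-model-v3", {"income": 18000}, "declined", "score 0.42 below 0.75 threshold")
print(log.verify())                    # True
log.entries[0]["output"] = "declined"  # simulate tampering
print(log.verify())                    # False
```

Hash chaining makes tampering detectable, not impossible. In production, the log would also need access controls and secure storage.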

Related Resource

For more information on AI governance, see the NIST AI Risk Management Framework (AI RMF).

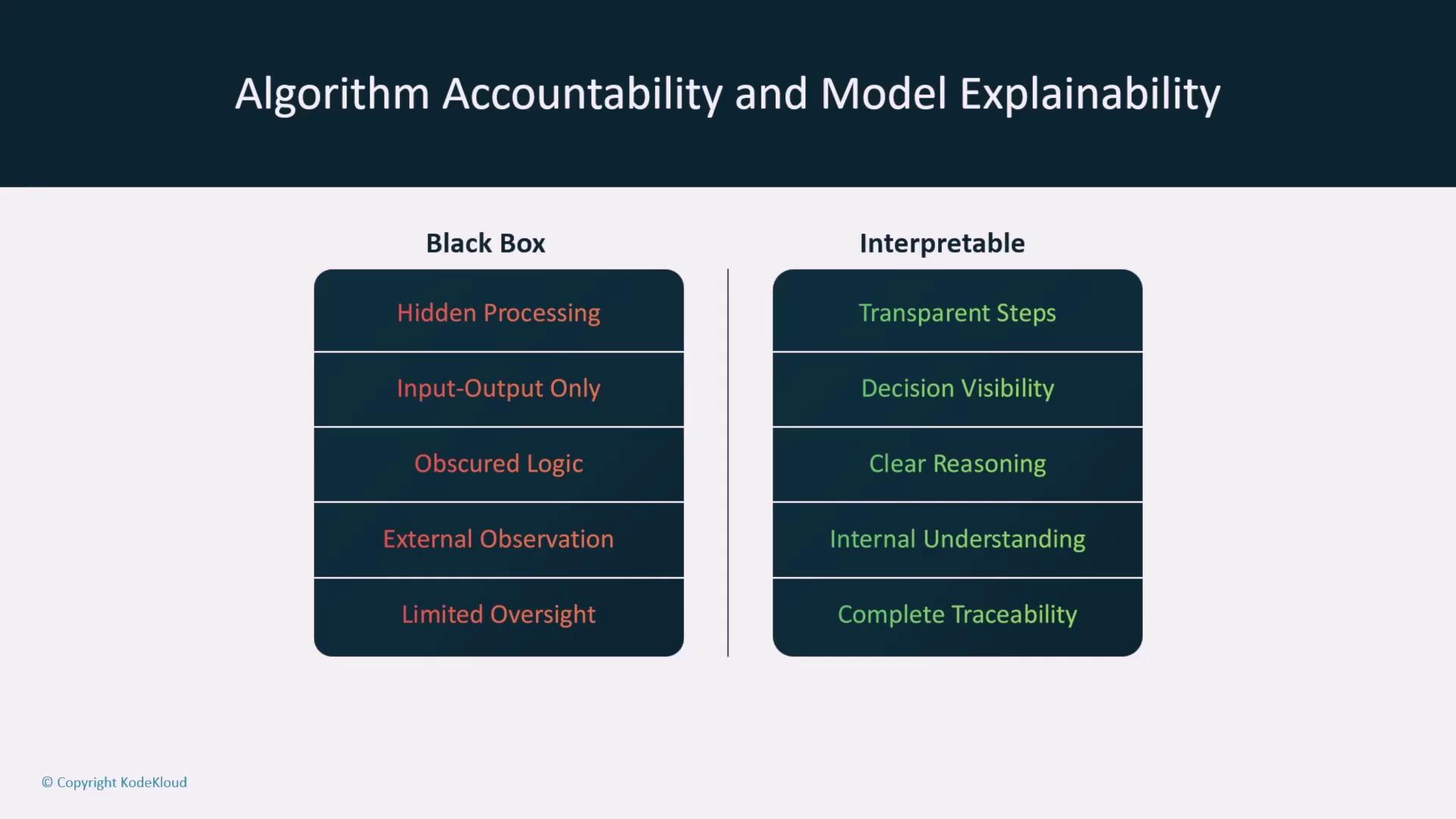

Explainability Versus Interpretability

Understanding the difference between explainability and interpretability is crucial in AI model evaluation:

Interpretability:

Refers to how clearly the decision-making process can be observed. Models designed for interpretability let stakeholders see the decision steps and logic used, ensuring full traceability.

Explainability:

Involves providing a coherent explanation for the outcomes of a model, especially when internal workings are hidden (commonly seen in "black box" models). This is crucial for establishing trust in complex neural networks.

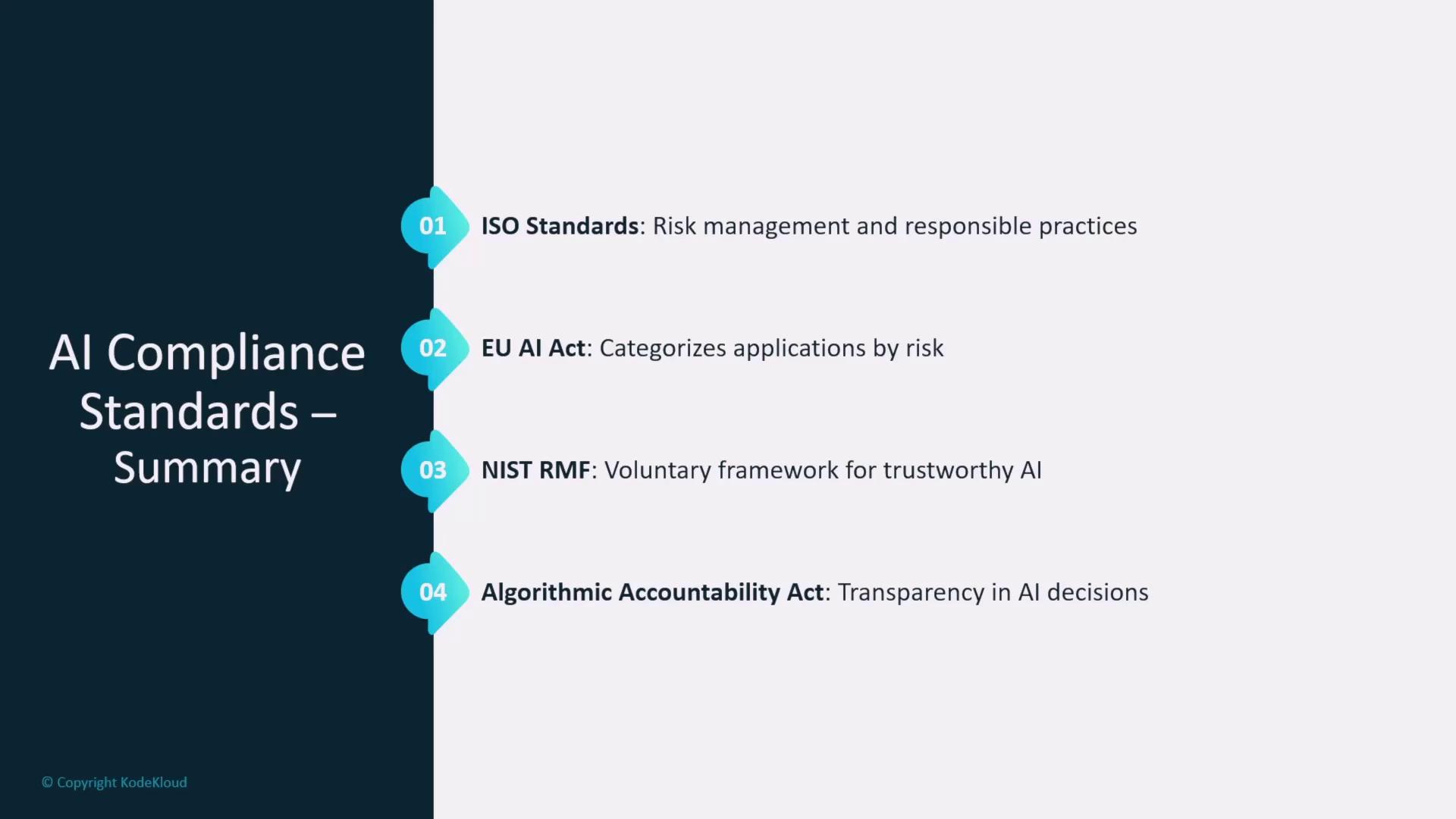
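The difference can be shown in code. In the sketch below, a linear scoring model is interpretable because each feature's contribution to the score is directly visible. A nonlinear function stands in for a black box, and occlusion explains it after the fact: the code resets one feature at a time to a baseline and measures how the output changes. Occlusion is only one of several post-hoc explanation techniques. The weights, features, baseline, and black-box formula are hypothetical.

```python
import math

WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}  # hypothetical

def linear_score(applicant):
    """Interpretable: the score is a visible weighted sum."""
    return sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)

def linear_contributions(applicant):
    """Each feature's exact share of the linear score, read off directly."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}

def black_box_score(applicant):
    """Stand-in for an opaque model whose internals we treat as hidden."""
    z = (0.5 * applicant["income"]
         - 0.8 * applicant["debt"] ** 2
         + math.tanh(applicant["years_employed"]))
    return 1 / (1 + math.exp(-z))

def occlusion_explanation(model, applicant, baseline):
    """Post-hoc: output drop when each feature is reset to its baseline.
    Uses only the model's inputs and outputs, never its internals."""
    original = model(applicant)
    effects = {}
    for name in applicant:
        perturbed = {**applicant, name: baseline[name]}
        effects[name] = original - model(perturbed)
    return effects

applicant = {"income": 2.0, "debt": 1.0, "years_employed": 3.0}  # standardized units (assumed)
baseline = {"income": 0.0, "debt": 0.0, "years_employed": 0.0}
print(linear_contributions(applicant))
print(occlusion_explanation(black_box_score, applicant, baseline))
```

A positive effect means the feature pushed this applicant's score up, and a negative effect means it pulled the score down. Unlike the linear contributions, these effects only approximate the black box's behavior around one input.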

Summary of Compliance Standards

In summary, understanding these standards is essential for the responsible deployment of AI systems:

| Regulatory Framework | Key Focus Areas | Example Emphasis |
|---|---|---|
| ISO/IEC Standards (42001 and 23894) | Risk management and responsible AI practices | International interoperability and ethical guidelines |
| EU AI Act | Risk categorization of AI systems | Differentiating between unacceptable, high, limited, and minimal-risk systems |
| NIST AI RMF (voluntary) | Governance and risk management for AI | Establishing a comprehensive risk management framework (Govern, Map, Measure, Manage) |
| Algorithmic Accountability Act (proposed) | Transparency, interpretability, and accountability in AI decision-making | Would mandate access to decision models and audit trails |

Final Thoughts

This guide provides an in-depth overview of regulatory compliance standards for AI systems, offering insights that are critical for both industry professionals and exam preparation. For further reading, consider exploring related topics in AI ethics and governance.
