AWS Certified AI Practitioner

Security Compliance and Governance for AI Solutions

Security and Privacy Considerations for AI Systems

Welcome back, students. In this lesson, we dive into the critical security and privacy concerns involving AI systems, with a special focus on Generative AI (Gen AI). Understanding these risks is essential for securing AI model deployments effectively. We will examine key risks and mitigation strategies to help you safeguard your systems.

Data Poisoning and Training Data Integrity

Data poisoning is a major threat that compromises the integrity of training data. When adversaries corrupt training data—for example, by altering true positives to false negatives—they can cause mislabeling that affects the overall behavior of the model. This type of attack is particularly dangerous in sensitive fields such as healthcare diagnostics and fraud detection.

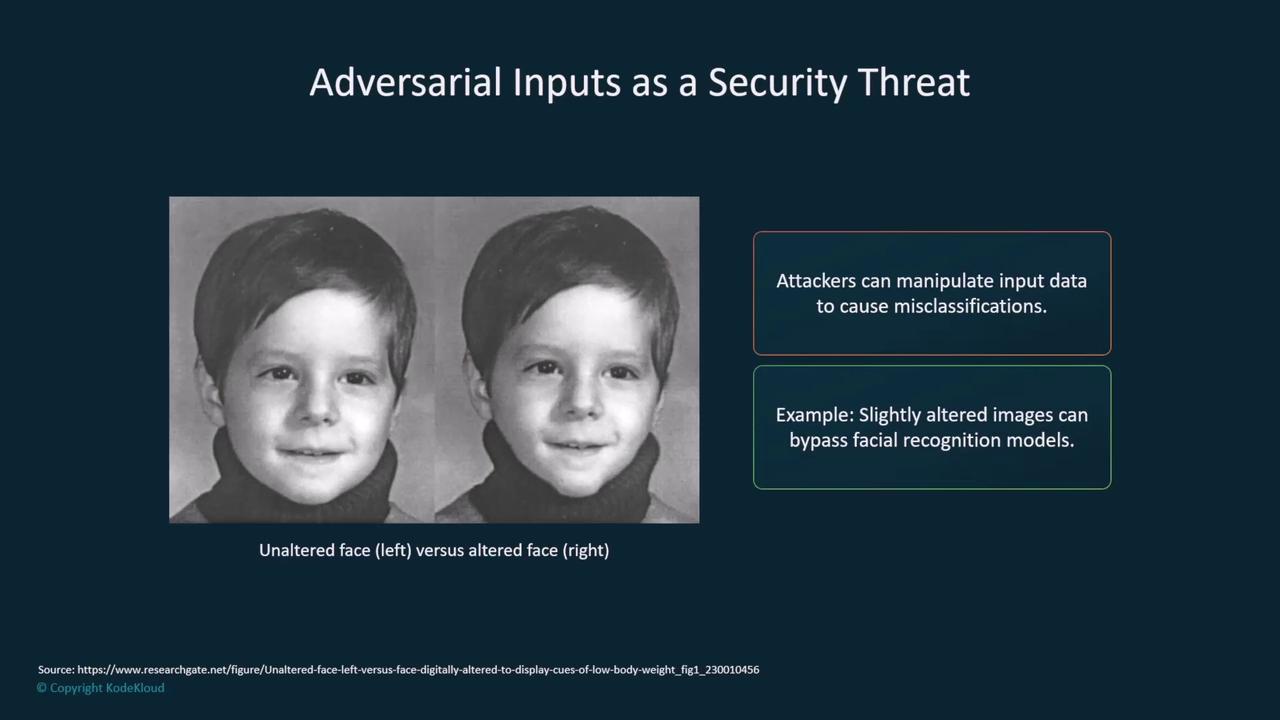

Adversarial Inputs in Facial Recognition

Another significant risk involves attackers introducing subtle alterations in input data. In facial recognition systems, minor changes to facial images can trigger false negatives, potentially allowing unauthorized access.

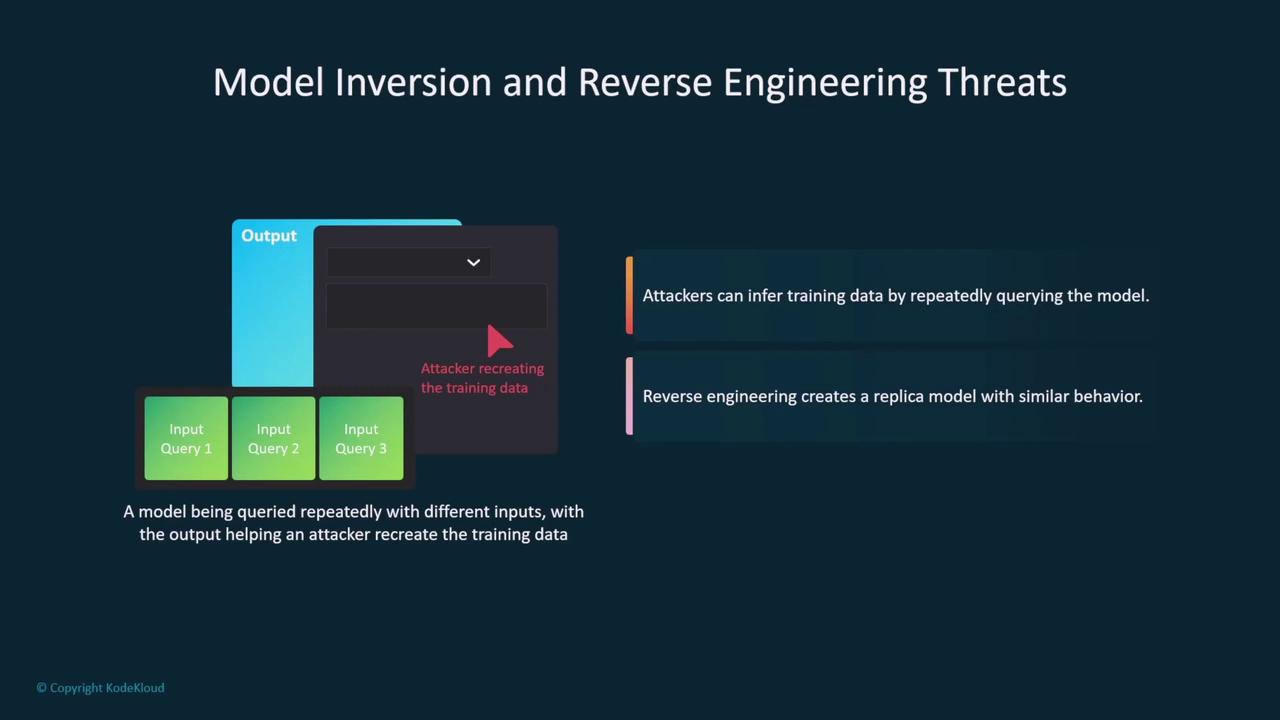

Attackers might also repeatedly query a model with a variety of input samples to approximate the training dataset. This reverse engineering can lead to the reconstruction of a replica model that mimics the behavior of the original, posing severe threats to data privacy and model integrity.

Prompt Injection Attacks

Prompt injection attacks are a particular concern for large language models. These attacks involve injecting malicious inputs that manipulate the model's output, potentially exposing sensitive internal information like system prompts or data sources. In worst-case scenarios, this can result in a 'jailbroken' model.

Warning

Ensure that your AI systems are equipped with robust input validation and monitoring mechanisms to defend against prompt injection and related vulnerabilities.

Mitigation Strategies

To mitigate these security threats, consider employing the following strategies:

- Access Controls and Encryption: Implement strict permission policies and use encryption to protect data both at rest and in transit. Services like AWS KMS and ACM are excellent tools for managing these security measures.

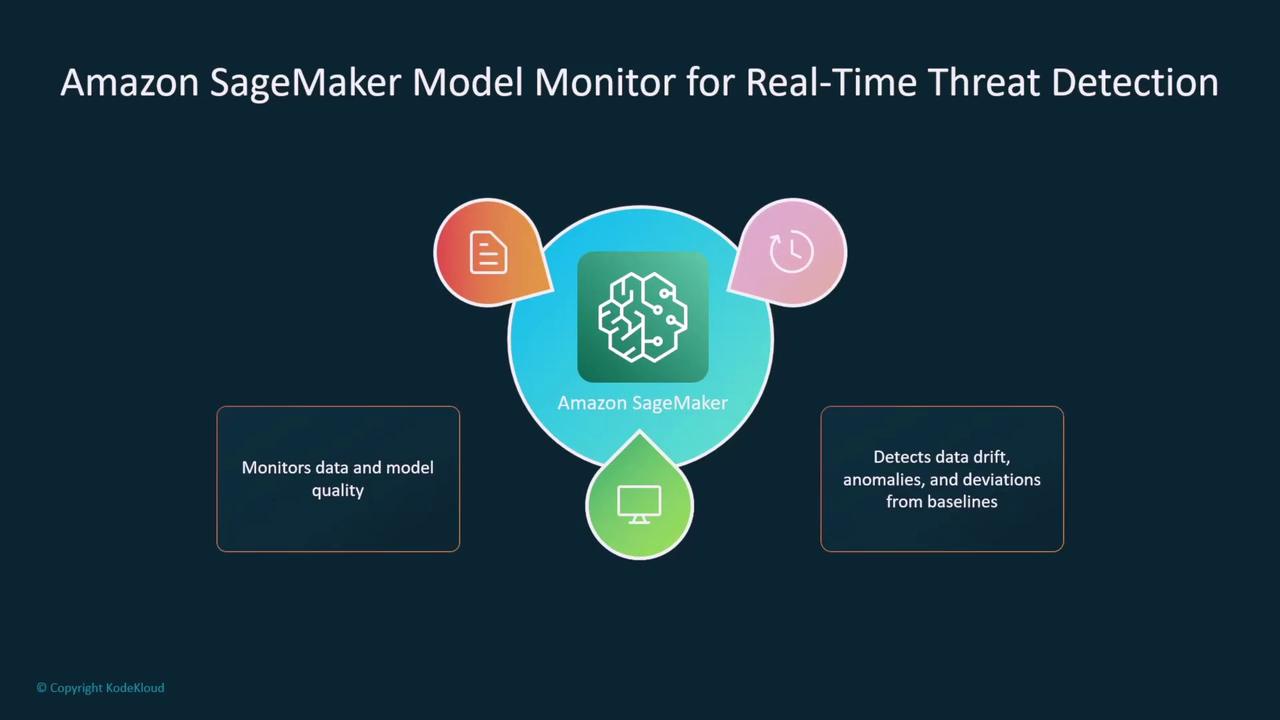

- Anomaly Detection and Guardrails: Utilize tools such as Amazon SageMaker Model Monitor to continuously assess data quality, detect drift, and identify anomalies in real time. Implement guardrails similar to those provided by AWS Bedrock to maintain a secure operational environment.

- Prompt Injection Protection: Enhance your models' resilience by training them to detect harmful prompt injection patterns. Set up monitoring systems that trigger alerts upon detecting suspicious input behavior.

For instance, a public AI service might incorporate an internal mechanism to flag a malicious prompt by displaying an alert symbol to both internal teams and end users.

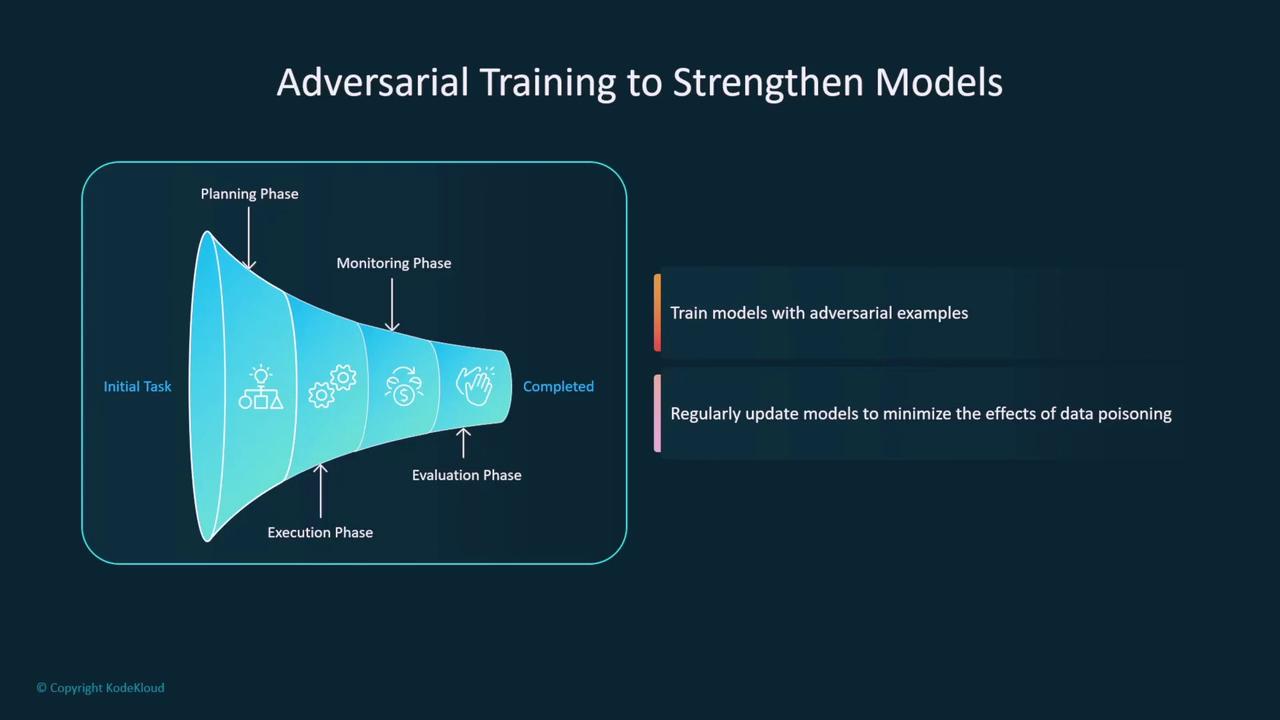

Another effective approach is adversarial training, where models are exposed to challenging and manipulated examples during training. This process helps minimize vulnerabilities resulting from edge cases and user-induced data poisoning.

Monitoring and Continuous Evaluation

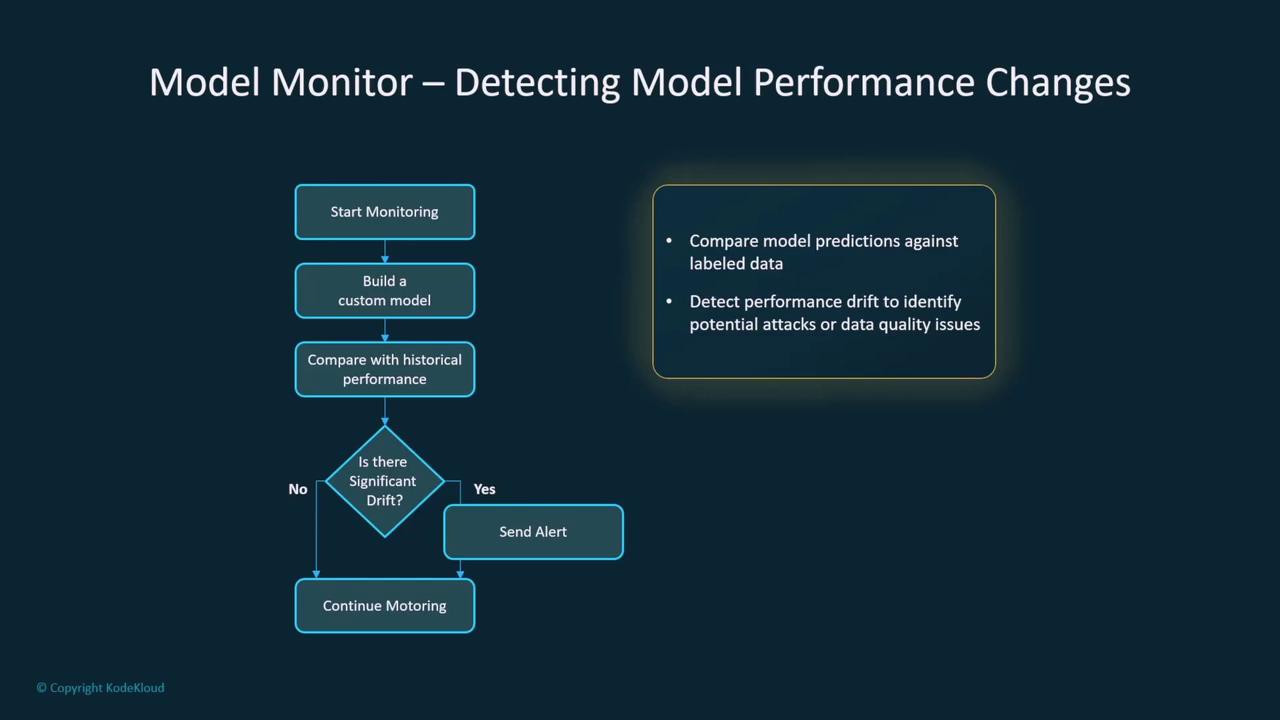

Maintaining the security of deployed models requires continuous monitoring. Amazon SageMaker Model Monitor, for example, can compare incoming data to baseline quality metrics, identify performance drift, and raise alerts if deviations are significant. This service continuously evaluates model inferences against labeled data, pinpointing any issues related to data quality or security breaches.

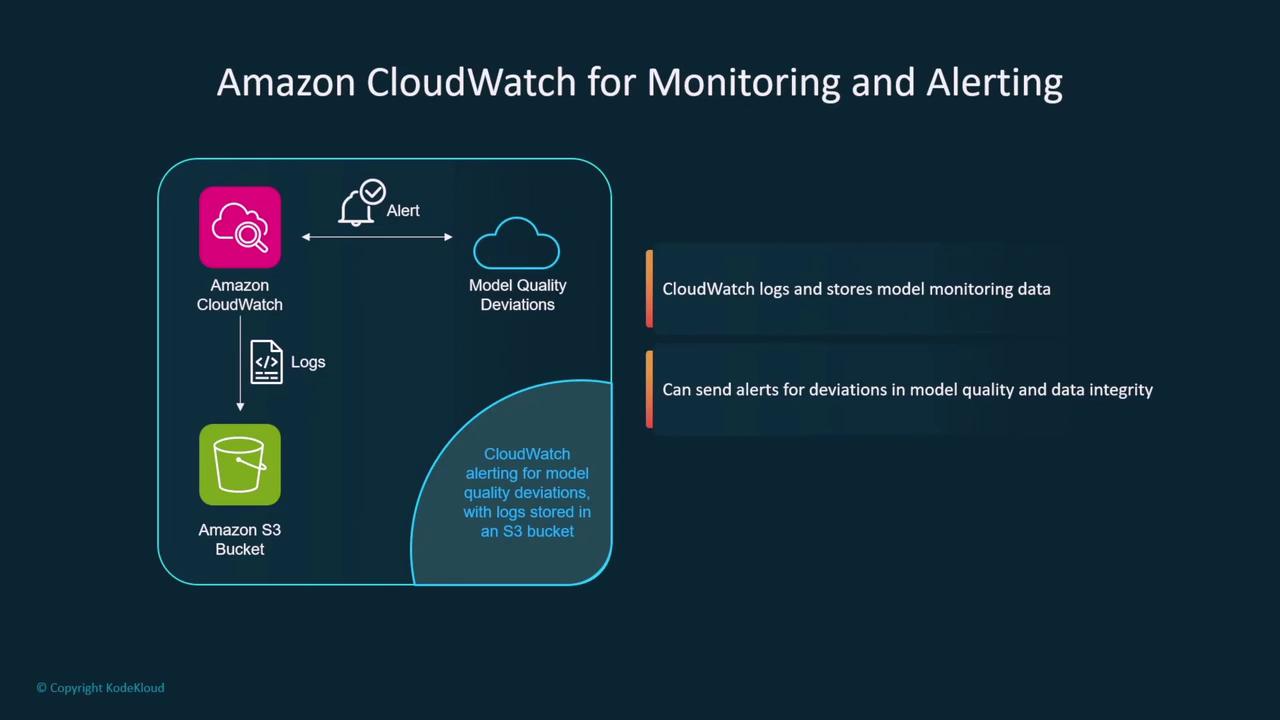

Additionally, you can integrate AWS CloudWatch to monitor logs and alert administrators when changes in model quality or data integrity occur.

Note

Regular monitoring and updates are crucial for maintaining a secure AI environment. Incorporate routine evaluations and leverage automated tools to ensure continuous protection.

Final Thoughts

In summary, securing AI models requires a comprehensive strategy that addresses data poisoning, adversarial manipulation, and prompt injection attacks, while also ensuring continuous monitoring and evaluation. Employing encryption, access controls, and robust anomaly detection mechanisms—using tools like SageMaker Model Monitor and CloudWatch—forms the backbone of an effective security posture for AI systems. By thoroughly understanding and mitigating these vulnerabilities, you can significantly enhance the overall security and privacy of your AI deployments.

Watch Video

Watch video content