AWS Certified Developer - Associate

Containers on AWS

ECS Demo Part 1

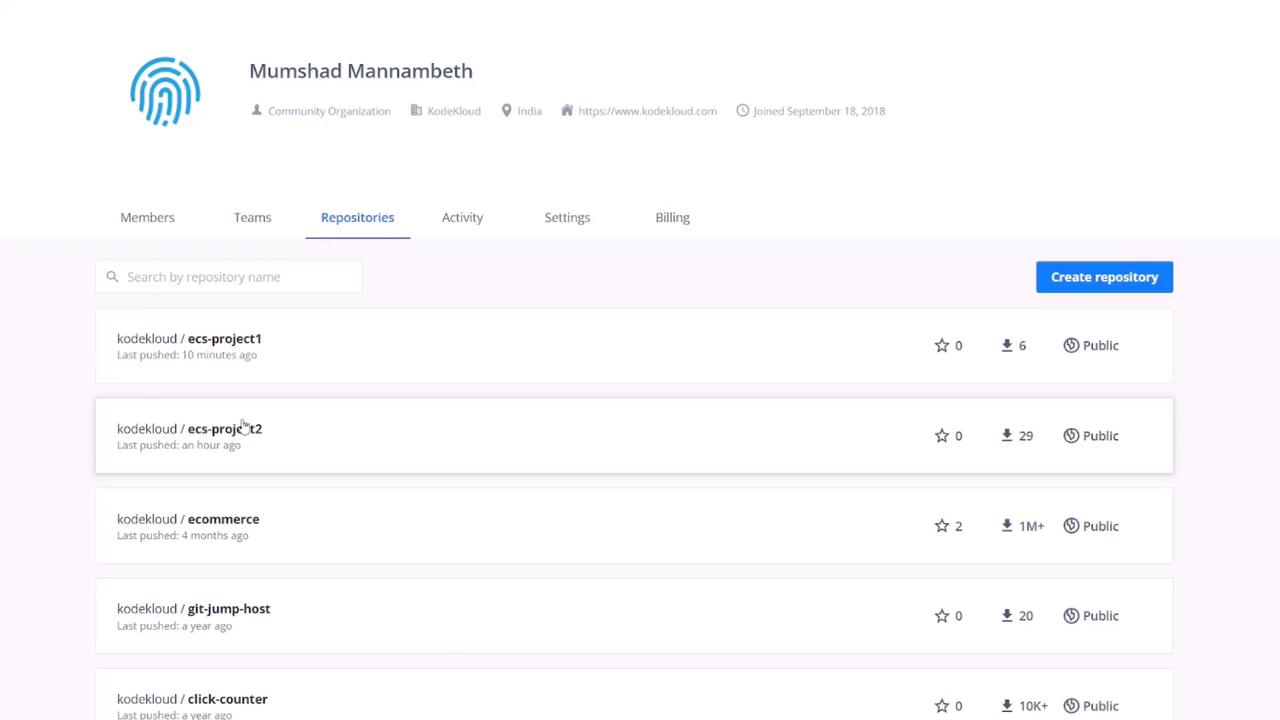

Before working with Amazon ECS in the AWS Console, visit Docker Hub and review the two images that form the basis of our demo projects. These public repositories, `kodekloud/ecs-project1` and `kodekloud/ecs-project2`, contain the project images we will use.

Project One Overview

Project One uses a simple Node.js application powered by an Express server. When a GET request is sent to the root path, the server renders the `index` view with the EJS view engine and responds with a basic HTML page. Below is the HTML the application delivers:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <meta http-equiv="X-UA-Compatible" content="IE=edge" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <link rel="stylesheet" href="css/style.css" />
    <title>Document</title>
  </head>
  <body>
    <h1>ECS Project 1</h1>
  </body>
</html>
```


The core application is built with Express, as demonstrated below:

```javascript
const express = require("express");
const path = require("path");

const app = express();

app.set("view engine", "ejs");
app.set("views", path.join(__dirname, "views"));
app.use(express.static(path.join(__dirname, "public")));

app.get("/", (req, res) => {
  res.render("index");
});

app.listen(3000, () => {
  console.log("Server is running on port 3000");
});
```


Important

Note that the Express server listens on port 3000.

The Dockerfile for this project is straightforward and exposes port 3000:

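```dockerfile
FROM node:16

WORKDIR /usr/src/app

# Copy the dependency manifests first so the install layer can be cached
COPY package*.json ./

RUN npm install
# npm ci removes node_modules and reinstalls, so this production-only
# install supersedes the npm install above
RUN npm ci --only=production

# Copy the application source into the working directory
COPY . .

EXPOSE 3000

CMD [ "node", "index.js" ]
```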

Setting Up ECS Using the AWS Console

Quick Start with ECS

- Log in to the AWS Console, search for "ECS", and select Elastic Container Service.

- If you're new to ECS, a quick start wizard will guide you. Although sample applications are available, select the custom option to configure your container manually.

- In the container configuration:

  - Container Name: For example, "ECS-Project1".

  - Image: Use "kodekloud/ecs-project1". If your image resides in a private repository, provide your credentials; otherwise, leave the private repository option unset.

  - Port Mapping: Set to 3000/TCP to match the Express application.
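The image name entered in the wizard is a short Docker reference. The sketch below, which assumes Docker's usual resolution rules, shows how such a name expands: no registry means Docker Hub, no tag means `latest`, and single-name images such as `node` live under `library/`. It also enforces the rule that repository names must be lowercase, which is why a mixed-case name such as `KodeKloud/ECS-Project1` would be rejected. `normalizeImageRef` is a hypothetical helper and deliberately skips parts of the full reference grammar.

```javascript
// Hypothetical helper: expands a short image name the way Docker resolves it.
// Simplified sketch; it does not implement the full image reference grammar.
function normalizeImageRef(ref) {
  let rest = ref;
  let digest = "";
  const at = rest.indexOf("@");
  if (at !== -1) {
    digest = rest.slice(at); // e.g. "@sha256:..."
    rest = rest.slice(0, at);
  }

  // A tag follows the last ":" only if that colon comes after the last "/";
  // otherwise the colon belongs to a registry "host:port".
  let tag = "";
  const lastColon = rest.lastIndexOf(":");
  if (lastColon > rest.lastIndexOf("/")) {
    tag = rest.slice(lastColon + 1);
    rest = rest.slice(0, lastColon);
  }

  // The first path component is a registry host only if it looks like one.
  const parts = rest.split("/");
  let registry = "docker.io";
  if (parts.length > 1 && (/[.:]/.test(parts[0]) || parts[0] === "localhost")) {
    registry = parts.shift();
  }
  let repository = parts.join("/");

  // Repository names must be lowercase.
  if (repository !== repository.toLowerCase()) {
    throw new Error(`invalid reference "${ref}": repository name must be lowercase`);
  }
  // Single-component Docker Hub names live under the "library/" namespace.
  if (registry === "docker.io" && !repository.includes("/")) {
    repository = `library/${repository}`;
  }
  // Without a tag or digest, Docker defaults to "latest".
  if (!tag && !digest) {
    tag = "latest";
  }
  return `${registry}/${repository}${tag ? ":" + tag : ""}${digest}`;
}
```

For example, `normalizeImageRef("kodekloud/ecs-project1")` yields `docker.io/kodekloud/ecs-project1:latest`, which is the image ECS pulls when you type the short name.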

For a standalone Docker deployment, you can map an external (host) port to a different internal (container) port:

```shell
# Example (not applicable to ECS on Fargate): host port 80 -> container port 3000
docker run -p 80:3000 kodekloud/ecs-project1
```

In ECS with the Fargate launch type, however, tasks use the `awsvpc` network mode, where the host port must match the container port (here, both are 3000). The advanced container configuration also lets you set up health checks, environment variables, and volumes through a graphical interface. Click "Update" when the container configuration is complete.
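The port rule comes from the `awsvpc` network mode that Fargate tasks use: each task gets its own network interface, so there is no separate host port to remap, and `hostPort` must either be omitted or equal `containerPort`. The hypothetical `validatePortMappings` function below is a minimal sketch of that check (the same rule applies to `host` mode); `bridge` mode on the EC2 launch type is the case that still allows a `docker run -p 80:3000` style mapping.

```javascript
// Hypothetical check mirroring the ECS port mapping rule: in awsvpc and host
// network modes, hostPort may be omitted, but if set it must equal containerPort.
function validatePortMappings(networkMode, portMappings) {
  const errors = [];
  const portsMustMatch = networkMode === "awsvpc" || networkMode === "host";
  for (const { containerPort, hostPort } of portMappings) {
    if (portsMustMatch && hostPort !== undefined && hostPort !== containerPort) {
      errors.push(
        `hostPort ${hostPort} must equal containerPort ${containerPort} in ${networkMode} mode`
      );
    }
  }
  return errors; // an empty array means the mappings are acceptable
}
```

With this sketch, `{ containerPort: 3000, hostPort: 80 }` is reported as an error under `awsvpc` but accepted under `bridge`.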

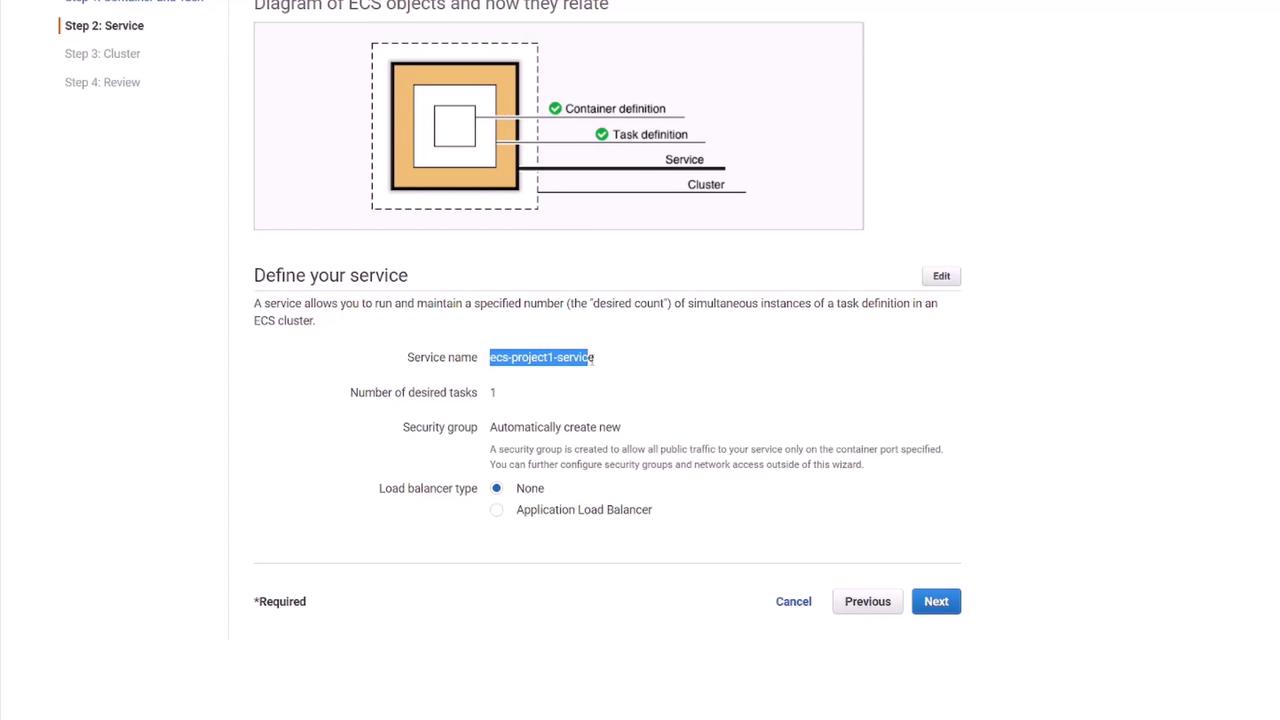

Defining Your ECS Service

After setting up the container:

- Service Name: For instance, "ECS-project1-service".

- Load Balancer: Optional; select "None" for now.

The wizard creates a cluster that groups all underlying resources, provisioning a new VPC along with subnets automatically.

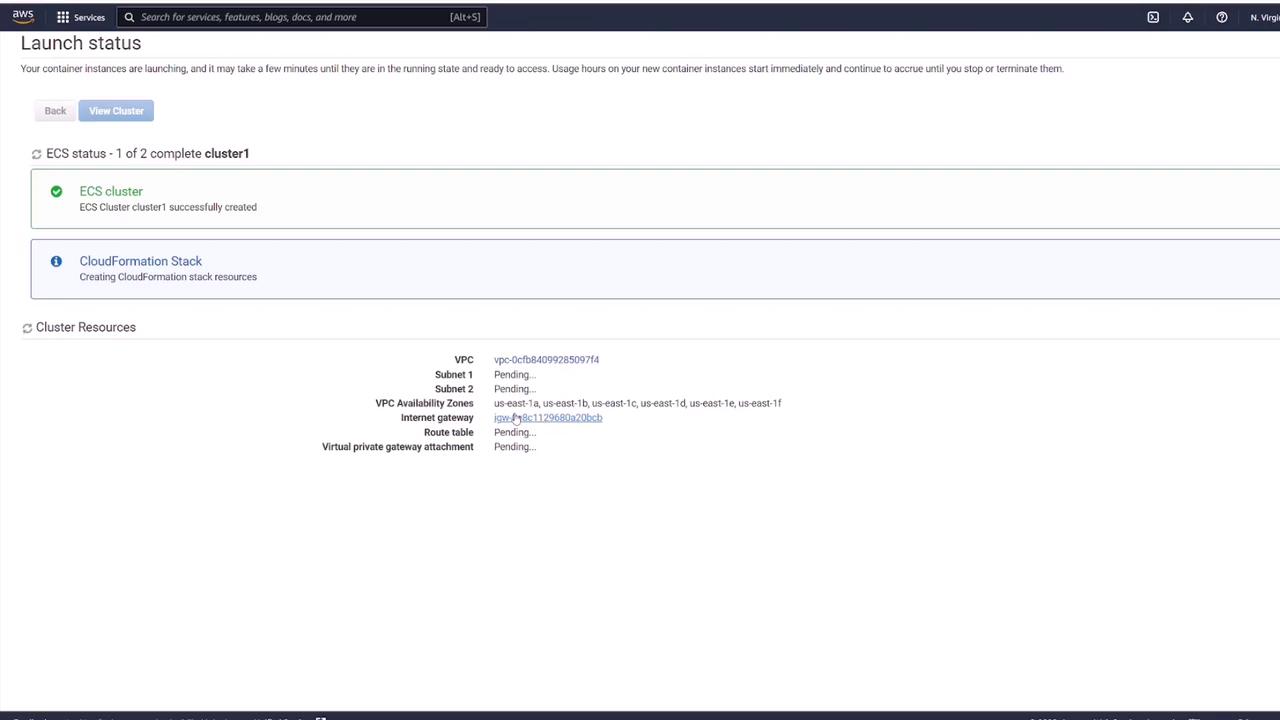

Review the configuration details including container definition, task definition, service details, and cluster settings. Then click "Create." Wait a few minutes for provisioning and click "View Service" when ready.

Understanding the ECS Task Wizard Components

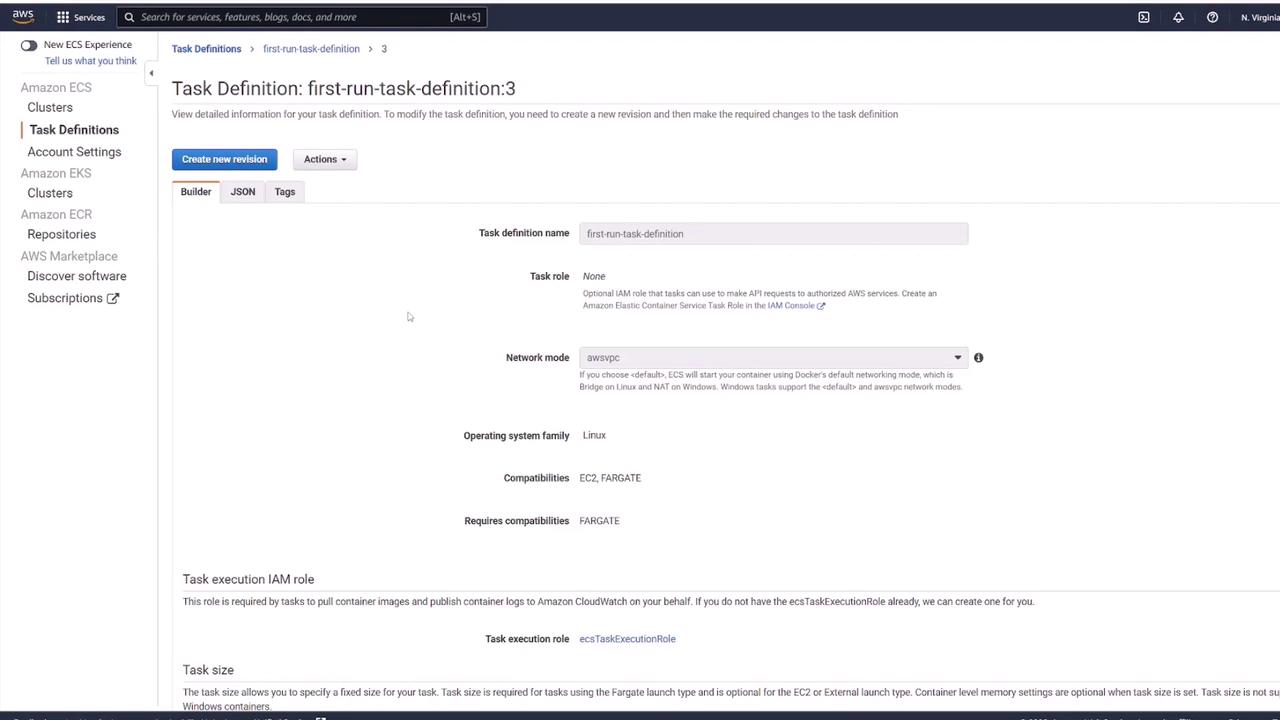

1. Task Definitions

Task definitions store all container configurations, including port mappings, volumes, and environment variables. Revision numbers help track changes, with the latest revision reflecting the current configuration.
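Each revision is addressed as `family:revision`, and the full ARN follows the pattern `arn:aws:ecs:<region>:<account>:task-definition/<family>:<revision>`. As a sketch (the helper names are hypothetical, and any account ID used with them is a placeholder), picking the latest revision of a family from a list of references could look like this:

```javascript
// Hypothetical helper: splits a task definition reference into family and revision.
// Accepts a full ARN (arn:aws:ecs:<region>:<account>:task-definition/<family>:<revision>)
// or the short "family:revision" form.
function parseTaskDefinition(ref) {
  const marker = "task-definition/";
  const idx = ref.indexOf(marker);
  const name = idx === -1 ? ref : ref.slice(idx + marker.length);
  const sep = name.lastIndexOf(":");
  if (sep === -1) {
    throw new Error(`expected "family:revision", got "${ref}"`);
  }
  return { family: name.slice(0, sep), revision: Number(name.slice(sep + 1)) };
}

// Returns "family:revision" for the highest revision of `family`, or null if none match.
function latestRevision(refs, family) {
  let latest = null;
  for (const ref of refs) {
    const td = parseTaskDefinition(ref);
    if (td.family === family && (latest === null || td.revision > latest.revision)) {
      latest = td;
    }
  }
  return latest === null ? null : `${latest.family}:${latest.revision}`;
}
```

When a service references only the family name, ECS itself uses the latest ACTIVE revision, so a lookup like this is mainly useful in scripts that need to report or pin a specific revision.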

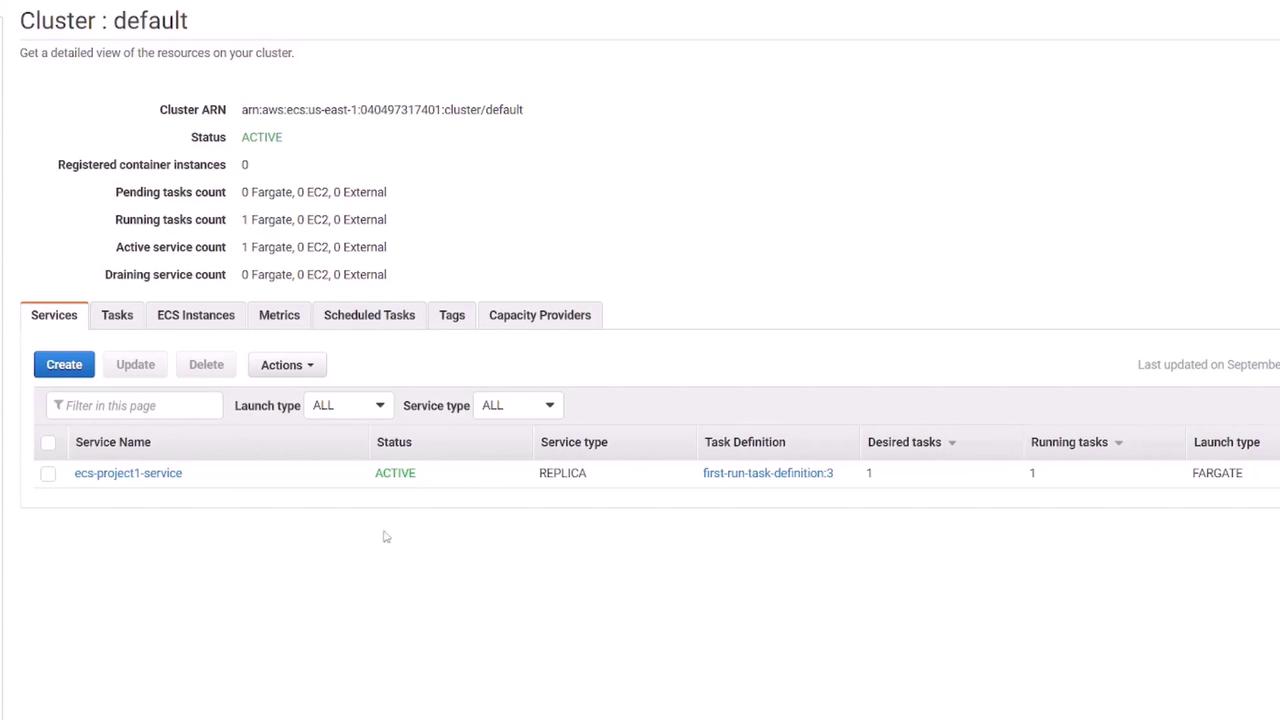

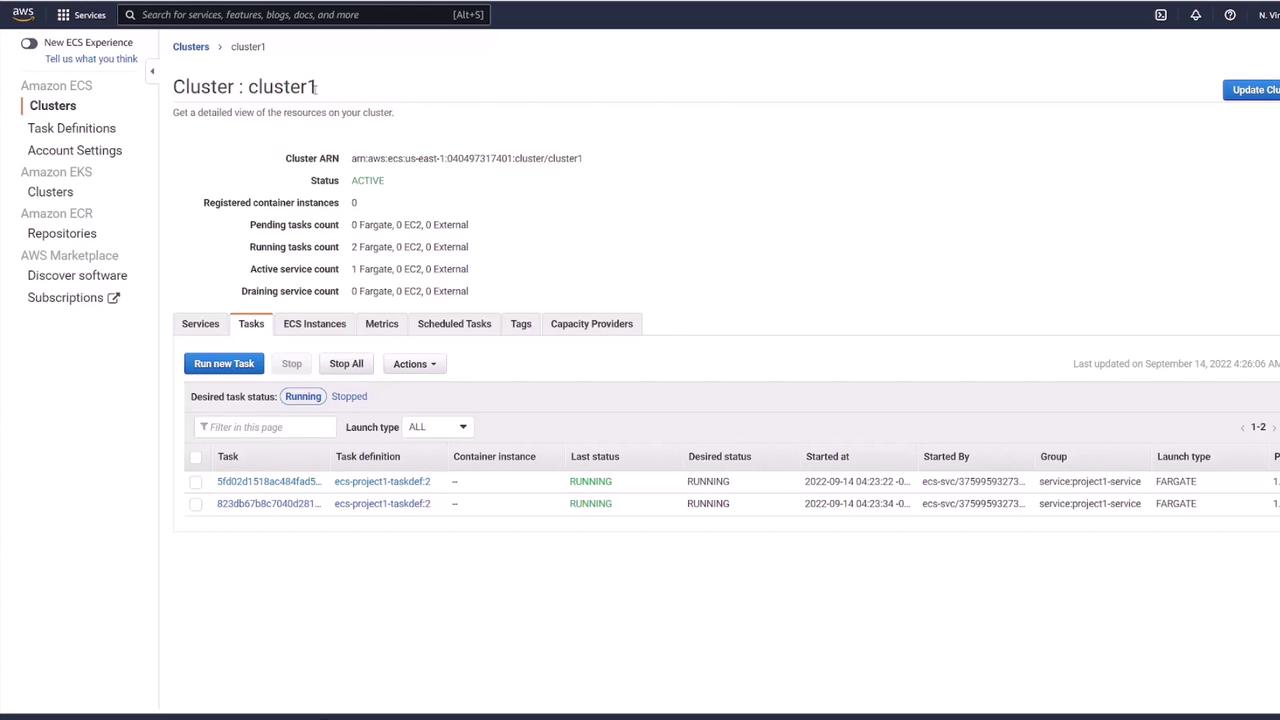

2. Cluster

The ECS cluster represents the infrastructure that runs your tasks: EC2 instances when using the EC2 launch type, or AWS-managed capacity when using Fargate. The default cluster set up by the wizard includes a newly created VPC and subnets.

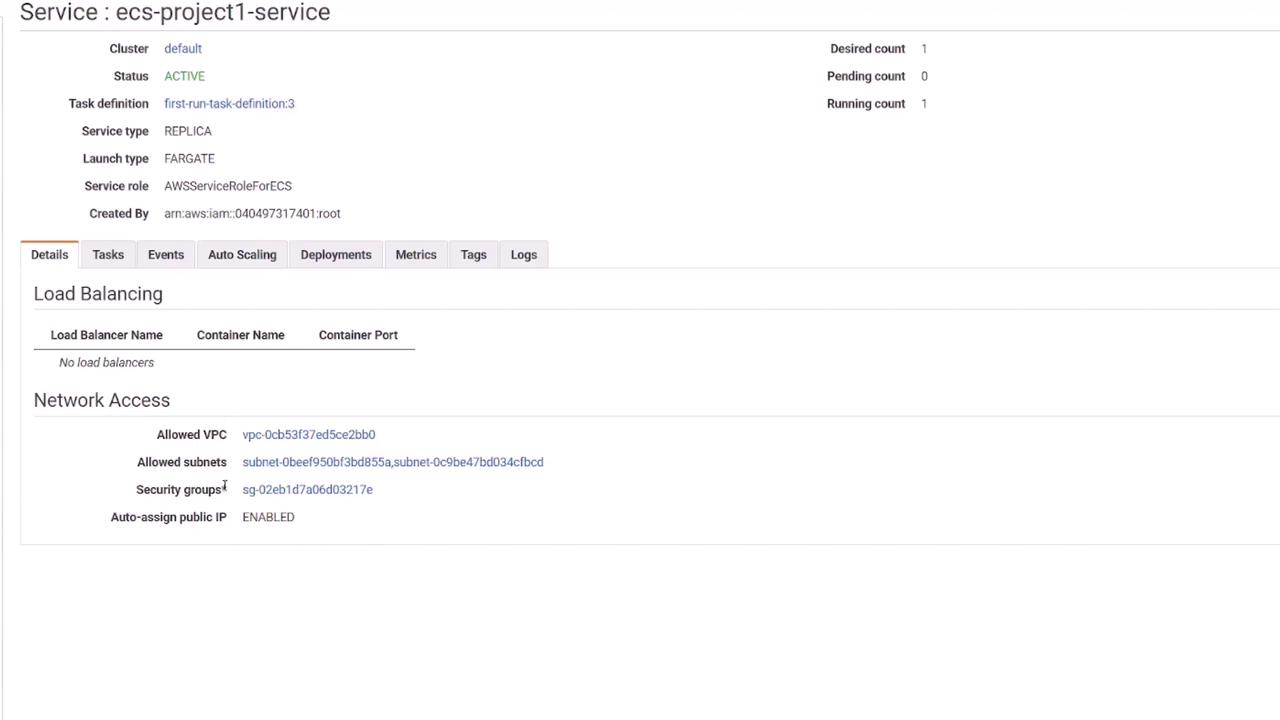

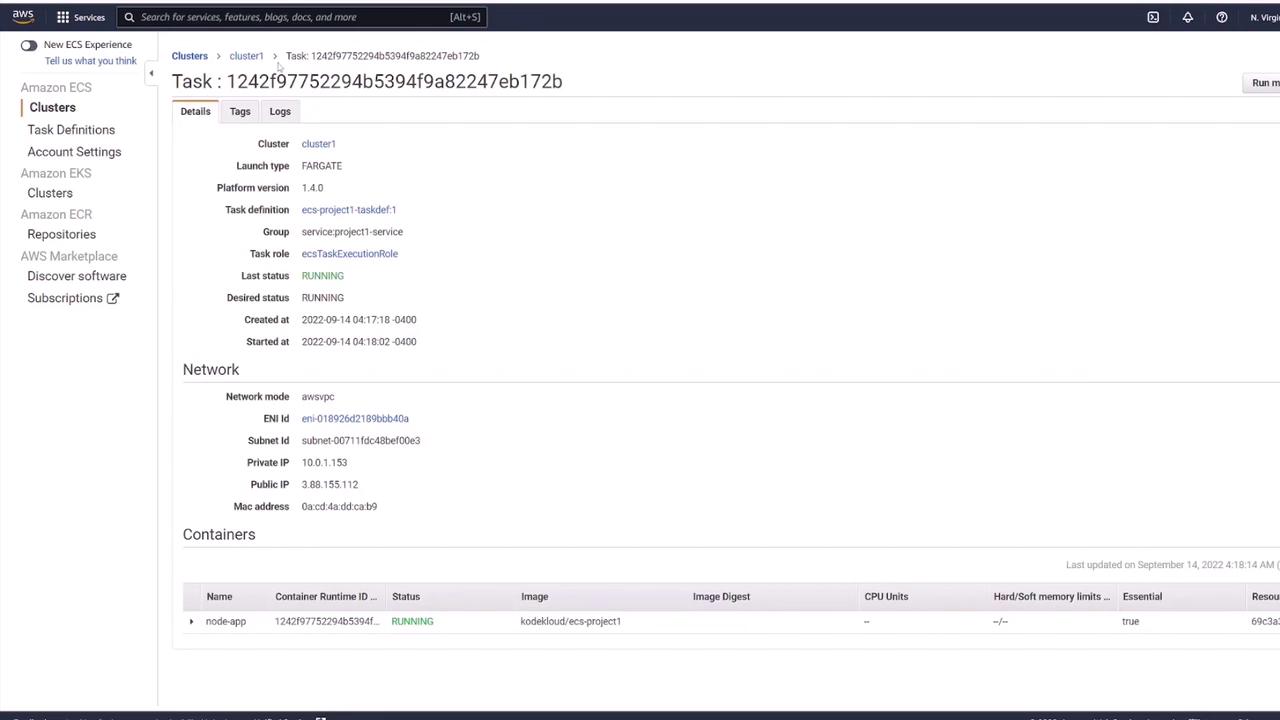

3. Service and Tasks

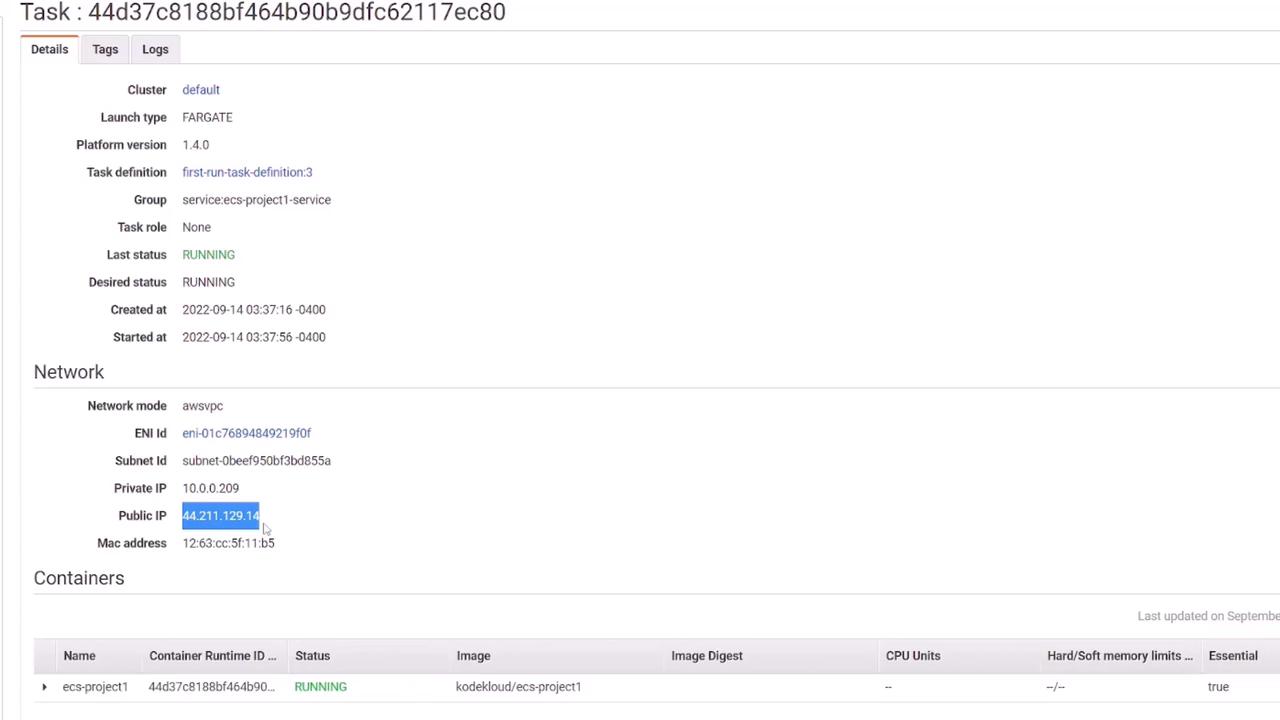

The service, "ECS-project1-service", is created with a desired task count (initially one). You can inspect network settings, including VPC, subnets, and security groups. The running task receives a public IP address which you can use to access the deployed application.

Open `http://<public-ip>:3000` in a browser, using the task's public IP address. You should see the demo HTML page served on port 3000, confirming the application deployment.
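To check a task without a browser, a small script can request the page and compare the `<h1>` text. This is a hypothetical sketch: it assumes Node.js 18 or later (for the global `fetch`) and a security group that allows port 3000, and it reads the heading with a simple regular expression rather than a full HTML parser.

```javascript
// Hypothetical helper: URL of the app on a task reached directly by public IP.
function taskUrl(publicIp, port = 3000) {
  return `http://${publicIp}:${port}/`;
}

// Returns the trimmed text of the first <h1> element, or null if there is none.
function extractHeading(html) {
  const match = html.match(/<h1[^>]*>([\s\S]*?)<\/h1>/i);
  return match ? match[1].trim() : null;
}

// Fetches the page and reports whether the heading matches (needs Node.js 18+ for fetch).
async function smokeCheck(publicIp, expectedHeading) {
  const url = taskUrl(publicIp);
  const res = await fetch(url);
  if (!res.ok) {
    throw new Error(`HTTP ${res.status} from ${url}`);
  }
  return extractHeading(await res.text()) === expectedHeading;
}
```

For example, `smokeCheck("203.0.113.10", "ECS Project 1")` resolves to `true` when the page matches; `203.0.113.10` is a documentation address standing in for the task's real public IP.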

Cleaning Up the Quick Start Environment

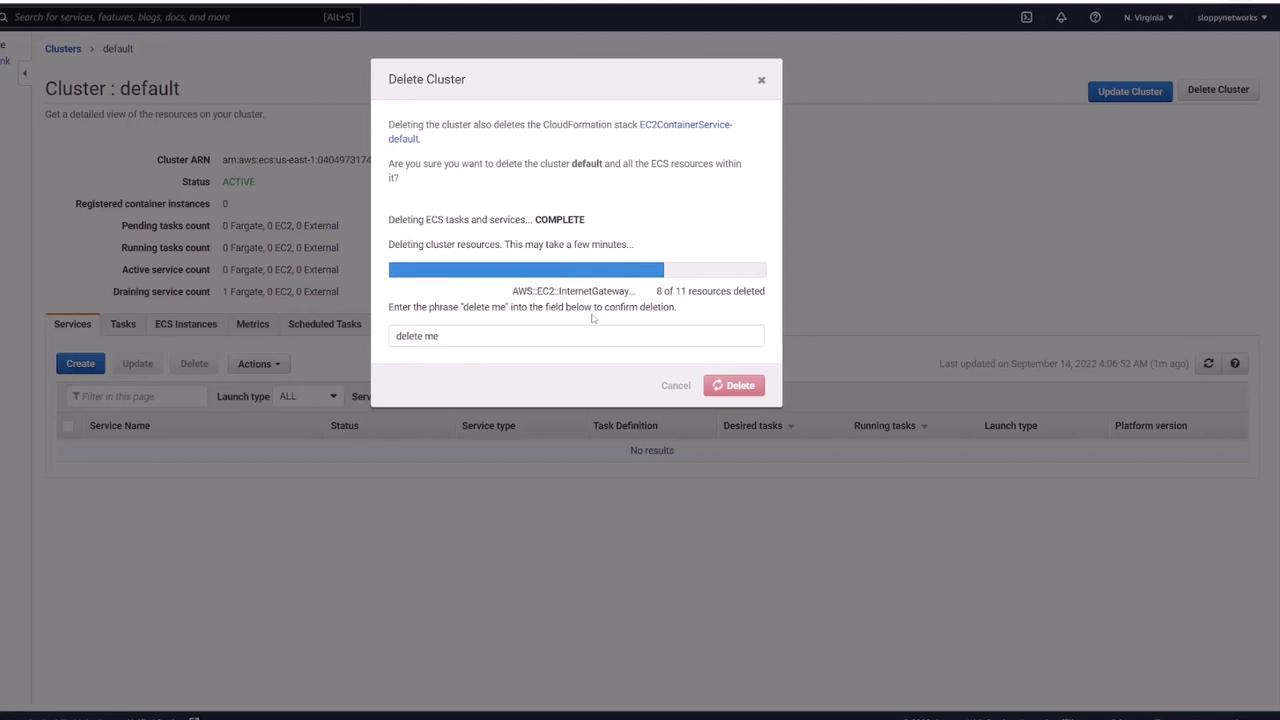

After verification, delete the environment created by the quick start wizard in order to redeploy from scratch:

- In your cluster, select the service and delete it, typing "delete me" to confirm. Ensure that all tasks are removed.

- Delete the cluster.
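The same teardown can be scripted with the AWS CLI, and the order matters: ECS refuses to delete a service whose desired count is above zero (unless `--force` is passed), and refuses to delete a cluster that still has active services or tasks. The hypothetical `cleanupCommands` helper below only assembles the command strings in that order; the cluster and service names are whatever your environment uses.

```javascript
// Hypothetical helper: AWS CLI commands to tear down a demo service and its
// cluster, in the order ECS requires.
function cleanupCommands(cluster, service) {
  return [
    // Scale to zero so the scheduler stops the running tasks.
    `aws ecs update-service --cluster ${cluster} --service ${service} --desired-count 0`,
    // DeleteService requires a desired count of 0 unless --force is used.
    `aws ecs delete-service --cluster ${cluster} --service ${service}`,
    // Block until the service has finished draining and is INACTIVE.
    `aws ecs wait services-inactive --cluster ${cluster} --services ${service}`,
    // A cluster can be deleted only once it has no active services or tasks.
    `aws ecs delete-cluster --cluster ${cluster}`,
  ];
}
```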

With the ECS environment cleared, you are now ready to deploy the application manually.

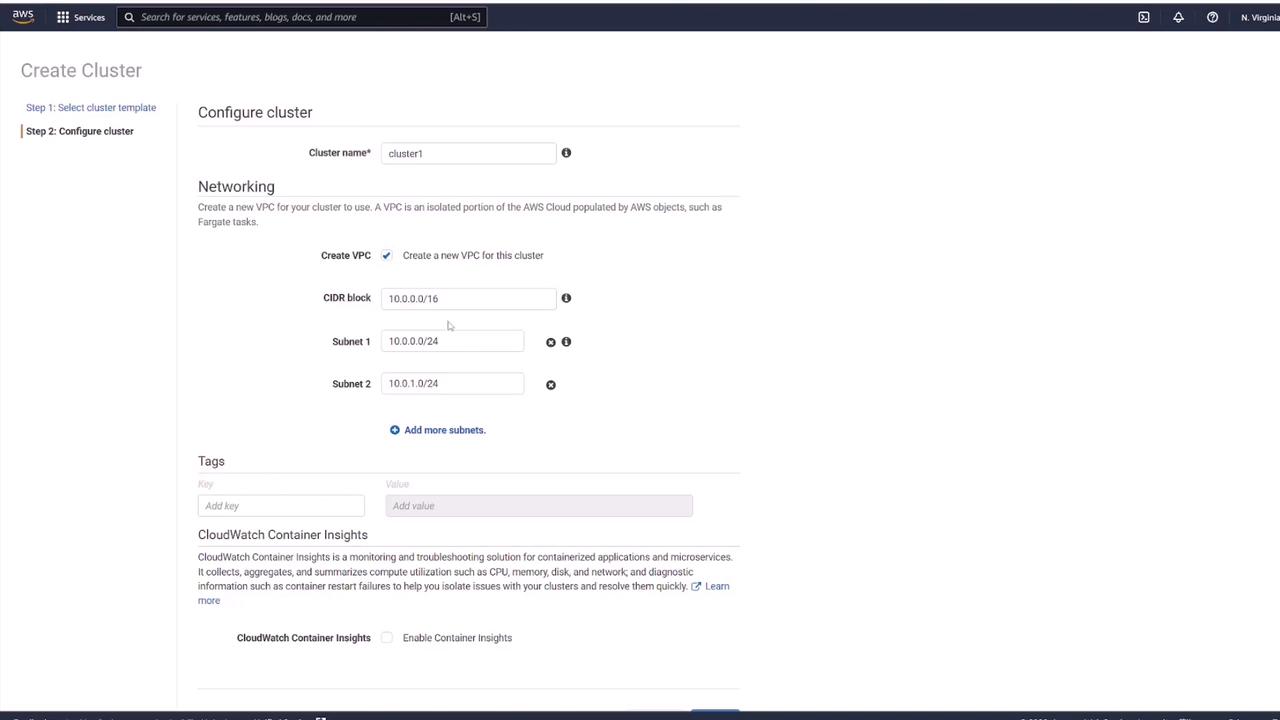

Creating a New ECS Cluster

- In the ECS Console, click Create Cluster.

- Choose the Networking only template if using Fargate. (For EC2, you can choose between the EC2 Linux + Networking and EC2 Windows + Networking templates.)

- Name your cluster (for example, "cluster1") and create a new VPC with default CIDR and subnet settings.

- Click Create.

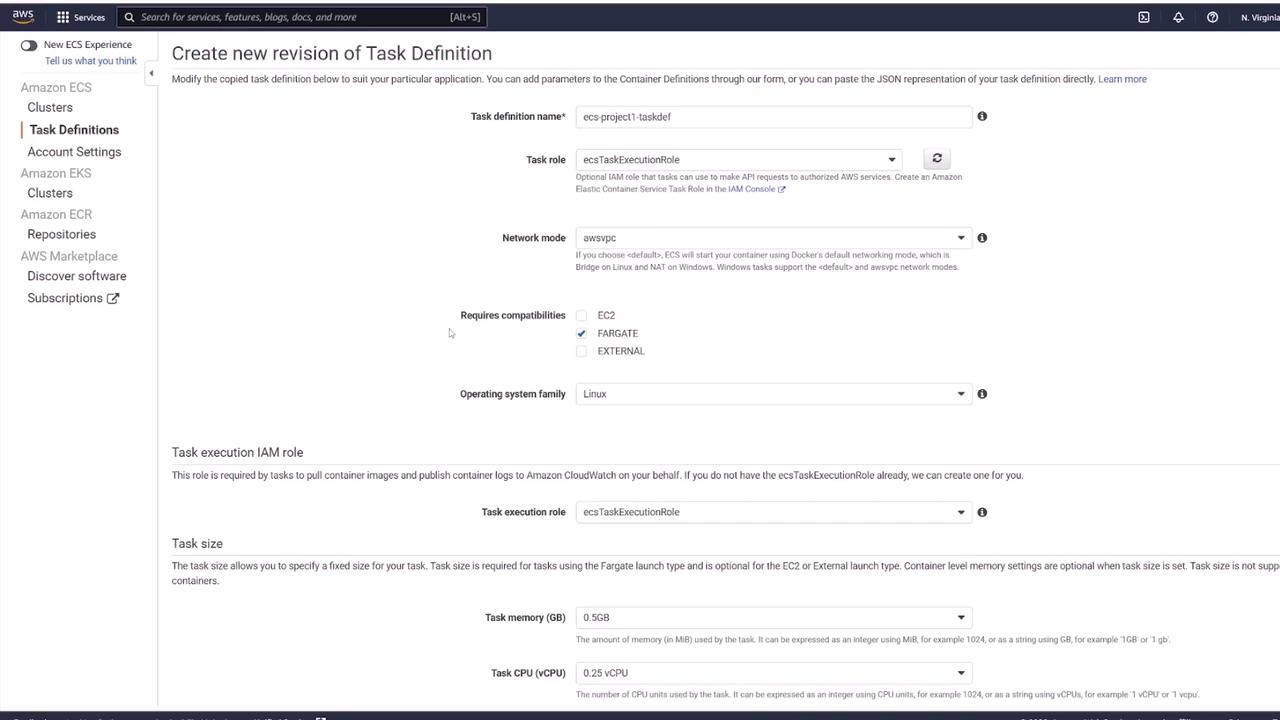

Creating Task Definitions for Your New Cluster

- Navigate to Task Definitions and click Create new Task Definition.

- Select Fargate as the launch type.

- Name the task definition (e.g., "ECS-Project1") and assign the appropriate task execution role.

- Choose Linux as the operating system and allocate modest CPU and memory for the demo (for example, 0.25 vCPU and 0.5 GB, the smallest Fargate size).

- Add a container:

  - Container Name: (e.g., "node-app"; container names may contain only letters, numbers, hyphens, and underscores)

  - Image: Use "kodekloud/ecs-project1"

  - Port Mapping: Set to 3000

After configuring the task definition, click Add and then Create.
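The console form maps onto the fields of the ECS `RegisterTaskDefinition` API. The sketch below assumes a single container, one of the smaller Fargate sizes, and a placeholder account ID in the execution role ARN; `buildFargateTaskDefinition` is a hypothetical helper, and its size table intentionally covers only the 256, 512, and 1024 CPU-unit options.

```javascript
// Valid Fargate task sizes for the smallest CPU options: CPU units -> memory (MiB).
// Larger sizes exist; this table is deliberately partial.
const FARGATE_SIZES = {
  256: [512, 1024, 2048],
  512: [1024, 2048, 3072, 4096],
  1024: [2048, 3072, 4096, 5120, 6144, 7168, 8192],
};

// Hypothetical builder: returns an object shaped like the RegisterTaskDefinition
// input for a single-container Fargate task.
function buildFargateTaskDefinition({ family, containerName, image, port, cpu, memory, executionRoleArn }) {
  const allowedMemory = FARGATE_SIZES[cpu];
  if (!allowedMemory || !allowedMemory.includes(memory)) {
    throw new Error(`unsupported Fargate size: cpu=${cpu}, memory=${memory}`);
  }
  return {
    family,
    requiresCompatibilities: ["FARGATE"],
    networkMode: "awsvpc", // the only network mode Fargate supports
    runtimePlatform: { operatingSystemFamily: "LINUX" },
    cpu: String(cpu), // task-level cpu and memory are passed as strings
    memory: String(memory),
    executionRoleArn, // role ECS uses to pull private images and ship logs
    containerDefinitions: [
      {
        name: containerName,
        image,
        essential: true,
        // hostPort is omitted; awsvpc mode allows leaving it unset
        portMappings: [{ containerPort: port, protocol: "tcp" }],
      },
    ],
  };
}
```

Saved as JSON, an object like this could be passed to `aws ecs register-task-definition --cli-input-json file://taskdef.json`; registering the same family again creates the next revision.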

Creating the ECS Service

- In your new cluster ("cluster1"), go to the Services tab and click Create Service.

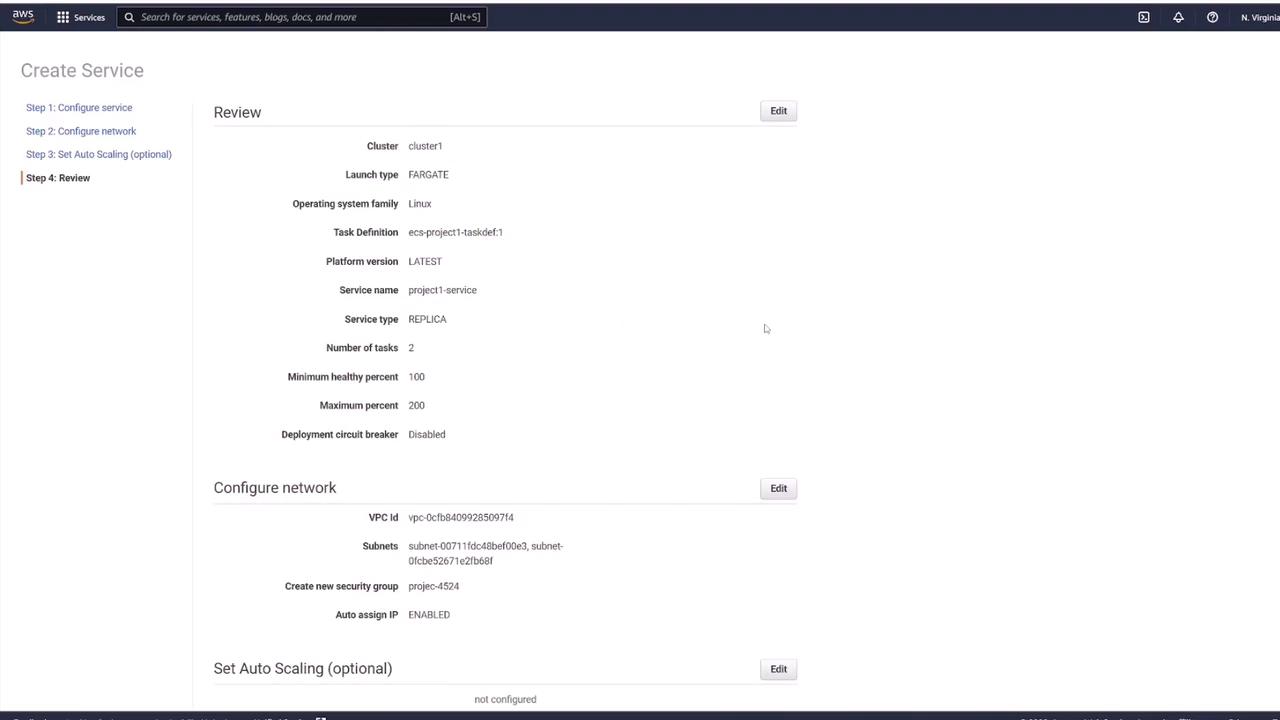

- Configure the following:

  - Launch Type: Fargate

  - Operating System: Linux

  - Task Definition: Select "ECS-Project1" (latest revision)

  - Service Name: (e.g., "project1-service")

  - Number of Tasks: For demonstration purposes, choose 2 tasks.

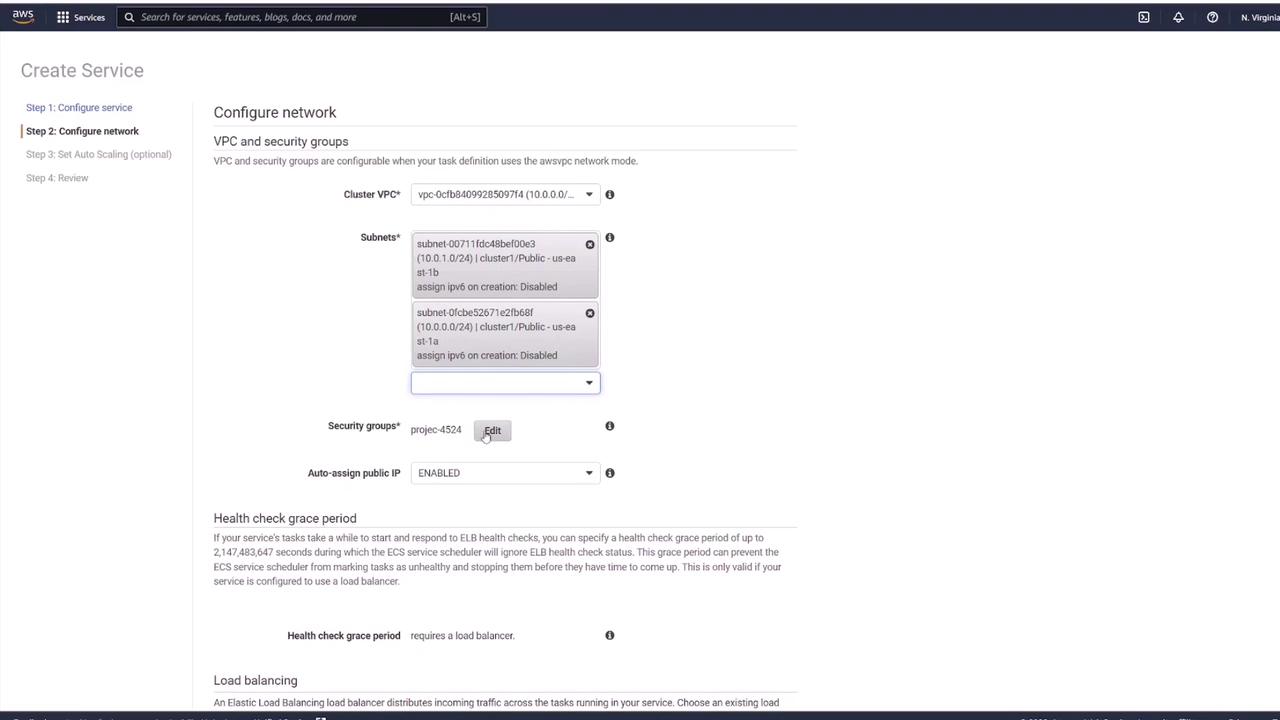

- Set up networking:

  - Select the VPC created earlier.

  - Choose the appropriate subnets.

  - Configure the security group: Change the default setting (typically allowing traffic on port 80) to allow Custom TCP traffic on port 3000 from anywhere (0.0.0.0/0).

- Proceed without a load balancer by selecting No load balancer (this will be discussed later).

- Optionally configure auto scaling, then click Next to review all configurations.

- Finally, click Create Service.
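The service settings above correspond to the ECS `CreateService` input plus one security group ingress rule. This sketch uses hypothetical helper names and assumes placeholder subnet and security group IDs; it shows the request shape and checks that the rule actually covers the container port, a common reason a freshly created task is unreachable.

```javascript
// Hypothetical builder: object shaped like the ECS CreateService input for a
// Fargate service without a load balancer.
function buildServiceRequest({ cluster, serviceName, taskDefinition, desiredCount, subnets, securityGroups }) {
  return {
    cluster,
    serviceName,
    taskDefinition, // a bare family name resolves to the latest ACTIVE revision
    desiredCount,
    launchType: "FARGATE",
    networkConfiguration: {
      awsvpcConfiguration: {
        subnets,
        securityGroups,
        assignPublicIp: "ENABLED", // each task gets its own public IP
      },
    },
  };
}

// Shaped like one IpPermissions entry for EC2 AuthorizeSecurityGroupIngress:
// TCP on a single port from the given CIDR (default: anywhere).
function ingressRule(port, cidr = "0.0.0.0/0") {
  return { IpProtocol: "tcp", FromPort: port, ToPort: port, IpRanges: [{ CidrIp: cidr }] };
}

// True if a TCP rule's port range covers `port` (ignores the "-1" all-traffic case).
function ruleAllowsPort(rule, port) {
  return rule.IpProtocol === "tcp" && rule.FromPort <= port && port <= rule.ToPort;
}
```

With the console's default port 80 rule, `ruleAllowsPort(ingressRule(80), 3000)` is `false`, which matches the need to open port 3000 explicitly.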

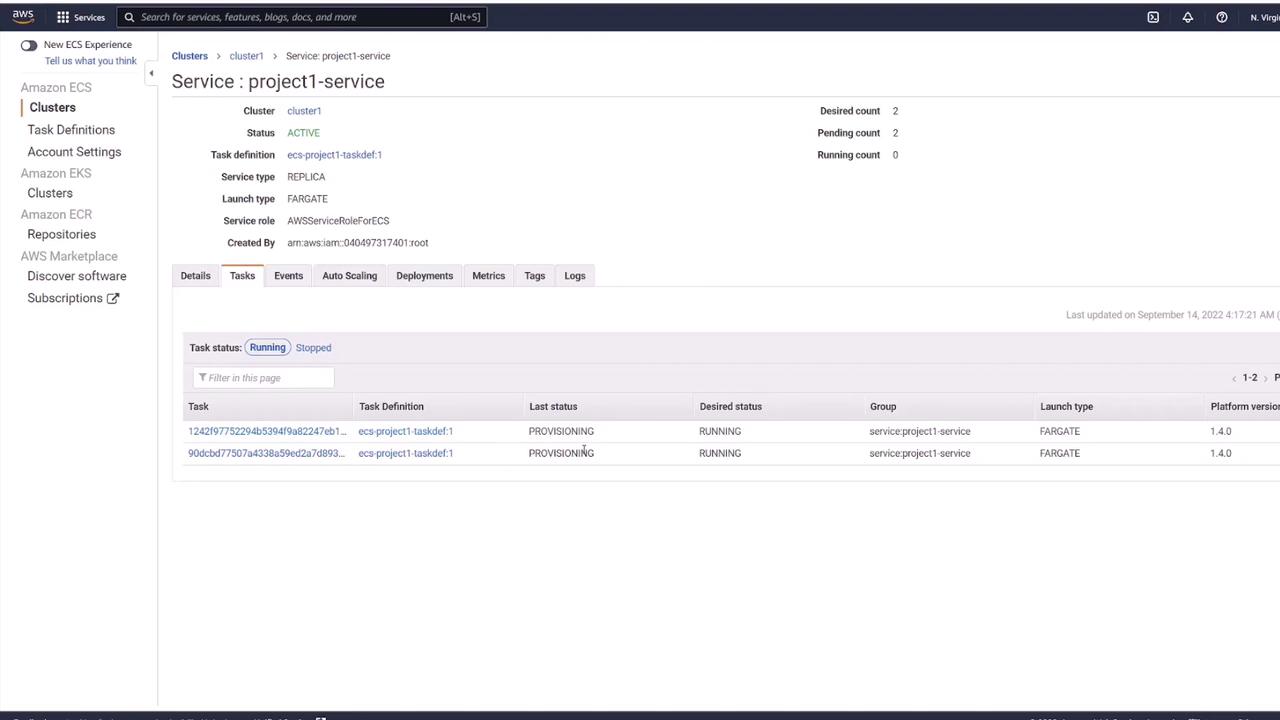

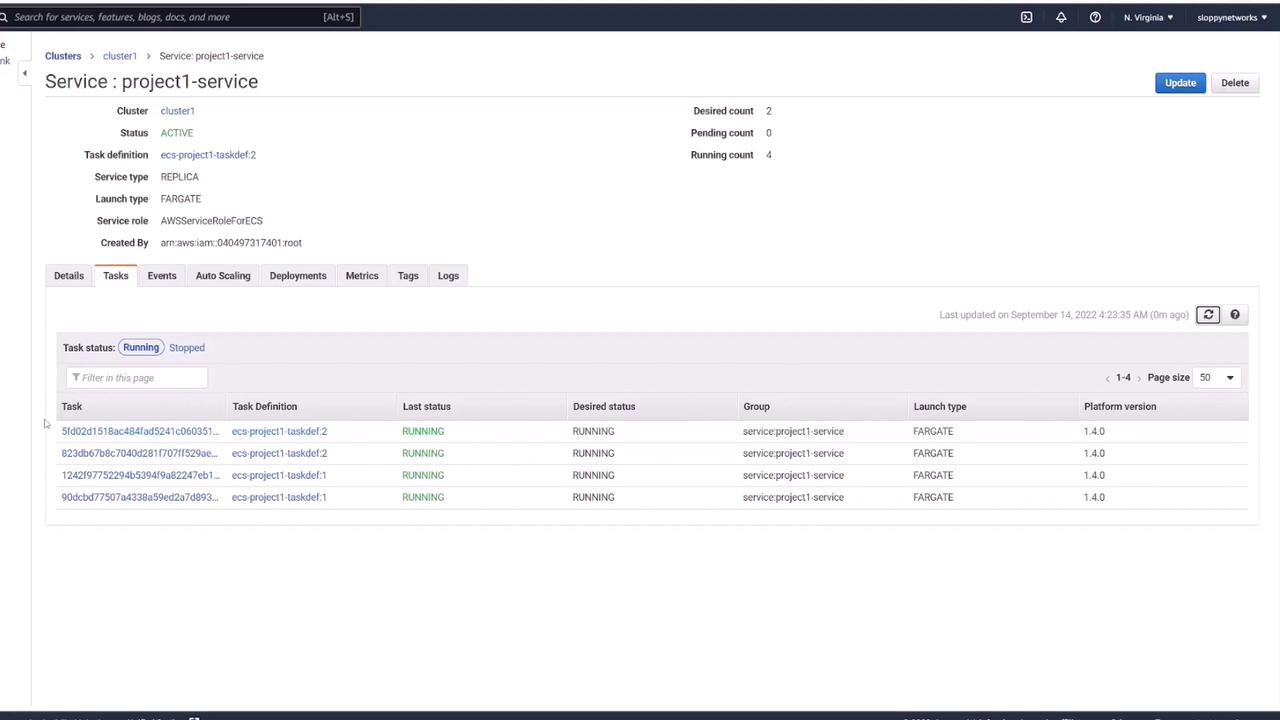

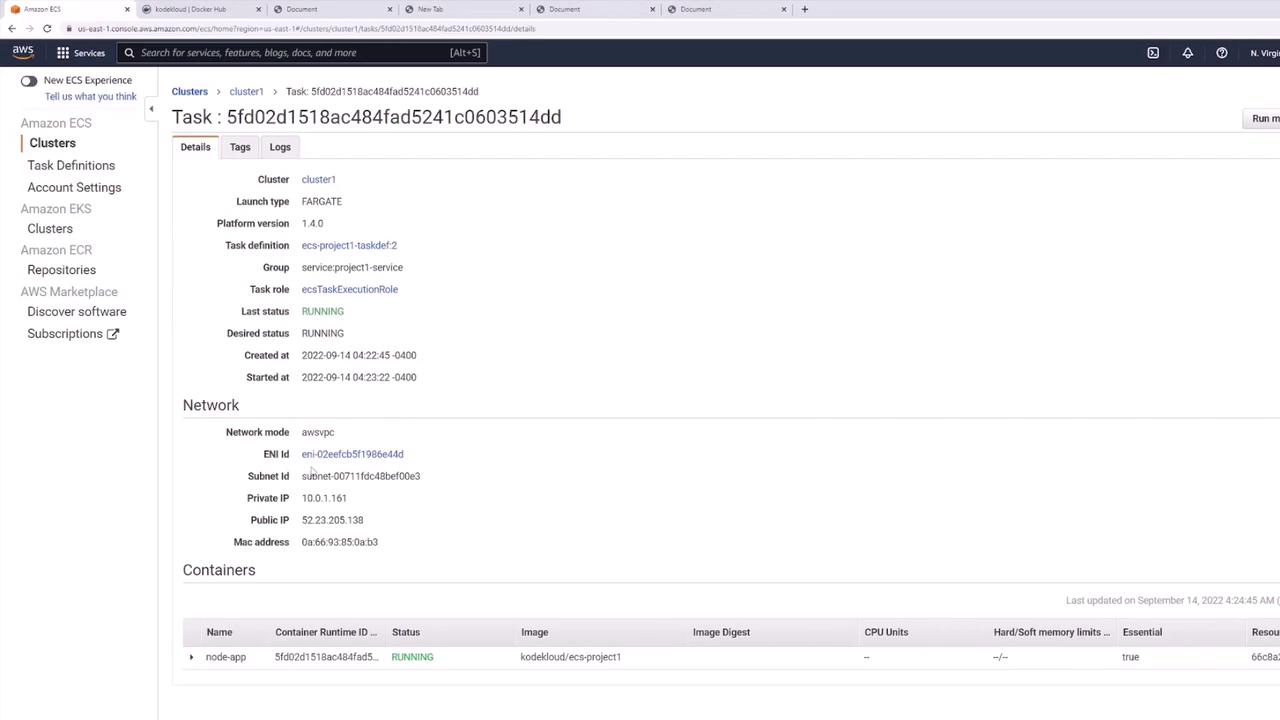

Initially, the console may show no tasks; after a refresh, you should see two tasks being provisioned. Each task receives its own public IP address, so without a load balancer you have to track every address yourself. A load balancer is recommended for production environments because it provides a consistent endpoint and distributes traffic across tasks.

Click on a task to view its details, then copy its public IP address and open it in your browser at port 3000. The expected output is the simple HTML page served by the application. Note that each new deployment generates new public IP addresses, which underscores the importance of using a load balancer in production.
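Without a load balancer, a script needs each task's address. The `DescribeTasks` response lists an elastic network interface for every Fargate task, with the private IP in its attachment details; the public IP is not part of that response and has to be looked up on the network interface itself. The hypothetical `taskNetworkInfo` helper below extracts the interface IDs from a response-shaped object; any sample data fed to it here is made up.

```javascript
// Hypothetical helper: pulls each task's network interface ID and private IP
// out of a DescribeTasks-shaped response object.
function taskNetworkInfo(describeTasksResponse) {
  return describeTasksResponse.tasks.map((task) => {
    const eni = (task.attachments || []).find((a) => a.type === "ElasticNetworkInterface");
    // Attachment details are a list of { name, value } pairs; index them by name.
    const details = Object.fromEntries(((eni && eni.details) || []).map((d) => [d.name, d.value]));
    return {
      taskArn: task.taskArn,
      eniId: details.networkInterfaceId,
      privateIp: details.privateIPv4Address,
    };
  });
}
```

Each `eniId` can then be passed to `aws ec2 describe-network-interfaces --network-interface-ids <eni-id> --query 'NetworkInterfaces[0].Association.PublicIp'` to read the task's current public address.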

Updating Your Application

Suppose you modify the HTML file by adding extra exclamation marks to the H1 tag. The updated HTML might look like this:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <meta http-equiv="X-UA-Compatible" content="IE=edge" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <link rel="stylesheet" href="css/style.css" />
    <title>Document</title>
  </head>
  <body>
    <h1>ECS Project 1!!!!</h1>
  </body>
</html>
```

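To build and push the changed Docker image, use the following commands. You must be logged in with `docker login`, and you can only push to a Docker Hub namespace you own, so substitute your own namespace for `kodekloud`:

```shell
docker build -t kodekloud/ecs-project1 .
docker push kodekloud/ecs-project1
```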

Even after pushing the updated image, the running ECS service continues to use the old image until you force a new deployment. To do this, go to the ECS Console, select your service in the cluster, click Update, and then choose Force new deployment. This instructs ECS to pull the latest image and deploy updated tasks.
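The console's Force new deployment option corresponds to `forceNewDeployment: true` in the `UpdateService` API (`--force-new-deployment` in the AWS CLI). A forced deployment picks up a newly pushed image only when the task definition refers to it by a mutable tag such as `latest`; a reference pinned by digest (`@sha256:...`) keeps running the old image. The hypothetical helper below is a minimal sketch of that request plus the tag-versus-digest check.

```javascript
// Hypothetical helper: builds an UpdateService request that forces a new
// deployment, and reports whether that deployment can pick up a newly pushed
// image (true for tag references, false for digest-pinned references).
function forceDeploymentRequest(cluster, service, containerImage) {
  const pinnedByDigest = containerImage.includes("@sha256:");
  return {
    request: { cluster, service, forceNewDeployment: true },
    picksUpNewPush: !pinnedByDigest,
  };
}
```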

Alternatively, if you update the task definition, create a new revision (e.g., revision 2) and update the service to use it. ECS will then start tasks with the latest configuration, and once health checks pass, the old tasks are terminated.
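During a rolling update, two service parameters bound how many tasks may run, both relative to the desired count: `minimumHealthyPercent` (rounded up) and `maximumPercent` (rounded down). With the defaults of 100 and 200 and two desired tasks, ECS may start two new tasks before stopping the two old ones, which is why up to four tasks can briefly appear. This is a simplified sketch of the arithmetic only, using a hypothetical function name; it does not model health checks or deployment timing.

```javascript
// Sketch of the task-count bounds during an ECS rolling update.
// The lower bound is rounded up and the upper bound is rounded down.
function rollingUpdateBounds(desiredCount, minimumHealthyPercent = 100, maximumPercent = 200) {
  return {
    minRunning: Math.ceil((desiredCount * minimumHealthyPercent) / 100),
    maxRunning: Math.floor((desiredCount * maximumPercent) / 100),
  };
}
```

For example, `rollingUpdateBounds(2)` returns `{ minRunning: 2, maxRunning: 4 }`: both old tasks stay up until replacements are running.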

When new tasks are deployed, they obtain new public IP addresses. While this confirms the update, it also illustrates why a load balancer is essential: it provides a stable endpoint and manages traffic distribution automatically.

Refresh the ECS console to verify that only the desired number of tasks (in this example, two) are running, and that the deployment process has gracefully terminated the old tasks.

Final Notes

This demonstration has shown how to deploy and update a basic application on ECS using both the quick start wizard and manual configuration. Although each ECS task gets a unique IP address, a load balancer is recommended for production to provide a single, stable endpoint and to manage IP changes seamlessly.

After completing the demo, remember to delete the entire service before moving to more complex environments that involve databases, volumes, and load balancing.

Delete the service and confirm that all tasks are removed. The cluster will remain, allowing you to deploy your next application.

Summary

This guide detailed the process of setting up, deploying, updating, and cleaning up an ECS-based application. For production-grade deployments, always consider integrating a load balancer to manage traffic effectively.
