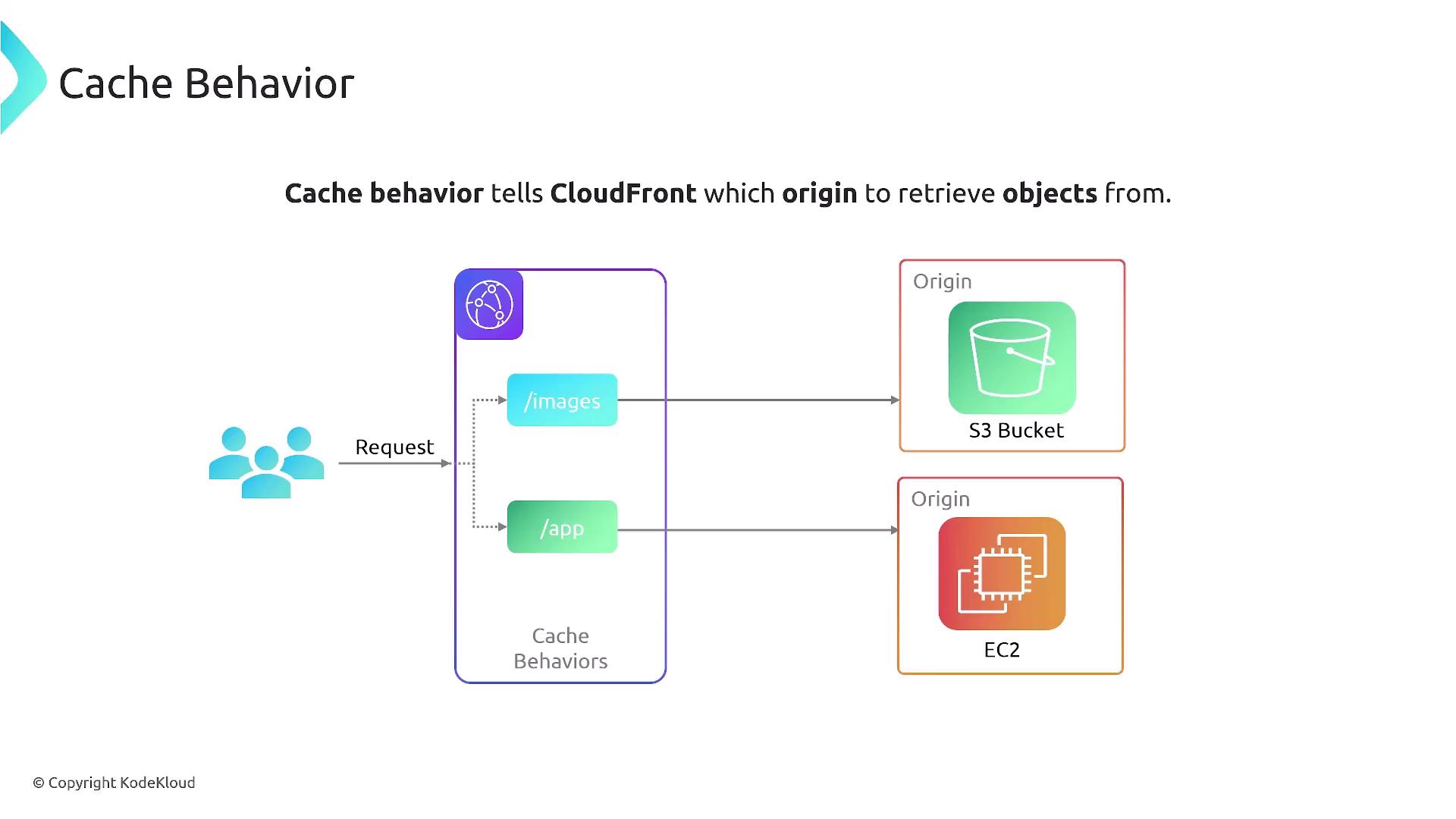

Cache Behavior Overview

CloudFront uses cache behaviors and cache policies to determine which origin an object is retrieved from and how long that object stays fresh. When a request arrives, CloudFront evaluates the URL and other request components to decide whether to serve the content from cache or fetch it from the origin. For example, an image may be cached for a long period (for example, one week) because such assets rarely change. In contrast, dynamic content, such as responses under /app served by an EC2 instance, might be cached for as little as six seconds.
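
To make the idea of behaviors concrete, here is a minimal Python sketch of path-pattern matching. The patterns, origin names, and TTLs are hypothetical values echoing the examples above, and `fnmatchcase` only approximates CloudFront's `*`/`?` wildcards (it also understands `[...]` character classes, which CloudFront path patterns do not). Behaviors are checked in precedence order and the first match wins, with `*` acting as the default behavior.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Behavior:
    path_pattern: str   # CloudFront-style pattern using * and ? wildcards
    origin: str         # hypothetical origin name
    default_ttl: int    # seconds


# Hypothetical behaviors in precedence order; the last one ("*") is the default.
BEHAVIORS = [
    Behavior("images/*.jpg", origin="s3-assets", default_ttl=7 * 24 * 3600),  # one week
    Behavior("app/*", origin="ec2-app", default_ttl=6),                        # six seconds
    Behavior("*", origin="s3-assets", default_ttl=24 * 3600),                  # default behavior
]


def select_behavior(path: str) -> Behavior:
    """Return the first behavior whose path pattern matches the request path."""
    normalized = path.lstrip("/")  # treat "/images/x.jpg" and "images/x.jpg" alike
    for behavior in BEHAVIORS:
        if fnmatchcase(normalized, behavior.path_pattern):  # case-sensitive match
            return behavior
    raise LookupError(path)  # unreachable while a "*" default behavior exists


print(select_behavior("/images/logo.jpg").default_ttl)  # 604800
print(select_behavior("/app/cart").origin)              # ec2-app
```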

The diagram below demonstrates how CloudFront’s caching behavior defines which content is cached and which is not:

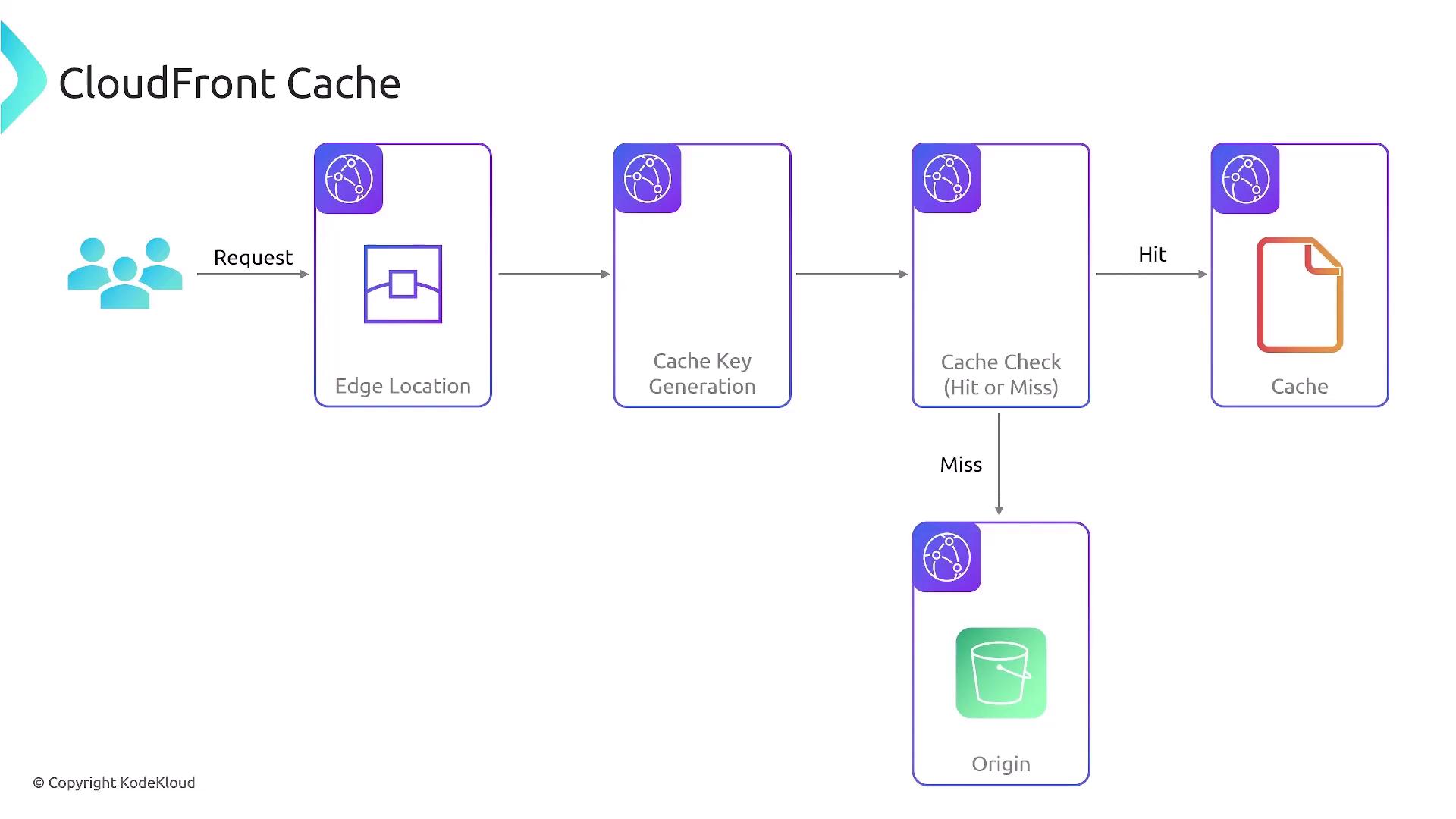

Request Flow and Cache Key Generation

When a user requests a URL, DNS routing directs the request to the nearest CloudFront edge location. The edge location generates a cache key from the request details. If content for that key is already cached (a cache hit), it is served immediately; otherwise (a cache miss), CloudFront retrieves the content from the origin, caches it, and then delivers it to the user. The flowchart below outlines this process step by step, from the user request at the edge location through cache key generation and the hit-or-miss check to fetching from the origin when needed:

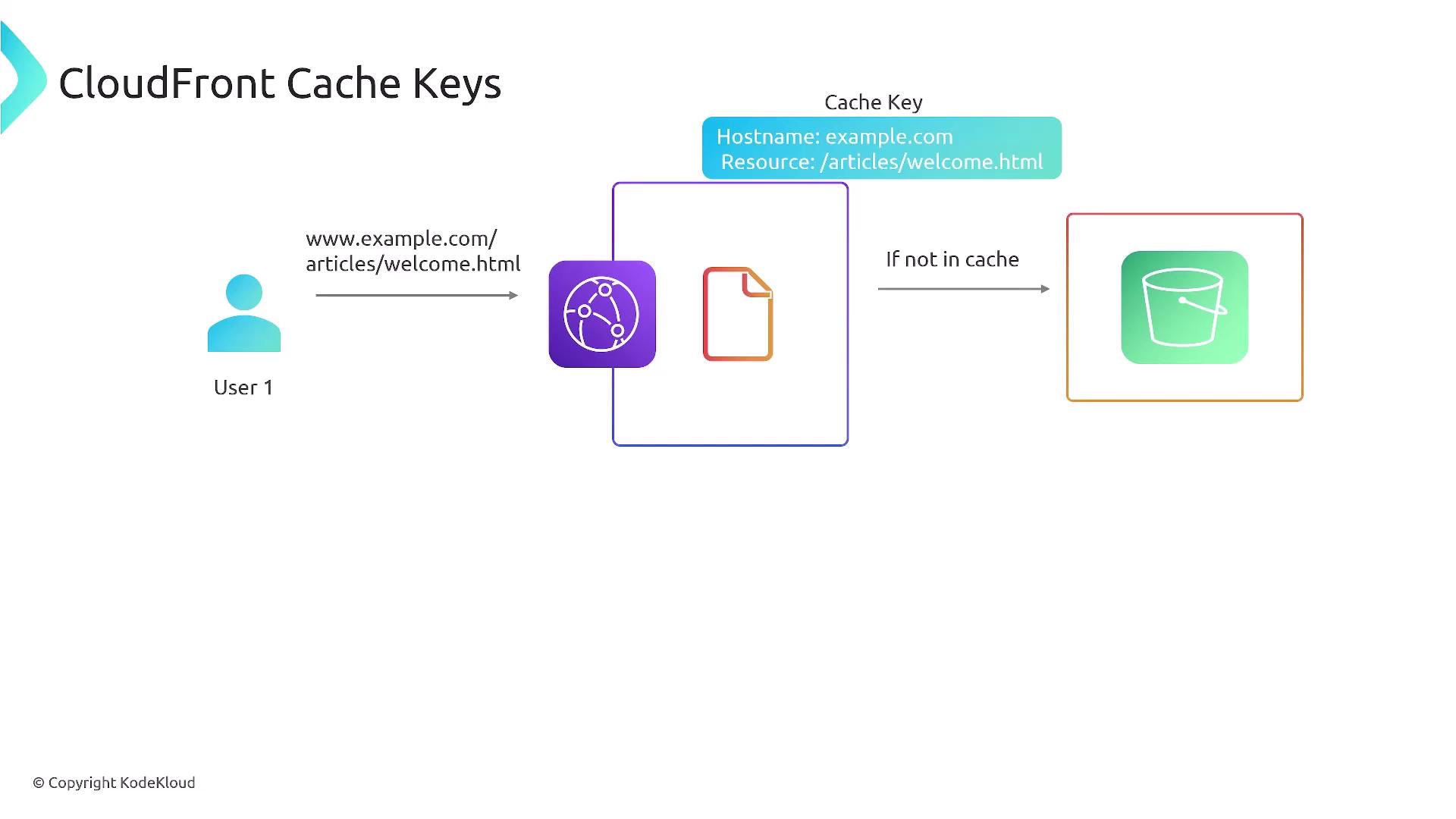
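
The flow above can be modeled in a few lines. This is a simplified sketch, not CloudFront's implementation: it assumes a single edge location, one fixed TTL for every object, a cache key built only from host and path, and a hypothetical `fetch_from_origin` callback standing in for a real origin. The injectable `clock` makes expiry easy to test.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Simplified model of one edge location's cache (not CloudFront's implementation)."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin   # hypothetical callback standing in for the origin
        self._ttl = ttl                   # one fixed TTL for every object, in seconds
        self._clock = clock
        self._store: Dict[str, Tuple[bytes, float]] = {}  # cache key -> (body, expiry time)
        self.hits = 0
        self.misses = 0

    @staticmethod
    def cache_key(host: str, path: str) -> str:
        # Minimal key: host + path. Real cache keys can also include query strings,
        # headers, and cookies, depending on the cache policy.
        return f"{host}{path}"

    def get(self, host: str, path: str) -> bytes:
        key = self.cache_key(host, path)
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[1]:
            self.hits += 1            # cache hit: serve directly from the edge
            return entry[0]
        self.misses += 1              # cache miss or expired entry: go to the origin
        body = self._fetch(path)
        self._store[key] = (body, now + self._ttl)  # cache it, then deliver it
        return body


cache = EdgeCache(lambda path: b"<html>...</html>", ttl=60)
cache.get("example.com", "/articles/welcome.html")  # miss: fetched from the origin
cache.get("example.com", "/articles/welcome.html")  # hit: served from the edge
print(cache.hits, cache.misses)  # 1 1
```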

For example, a request for example.com/articles/welcome.html uses the entire URL as its cache key. If URLs are randomized (for example, by appending a random number as a query parameter), every request produces a new cache key and therefore a cache miss, which lowers the cache hit ratio. Keeping URLs stable, especially when dynamic URL parameters are unnecessary, lets repeated requests share a single cached object.

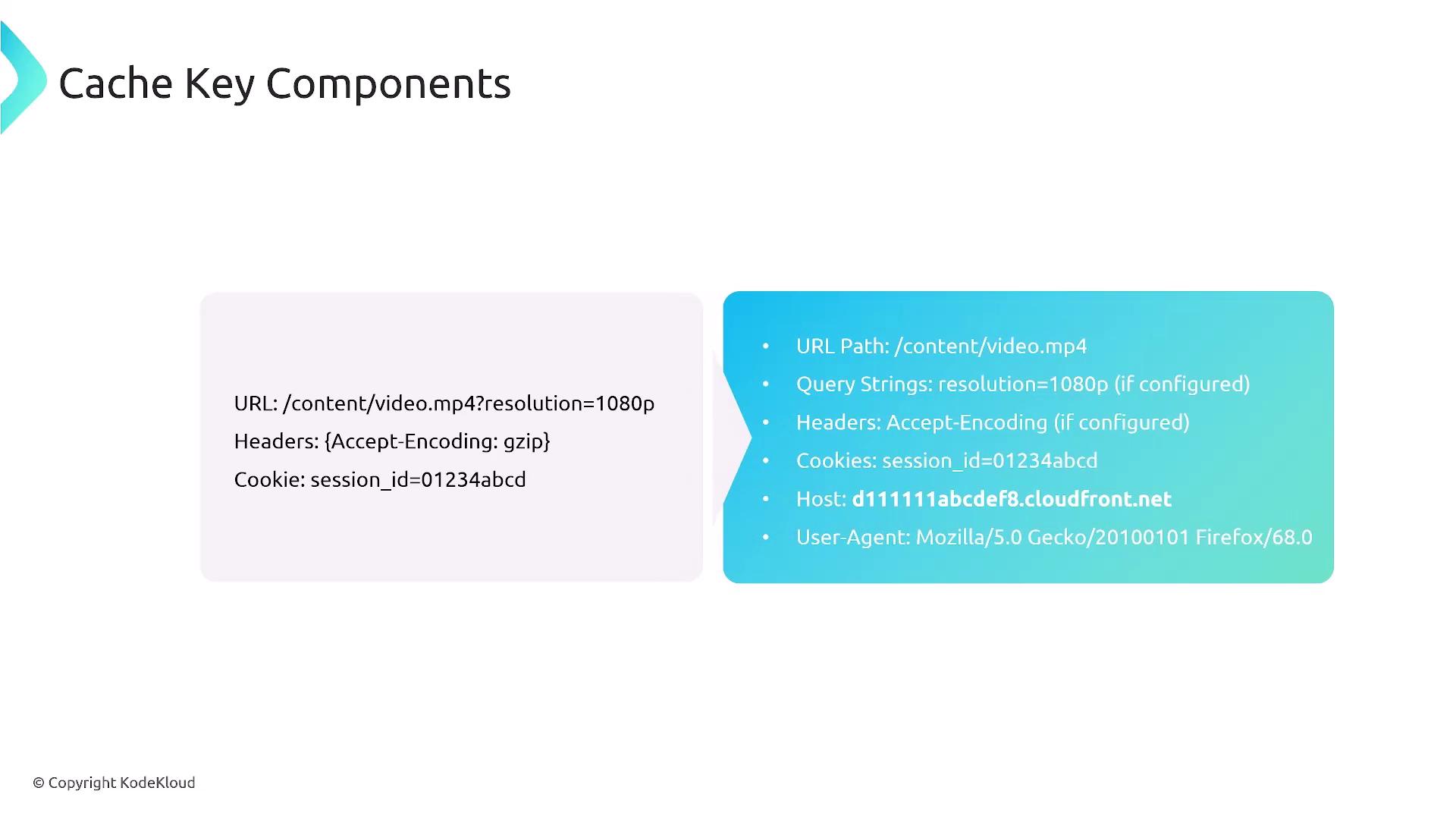
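
A quick way to see the effect of randomized URLs is to count hits when the full URL, including the query string, is the cache key. This sketch assumes nothing expires during the run; the URLs are illustrative.

```python
def hit_ratio(urls):
    """Fraction of requests served from cache when the full URL is the cache key."""
    seen = set()
    hits = 0
    for url in urls:
        if url in seen:
            hits += 1        # key already cached: cache hit
        else:
            seen.add(url)    # first request for this key: cache miss
    return hits / len(urls) if urls else 0.0


static = ["example.com/articles/welcome.html"] * 100
randomized = [f"example.com/articles/welcome.html?r={i}" for i in range(100)]
print(hit_ratio(static))      # 0.99
print(hit_ratio(randomized))  # 0.0
```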

Components of a Cache Key

A cache key can be composed of several elements, including the URL path, query strings, headers, cookies, and the host. For instance, a query string parameter like resolution=1080p or a cookie containing a session ID may be part of the cache key. CloudFront also supports optimizations such as compression to reduce data transfer.

The diagram below outlines all the possible components that can form a cache key:

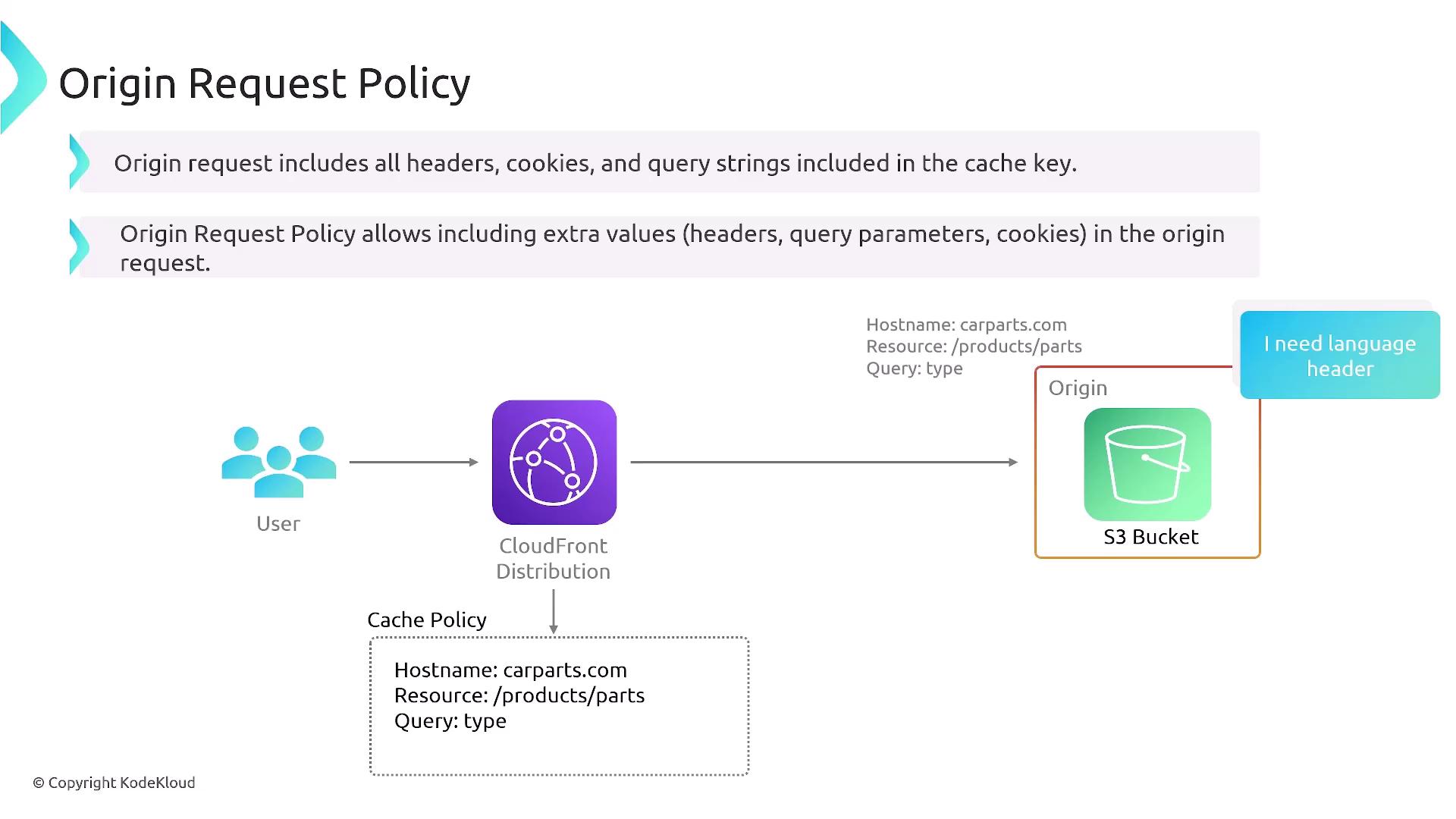
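
The sketch below assembles a cache key from the host, the path, and allow-listed query strings, headers, and cookies. The function name, the allow-list parameters, and the `|`-joined key format are assumptions for illustration; sorting the selected values so that parameter order does not matter is a choice of this sketch, not a statement about how CloudFront normalizes requests.

```python
from urllib.parse import parse_qsl, urlsplit


def build_cache_key(url, headers, cookies,
                    query_allowlist=(), header_allowlist=(), cookie_allowlist=()):
    """Hypothetical helper: host + path + allow-listed query strings, headers, cookies."""
    parts = urlsplit(url)
    # Keep only the query parameters named in the allow-list.
    query = sorted((k, v) for k, v in parse_qsl(parts.query) if k in query_allowlist)
    # Header names are case-insensitive, so compare them in lowercase.
    wanted = {h.lower() for h in header_allowlist}
    hdrs = sorted((k.lower(), v) for k, v in headers.items() if k.lower() in wanted)
    cks = sorted((k, v) for k, v in cookies.items() if k in cookie_allowlist)
    return "|".join([parts.netloc, parts.path, repr(query), repr(hdrs), repr(cks)])


key = build_cache_key(
    "https://example.com/video.mp4?resolution=1080p&utm_source=mail",
    headers={"User-Agent": "demo"},
    cookies={"session-id": "abc123"},
    query_allowlist=("resolution",),
    cookie_allowlist=("session-id",),
)
print(key)
```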

Understanding Cache and Origin Request Policies

While the cache policy dictates how the cache key is built and how long cached objects live, the origin request policy specifies which headers, cookies, and query strings CloudFront forwards to the origin. For example, if your origin requires a specific language header, you can configure that header in the origin request policy. Values in the cache key are forwarded to the origin automatically, but values added only through the origin request policy do not become part of the cache key. If responses should also be cached separately per language, the language header must be included in the cache policy as well.
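
The split between the two policies can be modeled as two sets of header names. In this hypothetical helper, headers named in the cache policy become part of the cache key and are also forwarded, while headers named only in the origin request policy are forwarded without affecting the key. Only headers are modeled; cookies and query strings follow the same pattern, and special cases such as `Accept-Encoding` handling are ignored.

```python
def apply_policies(request_headers, cache_policy_headers, origin_request_policy_headers):
    """Return (headers in the cache key, headers forwarded to the origin).

    Header names are lowercased because HTTP header names are case-insensitive.
    """
    present = {k.lower(): v for k, v in request_headers.items()}
    in_key = {h.lower() for h in cache_policy_headers}
    # Everything in the cache key is forwarded, plus the origin-request-only headers.
    forwarded_names = in_key | {h.lower() for h in origin_request_policy_headers}
    key_headers = {h: present[h] for h in sorted(in_key) if h in present}
    forwarded = {h: present[h] for h in sorted(forwarded_names) if h in present}
    return key_headers, forwarded


request = {"Accept-Language": "de-DE", "User-Agent": "demo"}
print(apply_policies(request, [], ["Accept-Language"]))
# ({}, {'accept-language': 'de-DE'})  forwarded, but not part of the cache key
```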

Potential Caching Issues and Mitigation Strategies

CloudFront caching may face several challenges, including stale content and increased origin load due to low cache hit ratios. Below are common scenarios along with recommended solutions.

If CloudFront serves outdated content because the TTL (time-to-live) is set too high, consider the following approaches:

- Invalidate the cache for specific files.

- Implement versioning within your filenames.

- Adjust the TTL values appropriately.
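
When adjusting TTLs, it helps to know how the origin's `Cache-Control` header interacts with the minimum, default, and maximum TTL settings. The function below is a simplified sketch based on CloudFront's documented expiration rules: `s-maxage` is preferred over `max-age`, the value is clamped between the minimum and maximum TTL, and the default TTL applies when the origin sends neither. It deliberately ignores directives such as `no-cache`, `no-store`, and `private`, as well as the `Expires` header.

```python
import re
from typing import Optional


def effective_ttl(cache_control: Optional[str], min_ttl: int, default_ttl: int,
                  max_ttl: int) -> int:
    """Approximate how long an edge keeps an object, in seconds (simplified model)."""
    if cache_control:
        # s-maxage (aimed at shared caches) takes precedence over max-age.
        for directive in ("s-maxage", "max-age"):
            match = re.search(rf"(?:^|[,\s]){directive}\s*=\s*(\d+)",
                              cache_control, re.IGNORECASE)
            if match:
                # Clamp the origin's value into [min_ttl, max_ttl].
                return max(min_ttl, min(int(match.group(1)), max_ttl))
    # No usable directive from the origin: fall back to the default TTL.
    return default_ttl


print(effective_ttl("public, max-age=30", min_ttl=60, default_ttl=86400, max_ttl=31536000))  # 60
print(effective_ttl(None, min_ttl=0, default_ttl=86400, max_ttl=31536000))                   # 86400
```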

Dynamic content with frequently changing URLs can result in cache misses and higher origin load. To mitigate this:

- Optimize cache keys by removing unnecessary query parameters.

- Configure CloudFront to ignore certain query parameters to improve cache hit ratios.

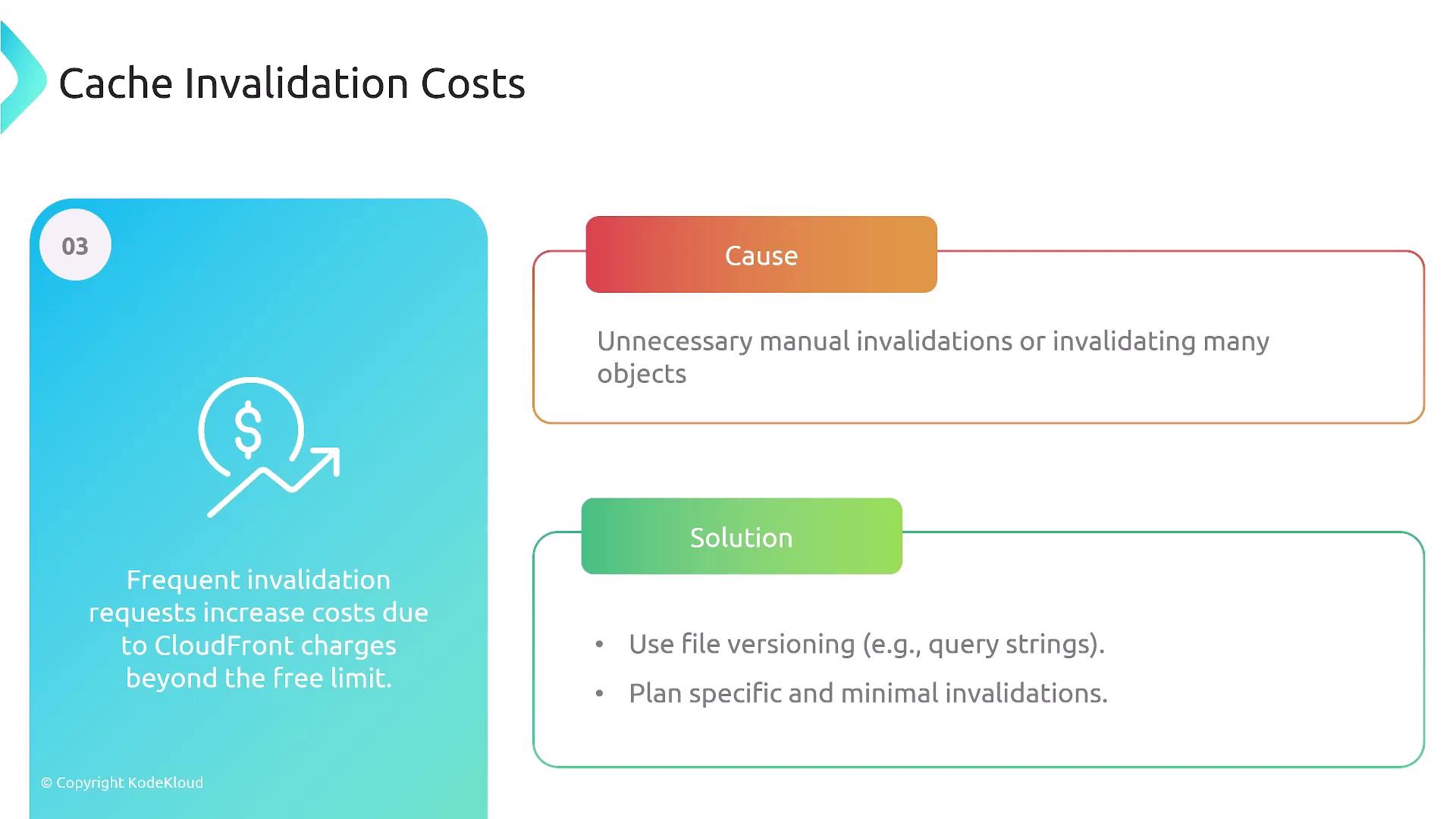
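
To illustrate why ignoring unnecessary query parameters helps, the sketch below counts how many distinct cache keys a set of requests produces with and without an allow-list. Each distinct key costs at least one cache miss, so fewer keys means more hits. The URLs and the `utm_*` tracking parameters are illustrative assumptions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit


def normalize(url, allowed_params):
    """Drop every query parameter that is not allow-listed (hypothetical helper)."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k in allowed_params)
    query = f"?{urlencode(kept)}" if kept else ""
    return f"{parts.netloc}{parts.path}{query}"


def distinct_keys(urls, allowed_params=None):
    if allowed_params is None:
        return len(set(urls))  # every query parameter is part of the key
    return len({normalize(u, allowed_params) for u in urls})


requests = [
    "https://example.com/products?id=42&utm_source=mail",
    "https://example.com/products?id=42&utm_source=ads",
    "https://example.com/products?utm_campaign=spring&id=42",
    "https://example.com/products?id=7&utm_source=mail",
]
print(distinct_keys(requests))          # 4
print(distinct_keys(requests, {"id"}))  # 2
```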

While cache invalidation is useful, frequent invalidation requests can increase costs. Instead of relying on mass invalidations, consider versioning filenames so that each update is published under a new URL and the old cached copy no longer needs to be invalidated.

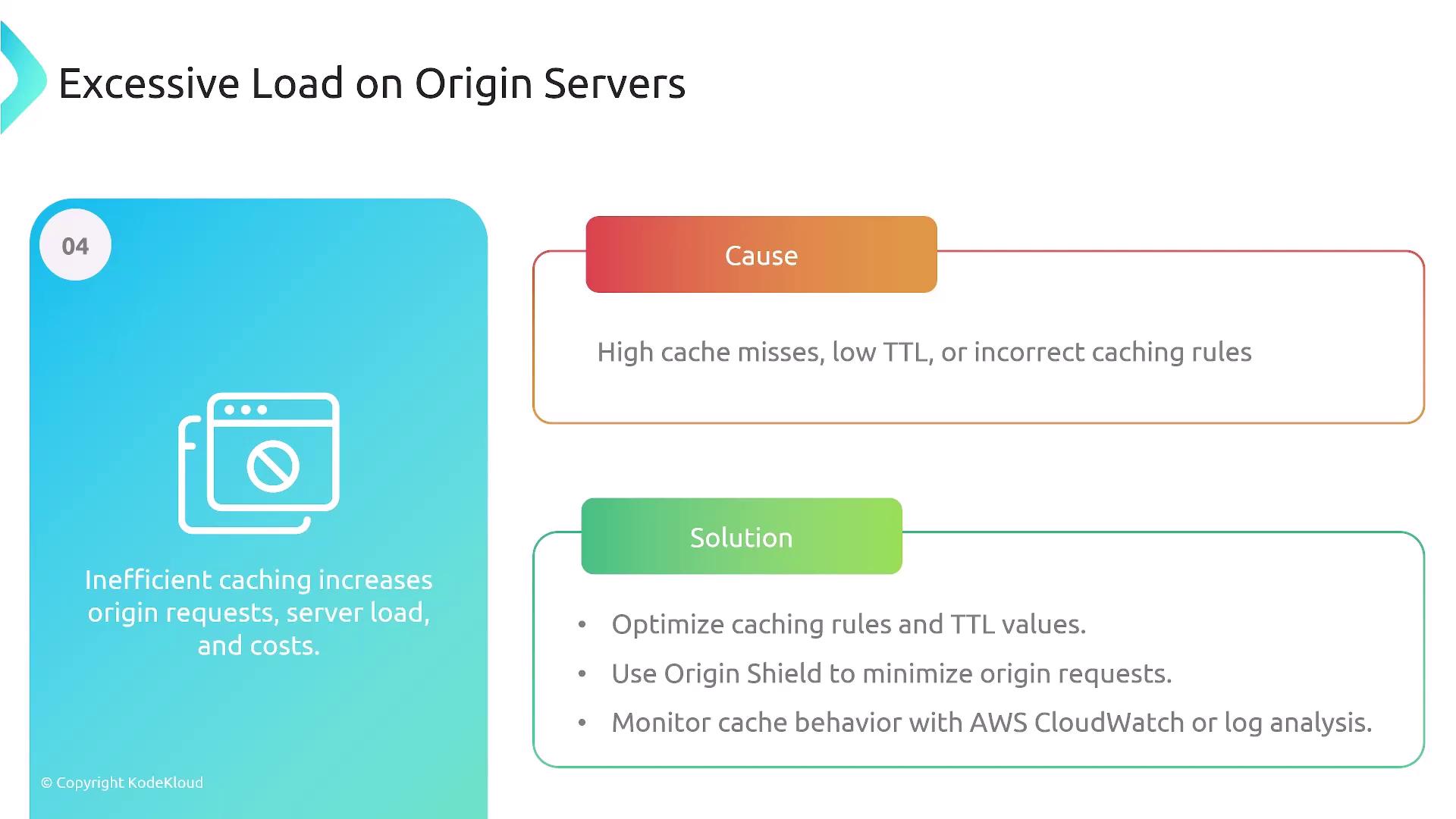
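
One common way to version filenames is to embed a hash of the file's content in the name, so any change to the content yields a new URL and therefore a fresh cache key. A minimal sketch, assuming SHA-256 truncated to eight hex characters (both arbitrary choices) and a hypothetical `versioned_name` helper:

```python
import hashlib
from pathlib import PurePosixPath


def versioned_name(filename: str, content: bytes, digest_len: int = 8) -> str:
    """Insert a short content hash before the extension: app.js -> app.<hash>.js."""
    path = PurePosixPath(filename)
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    return str(path.with_name(f"{path.stem}.{digest}{path.suffix}"))


print(versioned_name("static/app.js", b"console.log('v1');"))
print(versioned_name("static/app.js", b"console.log('v2');"))  # different name, new cache key
```

Pages that reference the asset must point at the new name, which is usually handled by the build step that produces the hashed file.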

High cache miss rates can lead to an excessive load on origin servers. Mitigation strategies include:

- Optimizing caching configurations.

- Adjusting TTL values.

- Implementing features such as Origin Shield, which acts as an additional caching layer to reduce the burden on your origin servers.
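
The benefit of an extra caching layer can be shown with a toy model in which several edge caches either call the origin directly or share one intermediate cache standing in for Origin Shield. This is an assumption-heavy sketch: it ignores TTLs and collapses CloudFront's regional edge caches and other tiers into a single optional layer.

```python
class Cache:
    """Toy cache that asks its upstream (another cache or the origin) on a miss."""

    def __init__(self, upstream):
        self._upstream = upstream
        self._store = {}

    def get(self, key):
        if key not in self._store:          # miss: ask the next layer up
            self._store[key] = self._upstream(key)
        return self._store[key]


def origin_requests(num_edges, keys, use_shield):
    """Count origin fetches when every edge requests every key once."""
    count = 0

    def origin(key):
        nonlocal count
        count += 1
        return f"body:{key}"

    # With a shield, all edges share one intermediate cache in front of the origin.
    upstream = Cache(origin).get if use_shield else origin
    edges = [Cache(upstream) for _ in range(num_edges)]
    for edge in edges:
        for key in keys:
            edge.get(key)
    return count


print(origin_requests(10, ["/a", "/b"], use_shield=False))  # 20
print(origin_requests(10, ["/a", "/b"], use_shield=True))   # 2
```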