The Need for Global Content Delivery

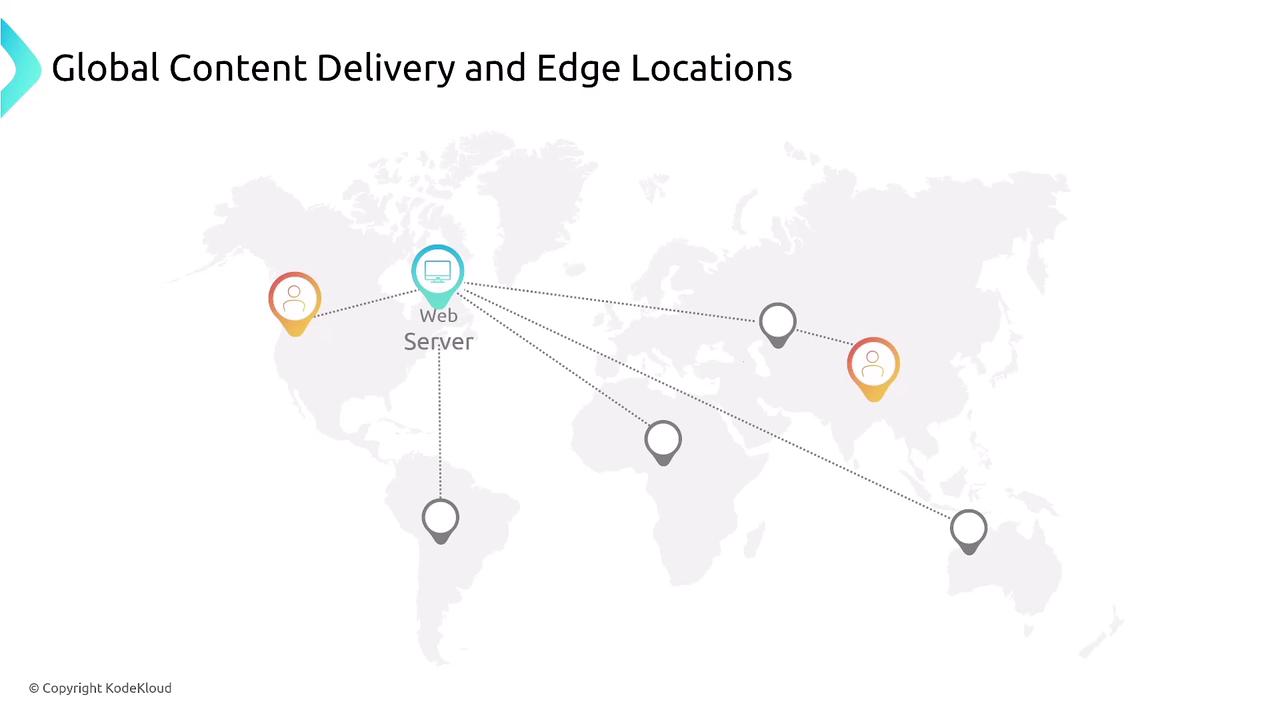

When a user located far from a web server requests content, the physical distance adds latency to every round trip between the browser and the server. For instance, a request originating in Asia for content hosted in North America can take noticeably longer than the same request served from a location close to the user. Instead of deploying full-featured web servers in every region, CloudFront caches copies of static content (such as images, videos, and HTML files) at locations worldwide. The diagram above illustrates this process, showing original source content being distributed to edge servers around the globe.
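To put rough numbers on the distance argument, here is a small Python sketch that computes the minimum round-trip time imposed by signal propagation alone. It assumes signals travel through optical fiber at about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum) and uses illustrative path lengths rather than measured routes. Real paths are longer than the straight-line distance and add routing, queuing, and processing delays, so observed latency is higher.

```python
def min_round_trip_ms(path_km: float, fiber_km_per_s: float = 200_000.0) -> float:
    """Lower bound on round-trip time in milliseconds from propagation delay alone.

    Assumes ~200,000 km/s signal speed in optical fiber and ignores routing
    detours, queuing, handshakes, and server processing time.
    """
    one_way_seconds = path_km / fiber_km_per_s
    return 2 * one_way_seconds * 1000


# Illustrative path lengths, not measured routes.
for label, km in [("distant origin (~11,000 km)", 11_000), ("nearby edge (~500 km)", 500)]:
    print(f"{label}: at least {min_round_trip_ms(km):.0f} ms per round trip")
```

Because a first page load typically needs several round trips (DNS lookup, TCP and TLS handshakes, then the HTTP request itself), the gap between a distant origin and a nearby edge is multiplied several times over.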

How CloudFront Works

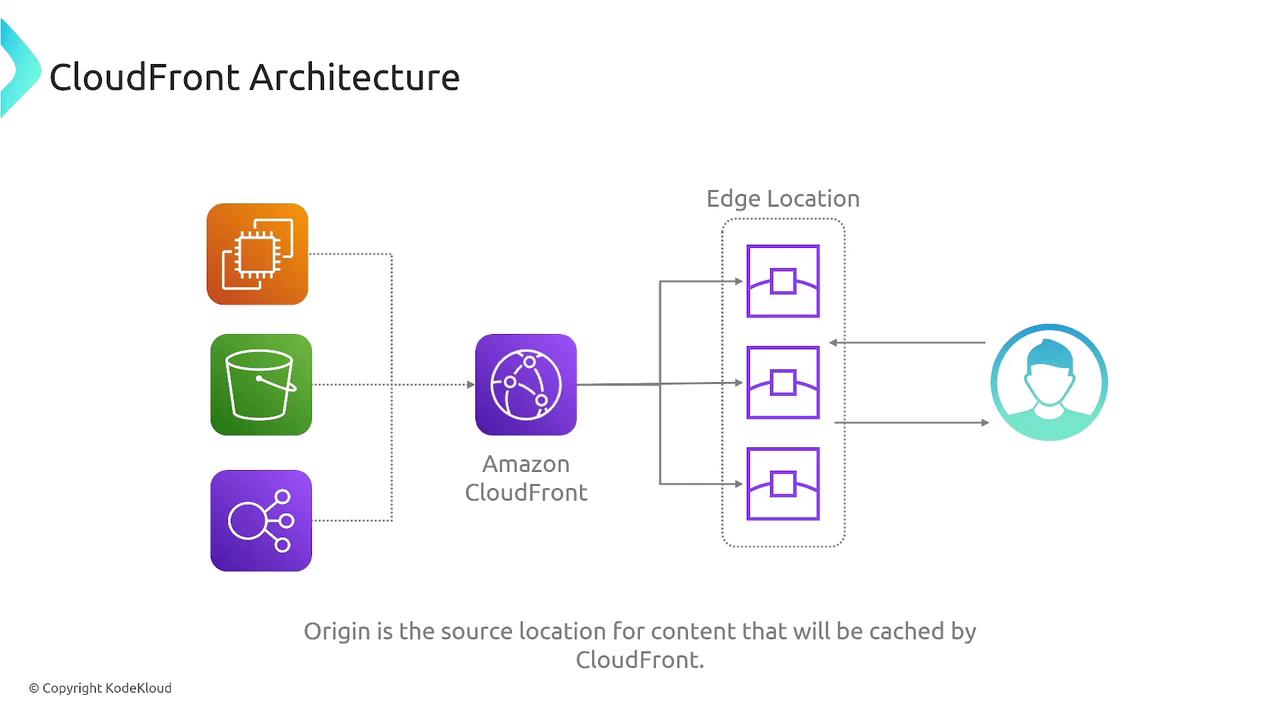

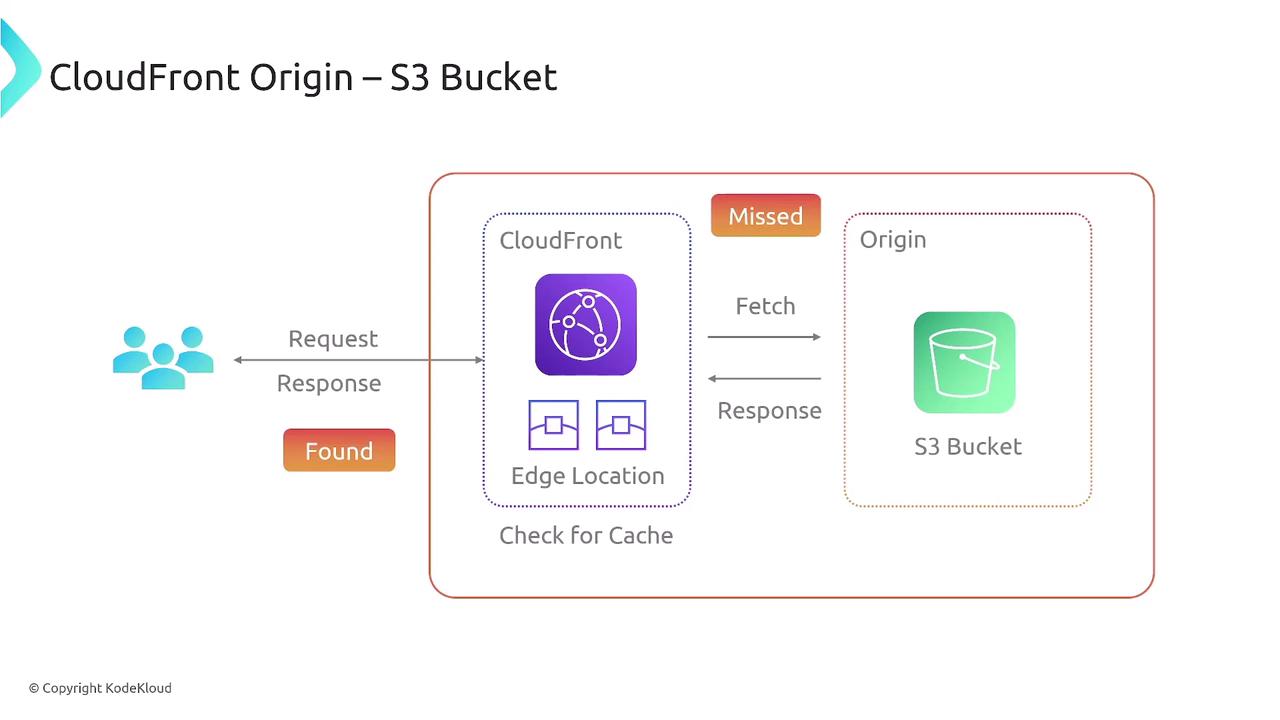

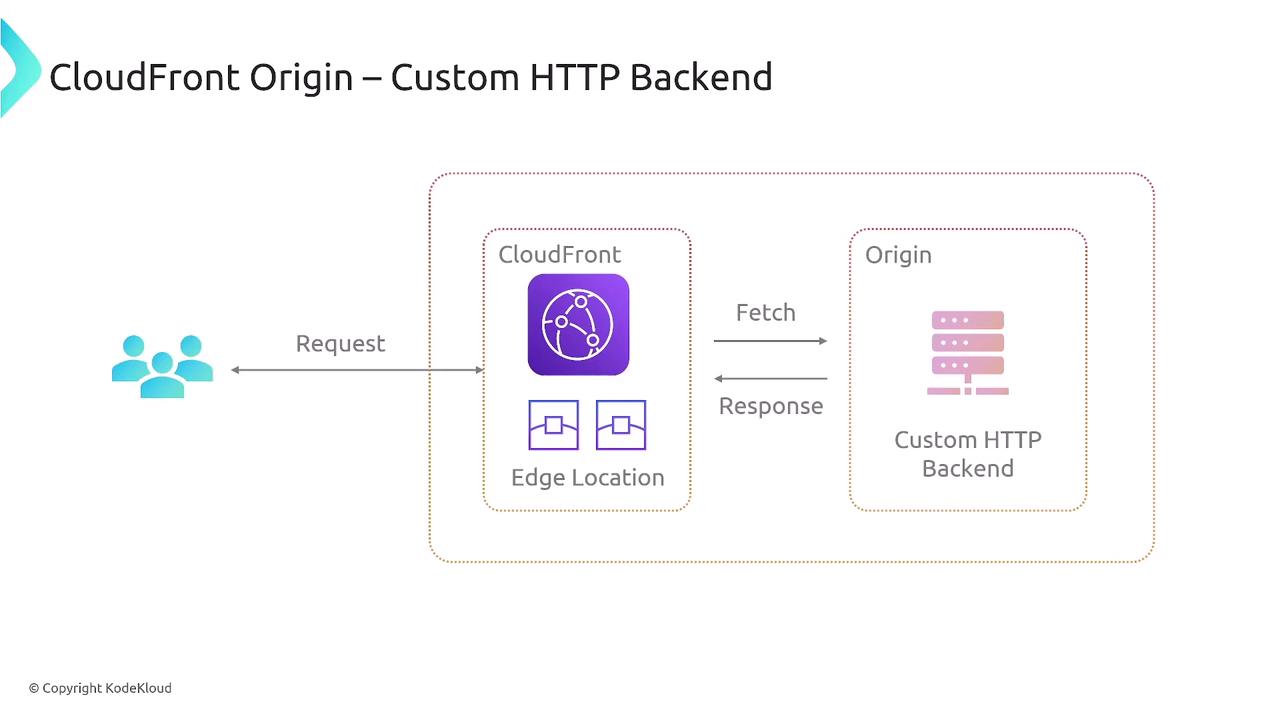

CloudFront reduces latency by caching content at edge locations and serving each user from a nearby one. When a user requests content, DNS resolution directs the request to the edge location that can serve it with the lowest latency, even if the origin (such as your web server or S3 bucket) is located elsewhere. This redirection is transparent to the user's browser. The architecture of CloudFront consists of the following key components:

- Origin: The source of your original content.

- Distribution Settings: Configuration options include the allowed viewer protocols (HTTP/HTTPS), geographic restrictions, caching policies, and more.

- Edge Locations: CloudFront caches copies of your content in multiple global locations.

- Content Delivery: DNS directs user requests to the optimal edge location, ensuring rapid delivery of cached content.
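The request flow described above can be modeled in a few lines. This is a deliberately simplified Python sketch, not CloudFront's implementation: `pick_edge` stands in for DNS-based routing by choosing the edge with the lowest latency from a table of made-up measurements, and `EdgeCache` serves a cached copy while it is within its TTL, otherwise fetching from the origin. The edge names, latency values, and the `origin` function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


def pick_edge(latency_ms: Dict[str, float]) -> str:
    """Stand-in for DNS routing: choose the edge location with the lowest latency."""
    return min(latency_ms, key=latency_ms.__getitem__)


@dataclass
class EdgeCache:
    """Toy model of one edge location's cache; not CloudFront's real implementation."""
    name: str
    ttl_seconds: float
    fetch_from_origin: Callable[[str], bytes]
    store: Dict[str, Tuple[bytes, float]] = field(default_factory=dict)  # path -> (body, expires_at)

    def get(self, path: str, now: float) -> Tuple[bytes, str]:
        cached = self.store.get(path)
        if cached is not None and now < cached[1]:
            return cached[0], "hit"  # still within TTL: serve from the edge
        body = self.fetch_from_origin(path)  # miss or expired: go back to the origin
        self.store[path] = (body, now + self.ttl_seconds)
        return body, "miss"


# Hypothetical origin that records every request it receives.
origin_requests = []


def origin(path: str) -> bytes:
    origin_requests.append(path)
    return b"<html>hello</html>"


edges = {n: EdgeCache(n, 86_400, origin) for n in ("edge-tokyo", "edge-virginia")}
chosen = pick_edge({"edge-tokyo": 12.0, "edge-virginia": 160.0})  # made-up latencies
print(chosen, edges[chosen].get("/index.html", now=0)[1])   # first request: miss
print(chosen, edges[chosen].get("/index.html", now=60)[1])  # within TTL: hit
print("origin requests:", len(origin_requests))
```

Only the first request reaches the origin; the second is served from the edge cache, which is what reduces both latency for the user and load on the origin.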

Advanced Features and Customizations

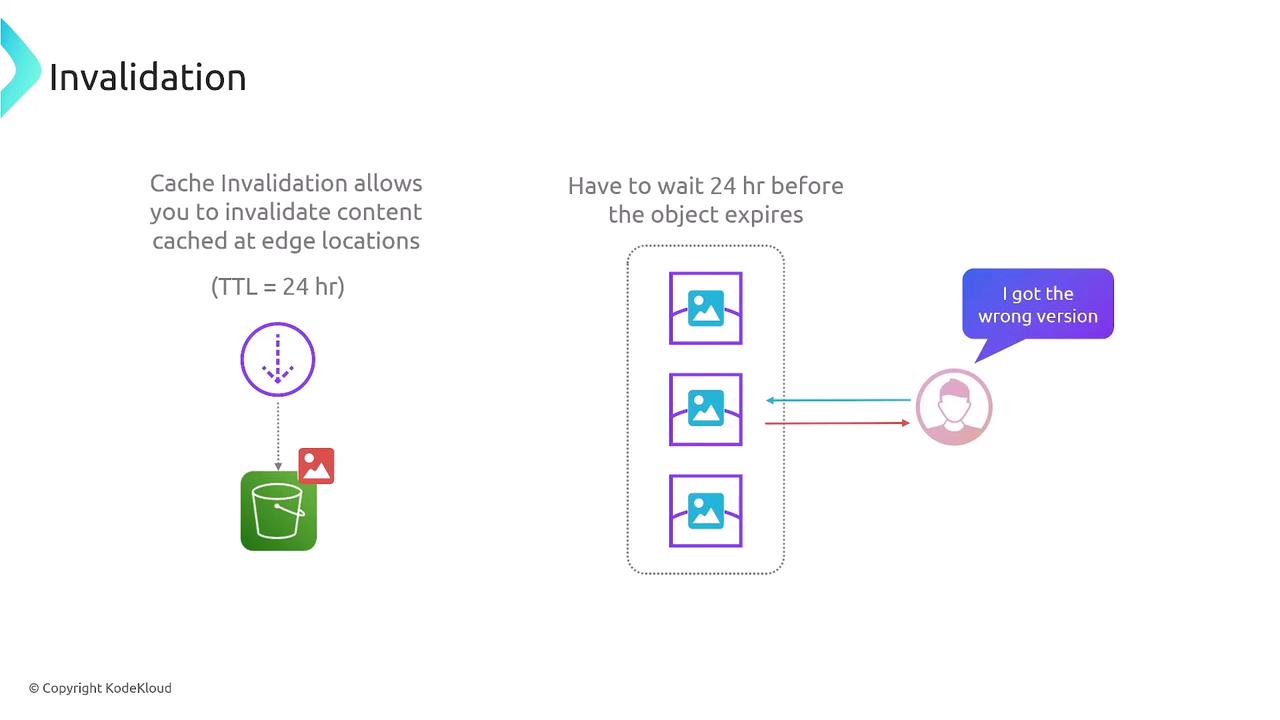

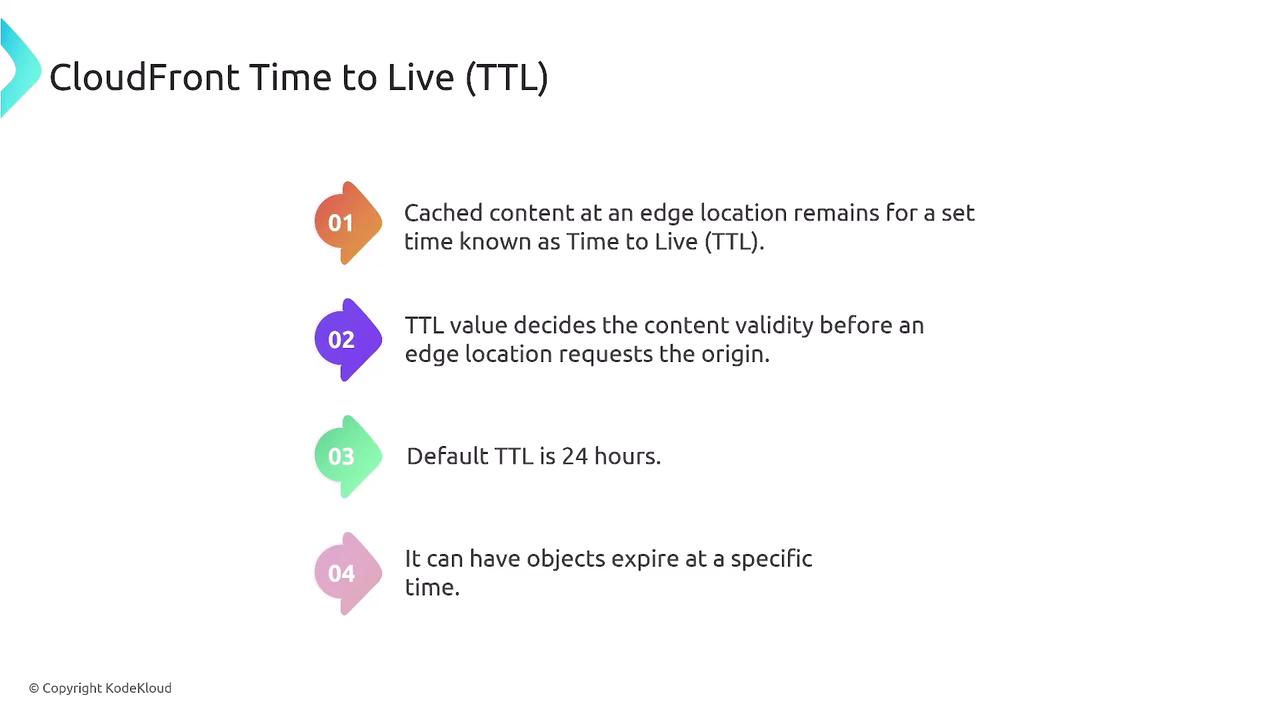

CloudFront offers a range of features designed to optimize performance and bolster security:

- Cache Management: CloudFront caches your content at edge locations with a default Time to Live (TTL) of 24 hours. When a cached object expires or is invalidated, the next request for it causes CloudFront to check the origin for the latest version. You can configure TTL settings in your distribution or send Cache-Control headers from the origin to control how long individual objects stay cached.

- Cache Invalidation: If an update must take effect immediately, you can invalidate cached objects across all edge locations. Subsequent requests for those objects then go back to the origin, so users receive the current version instead of an outdated cached copy.

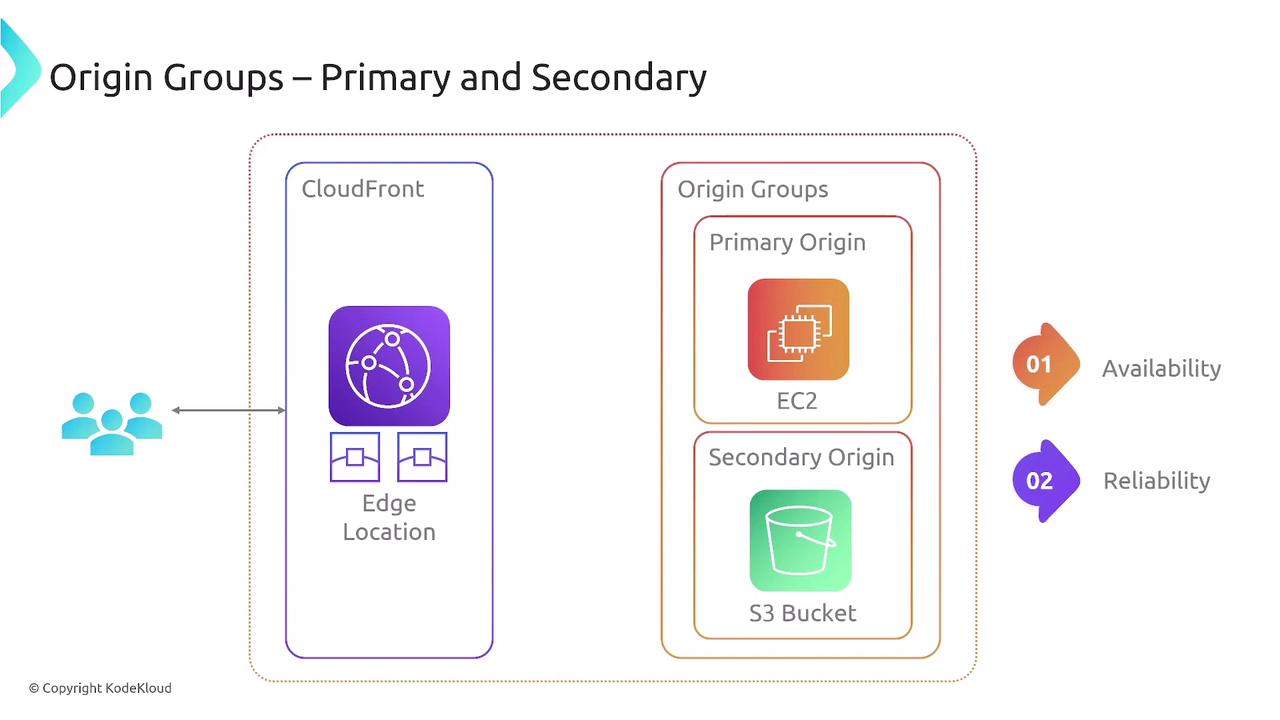

- Origin Failover: CloudFront supports origin groups made up of a primary and a secondary origin. Under normal circumstances, content is fetched from the primary origin; if the primary is unreachable or returns one of the HTTP error status codes you designate, CloudFront automatically retries the request against the secondary origin, improving availability and reliability.

- Request and Response Customization: Customize HTTP headers, cookies, and query strings on the fly to tailor content delivery to your application’s specific needs.

- Logging and Monitoring: CloudFront publishes operational metrics to CloudWatch and can deliver standard access logs to an S3 bucket for further analysis. These logs include data such as request times, client IP addresses, response status codes, and user-agent information.

- Security Enhancements: CloudFront integrates with AWS WAF, can enforce SSL/TLS (HTTPS) connections, and supports field-level encryption for sensitive data. It can also compress content automatically to reduce transfer size and speed up delivery.
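Origin failover can be sketched as a simple decision: try the primary origin, and fall back to the secondary if the primary cannot be reached or returns one of a chosen set of HTTP status codes. In CloudFront this is configured on an origin group rather than written as code; the Python below only models the logic, and the status codes in `DEFAULT_FAILOVER_CODES` as well as the origin functions are illustrative assumptions.

```python
from typing import Callable, FrozenSet, Tuple

Response = Tuple[int, bytes]  # (HTTP status code, body)
Origin = Callable[[str], Response]

# Illustrative failover criteria; in CloudFront you pick these codes per origin group.
DEFAULT_FAILOVER_CODES: FrozenSet[int] = frozenset({500, 502, 503, 504})


def fetch_with_failover(path: str, primary: Origin, secondary: Origin,
                        failover_codes: FrozenSet[int] = DEFAULT_FAILOVER_CODES) -> Tuple[str, Response]:
    """Try the primary origin; use the secondary on a connection error or a listed status code.

    Whatever the secondary returns (or raises) is passed through unchanged.
    """
    try:
        status, body = primary(path)
        if status not in failover_codes:
            return "primary", (status, body)
    except ConnectionError:
        pass  # an unreachable primary triggers failover just like an error status
    return "secondary", secondary(path)


# Hypothetical origins for demonstration.
def primary_ok(path: str) -> Response:
    return 200, b"primary copy"


def primary_down(path: str) -> Response:
    raise ConnectionError("primary origin unreachable")


def primary_503(path: str) -> Response:
    return 503, b""


def backup(path: str) -> Response:
    return 200, b"backup copy"


for primary in (primary_ok, primary_down, primary_503):
    used, (status, _) = fetch_with_failover("/logo.png", primary, backup)
    print(f"{primary.__name__}: served by {used} origin (status {status})")
```

A status outside the failover set (for example a 404 in this sketch) is returned from the primary as-is, which is why choosing the failover codes deliberately matters.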

When configuring CloudFront, consider using cache-control headers to fine-tune the TTL and optimize performance based on your specific application needs.
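The interaction between a distribution's TTL settings and the origin's `Cache-Control` header can be approximated as follows: an `s-maxage` or `max-age` value from the origin is used but clamped between the minimum and maximum TTL, and the default TTL applies when the origin sends neither. This Python sketch is a simplification that ignores `Expires`, `no-cache`, `no-store`, and `private`; the TTL constants are assumptions mirroring commonly documented defaults (0 seconds, 24 hours, and 365 days), so check the cache policy on your own distribution.

```python
import re
from typing import Optional

# Assumed TTL settings in seconds; verify against your distribution's cache policy.
MIN_TTL = 0
DEFAULT_TTL = 86_400       # 24 hours
MAX_TTL = 31_536_000       # 365 days


def _directive(header: str, name: str) -> Optional[int]:
    """Return the integer value of a Cache-Control directive such as max-age=3600."""
    match = re.search(rf"(?:^|,)\s*{re.escape(name)}\s*=\s*(\d+)", header, re.IGNORECASE)
    return int(match.group(1)) if match else None


def effective_ttl(cache_control: Optional[str], min_ttl: int = MIN_TTL,
                  default_ttl: int = DEFAULT_TTL, max_ttl: int = MAX_TTL) -> int:
    """Simplified model: s-maxage wins over max-age, and the value is clamped to [min_ttl, max_ttl]."""
    if cache_control:
        age = _directive(cache_control, "s-maxage")
        if age is None:
            age = _directive(cache_control, "max-age")
        if age is not None:
            return max(min_ttl, min(age, max_ttl))
    return default_ttl  # no usable header: fall back to the default TTL


for header in (None, "public, max-age=3600", "max-age=60, s-maxage=600", "max-age=999999999"):
    print(f"{header!r}: cached for {effective_ttl(header)} s")
```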

Time to Live (TTL) and Cache Busting

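CloudFront uses a Time to Live (TTL) mechanism to control how long content remains cached at edge locations. With the default TTL of 24 hours, a cached object is treated as fresh for that period; once it expires, the next request causes CloudFront to check the origin for a newer version. You can adjust these settings or send Cache-Control headers from the origin to change how long objects stay cached. When content must change before its TTL expires, there are two options: invalidation, which removes objects from the edge caches, and cache busting, which publishes the updated file under a new URL (for example, with a version string or content hash in the file name) so the old cached copy is simply never requested again. Combining long TTLs with cache busting works particularly well for static assets that change infrequently: they stay cached for a long time, yet updates reach users as soon as they are published. The diagram below summarizes CloudFront's TTL mechanism and its role in managing cached content.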
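Cache busting and invalidation are related but work differently: invalidation removes objects from edge caches, while cache busting changes the URL so the stale copy is never requested. The Python sketch below shows both. `busted_name` implements cache busting by embedding a short content hash in the file name, and `invalidation_batch` builds the `InvalidationBatch` structure accepted by boto3's `create_invalidation` call. The helper names, the 8-character hash length, and the distribution ID in the comment are hypothetical, and the actual AWS call is left commented out so the sketch runs without credentials.

```python
import hashlib
import time
from pathlib import PurePosixPath
from typing import Dict, List


def busted_name(path: str, content: bytes, length: int = 8) -> str:
    """Cache busting: embed a content hash in the file name (app.js -> app.<hash>.js)."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


def invalidation_batch(paths: List[str], caller_reference: str) -> Dict:
    """Invalidation: build the InvalidationBatch structure used by boto3's create_invalidation."""
    return {
        "Paths": {"Quantity": len(paths), "Items": paths},
        "CallerReference": caller_reference,  # should be unique for each new invalidation request
    }


# Each release of app.js gets its own URL, so a long TTL never serves a stale version.
print(busted_name("/static/app.js", b"console.log('v1');"))
print(busted_name("/static/app.js", b"console.log('v2');"))

# Objects that must keep a stable URL (such as /index.html) can be invalidated instead.
batch = invalidation_batch(["/index.html"], caller_reference=f"deploy-{int(time.time())}")
print(batch)
# With boto3 installed and AWS credentials configured, the batch could be submitted as:
# boto3.client("cloudfront").create_invalidation(DistributionId="EXAMPLE_DISTRIBUTION_ID", InvalidationBatch=batch)
```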

Conclusion

CloudFront is a robust, globally distributed CDN service that improves user experience by caching content at edge servers strategically located around the world. Through edge caching, DNS-based request routing, cache invalidation, and advanced security features, CloudFront offers a comprehensive solution for fast, reliable content delivery. Whether you are serving static assets from an S3 bucket or dynamic content from a custom web server, CloudFront helps your users receive content quickly and reliably, regardless of their geographic location. Thank you for reading this article. We hope it has given you a clear understanding of CloudFront and its capabilities. For more detailed information, explore the AWS Documentation on CloudFront and learn how to customize your distribution settings for optimal performance.