AWS Certified SysOps Administrator - Associate

Domain 6: Cost and Performance Optimization

Navigating Concurrency, Cold Starts, and Other Enhancements for Lambda

Welcome, students. In this lesson, we explore advanced techniques to optimize AWS Lambda performance by managing concurrency, reducing cold starts, and applying other enhancements.

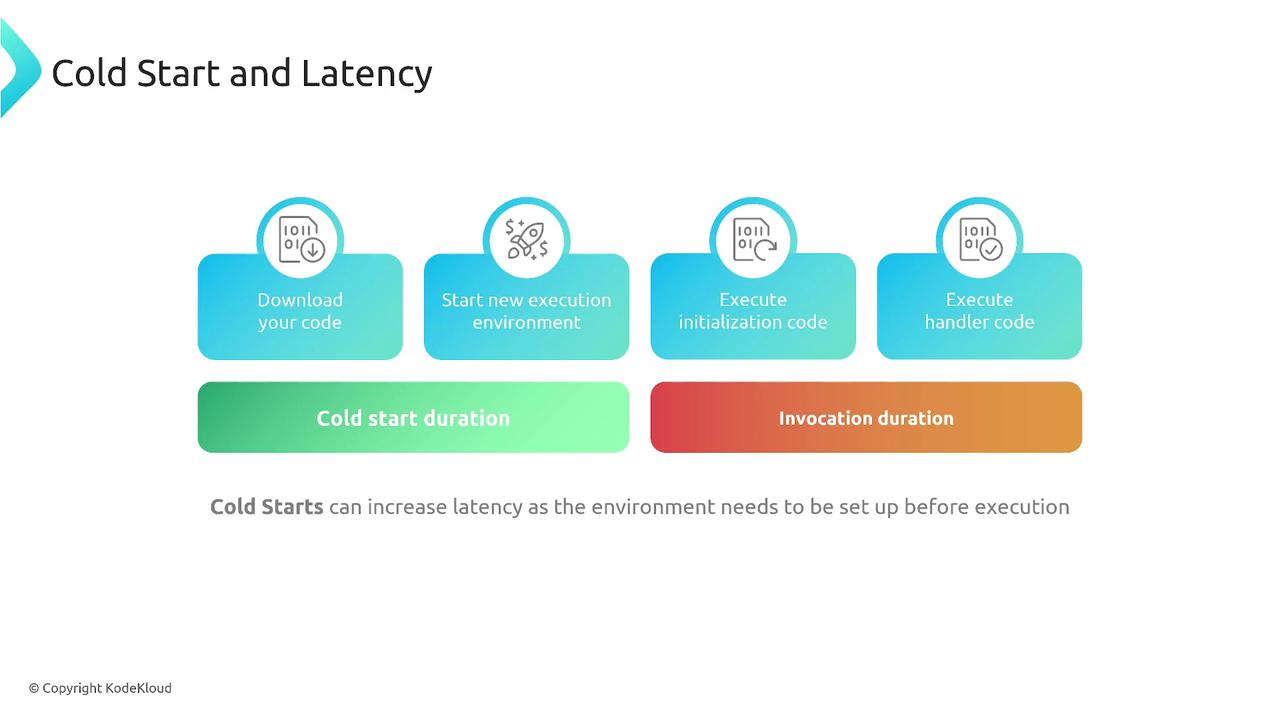

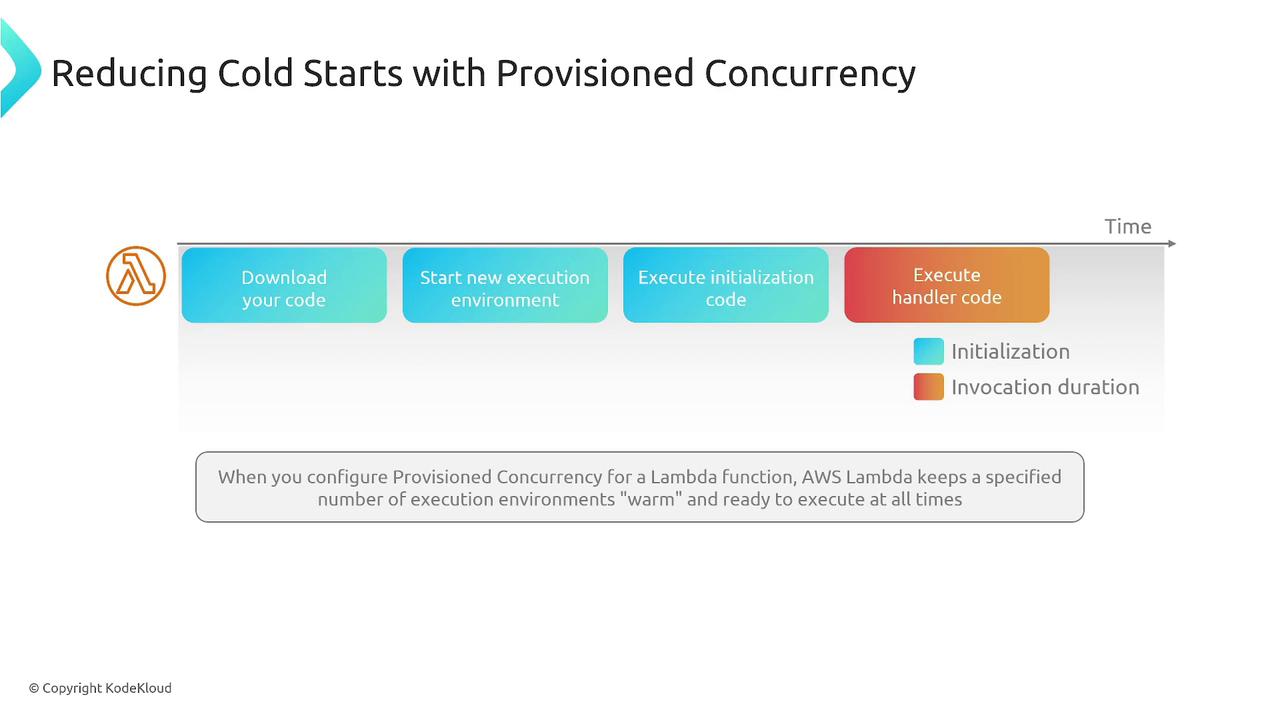

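When you invoke a Lambda function for the first time, AWS creates a new execution environment. This process, known as a cold start, involves provisioning a lightweight micro virtual machine, downloading your code, starting the runtime, and running your initialization code before the handler executes. Cold starts add latency, and they also occur after a function has been idle long enough for Lambda to reclaim its environments. AWS does not publish an exact idle timeout, although environments are commonly observed to be reclaimed after roughly 5 to 15 minutes of inactivity.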

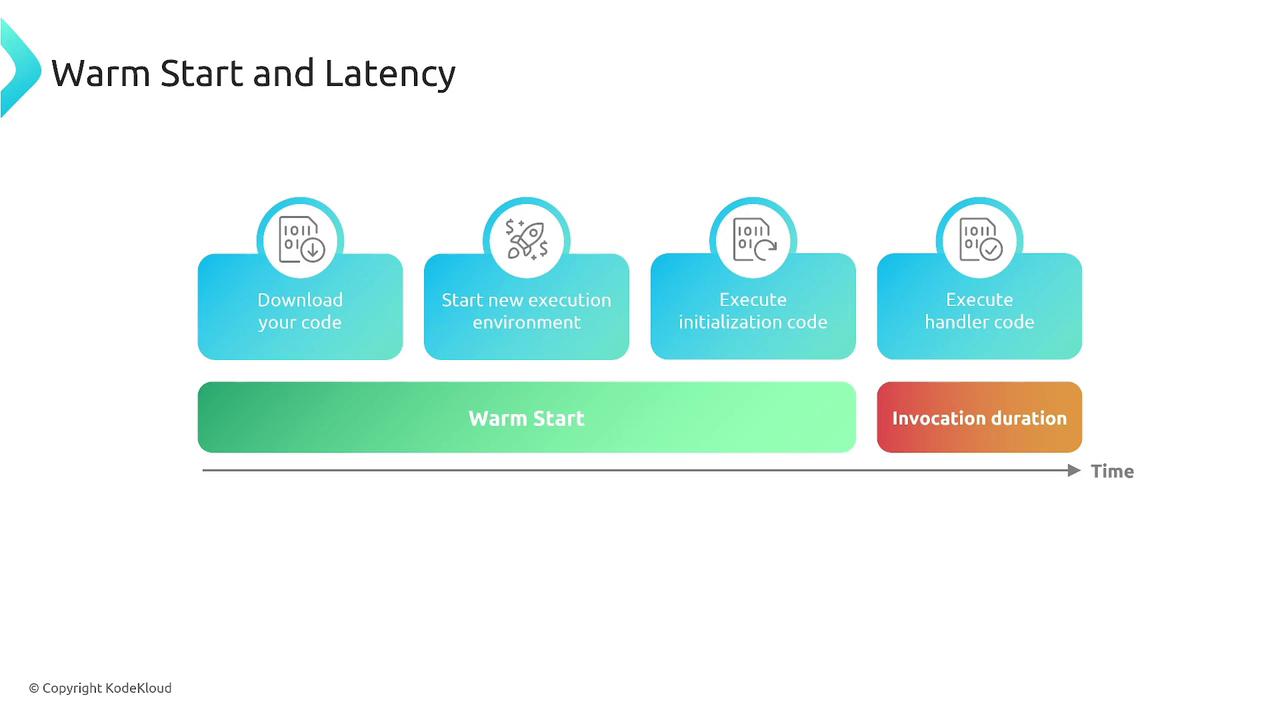

Once the environment has been initialized, subsequent invocations, known as warm starts, reuse it. In a warm start, only the handler code runs, so latency is much lower than for a cold start. Warm starts are likely when a new request arrives while an idle environment is still available, which in practice often means within roughly 5 to 15 minutes of the previous invocation, although this is not guaranteed.
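To see cold and warm starts in your own function, a common pattern is a module-level flag: module-scope code runs once per execution environment during initialization, so the flag is set only for the first invocation that environment serves. The sketch below assumes a Python runtime; the `_IS_COLD_START` and `INIT_TIMESTAMP` names and the returned dictionary are illustrative, not part of any Lambda API.

```python
import time

# Module scope: executed once per execution environment, during the cold start
INIT_TIMESTAMP = time.time()
_IS_COLD_START = True


def lambda_handler(event, context):
    """Report whether this invocation paid for environment initialization."""
    global _IS_COLD_START
    cold = _IS_COLD_START
    _IS_COLD_START = False  # every later invocation in this environment is a warm start
    return {
        "cold_start": cold,
        "environment_age_seconds": round(time.time() - INIT_TIMESTAMP, 3),
    }
```

Calling `lambda_handler({}, None)` twice in one Python process returns `cold_start: True` and then `False`, mirroring a cold start followed by a warm start in a single environment.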

In production workloads, cold starts are relatively infrequent, typically affecting less than 1% of invocations; they are more noticeable in development and test environments, where traffic is sparse. Depending on the runtime, package size, and initialization code, a cold start can add anywhere from under 100 milliseconds to more than one second.
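You can check the cold start rate of your own function from its logs. Lambda's `REPORT` log line includes an `Init Duration` field only for invocations that initialized a new environment. The sketch below assumes you have already exported the raw log messages as strings; the function name `cold_start_stats` is hypothetical.

```python
import re
from typing import Iterable

# The REPORT line carries "Init Duration" only when the invocation had to
# initialize a new execution environment (a cold start).
_INIT_RE = re.compile(r"Init Duration: ([\d.]+) ms")


def cold_start_stats(report_lines: Iterable[str]) -> dict:
    """Summarize cold starts from raw Lambda log messages."""
    total = 0
    init_ms = []
    for line in report_lines:
        if not line.startswith("REPORT"):
            continue  # skip START/END lines and application logs
        total += 1
        match = _INIT_RE.search(line)
        if match:
            init_ms.append(float(match.group(1)))
    return {
        "invocations": total,
        "cold_starts": len(init_ms),
        "cold_start_rate": len(init_ms) / total if total else 0.0,
        "max_init_ms": max(init_ms, default=0.0),
    }
```

Passing it four `REPORT` lines where only one contains `Init Duration: 250.75 ms` returns a `cold_start_rate` of 0.25.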

Performance Optimization Tip

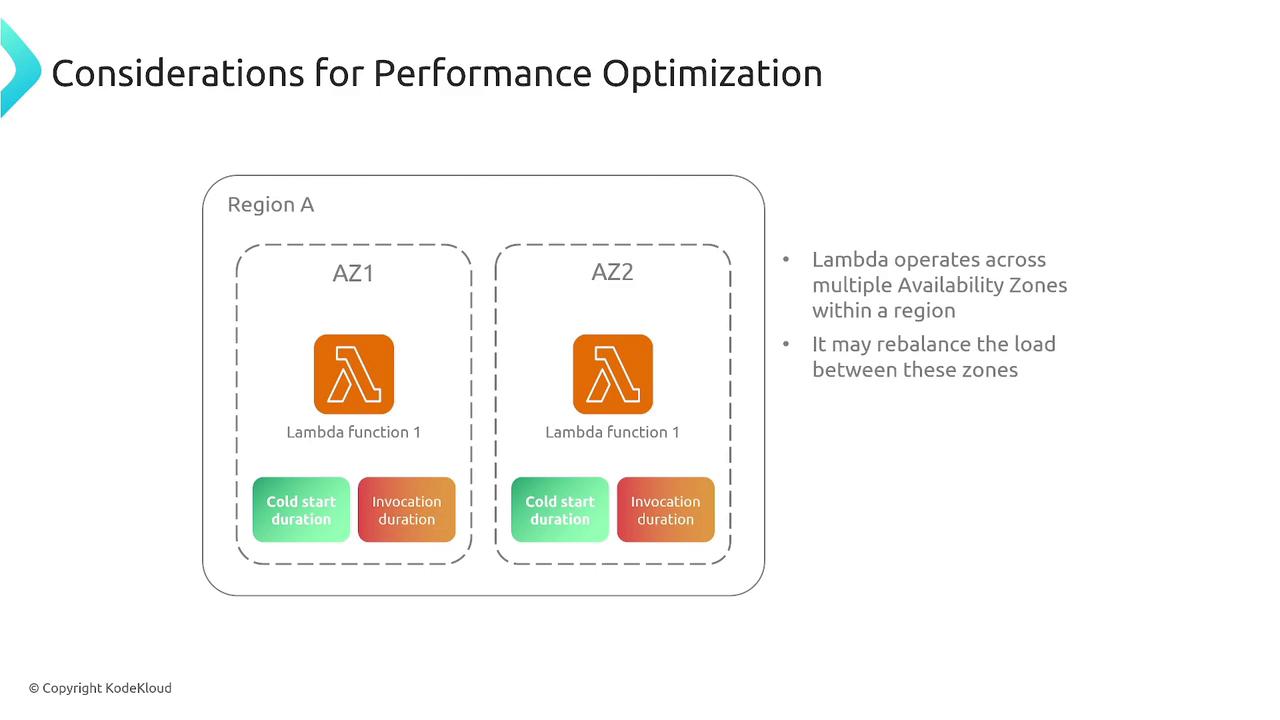

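AWS Lambda applies internal optimizations to reduce the impact of cold starts, for example by retaining execution environments after an invocation so later requests can reuse them, while running functions across multiple Availability Zones within a Region for availability. However, these optimizations depend on environment reuse, which you cannot control or guarantee.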

To further reduce latency, keep your function code and its dependencies as lean as possible. Alternatively, consider provisioned concurrency, which keeps a specified number of execution environments initialized and ready. Invocations up to that number are served without cold-start latency; requests beyond it are handled by on-demand environments, which can still cold start.
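Provisioned concurrency is configured on a published version or alias rather than `$LATEST`. Below is a minimal sketch using boto3's `put_provisioned_concurrency_config` call, assuming a hypothetical function `image-resizer` with an alias `live`; the helper names are illustrative, and actually applying the setting requires AWS credentials and the boto3 package.

```python
def provisioned_concurrency_request(function_name: str, qualifier: str, environments: int) -> dict:
    """Build keyword arguments for the Lambda PutProvisionedConcurrencyConfig API."""
    if qualifier == "$LATEST":
        # Provisioned concurrency applies to a published version or an alias, not $LATEST
        raise ValueError("use a published version number or an alias as the qualifier")
    if environments < 1:
        raise ValueError("provisioned concurrency must be at least 1")
    return {
        "FunctionName": function_name,
        "Qualifier": qualifier,
        "ProvisionedConcurrentExecutions": environments,
    }


def apply_provisioned_concurrency(request: dict) -> dict:
    """Send the request with boto3 (needs AWS credentials and the boto3 package)."""
    import boto3  # imported here so the request builder works without boto3 installed

    client = boto3.client("lambda")
    return client.put_provisioned_concurrency_config(**request)
```

`apply_provisioned_concurrency(provisioned_concurrency_request("image-resizer", "live", 5))` would ask Lambda to keep five environments initialized for the `live` alias. Keep in mind that provisioned concurrency is billed for as long as it is configured, whether or not it is used.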

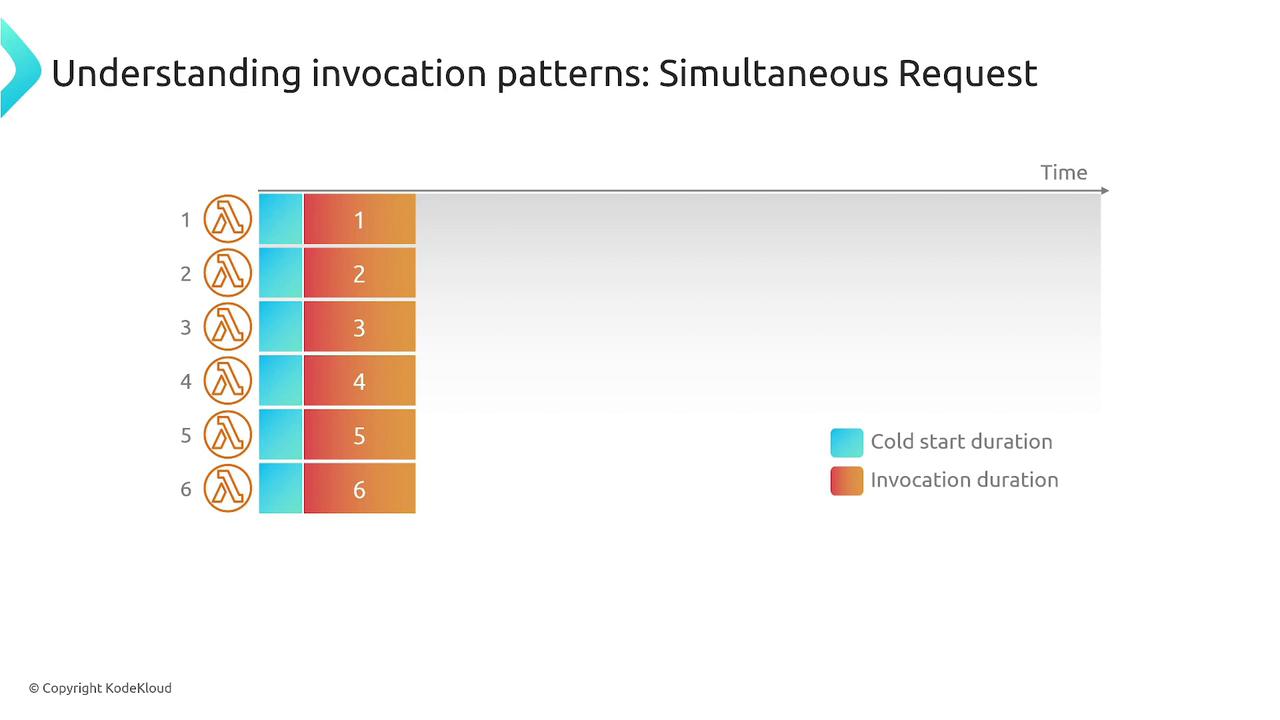

By default, each execution environment processes one request at a time. When an invocation completes, the environment becomes available for the next request. If multiple requests arrive simultaneously, Lambda scales out by creating additional execution environments, and each new environment incurs a cold start unless it was pre-initialized through provisioned concurrency.
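The Lambda documentation estimates the number of concurrent environments as average requests per second multiplied by average request duration in seconds. A minimal sketch of that arithmetic, with hypothetical helper names, shows how many new (and therefore cold) environments a burst could require on top of those already warm:

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Estimate concurrent execution environments: request rate x average duration."""
    return math.ceil(requests_per_second * avg_duration_seconds)


def new_environments_needed(requests_per_second: float, avg_duration_seconds: float,
                            warm_environments: int) -> int:
    """Environments Lambda must add beyond those already warm; each one starts cold."""
    needed = required_concurrency(requests_per_second, avg_duration_seconds)
    return max(0, needed - warm_environments)
```

For example, 100 requests per second at 0.5 seconds each needs about 50 environments; with 20 already warm, roughly 30 would start cold.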

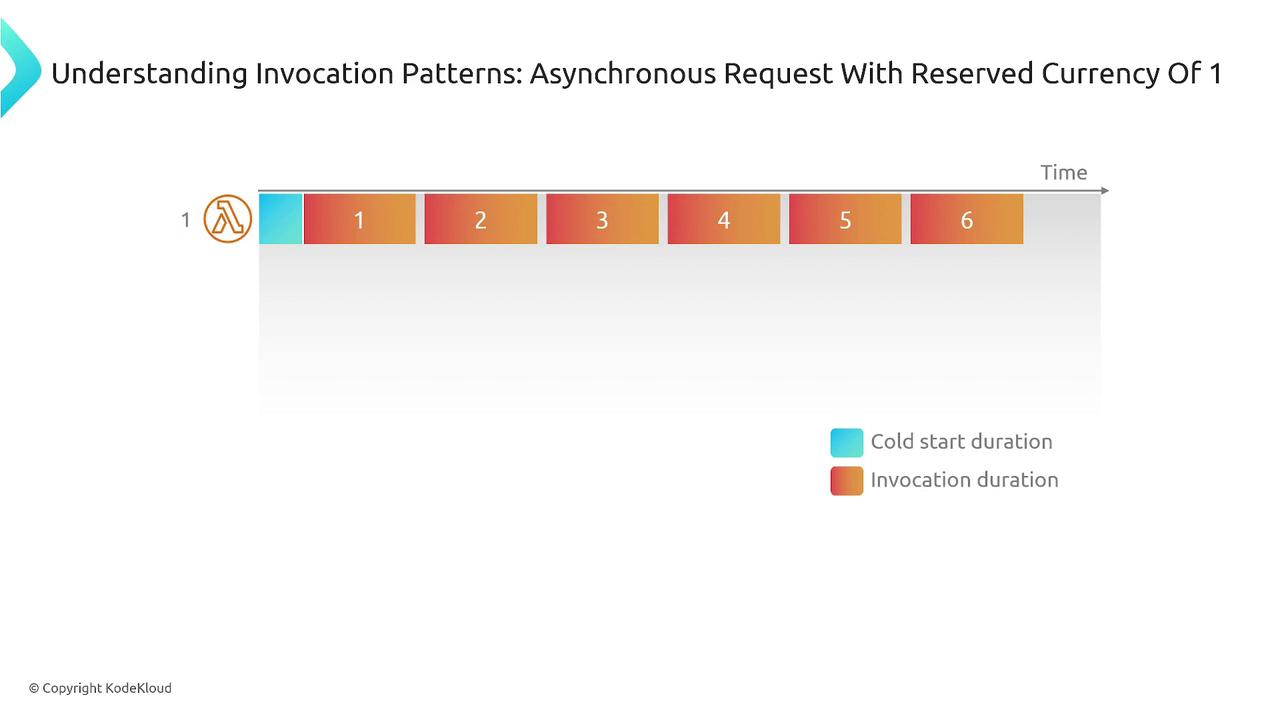

To reduce unnecessary cold starts during bursts of concurrent requests, consider spreading out request arrivals or using asynchronous invocation. With asynchronous invocation, Lambda places events in an internal queue and processes them as execution environments become available. For example, if reserved concurrency is set to six, at most six instances of the function run concurrently, each handling one event at a time, while the remaining events wait in the queue.
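The queueing behavior described above can be illustrated with a small simulation. This is not Lambda's actual scheduler: it assumes every event takes exactly one time step, and the function name is hypothetical. It shows how reserved concurrency caps the number of events processed at once while the rest wait.

```python
from collections import deque
from typing import List


def drain_async_queue(num_events: int, reserved_concurrency: int) -> List[int]:
    """Simulate an async event queue drained by at most `reserved_concurrency` instances.

    Simplifying assumption: every event takes exactly one time step to process.
    Returns how many events were processed in each time step.
    """
    if reserved_concurrency < 1:
        # On a real function, 0 would throttle every invocation; the simulation disallows it
        raise ValueError("reserved concurrency must be at least 1")
    queue = deque(range(num_events))  # events waiting in the internal queue
    batches = []
    while queue:
        # Each instance takes one event at a time; the rest keep waiting
        batch = min(reserved_concurrency, len(queue))
        for _ in range(batch):
            queue.popleft()
        batches.append(batch)
    return batches
```

`drain_async_queue(20, 6)` returns `[6, 6, 6, 2]`: three full rounds of six concurrent executions, then the last two events. On a real function, reserved concurrency is set through the Lambda `PutFunctionConcurrency` API (for example, boto3's `put_function_concurrency(FunctionName=..., ReservedConcurrentExecutions=6)`).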

Memory allocation plays a critical role in function performance. You can allocate from 128 MB (the default) up to 10,240 MB (10 GB), and Lambda allocates CPU power in proportion to the memory you configure, so this setting also influences compute and networking capacity. Adjust it incrementally and measure the results to find the best setting for your workload.
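Here is a small sketch for checking a memory setting before applying it. The range (128 to 10,240 MB, in 1 MB increments) and the 1,769 MB point at which a function gets the equivalent of one vCPU come from the Lambda documentation, but treating CPU as exactly linear in memory is a simplifying assumption, and the helper names are hypothetical.

```python
MIN_MEMORY_MB = 128      # Lambda minimum (also the default)
MAX_MEMORY_MB = 10_240   # Lambda maximum (10 GB)
MB_PER_VCPU = 1_769      # documented point where a function gets about one vCPU


def validate_memory_mb(memory_mb: int) -> int:
    """Check a MemorySize value against Lambda's allowed range (1 MB increments)."""
    if not isinstance(memory_mb, int) or not MIN_MEMORY_MB <= memory_mb <= MAX_MEMORY_MB:
        raise ValueError(f"memory must be an integer from {MIN_MEMORY_MB} to {MAX_MEMORY_MB} MB")
    return memory_mb


def approx_vcpus(memory_mb: int) -> float:
    """Rough CPU share, assuming CPU scales linearly with memory (an approximation)."""
    return round(validate_memory_mb(memory_mb) / MB_PER_VCPU, 2)
```

`approx_vcpus(1769)` returns `1.0`. To apply a validated value, you can pass it as `MemorySize` to boto3's `update_function_configuration`.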

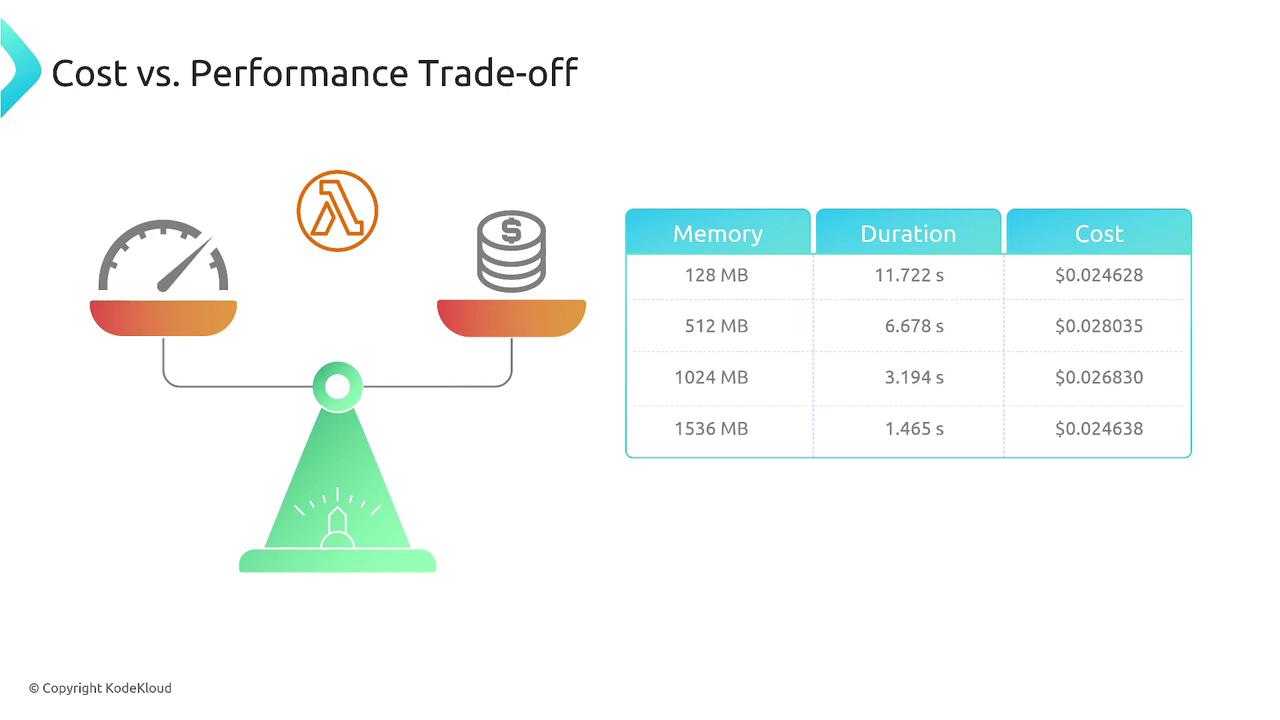

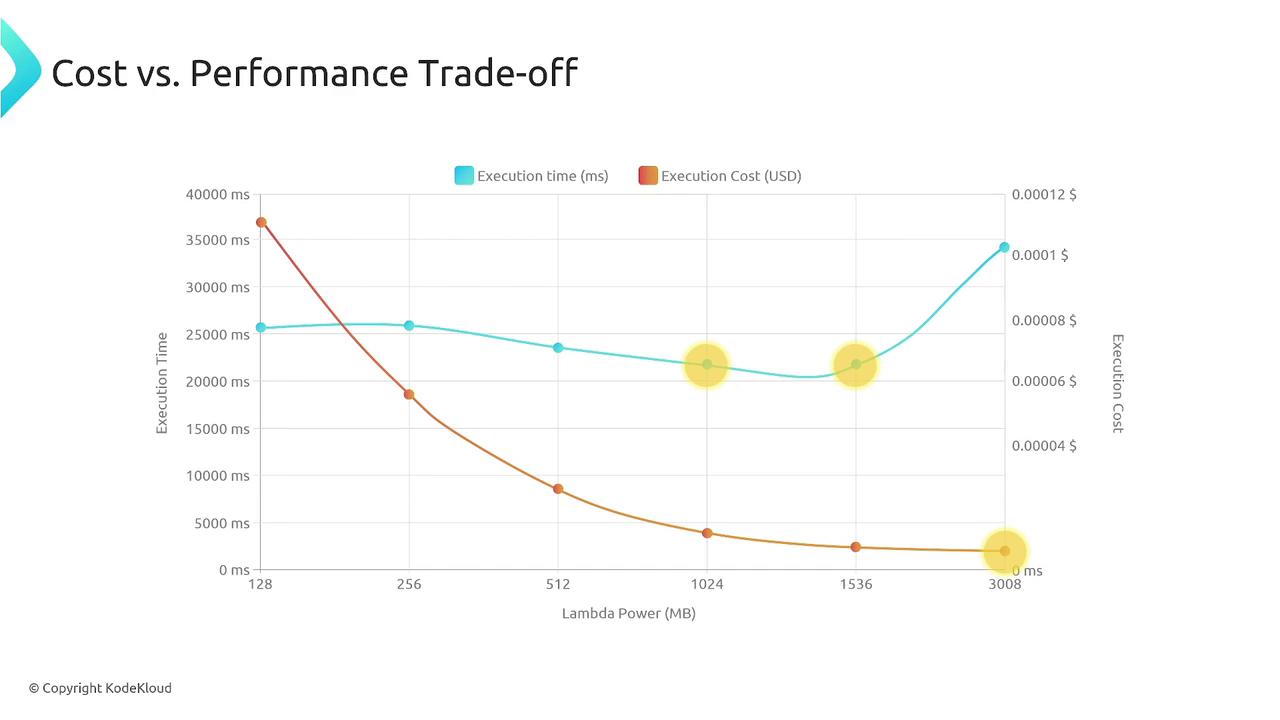

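When weighing cost against performance, aim for the right balance. Lambda bills compute in GB-seconds (allocated memory multiplied by duration). For example, at an x86 price of about $0.0000166667 per GB-second (check current pricing for your Region), a 128 MB function running for 11 seconds consumes 1.375 GB-seconds, or roughly $0.000023 per invocation (about 2.3 cents per 1,000 invocations). A 512 MB allocation costs four times as much per second, so it lowers the cost only if it brings the duration under 2.75 seconds; even when it does not, the faster response may be worth the difference. A much larger allocation, such as 3 GB, might yield the quickest response times but at a higher expense. Experimentation is essential to identify the optimal configuration for your specific use case.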
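The GB-second arithmetic behind this trade-off can be sketched as follows. The prices are assumptions based on published x86 on-demand rates in us-east-1 and ignore the free tier and 1 ms billing rounding; substitute the current rates for your Region and architecture. The function names are hypothetical.

```python
GB_SECOND_PRICE = 0.0000166667     # assumed x86 price per GB-second; check current pricing
REQUEST_PRICE = 0.20 / 1_000_000   # assumed price per request


def invocation_cost(memory_mb: int, duration_seconds: float,
                    gb_second_price: float = GB_SECOND_PRICE,
                    request_price: float = REQUEST_PRICE) -> float:
    """Approximate on-demand cost (USD) of one invocation: GB-seconds x price + request fee."""
    gb_seconds = (memory_mb / 1024) * duration_seconds
    return gb_seconds * gb_second_price + request_price


def break_even_duration(base_memory_mb: int, base_duration_seconds: float,
                        new_memory_mb: int) -> float:
    """Longest duration at new_memory_mb that keeps compute cost at or below the baseline."""
    return base_duration_seconds * base_memory_mb / new_memory_mb
```

With these defaults, `invocation_cost(512, 2.0)` is lower than `invocation_cost(128, 11)`, while `invocation_cost(512, 3.0)` is higher.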

Another critical factor in optimizing AWS Lambda functions is static initialization: the code outside the handler, which runs once per execution environment before the handler's main logic. This stage typically involves importing libraries, configuring logging, and creating SDK clients or database connections. Because it runs during every cold start, minimizing initialization latency is particularly important.

Below is an example of static initialization in Python, where logging, an S3 client, and basic configurations are set up before the Lambda handler is defined:

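```python
import os
import json
import logging
import urllib.parse

import boto3
import cv2  # OpenCV: a heavy import, loaded once per execution environment

# Static initialization: runs once during the cold start and is reused by warm starts
s3 = boto3.client('s3')

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def lambda_handler(event, context):
    logger.info("Starting handler")

    # Get object metadata from the S3 event notification
    record = event['Records'][0]['s3']
    input_bucket_name = record['bucket']['name']
    # S3 event keys are URL-encoded (for example, spaces arrive as '+')
    file_key = urllib.parse.unquote_plus(record['object']['key'])

    output_bucket_name = os.environ['OUTPUT_BUCKET_NAME']
    output_file_key = os.path.splitext(file_key)[0] + '.png'

    logger.info("Input bucket: %s", input_bucket_name)
    logger.info("Output bucket: %s", output_bucket_name)
    # The rest of the handler (not shown) converts the image and writes output_file_key
```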

Initialization Best Practices

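Keep your initialization code minimal. Import only the modules you need, create database connections and SDK clients during initialization so warm invocations can reuse them, and lazy-load global resources that only some invocations need. Because anything stored in global scope persists across invocations in the same environment, avoid keeping request-specific data there to prevent data from leaking between invocations.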
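The lazy-loading advice above can be sketched with `functools.lru_cache`, so an expensive global is built only when the first request that needs it arrives and is then reused by warm invocations in the same environment. The function `get_thumbnail_settings` and the `make_thumbnail` event field are hypothetical placeholders for a costly resource such as a large model or configuration file.

```python
import functools


@functools.lru_cache(maxsize=None)
def get_thumbnail_settings() -> dict:
    """Lazily build an expensive, read-only global the first time a request needs it."""
    # Stands in for slow work such as loading a large model or configuration file
    return {"format": "png", "max_width": 1024}


def lambda_handler(event, context):
    # Only requests that need the resource pay for loading it; later ones reuse the cached copy
    if event.get("make_thumbnail"):
        settings = get_thumbnail_settings()
        return {"status": "converted", "format": settings["format"]}
    # Keep request-specific data in local variables, never in module-level globals
    return {"status": "skipped"}
```

`get_thumbnail_settings.cache_info()` reports one miss and then hits for subsequent requests in the same process, which is the reuse you would see across warm starts.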

Summary

To optimize your AWS Lambda functions:

- Keep the invocation code slim to reduce cold start latency.

- Use provisioned concurrency for immediate response in high-demand scenarios.

- Adjust memory allocation to balance execution cost against performance benefits.

- Streamline static initialization by importing only what is necessary and setting up connections early.

- Use asynchronous processing and reserved concurrency to effectively handle spikes in concurrent requests.

Thank you for reading this article. These concepts are essential for optimizing AWS Lambda functions and may also assist you in your certification exam. See you in the next lesson.
