Generative AI in Practice: Advanced Insights and Operations

Application and Assessment of LLMs

Assessing LLM Performance

Evaluating large language models (LLMs) and foundation models presents two primary challenges that developers and enterprises must navigate.

Subjective Quality and Custom Needs

Assessing the quality of LLMs is inherently challenging because what counts as a "good" model is subjective. Criteria for model performance and behavioral traits vary from user to user. When incorporating proprietary data with Retrieval-Augmented Generation (RAG), the evaluation process becomes twofold:

- Measure the effectiveness of the retrieval component to ensure that the most relevant context is consistently fetched.

- Verify that the model leverages that context to produce accurate and useful responses.
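The two stages above can be scored separately. The following is a minimal sketch, assuming you have a small labeled set with known relevant document IDs and expected facts. The function names, document IDs, and the substring-based answer check are illustrative assumptions, not a standard API.

```python
from typing import Sequence, Set


def recall_at_k(retrieved_ids: Sequence[str], relevant_ids: Set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k retrieved results."""
    if not relevant_ids:
        return 0.0
    top_k = set(retrieved_ids[:k])
    return len(top_k & relevant_ids) / len(relevant_ids)


def answer_mentions_all(answer: str, expected_facts: Sequence[str]) -> bool:
    """Crude generation check: every expected fact string appears in the answer."""
    lowered = answer.lower()
    return all(fact.lower() in lowered for fact in expected_facts)


# Hypothetical labeled example: the IDs, answer, and facts are made up for illustration.
retrieved = ["doc7", "doc2", "doc9", "doc4"]
relevant = {"doc2", "doc4"}
retrieval_score = recall_at_k(retrieved, relevant, k=3)  # only doc2 is in the top 3

answer = "Refunds are accepted within 30 days with a receipt."
generation_ok = answer_mentions_all(answer, ["30 days", "receipt"])
```

Keeping the two scores apart makes it easier to tell whether a bad response came from poor retrieval or from the model ignoring good context.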

Different use cases require tailored evaluation methods. For enterprise applications, running standardized tests (such as checks for undesirable outputs like profanity) is essential before adding a model to your catalog. In contrast, developing RAG applications demands custom metrics to assess both the retrieval quality and the overall response generation.
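A standardized pre-catalog check like the profanity test mentioned above could be sketched as a simple gate over sampled model outputs. This is only an illustration: the blocklist, the function names, and the zero-tolerance threshold are assumptions, and a production check would more likely rely on a maintained word list or a moderation classifier.

```python
import re
from typing import FrozenSet, Iterable, List

# Placeholder blocklist (assumption): mild stand-in words for illustration only.
BLOCKLIST: FrozenSet[str] = frozenset({"darn", "heck"})


def flagged_outputs(outputs: Iterable[str], blocklist: FrozenSet[str] = BLOCKLIST) -> List[str]:
    """Return the outputs that contain at least one blocklisted word."""
    flagged = []
    for text in outputs:
        words = set(re.findall(r"[a-z']+", text.lower()))
        if words & blocklist:
            flagged.append(text)
    return flagged


def passes_gate(outputs: Iterable[str], max_flagged_rate: float = 0.0) -> bool:
    """True if the share of flagged outputs does not exceed the allowed rate."""
    outputs = list(outputs)
    if not outputs:
        return True
    rate = len(flagged_outputs(outputs)) / len(outputs)
    return rate <= max_flagged_rate


# Hypothetical sampled responses from a candidate model.
samples = ["Well, heck.", "All good here."]
approved = passes_gate(samples)
```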

Blind Testing and Community Benchmarks

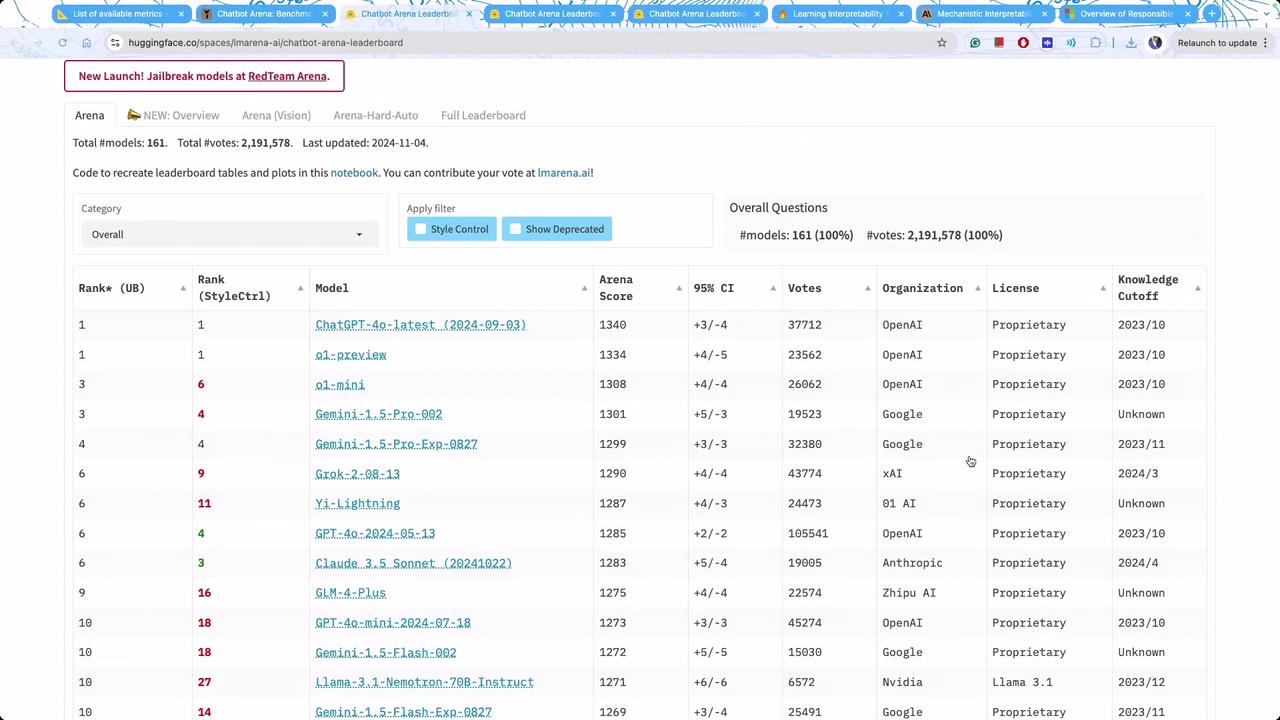

A widely accepted approach to evaluating models is to consider public and blind test feedback. In blind tests, evaluators compare the responses of two models without knowing which model produced which, and choose the one that generates the higher-quality response. One notable example is the Chatbot Arena, which displays rankings and scores for various AI models. At the time of writing, the leaderboard indicates that proprietary models generally perform exceptionally well. The image above shows the leaderboard provided by Chatbot Arena.
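To make the idea of ranking from blind pairwise votes concrete, here is a minimal sketch using an Elo-style update. Elo is chosen here as an assumption for illustration; it is not a claim about how Chatbot Arena computes its scores, and the model names and vote data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def elo_ratings(
    votes: Iterable[Tuple[str, str]], k: float = 32.0, base: float = 1000.0
) -> Dict[str, float]:
    """Compute Elo-style ratings from (winner, loser) pairs, processed in order."""
    ratings: Dict[str, float] = defaultdict(lambda: base)
    for winner, loser in votes:
        # Probability the winner was expected to win, given current ratings.
        expected_win = 1.0 / (1.0 + 10 ** ((ratings[loser] - ratings[winner]) / 400.0))
        delta = k * (1.0 - expected_win)
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)


# Hypothetical blind-test votes: each tuple is (preferred model, other model).
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
]
ratings = elo_ratings(votes)
leaderboard = sorted(ratings, key=ratings.get, reverse=True)
```

An upset (a low-rated model beating a high-rated one) moves ratings more than an expected win, which is why a handful of surprising votes can reshuffle a small leaderboard.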

Key Insight

Proprietary models often outperform meta models in user interaction contexts despite sometimes ranking lower on standardized tests such as MMLU.

Evaluating Technical and Conversation Metrics

Techniques like reinforcement learning from human feedback (RLHF), or from AI-generated feedback, have been pivotal in aligning proprietary models closely with human expectations. When evaluating models through both technical metrics (like MMLU) and human preferences, a strong correlation emerges between user satisfaction and model ranking. The correlation is not perfect, however: models developed by major organizations ("meta models") may excel in standardized testing yet perform less naturally or effectively in conversational settings.

For RAG systems, additional metrics become critical:

- Context Recall: Measures whether the relevant context is actually retrieved and passed to the model.

- Noise Sensitivity: Assesses how distractions or irrelevant data affect model performance.
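Both metrics can be approximated with simple string matching on a small labeled set. The sketch below is a deliberately simplified assumption: evaluation libraries usually define these metrics with LLM-based judgments rather than substring checks, and `toy_answer_fn` is a hypothetical stand-in for a real model call.

```python
from typing import Callable, Dict, List, Sequence


def context_recall(reference_facts: Sequence[str], retrieved_chunks: Sequence[str]) -> float:
    """Share of reference facts that appear somewhere in the retrieved context."""
    if not reference_facts:
        return 0.0
    context = " ".join(retrieved_chunks).lower()
    found = sum(1 for fact in reference_facts if fact.lower() in context)
    return found / len(reference_facts)


def noise_sensitivity(
    answer_fn: Callable[[str, List[str]], str], examples: Sequence[Dict]
) -> float:
    """Share of examples answered correctly with clean context but
    incorrectly once distractor chunks are appended."""
    eligible = 0
    broken = 0
    for ex in examples:
        expected = ex["expected"].lower()
        clean_answer = answer_fn(ex["question"], ex["clean_chunks"])
        if expected not in clean_answer.lower():
            continue  # already wrong without noise, so noise is not the cause
        eligible += 1
        noisy_answer = answer_fn(ex["question"], ex["clean_chunks"] + ex["distractors"])
        if expected not in noisy_answer.lower():
            broken += 1
    return broken / eligible if eligible else 0.0


def toy_answer_fn(question: str, chunks: List[str]) -> str:
    """Hypothetical 'model' that just echoes the last chunk it was given."""
    return chunks[-1]


recall = context_recall(["30 days", "receipt"], ["Refunds within 30 days.", "Shipping is free."])

examples = [
    {"question": "Capital of France?", "expected": "Paris",
     "clean_chunks": ["Paris is the capital of France."],
     "distractors": ["Lyon is a large French city."]},
    {"question": "Boiling point of water?", "expected": "100",
     "clean_chunks": ["Water boils at 100 C."],
     "distractors": []},
]
sensitivity = noise_sensitivity(toy_answer_fn, examples)
```

A lower noise sensitivity is better here: it means added distractors rarely turn a correct answer into an incorrect one.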

These metrics are vital when designing RAG pipelines or intelligent agents, as the overall performance depends not only on the underlying model but also on the model’s ability to select appropriate tools for specific tasks.

Conclusion

A comprehensive evaluation framework is essential for building robust Generative AI applications. By combining standardized tests with custom RAG metrics, organizations can accurately identify both strengths and areas for improvement in their models. Establishing clear evaluation criteria is fundamental to continuously enhancing the performance of LLMs and RAG systems.

Takeaway

A well-defined evaluation framework enables a balanced view of model capabilities, ensuring that both technical performance and user experience improvements are tracked effectively.
