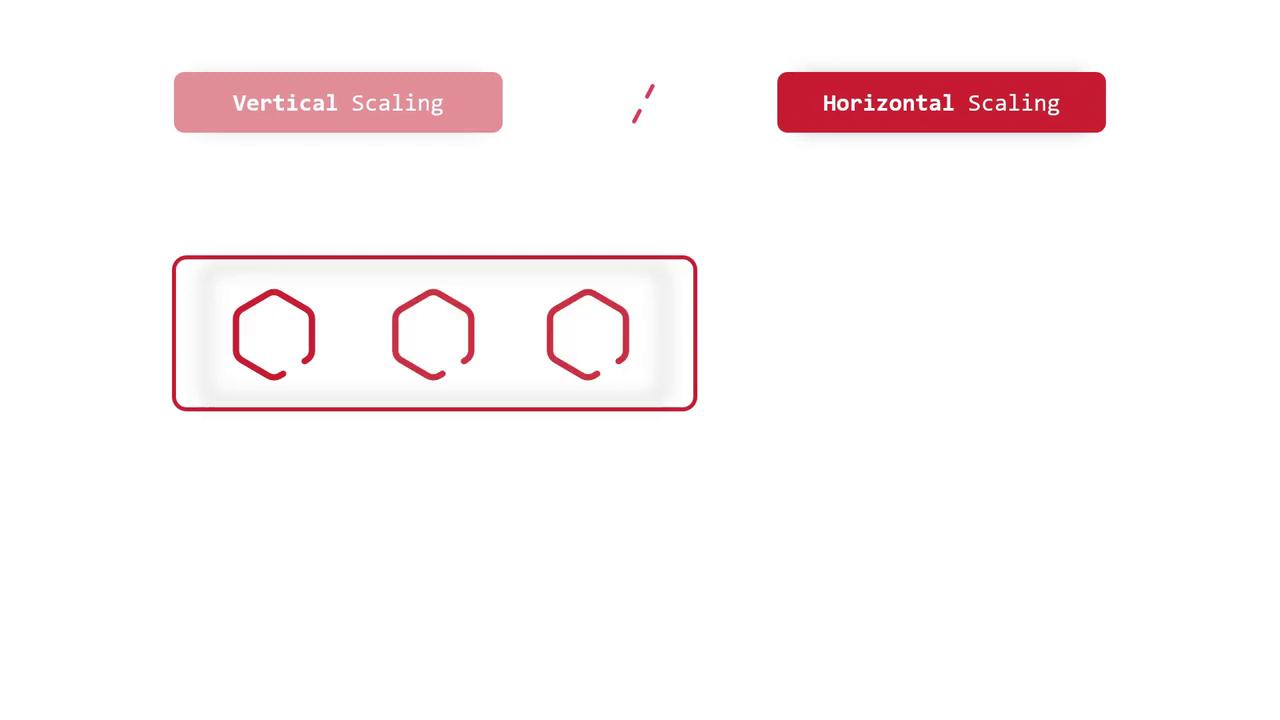

Vertical Scaling

Vertical scaling increases the resources, such as CPU and memory, allocated to a single pod. This approach is useful when a pod hits performance bottlenecks because of high traffic or heavier processing demands. By increasing the pod’s resource allocation, you can sometimes absorb short-term spikes in workload. However, vertical scaling is generally less common and is typically reserved for scenarios where scaling out horizontally is not feasible. It is most effective for applications with limited scalability options, or when stateful workloads prevent easy horizontal partitioning.
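In Kubernetes and OpenShift, vertically scaling a container means raising its `resources.requests` and `resources.limits` values. The sketch below assumes CPU is tracked in millicores and memory in MiB. The helper name `scale_resources` and the choice to set limits equal to requests are illustrative assumptions, not an OpenShift API. Applying such a change to a Deployment's pod template typically triggers a rollout that replaces the running pods.

```python
import math


def scale_resources(cpu_millicores: int, memory_mib: int, factor: float) -> dict:
    """Hypothetical helper: build a container `resources` block scaled by `factor`.

    Assumption: limits are set equal to requests to keep the example simple.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    # Round up so the new allocation is never smaller than the scaled value.
    cpu = math.ceil(cpu_millicores * factor)
    memory = math.ceil(memory_mib * factor)
    quantities = {"cpu": f"{cpu}m", "memory": f"{memory}Mi"}
    return {"requests": dict(quantities), "limits": dict(quantities)}


if __name__ == "__main__":
    # Double a pod that currently requests 250m CPU and 256Mi memory.
    print(scale_resources(250, 256, 2))
```

Here `scale_resources(250, 256, 2)` yields requests and limits of `500m` CPU and `512Mi` memory.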

Horizontal Autoscaling

Horizontal autoscaling dynamically creates and removes pod replicas based on current load. For example, your application may start with a single pod, scale out to three pods when user traffic increases, and scale back down when demand subsides. Because the replica count tracks the load, this method helps maintain performance during peaks and avoids holding idle capacity when traffic is low.
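In OpenShift, this behavior is usually declared with a `HorizontalPodAutoscaler` object. The sketch below builds an `autoscaling/v2` manifest as a Python dict that mirrors the 1-to-3 replica example above. The names `web-hpa` and `web` and the 70% CPU target are hypothetical values chosen for illustration. The JSON it prints could be saved to a file and applied with `oc apply -f`.

```python
import json


def hpa_manifest(name: str, deployment: str, min_replicas: int,
                 max_replicas: int, cpu_target_percent: int) -> dict:
    """Build an autoscaling/v2 HorizontalPodAutoscaler manifest as a plain dict."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            # The workload whose replica count the HPA controls.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            # Target average CPU utilization, as a percentage of pod requests.
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization",
                               "averageUtilization": cpu_target_percent},
                },
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical names: scale Deployment "web" between 1 and 3 replicas.
    print(json.dumps(hpa_manifest("web-hpa", "web", 1, 3, 70), indent=2))
```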

How HPA Works

The Horizontal Pod Autoscaler (HPA) periodically checks the CPU and/or memory usage of the pods that belong to a scalable resource, such as a Deployment, in an OpenShift cluster. When a target is expressed as a utilization percentage, usage is measured relative to the pods' resource requests. The HPA compares the observed metrics with the target values you define and adjusts the number of replicas to bring usage back toward those targets, so the application can handle peak traffic without manual intervention.

The main advantage of HPA is this automation: because the replica count follows real-time usage metrics, the application keeps its performance during high-demand periods and releases resources during low-demand periods.
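The Kubernetes documentation describes the core HPA calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change made when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements only that rule plus min/max clamping; the real controller also applies stabilization windows, handling for missing metrics and unready pods, and other rules that are deliberately left out here. The function name and its default bounds are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified sketch of the HPA replica calculation (not the full controller)."""
    ratio = current_utilization / target_utilization
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Scale proportionally to how far usage is from the target, rounding up.
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(2, 90, 60))  # scale out
    print(desired_replicas(4, 30, 60))  # scale in
```

For instance, two pods averaging 90% CPU against a 60% target give a ratio of 1.5, so the sketch returns three replicas; four pods at 30% against the same target return two.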