AWS Certified AI Practitioner

Guidelines for Responsible AI

Transparent and Explainable Models

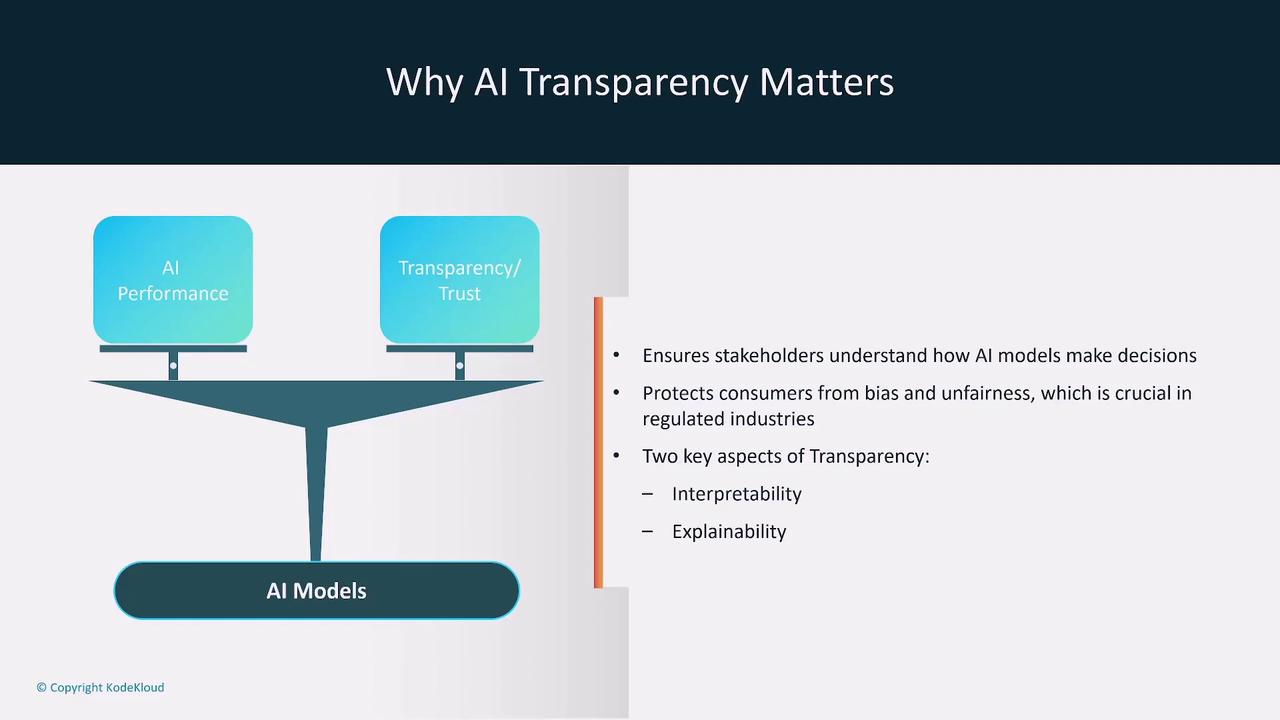

Welcome to this lesson on AI transparency and explainability, two crucial aspects of AI applications in sectors such as finance and healthcare. In this session, we explore why high-performing AI must be balanced against transparency and trust, especially when fairness and bias affect real-world outcomes.

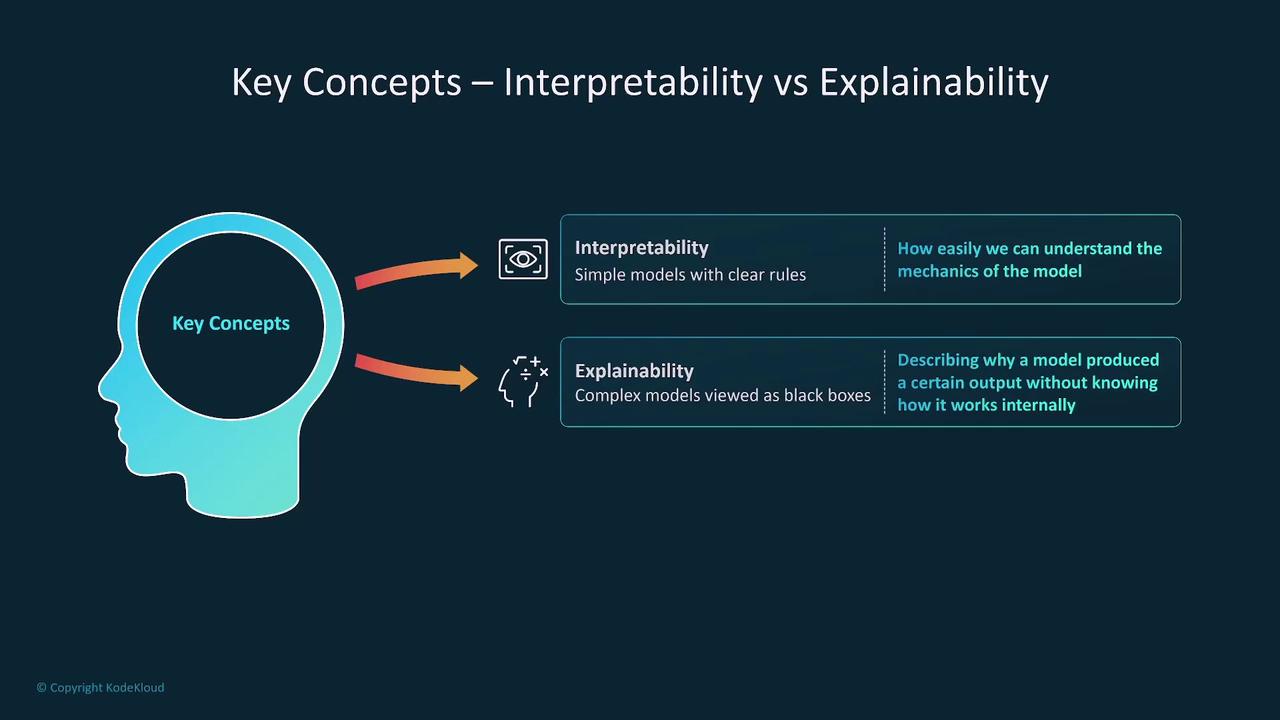

Two central concepts in AI transparency are interpretability and explainability. Interpretability means understanding a model's internal mechanics. In simple models such as decision trees or linear regression, you can trace the decision-making process step by step. Complex models such as deep neural networks, however, behave like a "black box": we can observe the inputs and outputs, but the processes in between remain hidden.
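
To make the contrast concrete, here is a minimal Python sketch of why a linear model counts as interpretable: every prediction is the intercept plus a weighted sum of the inputs, so each feature's contribution can be read off directly. The feature names, weights, and the `explain_linear` helper are hypothetical, chosen only for illustration.

```python
# A hand-specified linear model: every prediction decomposes into
# intercept + sum(weight * feature), so each step can be traced.
# The feature names and weights below are hypothetical.
INTERCEPT = 50.0
WEIGHTS = {"income": 0.4, "debt": -0.8, "years_employed": 2.0}


def explain_linear(features: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the prediction and each feature's additive contribution."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    prediction = INTERCEPT + sum(contributions.values())
    return prediction, contributions


if __name__ == "__main__":
    applicant = {"income": 60.0, "debt": 20.0, "years_employed": 5.0}
    score, parts = explain_linear(applicant)
    for name, value in parts.items():
        print(f"{name:>15}: {value:+.1f}")
    print(f"{'prediction':>15}: {score:.1f}")
```

A deep neural network offers no such direct decomposition, which is why explainability techniques are needed for it.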

Even when we cannot fully interpret a deep neural network, we can still provide explainability by describing how its inputs relate to its outputs. Regulatory frameworks in industries such as finance and healthcare often demand high interpretability; when a model falls short, explainability serves as an approximation of the model's decision-making process.

When we rely on explainability, we treat the model as a black box and study how changes in its inputs affect its outputs. We can usually discern these input-output relationships, but the individual contributions and interactions inside the model remain abstract.
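
One way to explain a black box from the outside is permutation importance, sketched below in Python under stated assumptions: the model is only ever called, never inspected, and a feature's importance is measured as how much the error grows when that feature's values are shuffled. This is a generic model-agnostic technique chosen for illustration, not a specific AWS feature; the `black_box` function and `permutation_importance` helper are hypothetical.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def black_box(row: Row) -> float:
    # Hypothetical stand-in for an opaque model: we only call it.
    x0, x1, _ = row  # the third feature is ignored by this model
    return 3.0 * x0 ** 2 + 0.5 * x1


def permutation_importance(
    predict: Callable[[Row], float],
    rows: list[list[float]],
    targets: list[float],
    n_repeats: int = 5,
    seed: int = 0,
) -> list[float]:
    """Average increase in mean squared error when each column is shuffled."""
    rng = random.Random(seed)

    def mse(data: list[list[float]]) -> float:
        return sum((predict(r) - y) ** 2 for r, y in zip(data, targets)) / len(targets)

    baseline = mse(rows)
    importances = []
    for j in range(len(rows[0])):
        total = 0.0
        for _ in range(n_repeats):
            # Shuffle column j only, breaking its link to the target.
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            total += mse(shuffled) - baseline
        importances.append(total / n_repeats)
    return importances


if __name__ == "__main__":
    gen = random.Random(42)
    rows = [[gen.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
    targets = [black_box(r) for r in rows]
    print(permutation_importance(black_box, rows, targets))
```

The ignored feature scores exactly zero, and the feature with the strongest effect on the output scores highest, even though the explanation never looks inside the model.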

Note

Simpler models are highly transparent and easy to interpret but may not handle complex tasks well. Conversely, complex models often deliver better performance at the cost of transparency.

It is important to consider trade-offs when selecting a model. While simpler models deliver clear insights into decision-making, they might lack the performance capacity required for complex tasks. Additionally, higher transparency can sometimes expose models to security risks, as adversaries might exploit detailed insights into the model's inner workings. In contrast, opaque models force adversaries to rely on outputs alone, potentially enhancing security.

Balancing privacy with transparency is equally critical. Revealing too much about a model’s design or training data could expose proprietary information or sensitive details. While general insights can be shared openly, sensitive information must be carefully managed to maintain both transparency and confidentiality.

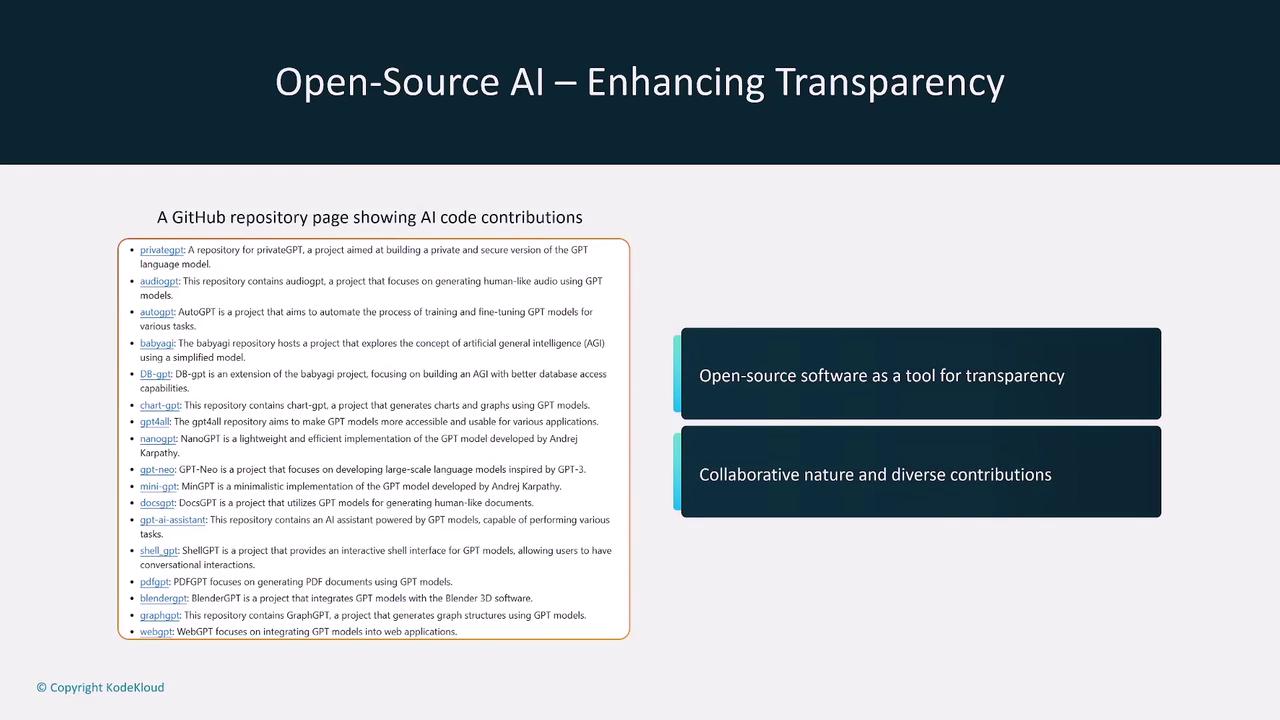

Regulatory standards such as the GDPR and industry-specific guidelines in healthcare and finance heavily influence model selection; in many cases, models must be highly interpretable to meet legal requirements. Open-source development also helps: publishing code on platforms such as GitHub opens it to outside scrutiny, which can help surface bias and promote fairness.

Different companies take different approaches to transparency. AWS, for instance, publishes AI Service Cards that document a service's intended use cases, limitations, and responsible AI design choices. Service cards are available for services such as Amazon Rekognition, Amazon Textract, and Amazon Comprehend.

Similarly, Amazon SageMaker Model Cards document a model's lifecycle, from training through evaluation. SageMaker Data Wrangler helps document and analyze the training data, while SageMaker Clarify detects bias and explains model predictions. For example, SageMaker Clarify can produce partial dependence plots (PDPs) that show how varying a feature such as age changes the model's predictions.
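
The calculation behind a partial dependence plot can be sketched in a few lines of Python: for each candidate value of one feature, overwrite that feature in every row, run the model, and average the predictions. This is a from-scratch illustration of the technique, not SageMaker Clarify's API; `risk_model` and its coefficients are hypothetical.

```python
from typing import Callable, Sequence


def partial_dependence(
    predict: Callable[[list[float]], float],
    rows: Sequence[Sequence[float]],
    feature: int,
    grid: Sequence[float],
) -> list[float]:
    """Average prediction when column `feature` is forced to each grid value."""
    curve = []
    for v in grid:
        preds = []
        for row in rows:
            modified = list(row)  # copy so the original data is untouched
            modified[feature] = v
            preds.append(predict(modified))
        curve.append(sum(preds) / len(preds))
    return curve


def risk_model(row: list[float]) -> float:
    # Hypothetical model: column 0 is age, column 1 is another feature.
    age, other = row
    return 0.02 * age + 0.1 * other


if __name__ == "__main__":
    data = [[25.0, 1.0], [40.0, 0.0], [60.0, 2.0]]
    ages = [20.0, 40.0, 60.0]
    for age, value in zip(ages, partial_dependence(risk_model, data, 0, ages)):
        print(f"age={age:.0f}: average prediction {value:.3f}")
```

Because `risk_model` is linear in age, the resulting curve is a straight line; for a non-linear model, the PDP would show where predictions change most steeply.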

Human-centered AI practices make ethics, fairness, and transparency integral to model design. Amazon Augmented AI (A2I) adds human review to the decision process: low-confidence predictions are routed to human reviewers, who check and correct them. SageMaker Ground Truth supports this approach by enabling human data labeling through Amazon Mechanical Turk or private teams, and the human feedback it collects can also support techniques such as reinforcement learning from human feedback (RLHF).
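
The routing idea behind Amazon A2I can be sketched locally as a confidence threshold. In A2I itself, the activation condition and the reviewer task are configured in a human review workflow; the `ReviewRouter` class, its 0.80 threshold, and the `Prediction` fields below are hypothetical, meant only to show the logic.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]
    source: str = "model"  # "model" or "human"


@dataclass
class ReviewRouter:
    # Hypothetical threshold; in practice it is tuned per use case.
    threshold: float = 0.80
    accepted: list[Prediction] = field(default_factory=list)
    review_queue: list[Prediction] = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        """Accept confident predictions; queue the rest for human review."""
        if pred.confidence >= self.threshold:
            self.accepted.append(pred)
            return "auto"
        self.review_queue.append(pred)
        return "human"

    def apply_review(self, item_id: str, corrected_label: str) -> Prediction:
        """Replace a queued prediction with the reviewer's label."""
        for i, pred in enumerate(self.review_queue):
            if pred.item_id == item_id:
                del self.review_queue[i]
                fixed = Prediction(item_id, corrected_label, pred.confidence, source="human")
                self.accepted.append(fixed)
                return fixed
        raise KeyError(item_id)


if __name__ == "__main__":
    router = ReviewRouter(threshold=0.8)
    print(router.route(Prediction("doc-1", "approved", 0.95)))
    print(router.route(Prediction("doc-2", "approved", 0.55)))
    print(router.apply_review("doc-2", "rejected"))
```

Recording whether each final label came from the model or a human keeps the decision trail auditable, which is part of what makes human-in-the-loop review a transparency tool.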

Additionally, SageMaker Model Monitor continuously monitors models in production, detecting issues such as data drift and bias drift. Complementary services such as Amazon OpenSearch Service, along with databases such as Amazon RDS, Amazon Aurora, Amazon DocumentDB, and Amazon Neptune, enhance transparency by providing search and vector search capabilities for exploring relationships in the data.
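
To show what "data drift" means numerically, here is a Python sketch using the population stability index (PSI), a common drift statistic that compares a feature's distribution in live data against a baseline. PSI is a swapped-in technique chosen for illustration, not necessarily what Model Monitor computes, and the bin count and `eps` floor are assumptions.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a live sample of one feature.

    Bin edges come from the baseline's min/max; live values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps log() defined when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(baseline), proportions(current)
    # Each term is non-negative, so PSI >= 0 and equals 0 for identical bins.
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]
    live = [0.5 + i / 200 for i in range(100)]  # shifted toward higher values
    print(population_stability_index(training, training))
    print(population_stability_index(training, live))
```

Identical samples give a PSI of 0, and the value grows as the live distribution moves away from the baseline.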

This lesson has provided an overview of how transparency, interpretability, and explainability work together to foster trust and compliance in AI models. With these concepts in hand, you are better equipped to select, deploy, and govern AI solutions that meet industry standards and ethical requirements.

Thank you for reading, and we look forward to exploring more advanced topics with you in the next lesson.
