AWS Certified AI Practitioner

Security Compliance and Governance for AI Solutions

Securing AI Systems with AWS Services

Welcome back! In this lesson, we'll explore how to secure AI systems using AWS services. This guide is designed for students with foundational knowledge of cloud services and security. We'll begin with an overview of key security concepts and then cover how AWS divides security responsibilities, IAM best practices, logging, encryption, and network isolation.

The AWS Shared Responsibility Model

Understanding the AWS Shared Responsibility Model is essential. AWS secures the underlying infrastructure—hardware, data centers, virtualization, and networking—while you are accountable for securing everything you configure or access.

AWS has an excellent track record of managing its portion of security. However, you must carefully manage security settings for the services you control. For instance, if you can log into an operating system (as on an EC2 instance), patching it is your responsibility. Similarly, if you install and run a database engine yourself, applying its updates is your task. With managed services like AWS Lambda, AWS operates the runtime environment, so you focus on securing your code and the permissions you grant it.

AWS Identity and Access Management (IAM)

AWS Identity and Access Management (IAM) controls who can access your AWS resources through users, groups, roles, and policies. Enabling multi-factor authentication (MFA) adds a second sign-in factor, so a stolen password alone is not enough to gain access. Assign permissions carefully when configuring services such as Amazon Bedrock and Amazon SageMaker.

IAM policies are JSON documents, written by hand or built with the visual editor in the AWS console, that define which actions are allowed or denied on which AWS resources. Always adhere to the principle of least privilege, granting only the permissions necessary for each task.

Below is an example of an IAM policy that allows listing the contents of a specific S3 bucket and getting or putting objects within it. The first statement grants s3:ListBucket on the bucket itself. The second grants s3:GetObject (read object data) and s3:PutObject (upload or overwrite object data) on every object in the bucket, which is what the trailing /* in the resource ARN means. IAM policy JSON does not support comments, so the explanation lives here rather than inside the policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "s3:ListBucket"
          ],
          "Resource": [
            "arn:aws:s3:::example-bucket"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "s3:GetObject",
            "s3:PutObject"
          ],
          "Resource": [
            "arn:aws:s3:::example-bucket/*"
          ]
        }
      ]
    }

Exam Tip

This sample IAM policy is provided as a reference and is not mandatory for the AWS AI Practitioner exam.
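
To make the least-privilege idea concrete, here is a minimal Python sketch that builds the same kind of bucket-scoped policy as a dictionary and flags any wildcard entries. The function names build_bucket_policy and find_wildcards are illustrative assumptions, not part of any AWS SDK, and the check assumes every statement lists its actions and resources as arrays.

```python
import json


def build_bucket_policy(bucket_name: str) -> dict:
    """Return an identity-based policy scoped to a single S3 bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reads and writes apply to the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


def find_wildcards(policy: dict) -> list[str]:
    """Return actions equal to "*" or ending in ":*", and resources equal to "*".

    Assumes "Action" and "Resource" are lists in every statement.
    """
    findings = []
    for statement in policy["Statement"]:
        for action in statement["Action"]:
            if action == "*" or action.endswith(":*"):
                findings.append(action)
        for resource in statement["Resource"]:
            if resource == "*":
                findings.append(resource)
    return findings


if __name__ == "__main__":
    policy = build_bucket_policy("example-bucket")
    print(json.dumps(policy, indent=2))
    print("Overly broad entries:", find_wildcards(policy))
```

A check like this is only a rough lint. It does not replace reviewing what each permitted action actually allows.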

Avoid using the AWS root user for everyday tasks since it has unrestricted access. Instead, create IAM users and assign them to appropriately named groups (e.g., "Developer-ProjectB" or "Read-Only") to simplify permission management and enhance security.

IAM roles provide temporary access: an entity assumes the role and receives short-lived credentials that carry the role's permissions. Each role comprises a permissions policy (what the role can do) and a trust policy (who may assume it). For example, to assume a role in another account, that role's trust policy must explicitly trust your account or IAM user, and your own identity must be allowed to call sts:AssumeRole. Think of roles as temporary security identities, similar to using the "sudo" command in Linux.
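
As a rough illustration of the trust-policy half of a role, the sketch below builds a cross-account trust policy as a Python dictionary. The helper name build_trust_policy and the account ID 111122223333 are placeholders chosen for this example. The optional MFA condition uses the aws:MultiFactorAuthPresent condition key.

```python
import json


def build_trust_policy(trusted_account_id: str, require_mfa: bool = False) -> dict:
    """Return a trust policy letting principals in another account assume the role."""
    statement = {
        "Effect": "Allow",
        # Trusting the account root delegates the decision to that account's
        # own IAM policies: its users still need permission to call sts:AssumeRole.
        "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
        "Action": "sts:AssumeRole",
    }
    if require_mfa:
        # Only callers who signed in with MFA may assume the role.
        statement["Condition"] = {"Bool": {"aws:MultiFactorAuthPresent": "true"}}
    return {"Version": "2012-10-17", "Statement": [statement]}


if __name__ == "__main__":
    # 111122223333 is a placeholder account ID.
    print(json.dumps(build_trust_policy("111122223333", require_mfa=True), indent=2))
```

The permissions policy is attached to the role separately and follows the same structure as the S3 policy shown earlier.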

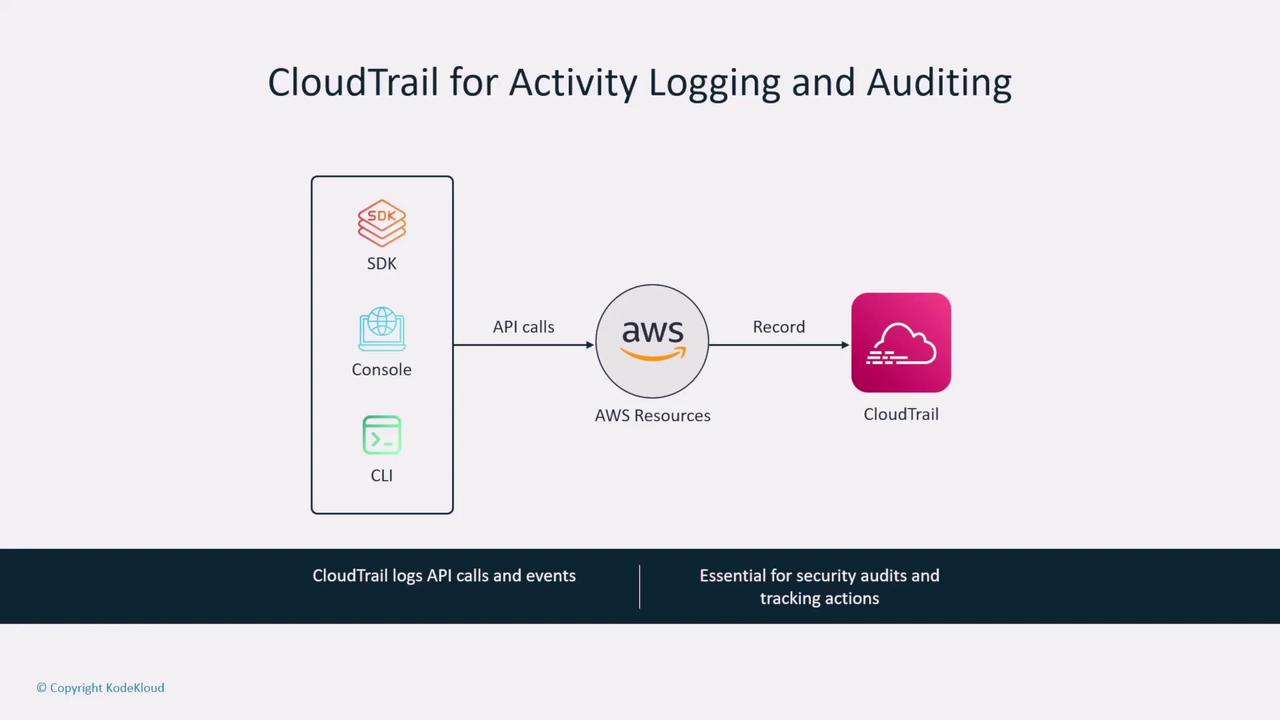

Logging with AWS CloudTrail

AWS CloudTrail records API calls made in your AWS account, whether they come from the AWS CLI, the SDKs, or the console. By default it captures management events; data events, such as S3 object-level operations, must be enabled separately. CloudTrail does not log activity inside an operating system or a database. Storing these logs in an S3 bucket is critical for auditing and forensic analysis.

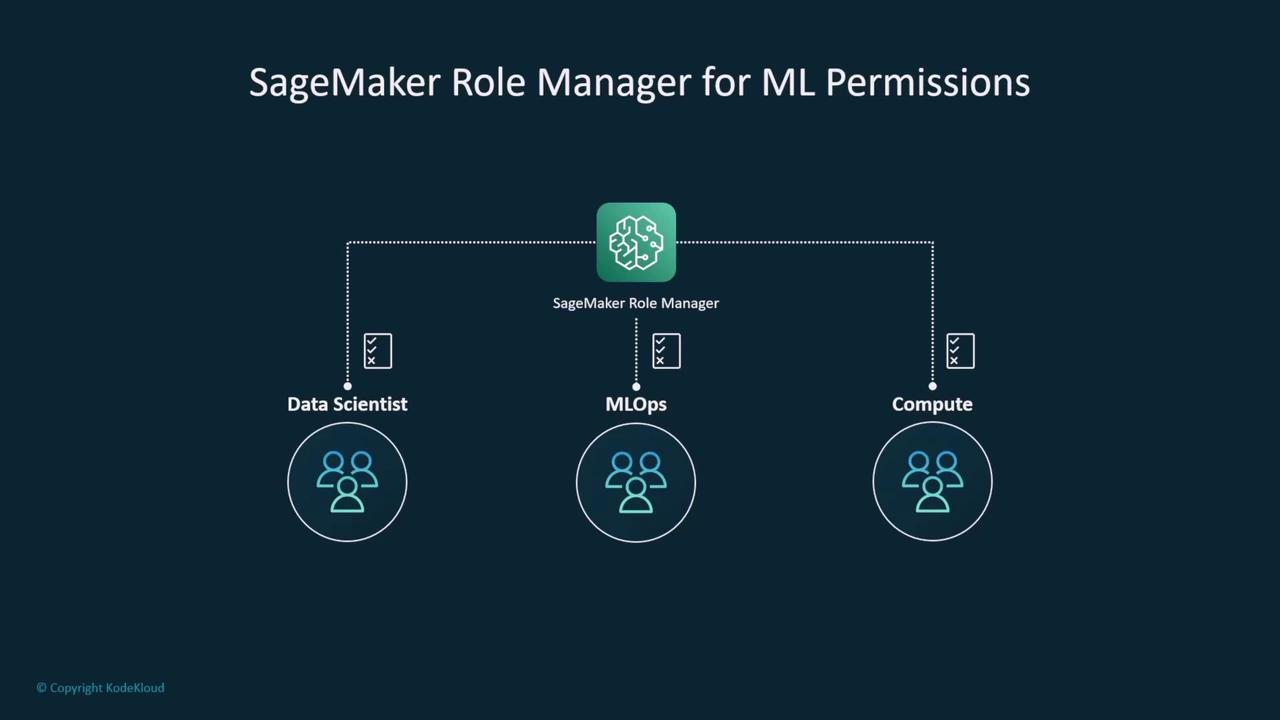

It is best practice to enable CloudTrail in every account and configure it to write logs to a secured S3 bucket, ideally in a separate account so that the logs cannot be altered from the account being audited. Block public access to these buckets. Also define separate roles (e.g., those used by SageMaker) for distinct functions such as data science, operations, and compute, so that each logged action maps to a clearly scoped identity.
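
Once logs are in S3, auditing usually starts with filtering records by service. The sketch below assumes a CloudTrail log file has already been downloaded and decompressed into a dictionary with a top-level "Records" list. The two sample records, including the role names and account ID, are invented for illustration, and events_for_service is a hypothetical helper.

```python
import json

# Invented sample data shaped like a CloudTrail log file (not real events).
SAMPLE_LOG = json.loads("""
{"Records": [
  {"eventTime": "2024-01-01T12:00:00Z",
   "eventSource": "sagemaker.amazonaws.com",
   "eventName": "CreateTrainingJob",
   "userIdentity": {"type": "AssumedRole",
                    "arn": "arn:aws:sts::111122223333:assumed-role/DataScience/alice"}},
  {"eventTime": "2024-01-01T12:05:00Z",
   "eventSource": "s3.amazonaws.com",
   "eventName": "PutBucketPolicy",
   "userIdentity": {"type": "AssumedRole",
                    "arn": "arn:aws:sts::111122223333:assumed-role/Operations/bob"}}
]}
""")


def events_for_service(log: dict, event_source: str) -> list[tuple[str, str, str]]:
    """Return (time, API call, caller ARN) for each record from one service."""
    rows = []
    for record in log.get("Records", []):
        if record.get("eventSource") == event_source:
            caller = record.get("userIdentity", {}).get("arn", "unknown")
            rows.append((record["eventTime"], record["eventName"], caller))
    return rows


if __name__ == "__main__":
    for row in events_for_service(SAMPLE_LOG, "sagemaker.amazonaws.com"):
        print(row)
```

Because each function uses its own role, the caller ARN in a record shows which function made the call.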

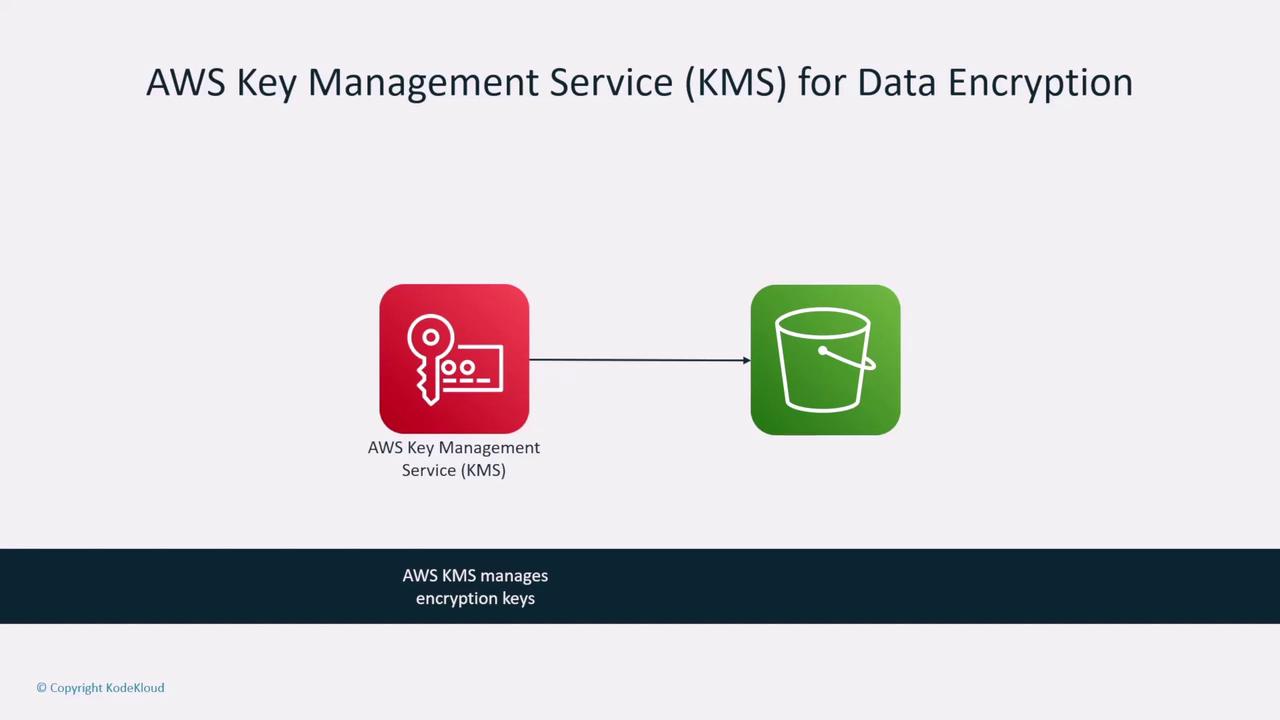

Data Encryption with AWS KMS and TLS

Another critical service is AWS Key Management Service (AWS KMS), which creates and manages the encryption keys used to protect data at rest. AWS supports several encryption approaches, from client-side encryption to server-side encryption with KMS keys (the latter is often used for disk storage).
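
As one example of server-side encryption with KMS, the sketch below assembles the arguments for an S3 upload that asks S3 to encrypt the object with a specific KMS key. The helper sse_kms_put_args and the key ARN are assumptions made for illustration. The parameter names match those accepted by the S3 PutObject operation, and the call itself is shown only in a comment because it needs boto3 and AWS credentials.

```python
def sse_kms_put_args(bucket: str, key: str, body: bytes, kms_key_id: str) -> dict:
    """Return PutObject arguments requesting server-side encryption with a KMS key."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        # Ask S3 to encrypt the object at rest using AWS KMS ...
        "ServerSideEncryption": "aws:kms",
        # ... with this specific key (placeholder ARN in the example below).
        "SSEKMSKeyId": kms_key_id,
    }


if __name__ == "__main__":
    args = sse_kms_put_args(
        bucket="example-bucket",
        key="training/data.csv",
        body=b"feature,label\n1,0\n",
        kms_key_id="arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID",
    )
    # With boto3 installed and credentials configured, the upload would be:
    #   boto3.client("s3").put_object(**args)
    print({name: value for name, value in args.items() if name != "Body"})
```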

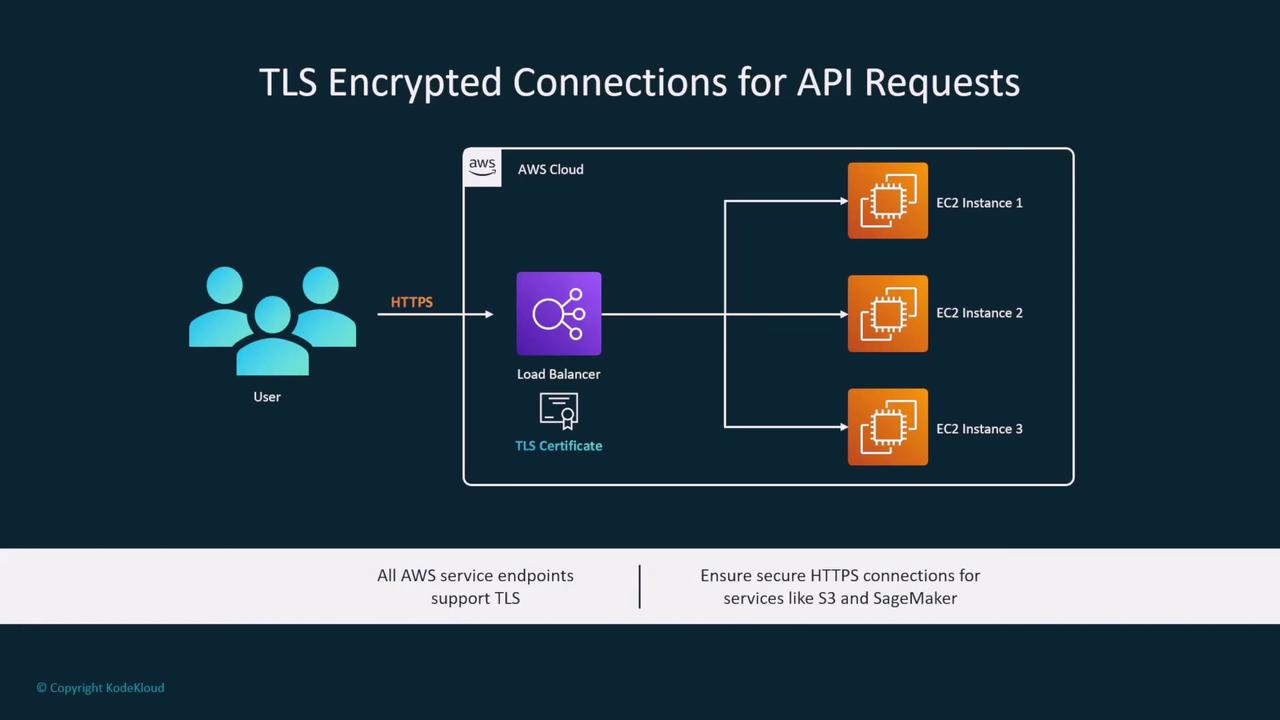

For data in transit, AWS offers robust encryption methods. Load balancers can be configured with TLS/SSL certificates to ensure secure connections between end users and the load balancer. Moreover, you can encrypt the traffic between the load balancer and backend EC2 instances, based on your security needs.
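
On the client side of such a connection, an application can refuse anything older than a chosen TLS version. This is a minimal sketch using Python's standard ssl module. The function name strict_tls_context and the choice of TLS 1.2 as the floor are assumptions for this example, not AWS requirements stated in this lesson.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Return a client context that verifies certificates and requires TLS 1.2+."""
    # The default context already enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Reject handshakes that negotiate anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


if __name__ == "__main__":
    context = strict_tls_context()
    # The context can be passed to a client, for example:
    #   urllib.request.urlopen("https://example.com", context=context)
    print(context.minimum_version, context.verify_mode, context.check_hostname)
```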

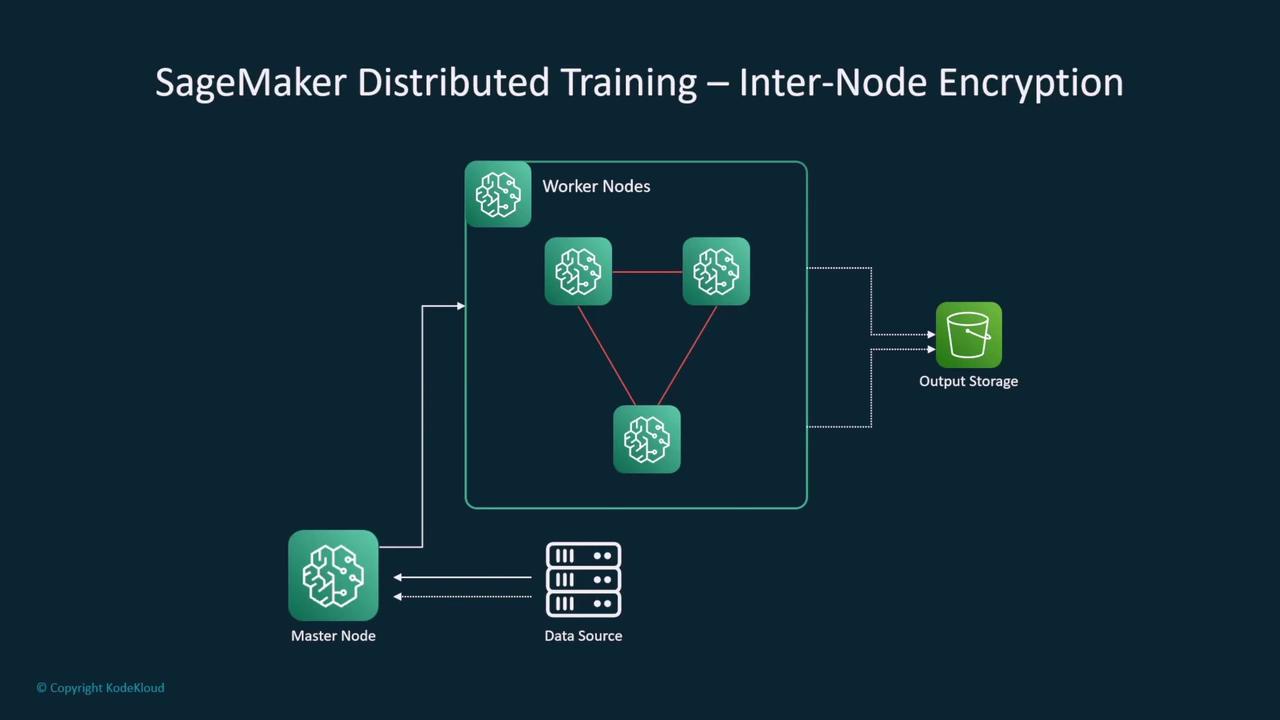

For distributed training jobs in SageMaker, you can enforce inter-node encryption to secure communications between worker nodes—an essential feature when transmitting sensitive data.
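
In the SageMaker CreateTrainingJob API, inter-node encryption is controlled by the EnableInterContainerTrafficEncryption field. The sketch below treats a training job request as a plain dictionary and checks or sets that field. The two helper functions are hypothetical, and the request fragment is deliberately incomplete.

```python
def missing_inter_node_encryption(request: dict) -> bool:
    """True when a multi-instance job does not enable inter-container encryption."""
    instance_count = request.get("ResourceConfig", {}).get("InstanceCount", 1)
    encrypted = request.get("EnableInterContainerTrafficEncryption", False)
    return instance_count > 1 and not encrypted


def with_inter_node_encryption(request: dict) -> dict:
    """Return a copy of the request with inter-container encryption switched on."""
    secured = dict(request)
    secured["EnableInterContainerTrafficEncryption"] = True
    return secured


if __name__ == "__main__":
    # Partial request: a real CreateTrainingJob call needs more fields.
    request = {"ResourceConfig": {"InstanceCount": 4}}
    print(missing_inter_node_encryption(request))
    print(missing_inter_node_encryption(with_inter_node_encryption(request)))
```

Encrypting inter-node traffic can lengthen training time, so it is usually enabled where the data's sensitivity justifies it.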

Securing Your Network in AWS

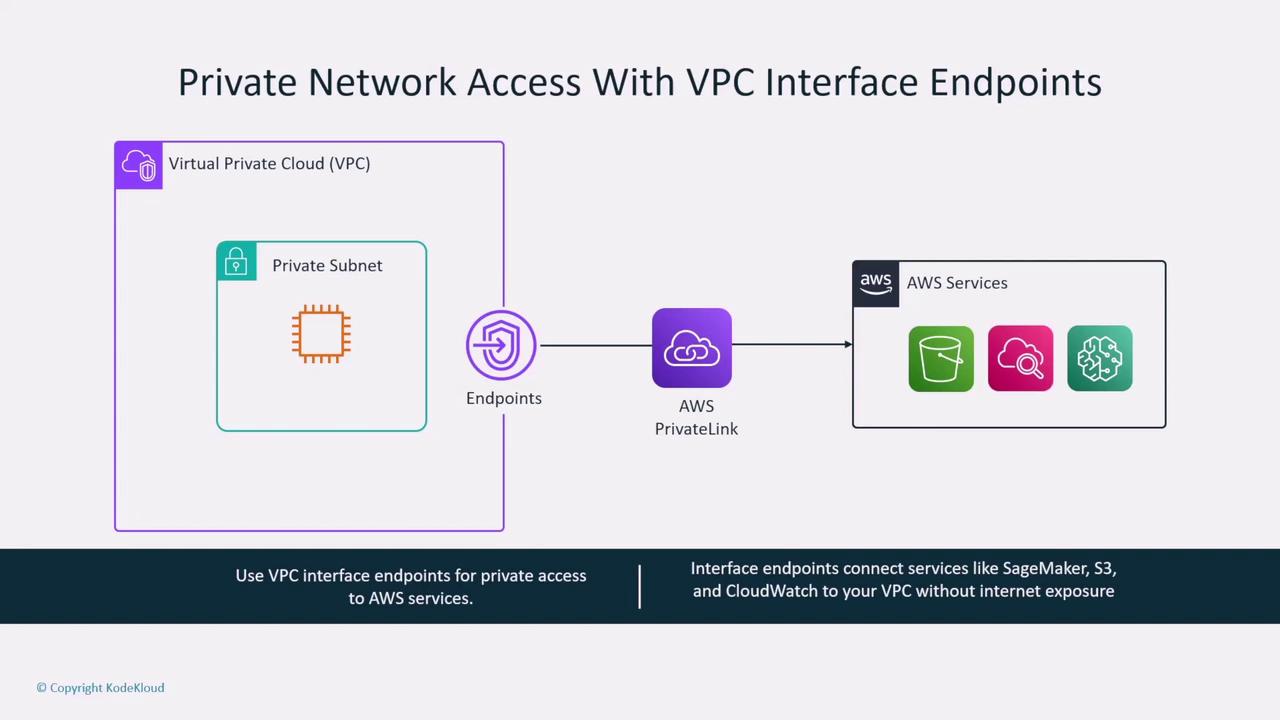

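Each AWS Region contains multiple Availability Zones, and each Availability Zone consists of one or more data centers within that geographic area. By default, AWS service APIs are reached through public endpoints. To tighten network security, place resources in private subnets and use VPC endpoints (interface endpoints are powered by AWS PrivateLink) so that traffic to AWS services stays on the AWS network. A NAT gateway serves a different purpose: it gives resources in private subnets outbound-only access to the internet, so traffic that passes through it does leave your VPC.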

When launching SageMaker instances within customer-managed VPCs, it is crucial to control network access through built-in security groups, network ACLs, or even an additional network firewall. AWS also provides VPC interface endpoints (and gateway endpoints for S3 and DynamoDB) to securely access AWS services without using public internet routes.
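
One common way to pair a VPC endpoint with S3 is a bucket policy that denies requests arriving by any other path. The sketch below builds such a policy using the aws:SourceVpce condition key. The helper name restrict_bucket_to_endpoint and the endpoint ID vpce-1a2b3c4d are placeholders chosen for this example.

```python
import json


def restrict_bucket_to_endpoint(bucket_name: str, vpc_endpoint_id: str) -> dict:
    """Return a bucket policy denying S3 access unless it comes via one VPC endpoint."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAccessOutsideVpcEndpoint",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket and the objects inside it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # The deny applies whenever the request did not use this endpoint.
                "Condition": {"StringNotEquals": {"aws:SourceVpce": vpc_endpoint_id}},
            }
        ],
    }


if __name__ == "__main__":
    # vpce-1a2b3c4d is a placeholder endpoint ID.
    print(json.dumps(restrict_bucket_to_endpoint("example-bucket", "vpce-1a2b3c4d"), indent=2))
```

A policy like this also blocks console access to the bucket from outside the VPC, so test it on a non-critical bucket first.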

Summary

In this lesson, we covered the AWS security building blocks for AI workloads: the Shared Responsibility Model, access control with IAM, logging with CloudTrail, encryption of data at rest and in transit, and network isolation. This high-level overview provides the foundation needed to design secure AI systems on AWS.

Thank you for reading! For further information or any clarifications, please refer to the AWS Documentation or join discussions in the AWS forums. We look forward to seeing you in the next lesson.

Next Steps

Explore additional AWS security features and real-world use cases to deepen your understanding and expertise in securing AI systems.
