Challenges in Managing Large Repositories

Large repos combine deep commit histories with sizable binary files, which leads to:

- Slow `git clone` and `git fetch`

- Long checkout times

- Increased local disk usage

- Stale objects and packfiles

1. Shallow Cloning

A shallow clone limits the commit history you download, drastically reducing clone time and disk usage. Pass `--depth <number-of-commits>` to `git clone`, replacing `<number-of-commits>` with the number of recent commits you need.

Shallow clones omit older history, so `git log --follow` and certain bisecting operations may not work as expected.
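The effect of `--depth` can be seen on a throwaway repository. The sketch below is illustrative only: the temporary paths, the demo identity, and the five-commit history are assumptions made up for the example, and a `file://` URL is used because Git only applies `--depth` when it goes through a real transport.

```shell
set -eu
# Placeholder identity and temp directory, used only for this demo.
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT

# Build a small origin repository with five commits.
git init -q "$tmp/origin"
for i in 1 2 3 4 5; do
  echo "revision $i" > "$tmp/origin/file.txt"
  git -C "$tmp/origin" add file.txt
  git -C "$tmp/origin" commit -q -m "commit $i"
done

# Clone only the two most recent commits.
git clone -q --depth 2 "file://$tmp/origin" "$tmp/shallow"

full=$(git -C "$tmp/origin" rev-list --count HEAD)
shallow=$(git -C "$tmp/shallow" rev-list --count HEAD)
echo "origin: $full commits, shallow clone: $shallow commits"

# If the full history turns out to be needed, fetch the rest later.
git -C "$tmp/shallow" fetch -q --unshallow
restored=$(git -C "$tmp/shallow" rev-list --count HEAD)
echo "after --unshallow: $restored commits"
```

Starting shallow and running `git fetch --unshallow` only when history is required keeps the common case fast without making the limitation permanent.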

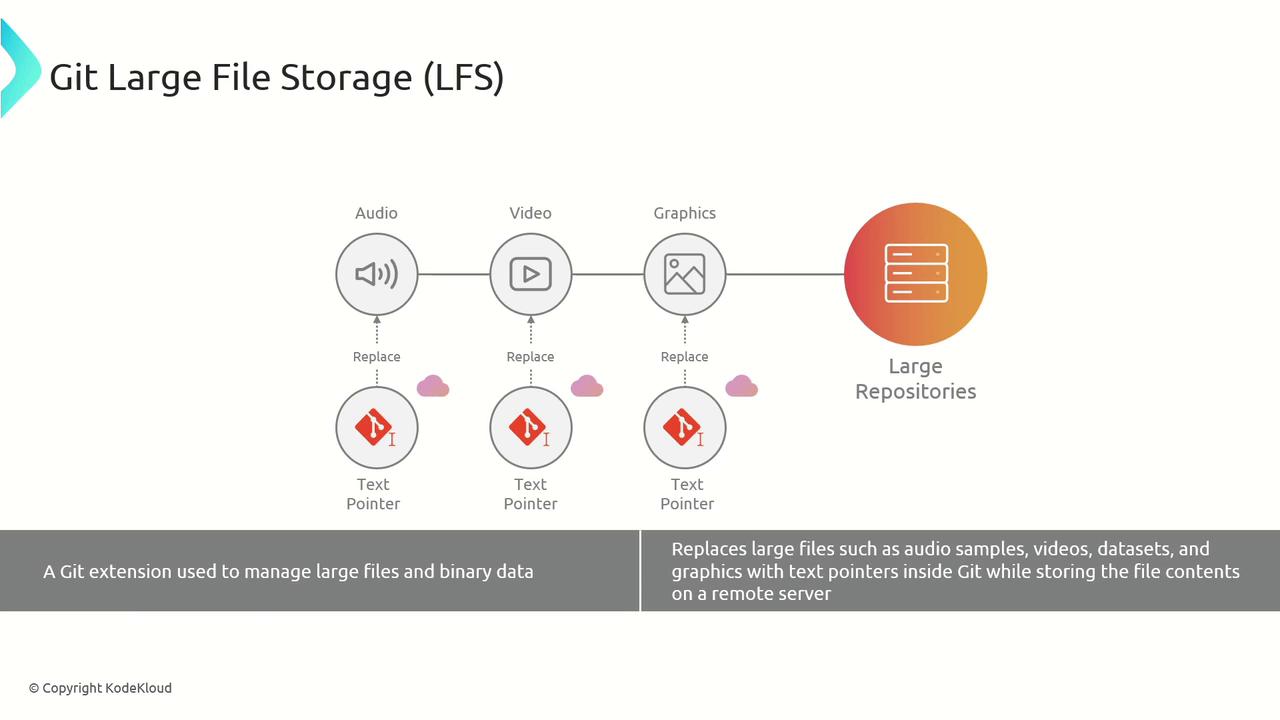

2. Git Large File Storage (LFS)

Git LFS offloads large binary files to a remote LFS server while storing lightweight text pointers in your repo’s history.

Git LFS can incur additional bandwidth and storage costs on hosted services. Check your LFS quota before adopting large-scale storage.
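A minimal sketch of enabling LFS tracking in a fresh repository might look like the following. It assumes the `git-lfs` extension is installed (the script skips the demo otherwise); the repository path and the `*.mp4` pattern are placeholders chosen for illustration.

```shell
set -eu
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
repo="$tmp/media-repo"   # hypothetical repository for the demo
git init -q "$repo"

if git lfs version >/dev/null 2>&1; then
  # Install the LFS filters and hooks for this repository only.
  git -C "$repo" lfs install --local
  # Route every .mp4 file through LFS; this writes a rule to .gitattributes.
  git -C "$repo" lfs track "*.mp4"
  # Commit .gitattributes so collaborators get the same tracking rule.
  git -C "$repo" add .gitattributes
  cat "$repo/.gitattributes"
else
  echo "git-lfs is not installed; skipping the demo" >&2
fi
```

The tracking rule lives in `.gitattributes`, so it is versioned with the repository and applies to anyone who clones it with Git LFS installed.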

3. Alternative: Git-Fat

Git-Fat is a lightweight alternative to LFS that stores large assets in a separate backend (e.g., S3, your own server) and keeps only references in Git.

4. Cross-Repository Sharing

Extract common libraries or components into a shared repo or package registry to avoid duplication across multiple projects.

- Create a `common-ui` or `shared-utils` repository

- Publish versions to npm, PyPI, or a private registry

- Reference in other repos as a dependency
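When a package registry is not an option, a Git submodule is one way to reference a shared repository from another project. The sketch below is an assumption-laden illustration: the `shared-utils` and `app` repositories, the `vendor/` path, and the demo identity are all invented, and `protocol.file.allow=always` is set only because recent Git versions block local-path submodule clones by default.

```shell
set -eu
# Placeholder identity and temp directory, used only for this demo.
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT

# A hypothetical shared library repository.
git init -q "$tmp/shared-utils"
echo "shared helpers" > "$tmp/shared-utils/README.md"
git -C "$tmp/shared-utils" add README.md
git -C "$tmp/shared-utils" commit -q -m "Initial shared utilities"

# A consuming project with its own history.
git init -q "$tmp/app"
echo "app" > "$tmp/app/README.md"
git -C "$tmp/app" add README.md
git -C "$tmp/app" commit -q -m "Initial app commit"

# Pin the shared repo at a specific commit inside the app.
git -C "$tmp/app" -c protocol.file.allow=always \
  submodule --quiet add "$tmp/shared-utils" vendor/shared-utils
git -C "$tmp/app" commit -q -m "Add shared-utils as a submodule"

# Show the pinned commit and path.
git -C "$tmp/app" submodule status
```

A submodule pins an exact commit, which gives reproducible builds but means every consumer must update the pointer explicitly; a registry with version ranges trades that precision for convenience.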

5. Sparse Checkout

Sparse checkout lets you clone the full repo, but check out only specific directories or files to your working tree.
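As a rough illustration, the following sketch builds a small repository with `docs/` and `src/` directories and then checks out only `docs/`. The directory names, file contents, and demo identity are assumptions for the example.

```shell
set -eu
# Placeholder identity and temp directory, used only for this demo.
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT

# Origin repository with two top-level directories.
git init -q "$tmp/origin"
mkdir -p "$tmp/origin/docs" "$tmp/origin/src"
echo "user guide" > "$tmp/origin/docs/guide.md"
echo "int main(void) { return 0; }" > "$tmp/origin/src/main.c"
git -C "$tmp/origin" add .
git -C "$tmp/origin" commit -q -m "Add docs and src"

# --sparse clones all history but starts with a minimal working tree.
git clone -q --sparse "$tmp/origin" "$tmp/work"

# Materialise only the docs directory.
git -C "$tmp/work" sparse-checkout set docs

# src/ is still in the repository's history, just not on disk.
ls "$tmp/work"
```

Running `git sparse-checkout set` again with a different list of directories changes what is present in the working tree without re-cloning.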

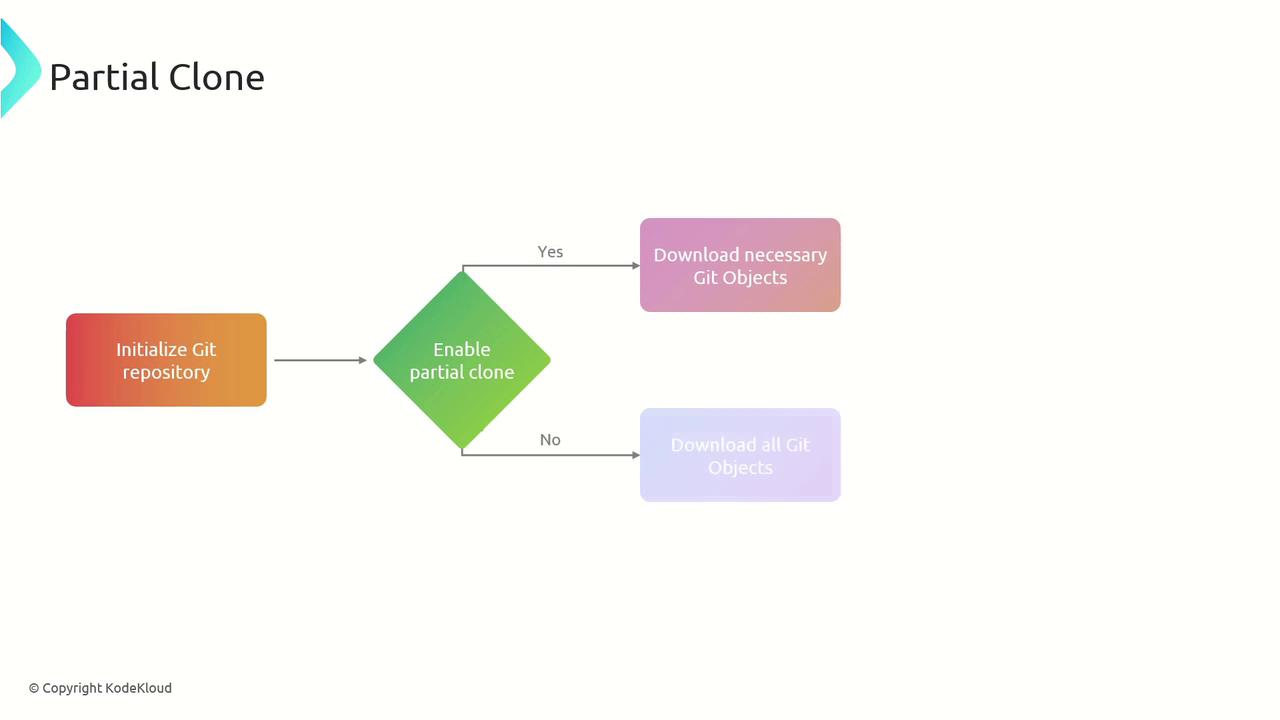

6. Partial Clone

A partial clone defers downloading large Git objects until they’re actually needed, reducing initial clone size.
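The deferred download can be observed locally. In this sketch the origin repository, file names, and demo identity are invented, and the two `uploadpack.*` settings are enabled on the origin only so that a plain `file://` remote accepts filtered and on-demand requests; hosted services that support partial clone typically handle this on the server side.

```shell
set -eu
# Placeholder identity and temp directory, used only for this demo.
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT

# Origin with one small text file and a 1 MiB stand-in for a large binary.
git init -q "$tmp/origin"
echo "small text file" > "$tmp/origin/notes.txt"
head -c 1048576 /dev/zero > "$tmp/origin/asset.bin"
git -C "$tmp/origin" add .
git -C "$tmp/origin" commit -q -m "Add notes and a large asset"

# Let this local origin serve filtered clones and on-demand object requests.
git -C "$tmp/origin" config uploadpack.allowFilter true
git -C "$tmp/origin" config uploadpack.allowAnySHA1InWant true

# Clone commits and trees only; --no-checkout keeps blobs from being fetched yet.
git clone -q --no-checkout --filter=blob:none "file://$tmp/origin" "$tmp/partial"

# Objects prefixed with '?' are known to the clone but not downloaded.
missing=$(git -C "$tmp/partial" rev-list --objects --missing=print HEAD | grep -c '^?' || true)
echo "blobs not yet downloaded: $missing"

# Reading a file fetches just that blob from the remote.
git -C "$tmp/partial" cat-file -p HEAD:notes.txt
after=$(git -C "$tmp/partial" rev-list --objects --missing=print HEAD | grep -c '^?' || true)
echo "blobs still missing after reading notes.txt: $after"
```

Because only the requested blob is fetched, the large asset stays on the server until something actually needs it.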

7. Background Prefetch

Enable background prefetch to automatically download Git objects from remotes on a schedule (e.g., hourly), so that an interactive `git fetch` has little left to transfer and runs almost instantly.
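One way to see what prefetch does is to run Git's `prefetch` maintenance task once by hand. The sketch below uses invented repositories and a demo identity; it deliberately does not call `git maintenance start`, which would schedule the task in the background by editing the user's system scheduler.

```shell
set -eu
# Placeholder identity and temp directory, used only for this demo.
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT

# Origin repository and a working clone.
git init -q "$tmp/origin"
echo "v1" > "$tmp/origin/file.txt"
git -C "$tmp/origin" add file.txt
git -C "$tmp/origin" commit -q -m "first"
git clone -q "file://$tmp/origin" "$tmp/work"

# A new commit lands upstream after the clone.
echo "v2" > "$tmp/origin/file.txt"
git -C "$tmp/origin" commit -q -am "second"
new=$(git -C "$tmp/origin" rev-parse HEAD)

# Run the prefetch task once in the foreground.
# `git maintenance start` would schedule this task to run in the background.
git -C "$tmp/work" maintenance run --task=prefetch

# Prefetched objects are stored under refs/prefetch/, leaving
# refs/remotes/ untouched until the next real `git fetch`.
git -C "$tmp/work" for-each-ref refs/prefetch/
git -C "$tmp/work" cat-file -e "$new" && echo "new commit is already local"
```

Since the objects are already on disk, a later `git fetch` mostly just updates the remote-tracking refs.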

Strategy Overview

| Strategy | Use Case | Example Command |
|---|---|---|
| Shallow Clone | Speed up clones with limited history | `git clone --depth 10 https://...` |
| Git LFS | Manage large binaries (audio, video, graphics) | `git lfs track "*.mp4"` |
| Git-Fat | Alternative external storage for large assets | `git-fat init` |
| Cross-Repository Sharing | Reuse shared code across multiple projects | Publish to npm/PyPI or use `git submodule` |
| Sparse Checkout | Check out only needed directories | `git sparse-checkout set docs/` |
| Partial Clone | Delay blob downloads until required | `git clone --filter=blob:none` |
| Background Prefetch | Automate object prefetch to speed up interactive fetch | `git maintenance start` |