Mitigating AI Risks

GitHub Copilot accelerates development with context-aware suggestions, acting as a “pair programmer” that predicts your next line. However, relying on AI can introduce hidden vulnerabilities, bias, and non-compliant implementations. In this guide, we explore why these risks matter and present a governance framework to keep Copilot’s assistance secure, transparent, and reliable.

Risks of AI-Generated Code

The table below summarizes the two main risks of integrating Copilot into your workflow:

| Risk | Description | Potential Impact |
|---|---|---|
| Lack of Transparency | Suggestions originate from a black box with unknown rationale. | Inefficient algorithms; unhandled edge cases. |
| Unintended Outcomes | Models inherit biases or insecure coding patterns from repos. | Data leaks, compliance violations (e.g., GDPR). |

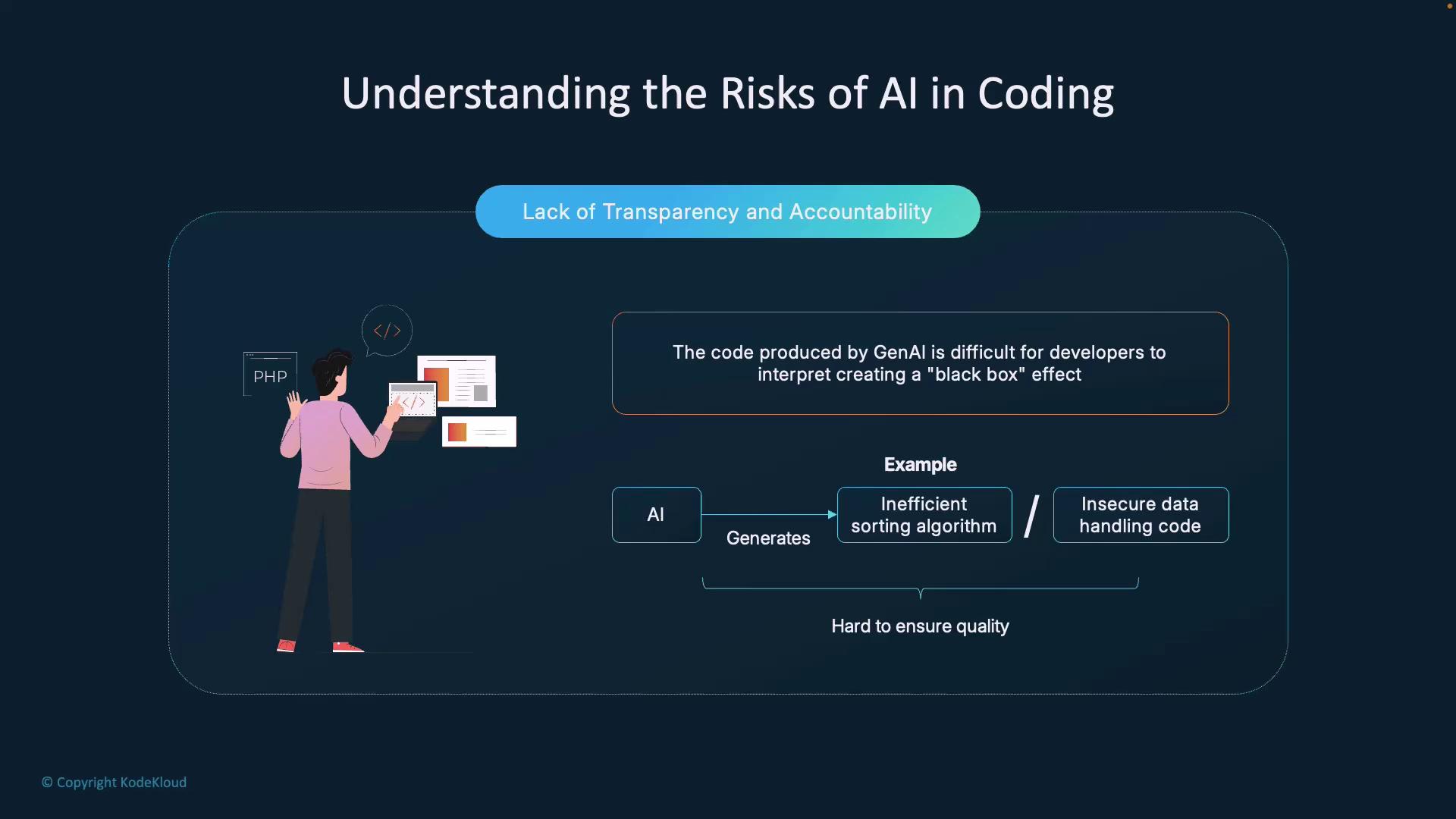

1. Lack of Transparency

Copilot can rapidly scaffold solutions, but you rarely see how or why an approach was chosen. For example, it might suggest a quicksort with a fixed pivot choice, which degrades from O(n log n) to O(n²) comparisons on already-sorted input and slows noticeably on large arrays. Without manual review, inefficiencies like this slip into production.
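To make the worst-case concern concrete, here is a minimal sketch that counts comparisons for two pivot strategies on already-sorted input. It assumes the risky suggestion always picks the first element as the pivot; that choice, and the helper names `first_element`, `random_element`, and `count_comparisons`, are illustrative assumptions rather than anything Copilot is known to produce.

```python
import random


def quicksort(items, choose_pivot, counter):
    """Return a sorted copy; counter[0] tallies one comparison per
    non-pivot element at every partition step."""
    if len(items) <= 1:
        return list(items)
    pivot_index = choose_pivot(items)
    pivot = items[pivot_index]
    rest = items[:pivot_index] + items[pivot_index + 1:]
    counter[0] += len(rest)
    smaller = [x for x in rest if x < pivot]
    larger = [x for x in rest if x >= pivot]
    return (quicksort(smaller, choose_pivot, counter)
            + [pivot]
            + quicksort(larger, choose_pivot, counter))


def first_element(items):
    # Fixed pivot: the pattern a reviewer should question.
    return 0


def random_element(items):
    # Randomized pivot: makes the quadratic case very unlikely.
    return random.randrange(len(items))


def count_comparisons(data, choose_pivot):
    counter = [0]
    result = quicksort(data, choose_pivot, counter)
    assert result == sorted(data)
    return counter[0]


already_sorted = list(range(300))
naive_cost = count_comparisons(already_sorted, first_element)
random.seed(0)
randomized_cost = count_comparisons(already_sorted, random_element)
print(f"fixed pivot: {naive_cost} comparisons, random pivot: {randomized_cost}")
```

With a fixed first-element pivot, every partition of sorted input peels off a single element, so the comparison count grows quadratically. A reviewer who knows this can ask for a randomized or median-of-three pivot, or simply for the language's built-in sort.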

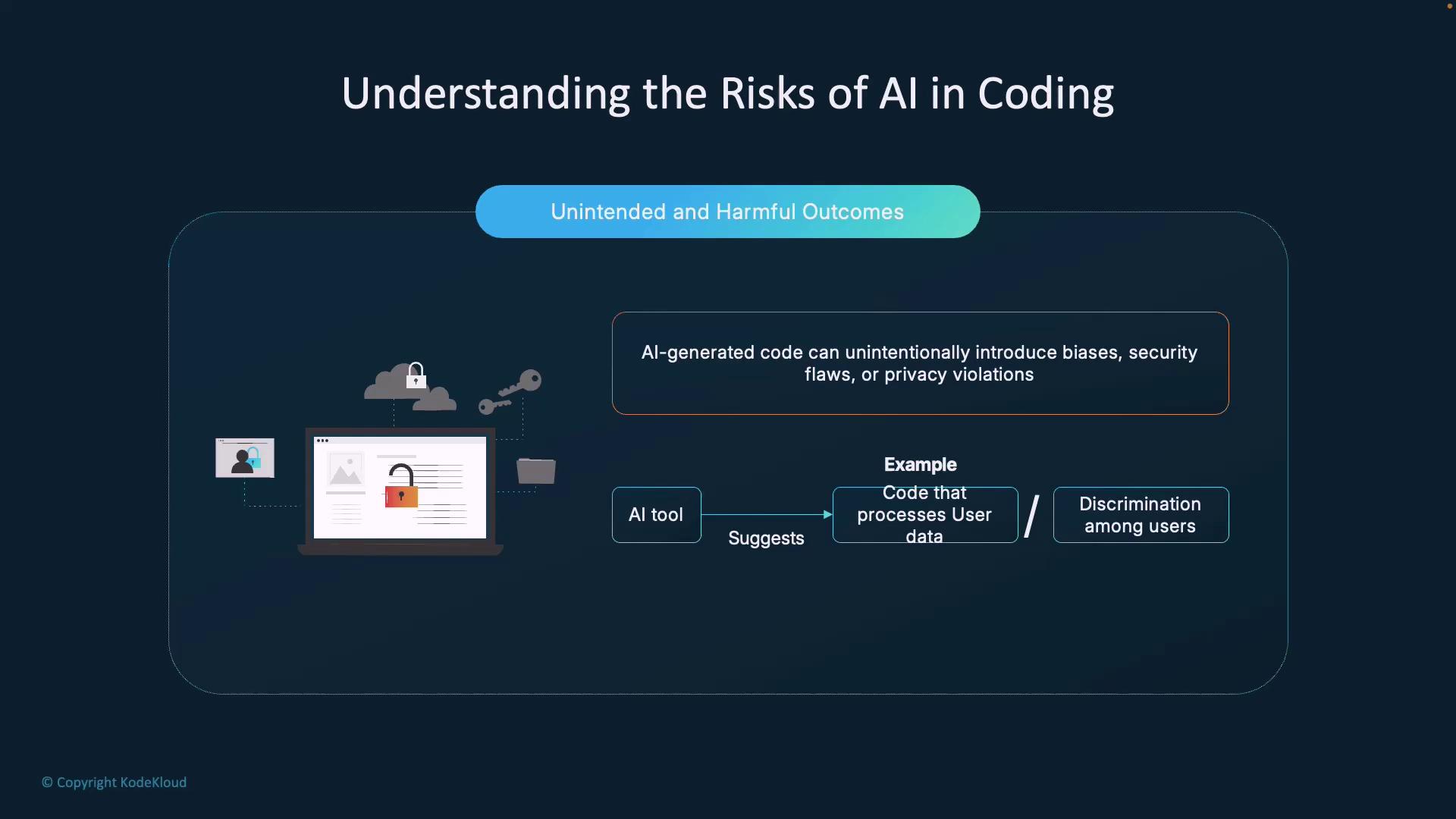

2. Unintended Outcomes

Copilot’s training data spans public repositories, so it can reproduce insecure or biased snippets it has seen there. Imagine it proposing an authentication flow that omits encryption: sensitive user data would be exposed, and in regulated sectors such as finance or healthcare that exposure can breach compliance requirements.
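One way such an omission can show up is credentials stored without any protection. The sketch below shows what a reviewer might expect instead, using only the Python standard library. Note the assumptions: it uses salted key derivation (PBKDF2), which is hashing rather than encryption in the strict sense, the iteration count is an illustrative value, and transport security such as TLS is a separate concern that this example does not cover.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor for illustration; tune to your policy


def hash_password(password, salt=None):
    """Return (salt, derived_key) so the plaintext password is never stored."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt per password
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password, salt, expected_key):
    """Recompute the key with the stored salt and compare in constant time."""
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, expected_key)


salt, stored_key = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored_key))
print(verify_password("wrong guess", salt, stored_key))
```

If a suggested login flow stores or compares raw passwords, that is a signal to stop and review before the code goes any further.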

Mitigation Strategies

Implement a multi-layered governance framework with human oversight and tooling to validate every AI suggestion.

| Strategy | Description |
|---|---|
| Peer Reviews | Flag Copilot PRs and enforce human sign-off. |
| Audit Trails | Tag and log AI contributions for accountability. |
| Automated Testing | Integrate static analysis & unit tests in CI/CD. |

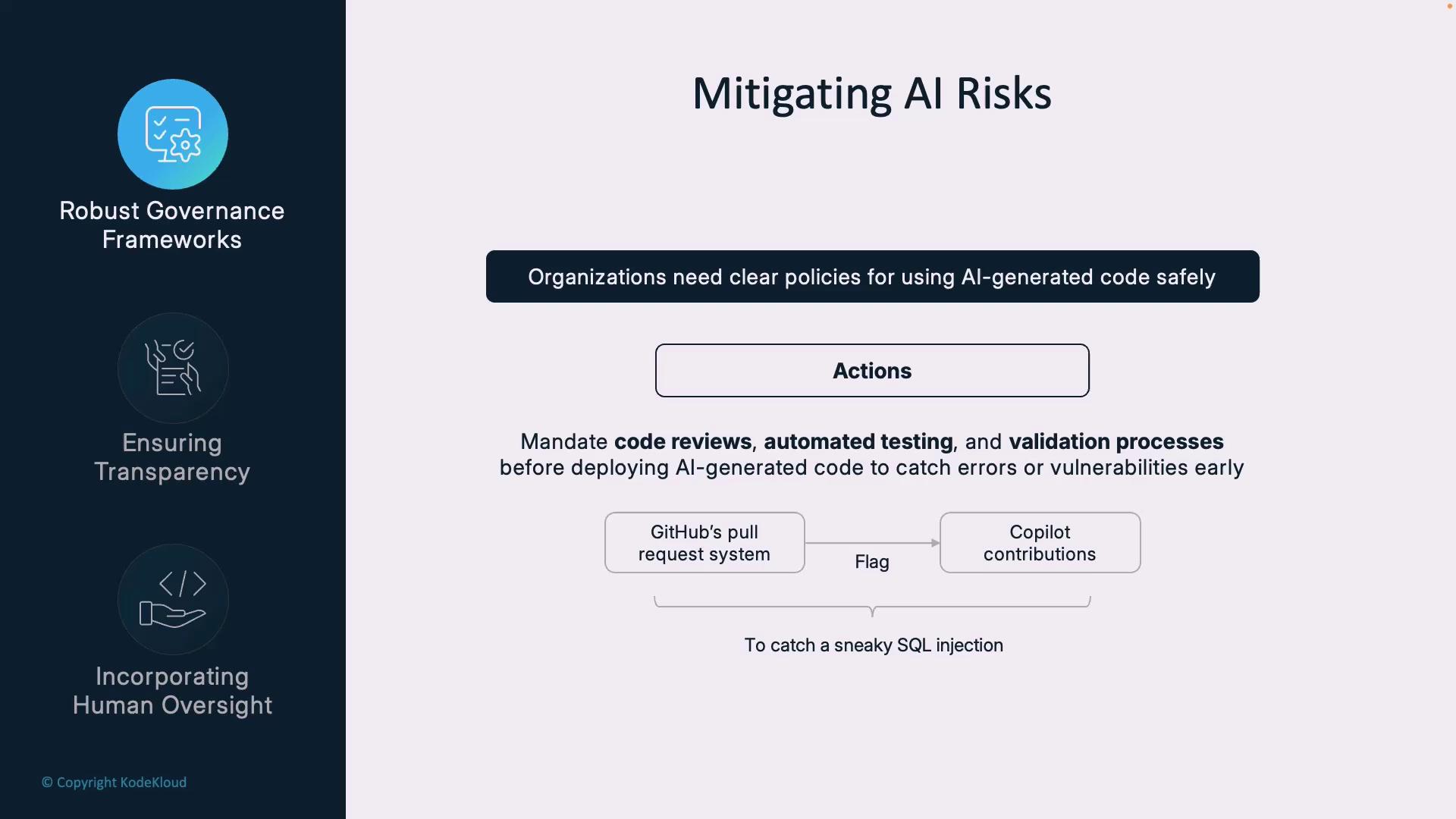

1. Enforce Peer Reviews

Require that all AI-generated code is delivered via pull requests and reviewed by at least one developer. Use branch protection rules to prevent merging without explicit approval.
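Branch protection can be configured in the repository settings or through GitHub's REST API. The sketch below only builds the request URL and JSON body for the branch protection endpoint and sends nothing. The field names reflect our reading of that API and should be checked against the current GitHub documentation; `example-org` and `example-repo` are hypothetical placeholders.

```python
import json


def branch_protection_request(owner, repo, branch, approvals=1):
    """Build the URL and JSON body for a branch protection update.

    Nothing is sent; a real call would be an authenticated PUT request.
    """
    url = f"https://api.github.com/repos/{owner}/{repo}/branches/{branch}/protection"
    body = {
        "required_status_checks": None,   # no CI gate in this sketch
        "enforce_admins": True,           # admins follow the same rules
        "required_pull_request_reviews": {
            "required_approving_review_count": approvals,
            "dismiss_stale_reviews": True,  # new pushes invalidate old approvals
        },
        "restrictions": None,             # no push restrictions in this sketch
    }
    return url, json.dumps(body, indent=2)


url, body = branch_protection_request("example-org", "example-repo", "main")
print(url)
print(body)
```

Requiring at least one approval means a Copilot-assisted change cannot reach the protected branch on the author's say-so alone.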

Warning

Never merge AI-generated code into production without a thorough code review. You risk introducing SQL injections, logic bugs, or outdated dependencies.
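As an illustration of what a reviewer is looking for, the following sketch contrasts a query built by string interpolation with a parameterized one, using Python's built-in `sqlite3` module. The `users` table, its rows, and the function names are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "viewer")],
)


def find_user_unsafe(conn, name):
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the value is passed separately from the SQL text.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"
leaked = find_user_unsafe(conn, malicious)   # returns every row
blocked = find_user_safe(conn, malicious)    # returns no rows
print(len(leaked), len(blocked))
```

Both functions look plausible at a glance, which is exactly why a suggestion like the first one needs a human reviewer or a static-analysis rule to catch it.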

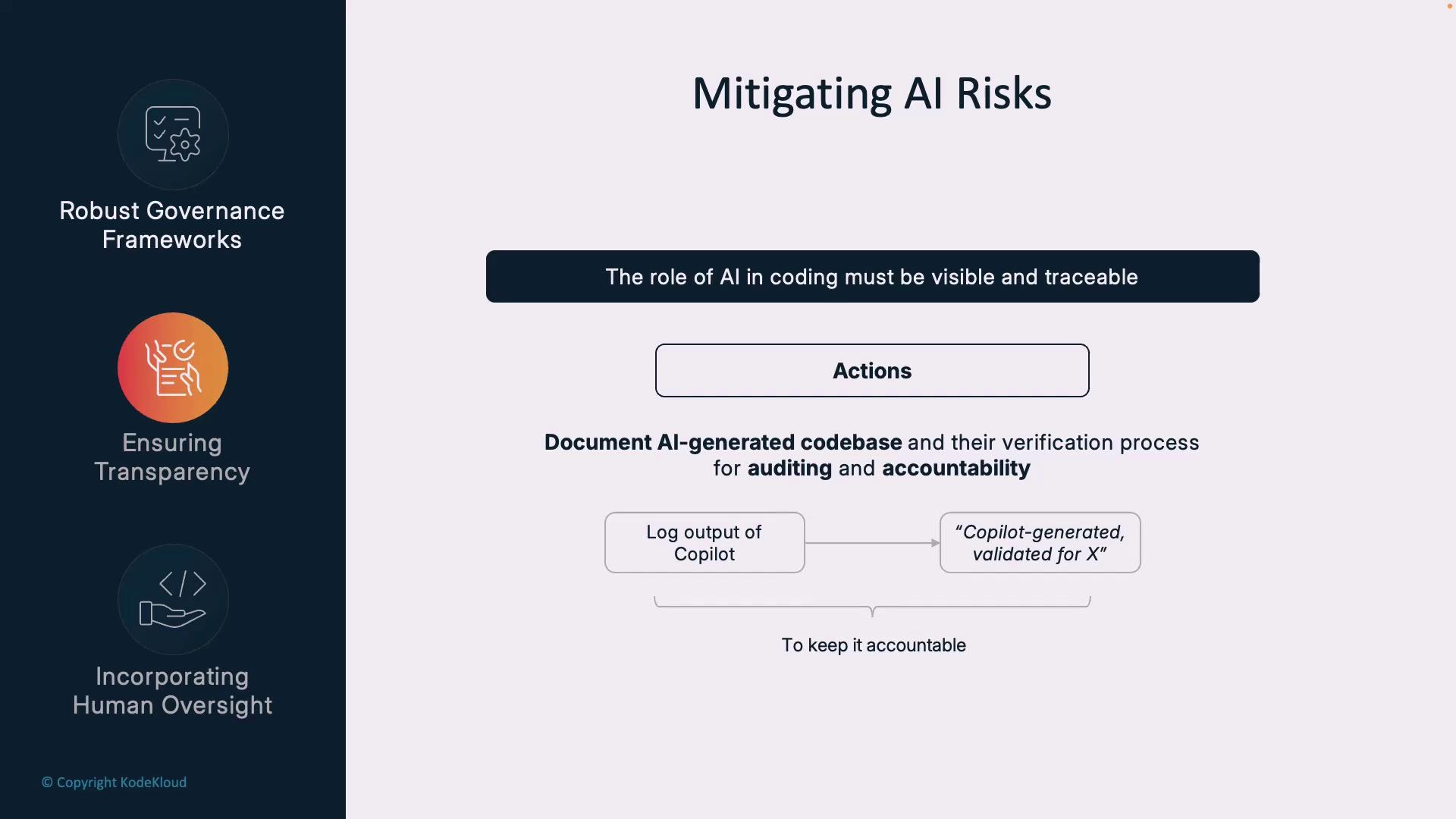

2. Maintain an Audit Trail

Annotate all Copilot snippets with clear comments or commit tags to track their origin:

```python
# [AI-generated] Reviewed for SQL injection vulnerabilities
```

Use commit messages like:

```bash
git commit -m "[AI-generated] Add input sanitization"
```

Note

An audit trail simplifies compliance reporting and future code health checks.
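Once commits carry a consistent tag, reporting on them is straightforward. The sketch below partitions a list of commit subjects by whether they start with the `[AI-generated]` tag. The sample subjects are made up; in practice they could come from something like `git log --pretty=format:%s`, and the function name `split_by_origin` is hypothetical.

```python
import re

# Match the tag at the start of the subject, ignoring capitalization.
AI_TAG = re.compile(r"^\[AI-generated\]", re.IGNORECASE)


def split_by_origin(subjects):
    """Partition commit subjects into AI-tagged and untagged lists."""
    tagged = [s for s in subjects if AI_TAG.match(s)]
    untagged = [s for s in subjects if not AI_TAG.match(s)]
    return tagged, untagged


# Illustrative sample data, not taken from a real repository.
subjects = [
    "[AI-generated] Add input sanitization",
    "Fix typo in README",
    "[AI-Generated] Validate input",
]
tagged, untagged = split_by_origin(subjects)
print(f"{len(tagged)} of {len(subjects)} commits carry the AI tag")
```

Matching case-insensitively is a deliberate choice here, since tags written by hand tend to drift in capitalization.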

3. Apply Human Oversight

Treat Copilot like a junior engineer: its output is a draft that needs checking, not a finished change. Integrate static-analysis tools (e.g., SonarQube) and unit-testing frameworks (e.g., pytest) into your CI/CD pipeline so that security flaws and regressions are caught before merging.
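A small example of what those unit tests might look like: the sketch below defines a hypothetical `sanitize_username` helper, standing in for a Copilot suggestion under review, and three pytest-style test functions. The tests use plain `assert` and `try`/`except` so the file has no third-party imports; with pytest installed you would more typically write `pytest.raises(ValueError)`.

```python
import re

# Assumed policy for the example: 3 to 20 lowercase letters, digits, or underscores.
_USERNAME = re.compile(r"[a-z0-9_]{3,20}")


def sanitize_username(raw):
    """Hypothetical helper standing in for a Copilot suggestion under review."""
    cleaned = raw.strip().lower()
    if not _USERNAME.fullmatch(cleaned):
        raise ValueError(f"invalid username: {raw!r}")
    return cleaned


def test_normalizes_case_and_whitespace():
    assert sanitize_username("  Alice_01 ") == "alice_01"


def test_rejects_sql_metacharacters():
    try:
        sanitize_username("bob'; DROP TABLE users;--")
    except ValueError:
        return
    raise AssertionError("expected ValueError for unsafe input")


def test_rejects_too_short_names():
    try:
        sanitize_username("ab")
    except ValueError:
        return
    raise AssertionError("expected ValueError for a two-character name")
```

Because the function names start with `test_`, running `pytest` on this file would discover and execute them in CI without extra configuration.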

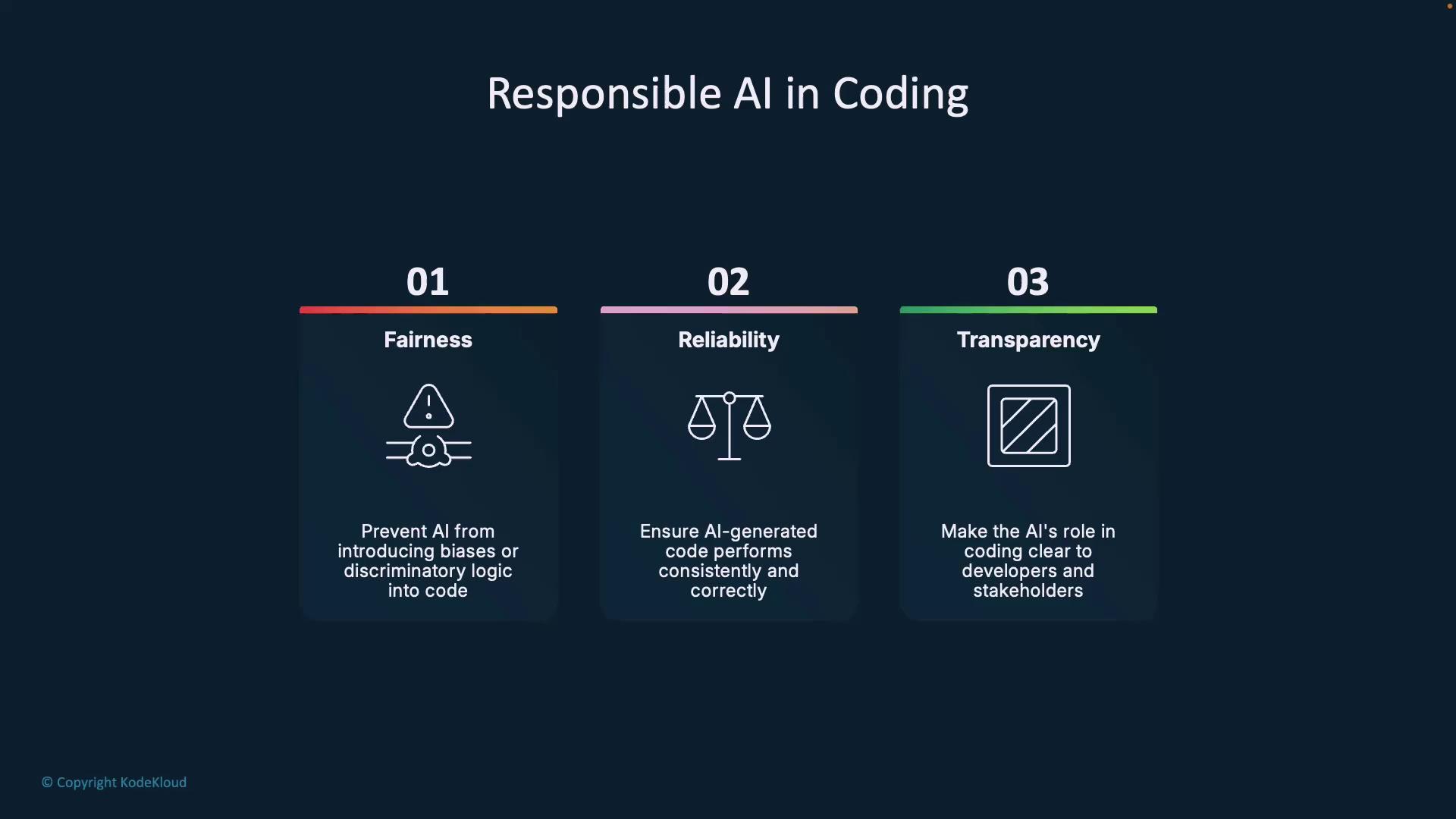

Responsible AI Principles

Embedding ethics and quality controls into AI-assisted development ensures your code remains fair, reliable, and transparent.

| Principle | Implementation |
|---|---|
| Fairness | Run bias detection (e.g., Fairlearn). |
| Reliability | Validate edge cases and performance under load. |
| Transparency | Label AI-generated code and document reviews. |

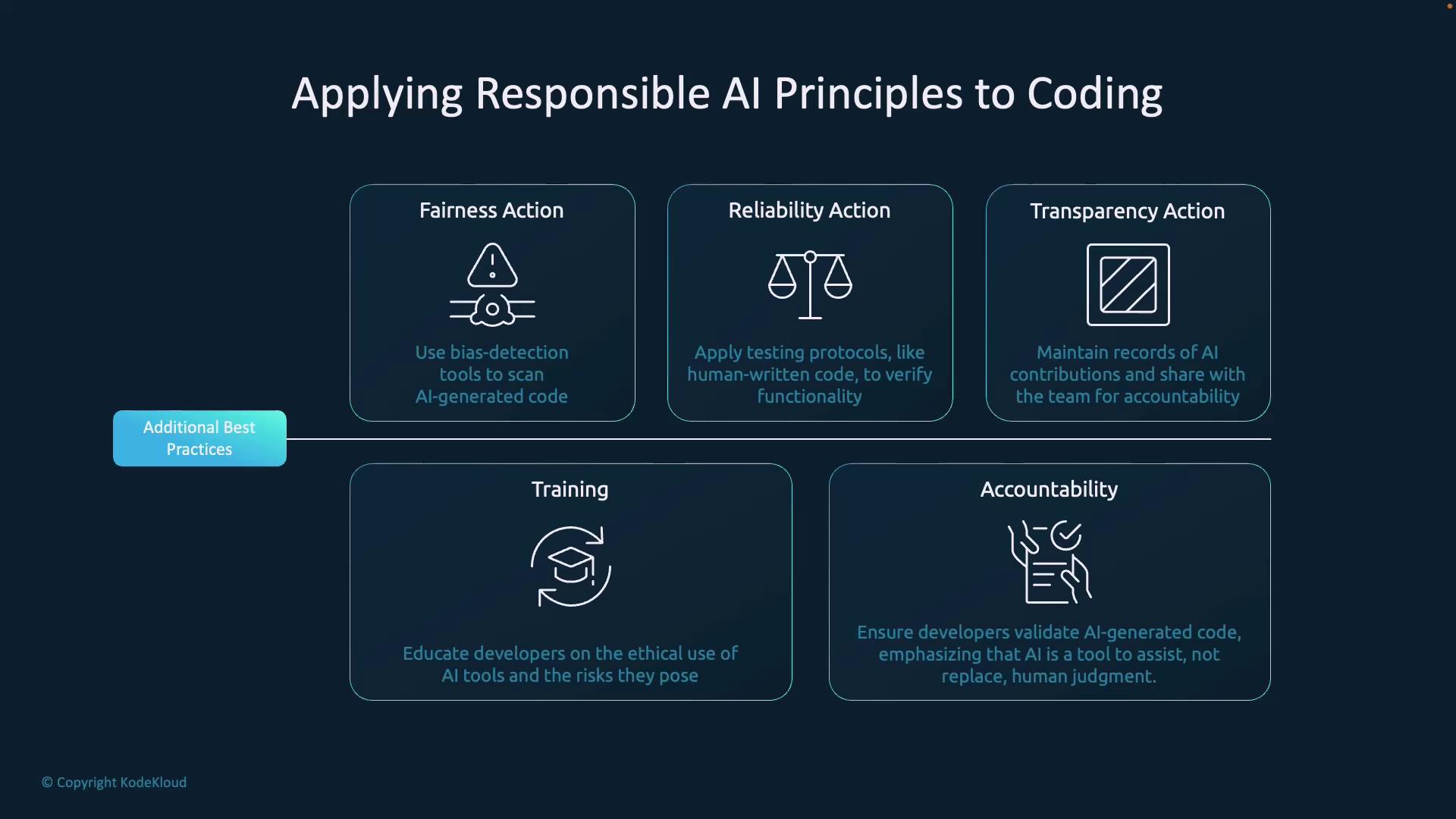
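To show the kind of check a bias-detection step performs, here is a dependency-free sketch that computes the selection rate per group and the gap between groups (often called the demographic parity difference). The data is made up for illustration. Fairlearn offers library versions of these metrics, for example through `MetricFrame`, which would be the more usual choice in a real pipeline.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up illustrative data: 1 = approved, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

A gap near zero means the groups are selected at similar rates; what counts as an acceptable gap is a policy decision for your team, not something the metric decides for you.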

Additional Best Practices

- Use bias-detection tools to scan AI-written logic for discriminatory patterns.

- Apply the same testing rigor to Copilot suggestions as to human-written code.

- Keep shared documentation of AI contributions for team visibility.

- Provide training on ethical AI use and potential pitfalls.

- Always validate AI code in a sandbox before production deployment.

Tools and Implementation Examples

| Tool | Purpose | Example Usage |
|---|---|---|
| Fairlearn | Bias detection | `fairlearn.metrics.MetricFrame(...)` |
| pytest | Unit & integration testing | `pytest tests/` |
| SonarQube | Code quality & security analysis | Integrate via GitHub Actions or Jenkins |
| Git Commit Tags | Trace AI contributions | `git commit -m "[AI-generated] Validate input"` |

Summary

Generative AI like GitHub Copilot offers significant productivity gains but also introduces opacity, bias, and security risks. By enforcing a governance framework, maintaining transparency, and applying rigorous human oversight, you can harness Copilot’s potential and keep your codebase secure, fair, and maintainable.

