In this guide, you’ll learn how to inject your Kubernetes kubeconfig into a GitLab CI pipeline so that kubectl can authenticate and interact with your cluster. This setup is essential for automated deployments, health checks, and infrastructure management within your CI/CD workflows.

Why You Need to Provide a Kubeconfig

When kubectl runs without a valid kubeconfig, it can only display client information and will fail to contact the API server:

    # .gitlab-ci.yml (without KUBECONFIG)
    k8s_dev_deploy:
      stage: dev-deploy
      image: alpine:3.7
      before_script:
        - wget https://storage.googleapis.com/kubernetes-release/release/$(wget -q -O - https://storage.googleapis.com/kubernetes-release/stable.txt)/bin/linux/amd64/kubectl
        - chmod +x kubectl && mv kubectl /usr/bin/kubectl
      script:
        - kubectl version -o yaml

Attempting to run the job yields:

    $ kubectl version -o yaml
    ClientVersion:
      gitVersion: v1.29.1
    ...
    ERROR: Job failed: exit code 1

Without a kubeconfig, kubectl has neither the API server address nor the credentials it needs, so it cannot reach your cluster’s API endpoint.
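The before_script in the job above resolves the kubectl version from stable.txt on every run, so the client version can change between pipelines. A minimal sketch of pinning it instead, assuming the same download URL layout as the job above; the function name `kubectl_download_url` and its default version are illustrative choices, not part of the original setup:

```shell
# Hypothetical helper: build the kubectl download URL for a pinned release.
# Assumes the URL layout used in the job above; the default v1.29.1 is simply
# the version shown in this guide's sample output.
kubectl_download_url() {
  version="${1:-v1.29.1}"
  echo "https://storage.googleapis.com/kubernetes-release/release/${version}/bin/linux/amd64/kubectl"
}
```

In before_script, `wget "$(kubectl_download_url v1.29.1)"` could then stand in for the nested wget call.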

Local vs. CI: Kubernetes Authentication

On Your Local Machine

With a valid ~/.kube/config, you will see both client and server versions:

    $ kubectl version -o yaml
    clientVersion:
      gitVersion: v1.29.1
    serverVersion:
      gitVersion: v1.29.1

Your trimmed kubeconfig might look like this:

    apiVersion: v1
    clusters:
    - name: vke-cluster
      cluster:
        server: https://example-cluster:6443
        certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0t...
    contexts:
    - name: vke-cluster
      context:
        cluster: vke-cluster
        user: admin
    current-context: vke-cluster
    kind: Config
    users:
    - name: admin
      user:
        client-certificate-data: <omitted>
        client-key-data: <omitted>

Verify your context and nodes locally:

    kubectl config get-contexts
    kubectl get nodes

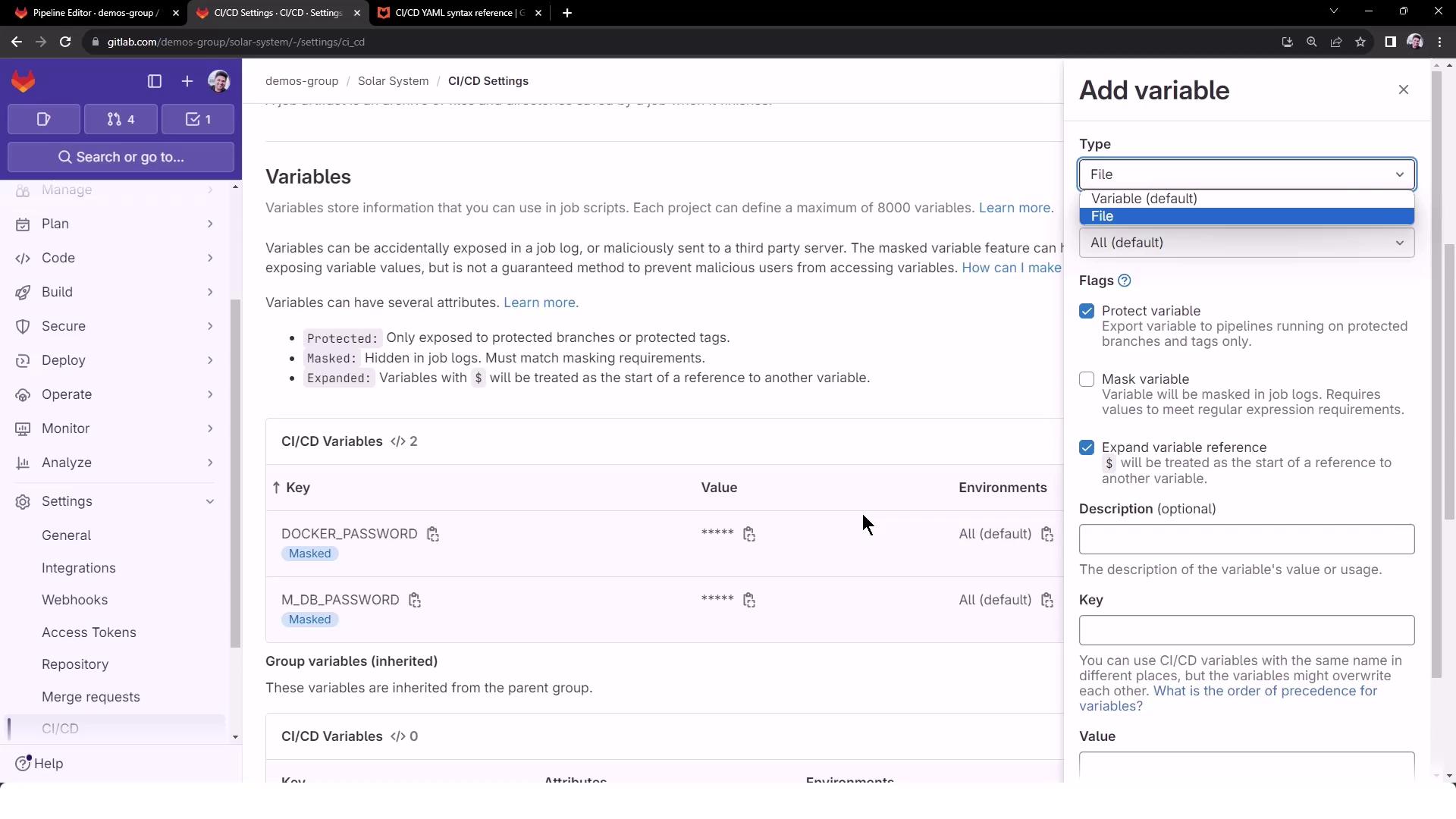

Storing Kubeconfig in GitLab CI/CD

To securely pass your kubeconfig into CI jobs, add it as a File-type variable in your project’s CI/CD Settings:

| Key | Type | Value | Environment Scope |
| --- | --- | --- | --- |
| DEV_KUBE_CONFIG | File | Paste entire kubeconfig content | All (or specific) |

Treat your kubeconfig as sensitive data. File variables are stored encrypted, but avoid exposing them in job logs or unsecured scopes.
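One way to produce the content to paste into the variable is to export a self-contained kubeconfig for the current context. The sketch below assumes kubectl is installed locally and the current context points at the target cluster. The function name `export_ci_kubeconfig` and the default file name are hypothetical. `--minify` keeps only the current context, `--flatten` inlines any referenced certificate files, and `--raw` emits the credential data instead of redacting it.

```shell
# Hypothetical helper: write a self-contained kubeconfig for the current context.
# The default output file name is an assumption; pass another path as $1.
export_ci_kubeconfig() {
  out="${1:-dev-kubeconfig.yaml}"
  # Set a restrictive umask in a subshell so a newly created file is
  # readable by the owner only; the file will contain credentials.
  ( umask 077 && kubectl config view --minify --flatten --raw > "$out" )
}
```

After pasting the file's content into the variable, delete the local copy so the credentials are not left on disk.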

Updating the CI Job to Use Kubeconfig

Modify your .gitlab-ci.yml job to export the KUBECONFIG environment variable from the File variable before invoking any kubectl commands:

    # .gitlab-ci.yml
    k8s_dev_deploy:
      stage: dev-deploy
      image: alpine:3.7
      before_script:
        - wget https://storage.googleapis.com/kubernetes-release/release/$(wget -q -O - https://storage.googleapis.com/kubernetes-release/stable.txt)/bin/linux/amd64/kubectl
        - chmod +x kubectl && mv kubectl /usr/bin/kubectl
      script:
        - export KUBECONFIG=$DEV_KUBE_CONFIG
        - kubectl version -o yaml
        - kubectl config get-contexts
        - kubectl get nodes
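The plain export in the job above succeeds even when DEV_KUBE_CONFIG is empty, for example when the variable is missing from the job's scope or was not created with the File type, and the job then fails later with a less obvious kubectl error. A minimal sketch of a guard that fails early, written for POSIX sh so it runs in the Alpine image; the function name `use_kubeconfig` is hypothetical:

```shell
# Hypothetical guard: export KUBECONFIG only if $1 is a readable, non-empty file.
# The argument is never echoed, because a variable that was not created with
# the File type would hold the kubeconfig content itself rather than a path.
use_kubeconfig() {
  path="${1:-}"
  if [ -z "$path" ]; then
    echo "kubeconfig variable is empty or unset" >&2
    return 1
  fi
  if [ ! -r "$path" ] || [ ! -s "$path" ]; then
    echo "kubeconfig value is not a readable, non-empty file" >&2
    return 1
  fi
  export KUBECONFIG="$path"
}
```

With the function defined earlier in the same job, `use_kubeconfig "$DEV_KUBE_CONFIG"` could replace the export line.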

Commit your changes and trigger the pipeline. The k8s_dev_deploy job should now complete successfully.

Verifying the CI Job Output

With the kubeconfig in place, your CI job will display both client and server details and list the cluster nodes:

    $ kubectl version -o yaml
    ClientVersion:
      gitVersion: v1.29.1
    ServerVersion:
      gitVersion: v1.29.1
    $ kubectl config get-contexts
    CURRENT   NAME          CLUSTER       AUTHINFO
    *         vke-cluster   vke-cluster   admin
    $ kubectl get nodes
    NAME            STATUS   ROLES    AGE   VERSION
    gitlab-node-1   Ready    <none>   5h    v1.29.1
    gitlab-node-2   Ready    <none>   5h    v1.29.1
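Reading the node list by eye works for a first run. For the automated health checks mentioned at the start of this guide, the job can instead fail when a node is not Ready. A minimal sketch, assuming the column layout shown above; the function name `all_nodes_ready` is hypothetical:

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin.
# Succeeds only if at least one node is listed and every STATUS column is
# exactly "Ready"; a cordoned node ("Ready,SchedulingDisabled") counts as not ready.
all_nodes_ready() {
  awk '
    { total++ }
    $2 != "Ready" { bad++; print "not ready: " $1 " (" $2 ")" }
    END { exit (total > 0 && bad == 0) ? 0 : 1 }
  '
}
```

In the job script this could run as `kubectl get nodes --no-headers | all_nodes_ready`, so a non-zero exit status fails the job.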
