Infrastructure security extends beyond individual containers or workloads. It requires a holistic approach that includes network policies, host configuration, and secure management of sensitive data.

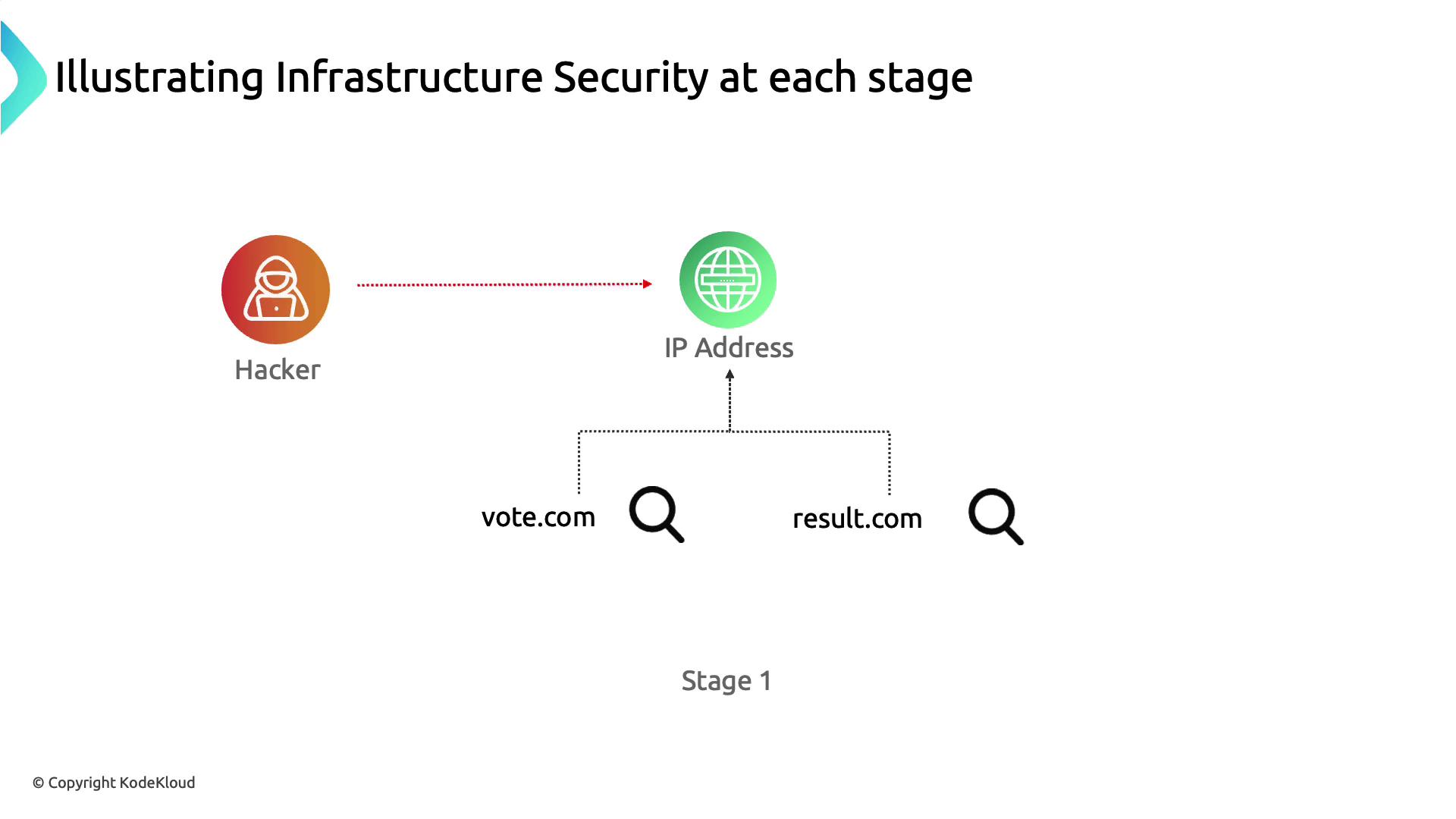

Stage 1: Network Segmentation & API Exposure

In our attack scenario, the adversary discovers that vote.com and drizzle.com resolve to the same IP address, revealing a lack of network segmentation that allows one compromised application to threaten the entire host.

| Vulnerability | Risk | Mitigation |
|---|---|---|
| Shared IP hosting multiple domains | Compromise of one app exposes all apps | Use VPCs/Subnets or separate servers |
| Public Kubernetes API endpoint | Unrestricted API discovery and access | Remove public IP, enforce VPN or private endpoints |

- Isolate applications in distinct networks or VPC subnets.

- Remove or restrict the Kubernetes API server’s public IP.

- Implement firewall rules and network access control lists (ACLs).
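The shared-IP finding from this stage can be reproduced defensively. The following is a minimal sketch, not a full audit tool: it groups hostnames by the IPv4 address they resolve to and reports addresses that serve more than one name. The function names and the injectable `resolve` parameter are assumptions made for illustration.

```python
import socket
from collections import defaultdict
from typing import Callable, Dict, Iterable, List


def group_hosts_by_ip(
    hostnames: Iterable[str],
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> Dict[str, List[str]]:
    """Map each resolved IPv4 address to the hostnames that point at it."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name in hostnames:
        try:
            groups[resolve(name)].append(name)
        except OSError:
            # Names that fail to resolve are skipped, not reported as findings.
            continue
    return dict(groups)


def shared_ips(groups: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Keep only the addresses that serve more than one hostname."""
    return {ip: names for ip, names in groups.items() if len(names) > 1}
```

Calling `shared_ips(group_hosts_by_ip(["vote.com", "drizzle.com"]))` against your own domains would flag any address that hosts several applications. A shared address is only a hint (load balancers and CDNs also share IPs), so treat a hit as a prompt to review segmentation rather than as proof of a problem.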

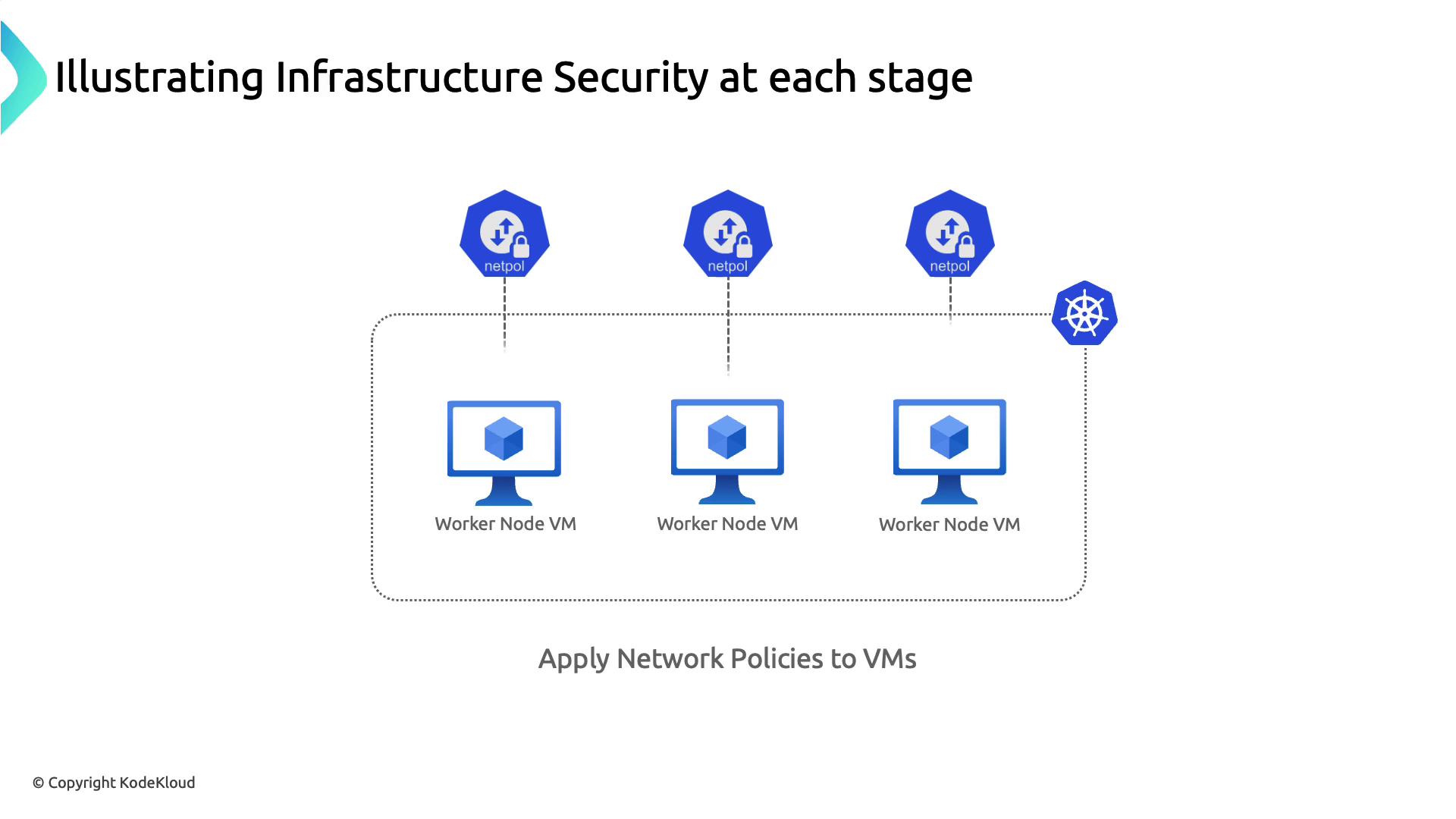

Stage 2: Securing Docker Daemon Access

After identifying the host, the attacker scans for open ports and finds Docker’s default remote API port (2375) exposed without TLS.

Apply network policies or cloud firewall rules at the host level to restrict Docker daemon access to trusted IPs or management subnets.

- Use host-based firewalls (e.g., iptables, ufw) to block port 2375.

- Enable TLS authentication on the Docker daemon (`--tlsverify`).

- Apply Kubernetes NetworkPolicies to restrict Pod-to-Pod and Pod-to-Host traffic.
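To confirm that the firewall rules above actually took effect, you can test from an untrusted network whether the port still accepts connections. The following is a minimal sketch using only the standard library; the function name and the default timeout are illustrative assumptions, and it checks TCP reachability only, not whether the Docker API answers.

```python
import socket

# Port 2375 is the conventional unencrypted Docker remote API port.
DOCKER_PLAINTEXT_PORT = 2375


def is_port_open(host: str, port: int = DOCKER_PLAINTEXT_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Run a check such as `is_port_open("your-node-address")` only against hosts you are authorized to test. A `False` result from outside the management subnet and a `True` result from inside it is the outcome the rules above aim for.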

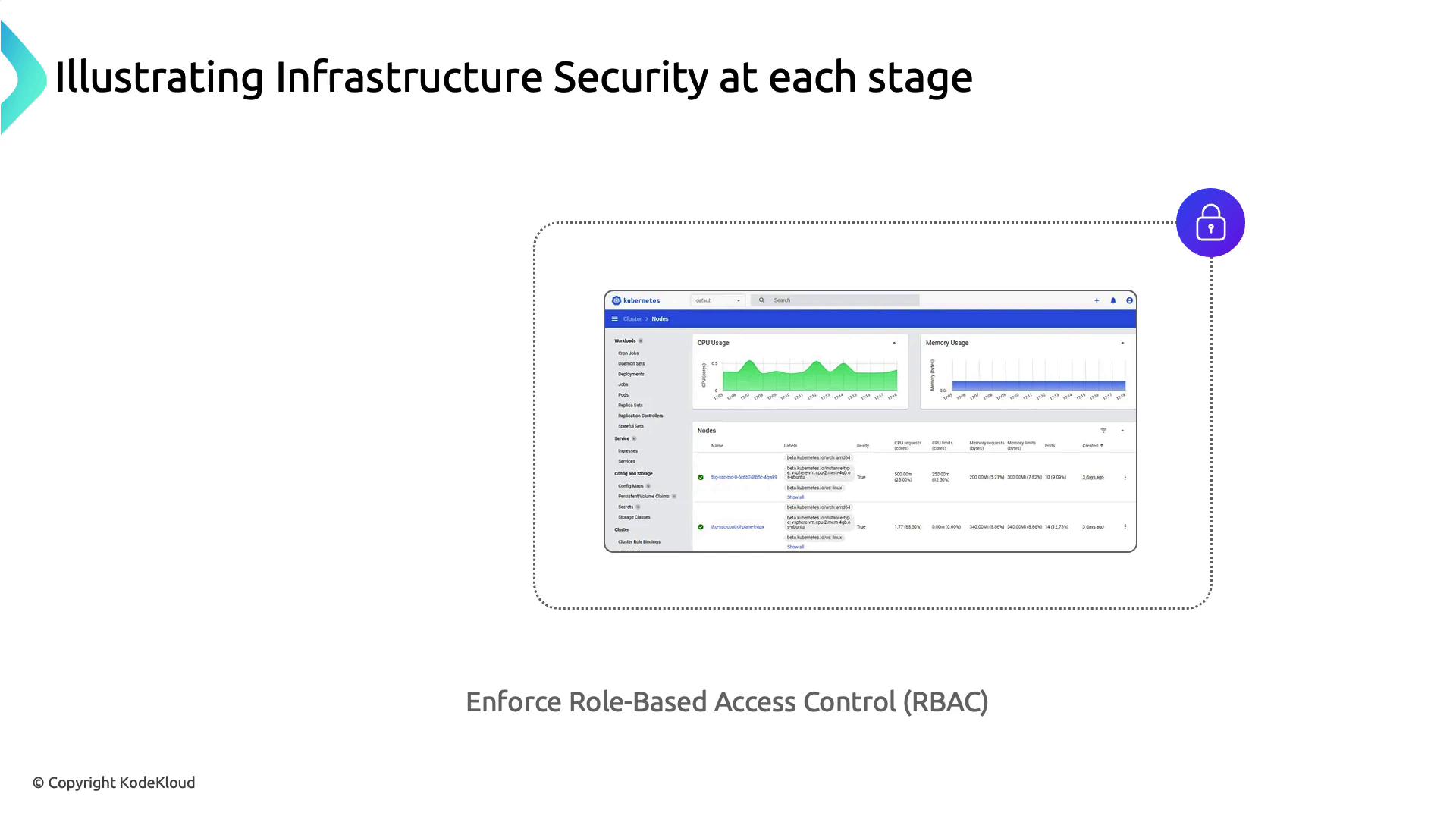

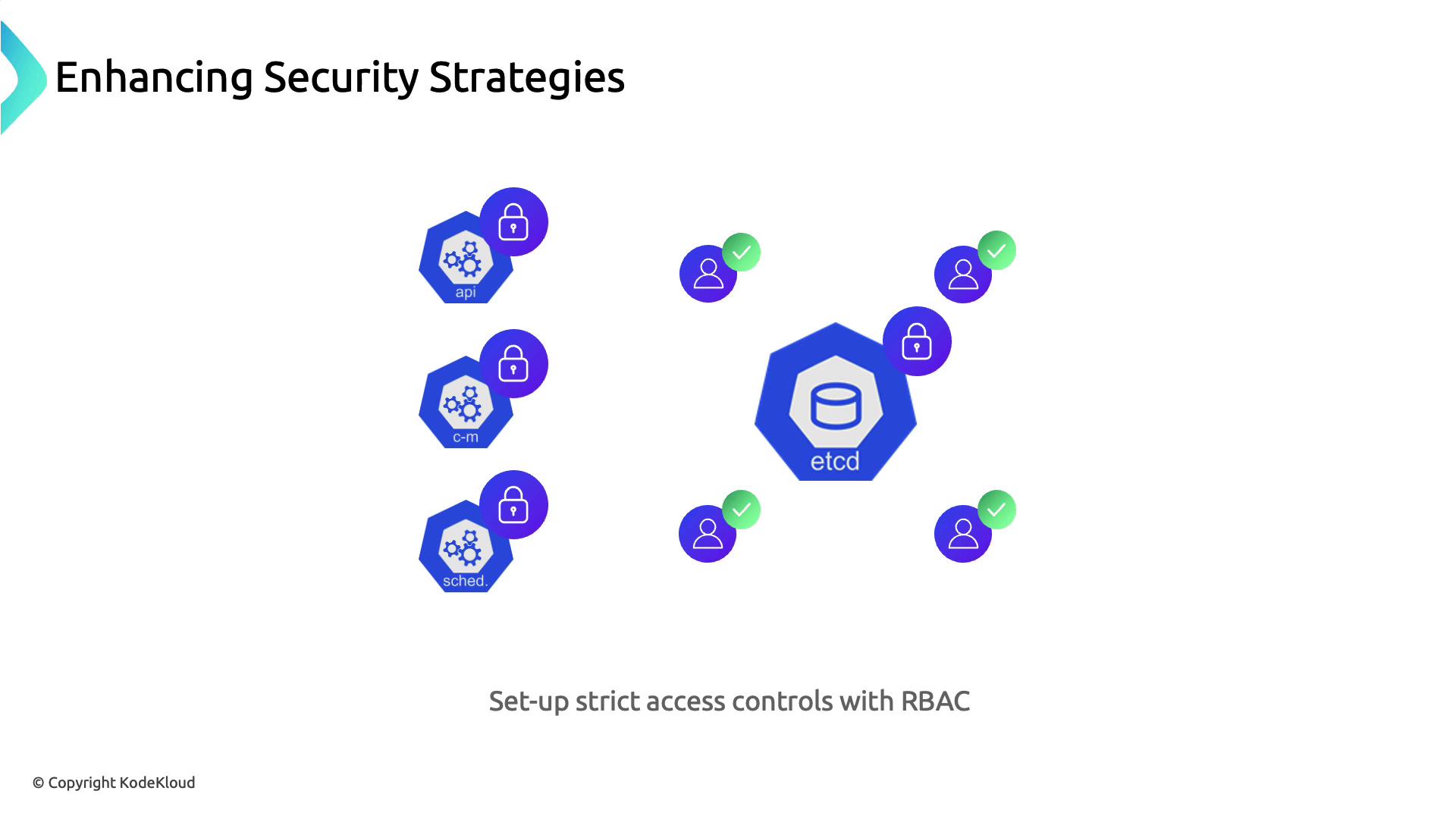

Stage 3: Least Privilege & RBAC Enforcement

Leveraging a vulnerable privileged container (RDKALV), the attacker gains root on the node. A publicly accessible Kubernetes Dashboard then provides full cluster visibility and control.

- Enforce the principle of least privilege: run containers with non-root users and minimal capabilities.

- Secure the Kubernetes Dashboard with RBAC and authentication mechanisms.

- Rotate service account tokens and limit scope using RBAC rules.
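The least-privilege guidance above maps onto a handful of `securityContext` fields in a Pod spec. As a rough sketch, the check below walks a Pod manifest that has already been loaded into a Python dictionary and lists containers that are privileged, may run as root, allow privilege escalation, or keep their default capabilities. The function name and message wording are assumptions, and init containers are ignored for brevity.

```python
from typing import Any, Dict, List


def find_privilege_issues(pod: Dict[str, Any]) -> List[str]:
    """List least-privilege violations for each container in a Pod manifest."""
    spec = pod.get("spec") or {}
    pod_ctx = spec.get("securityContext") or {}
    issues: List[str] = []
    for container in spec.get("containers") or []:
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext") or {}
        if ctx.get("privileged") is True:
            issues.append(f"{name}: privileged mode enabled")
        # runAsNonRoot may be set per container or inherited from the Pod.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            issues.append(f"{name}: runAsNonRoot not enforced")
        # An unset allowPrivilegeEscalation is treated as allowed here.
        if ctx.get("allowPrivilegeEscalation", True):
            issues.append(f"{name}: privilege escalation allowed")
        dropped = (ctx.get("capabilities") or {}).get("drop") or []
        if "ALL" not in dropped:
            issues.append(f"{name}: capabilities not dropped")
    return issues
```

A container that sets `runAsNonRoot: true`, `allowPrivilegeEscalation: false`, and `capabilities.drop: ["ALL"]` yields an empty list. In a real cluster, an admission mechanism such as Pod Security Admission is the more robust place to enforce these rules; a script like this is better suited to reviewing manifests before they are applied.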

Stage 4: Secure Management of Secrets & etcd

The attacker extracts database credentials from plain-text environment variables in a compromised Pod. Storing sensitive data securely is critical.

- Store sensitive values in Kubernetes Secrets rather than hard-coding them in Pod specs. Secrets are only base64-encoded by default, not encrypted.

- Enable an encryption provider on the API server so that Secret data is encrypted before it is written to etcd, following the Kubernetes encryption-at-rest documentation.

- Enforce TLS authentication for etcd client-server and peer communication.

- Apply tight RBAC rules to etcd access.
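It helps to see why encryption at rest and access control matter even when values live in a Secret. Values in a Secret manifest's `data` field are base64-encoded, which is an encoding and not encryption, so anyone who can read the object or the underlying etcd data can recover them. The following is a minimal sketch; the helper names and the `DB_PASSWORD` key are hypothetical.

```python
import base64
from typing import Dict


def encode_secret_data(plain: Dict[str, str]) -> Dict[str, str]:
    """Encode plain-text values the way a Secret manifest's `data` field expects."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in plain.items()
    }


def decode_secret_data(data: Dict[str, str]) -> Dict[str, str]:
    """Recover the plain-text values from a Secret manifest's `data` field."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in data.items()
    }


# Hypothetical credential, used only to show the round trip.
encoded = encode_secret_data({"DB_PASSWORD": "s3cr3t"})
decoded = decode_secret_data(encoded)  # the original value, no key required
```

Because decoding needs no key, the protection has to come from the controls listed above: encryption at rest, TLS on etcd traffic, and RBAC that limits who can read Secret objects.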

In managed Kubernetes services, direct etcd access is usually abstracted. Review your provider’s security controls and backup strategies to ensure data durability and confidentiality.

Summary

- Segment critical workloads into separate networks or servers.

- Block or secure Docker daemon ports with TLS and host-based firewalls.

- Apply least-privilege principles and lock down the Kubernetes Dashboard with RBAC.

- Store all secrets in Kubernetes Secrets and encrypt etcd data at rest.

- Use TLS for etcd communication and enforce strict RBAC policies.