Access and IAM Integration in EKS

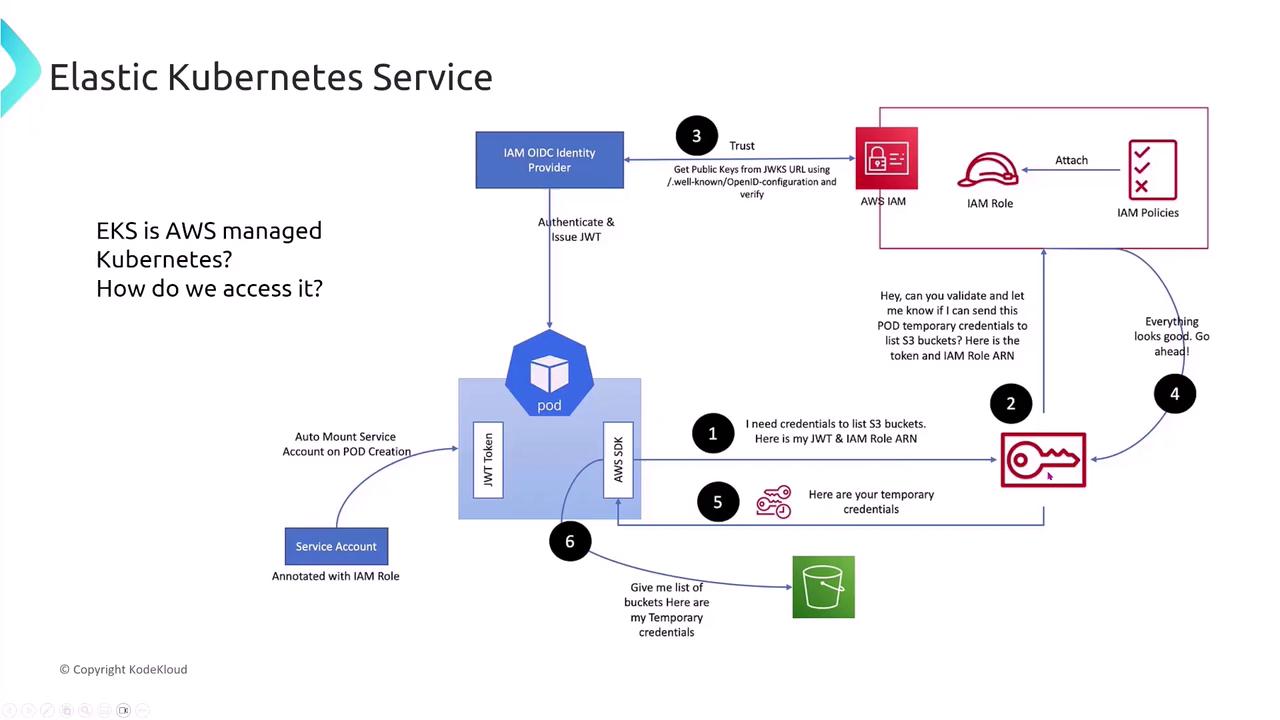

Establishing a trust relationship between your Kubernetes cluster and AWS IAM is a central challenge when securing access in EKS. Historically, pods acquired permissions via service accounts annotated with IAM roles. Today, newer options such as EKS Pod Identity let you assign an IAM role to a pod more directly, reducing the need to manage separate service roles for each containerized application. Although this new approach may eventually appear on certification exams, we focus on the established method for now.

For secure interactions with AWS, the entire cluster must trust IAM. To achieve this, you create a trust policy between the cluster and AWS IAM and assign specific permissions to the cluster (often with administrative privileges for infrastructure tasks) as well as to individual pods (with the minimum required privileges).

When a pod needs to access AWS services (for example, S3), it presents a JSON Web Token (JWT) along with its role information. The Security Token Service (STS), which works hand in hand with IAM, validates the pod’s token before issuing temporary credentials. In short, the pod exchanges its JWT and role information for short-lived credentials from STS.
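To make the token exchange more concrete, the following minimal sketch decodes the claims carried in a JWT payload. The issuer URL, namespace, and service account name are hypothetical placeholders, and the token is an unsigned stand-in built locally; a real token is signed by the cluster and must be verified by STS rather than merely decoded.

```python
import base64
import json


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWT segments do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def decode_jwt_claims(token: str) -> dict:
    """Return the (unverified) claims from the payload segment of a JWT."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped padding
    return json.loads(base64.urlsafe_b64decode(payload))


# Hypothetical claims, shaped like a service account token aimed at STS.
sample_claims = {
    "iss": "https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE",
    "sub": "system:serviceaccount:default:s3-reader",
    "aud": ["sts.amazonaws.com"],
}

# Unsigned stand-in token with the usual header.payload.signature layout.
sample_token = ".".join([
    b64url(json.dumps({"alg": "none"}).encode()),
    b64url(json.dumps(sample_claims).encode()),
    "",
])

claims = decode_jwt_claims(sample_token)
print(claims["iss"])
print(claims["sub"])
```

The `sub` claim identifies the namespace and service account, which is what an IAM trust policy can match on before STS issues credentials.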

OIDC Integration with IAM

Global retail companies deploying applications on EKS can enhance security and simplify role management by integrating an OpenID Connect (OIDC) identity provider with AWS IAM. By registering your Kubernetes cluster as an OIDC identity provider in IAM, you can associate specific IAM roles with service accounts, which in turn are attached to pods and other Kubernetes resources. The typical process involves:

- Enabling OIDC for the EKS cluster.

- Providing the cluster’s OIDC issuer URL during IAM OIDC provider creation, which allows automatic discovery of necessary details.
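Once the provider is registered, a role can trust tokens issued for one specific service account. The sketch below builds such a trust policy as a plain dictionary. The account ID, issuer URL, namespace, and service account name are hypothetical, and the helper name `build_irsa_trust_policy` is illustrative rather than part of any AWS SDK.

```python
import json


def build_irsa_trust_policy(account_id, oidc_issuer_url, namespace, service_account):
    """Build a trust policy that lets one service account assume a role."""
    # IAM identifies the provider by the issuer URL without its scheme.
    provider = oidc_issuer_url
    if provider.startswith("https://"):
        provider = provider[len("https://"):]
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {
                "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    # Only this namespace/service account pair may assume the role.
                    f"{provider}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                    f"{provider}:aud": "sts.amazonaws.com",
                }
            },
        }],
    }


trust_policy = build_irsa_trust_policy(
    account_id="111122223333",  # placeholder account
    oidc_issuer_url="https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE",
    namespace="default",
    service_account="s3-reader",
)
print(json.dumps(trust_policy, indent=2))
```

Pinning both `sub` and `aud` keeps the role from being assumed by other service accounts in the same cluster.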

IAM Integration and Role Assignments in EKS

Proper IAM integration involves several distinct role assignments that work collectively to secure your EKS environment. Below is a summary of the key roles and their responsibilities:

| Role Type | Description | Example Use Case |
|---|---|---|
| Cluster IAM Roles | Grant the control plane permissions for managing AWS resources like EC2 instances and ELBs. | Enabling the control plane to manage auto-scaling and network tasks. |
| Node IAM Roles | Allow worker nodes (typically EC2 instances) to register with the control plane and access services like ECR and CloudWatch. | Pulling container images and logging activities. |
| Pod Execution Roles | Enable individual pods to assume IAM roles independently from the worker node’s role. | Providing limited AWS access for specific containerized applications. |
| IAM Connector Role | Bridge Kubernetes RBAC and AWS IAM to allow management of the cluster through the AWS Management Console. | Integrated visual management from the AWS Console. |

Cluster IAM Roles

Before creating an EKS cluster, create an IAM role that includes the AmazonEKSClusterPolicy managed policy and assign it to the cluster. This role enables the control plane (e.g., cloud controllers) to interact with AWS resources such as EC2 instances and Elastic Load Balancers. For instance, if new EC2 instances are launched to host additional containers, the cluster role must have the appropriate permissions to manage these resources.

Node IAM Roles

Worker nodes (typically EC2 instances in your EKS cluster) require an IAM role for registration with the control plane as well as to access other AWS services—such as ECR for pulling container images and CloudWatch for logging. These roles should be precisely scoped to allow only the required permissions.
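The cluster and node roles differ mainly in which AWS service is allowed to assume them and which managed policies are attached. The sketch below captures that difference as data. The managed policy names are the ones commonly attached to these roles as far as I am aware, so check them against current AWS documentation; CloudWatch permissions would be added separately according to your logging setup.

```python
import json


def service_trust_policy(service_principal: str) -> dict:
    """Trust policy that lets a single AWS service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": service_principal},
            "Action": "sts:AssumeRole",
        }],
    }


# Role assumed by the EKS control plane.
CLUSTER_ROLE = {
    "trust": service_trust_policy("eks.amazonaws.com"),
    "managed_policies": ["arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"],
}

# Role assumed by EC2 worker nodes (assumed policy set; scope to your needs).
NODE_ROLE = {
    "trust": service_trust_policy("ec2.amazonaws.com"),
    "managed_policies": [
        "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy",
        "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly",
        "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy",
    ],
}

print(json.dumps(NODE_ROLE["trust"], indent=2))
```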

Pod Execution Roles

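The pod execution role allows individual pods to assume an IAM role and access AWS services independently of the worker node’s role. Note that AWS documentation calls this per-pod mechanism IAM Roles for Service Accounts (IRSA) and uses the name "pod execution role" for the role that Fargate needs in order to run pods. Even though newer features such as EKS Pod Identity are changing the landscape, understanding how pods assume roles remains important.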
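As a rough illustration of the service account approach, the sketch below pairs a least-privilege permissions policy with a ServiceAccount manifest that points at the role. The bucket name, role ARN, and helper names are hypothetical; the `eks.amazonaws.com/role-arn` annotation is the one used by the service account method.

```python
import json


def s3_read_only_policy(bucket: str) -> dict:
    """Permissions policy limited to reading a single bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:ListBucket",
             "Resource": f"arn:aws:s3:::{bucket}"},
            {"Effect": "Allow", "Action": "s3:GetObject",
             "Resource": f"arn:aws:s3:::{bucket}/*"},
        ],
    }


def annotated_service_account(name: str, namespace: str, role_arn: str) -> dict:
    """ServiceAccount manifest that links pods to an IAM role."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {"eks.amazonaws.com/role-arn": role_arn},
        },
    }


policy = s3_read_only_policy("example-reports-bucket")  # hypothetical bucket
service_account = annotated_service_account(
    "s3-reader", "default",
    "arn:aws:iam::111122223333:role/s3-reader-role",  # hypothetical role
)
print(json.dumps(service_account, indent=2))
```

Pods that set `serviceAccountName: s3-reader` would then receive credentials for that role only, not the broader node role.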

IAM Connector Role

The IAM connector role integrates the Kubernetes RBAC system with AWS IAM, allowing the Kubernetes cluster to be managed and monitored via the AWS Management Console. This role is essential for achieving seamless visual management.

These distinct roles—cluster, node, pod execution, and connector—work together to ensure your Kubernetes workloads have necessary permissions while maintaining robust security boundaries.

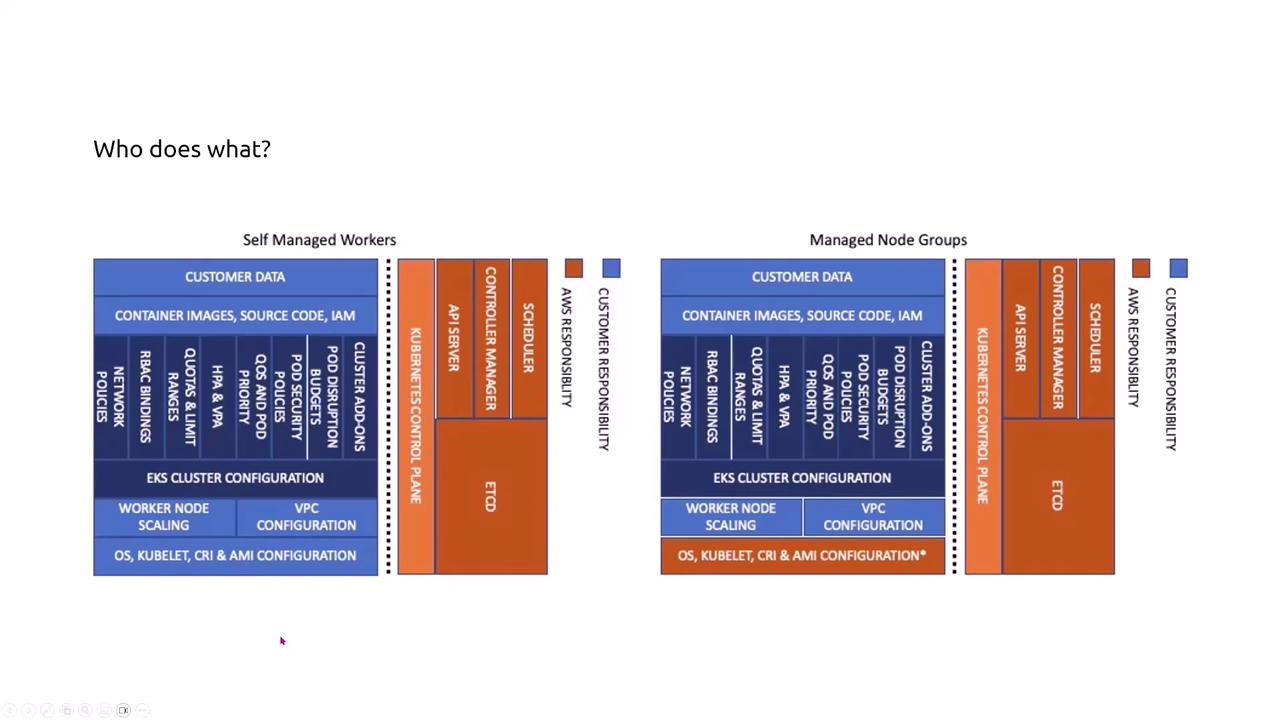

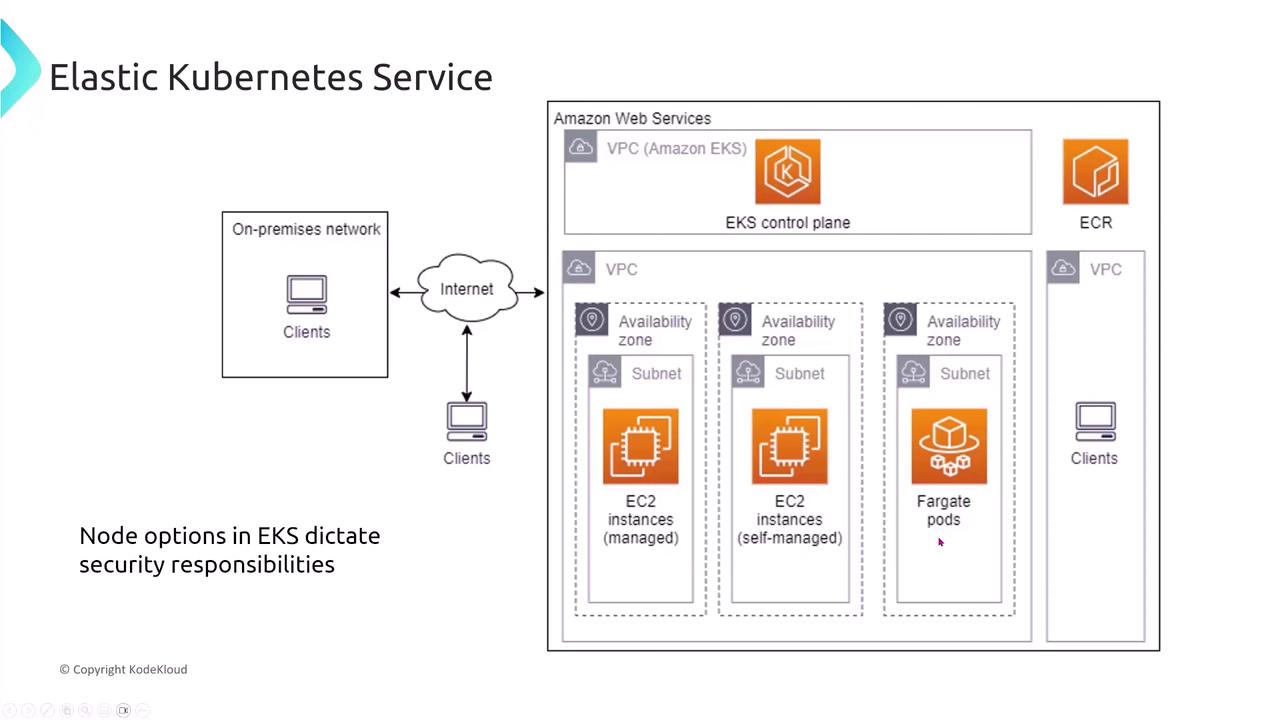

Node Types and Security Responsibilities

When deploying EKS, you must choose the appropriate type of worker nodes depending on your administrative preferences and security requirements:

- Self-Managed Nodes: You have full control over compliance, patching, and security updates, but this control increases administrative overhead.

- Managed Node Groups: AWS supplies patched AMIs and automates node provisioning, rolling updates, and auto-scaling integration, reducing management effort. You still initiate the node group update that rolls out a new AMI.

- Fargate Pods: AWS manages the underlying operating system entirely, further reducing your security responsibilities.

Logging, Monitoring, and Data Protection

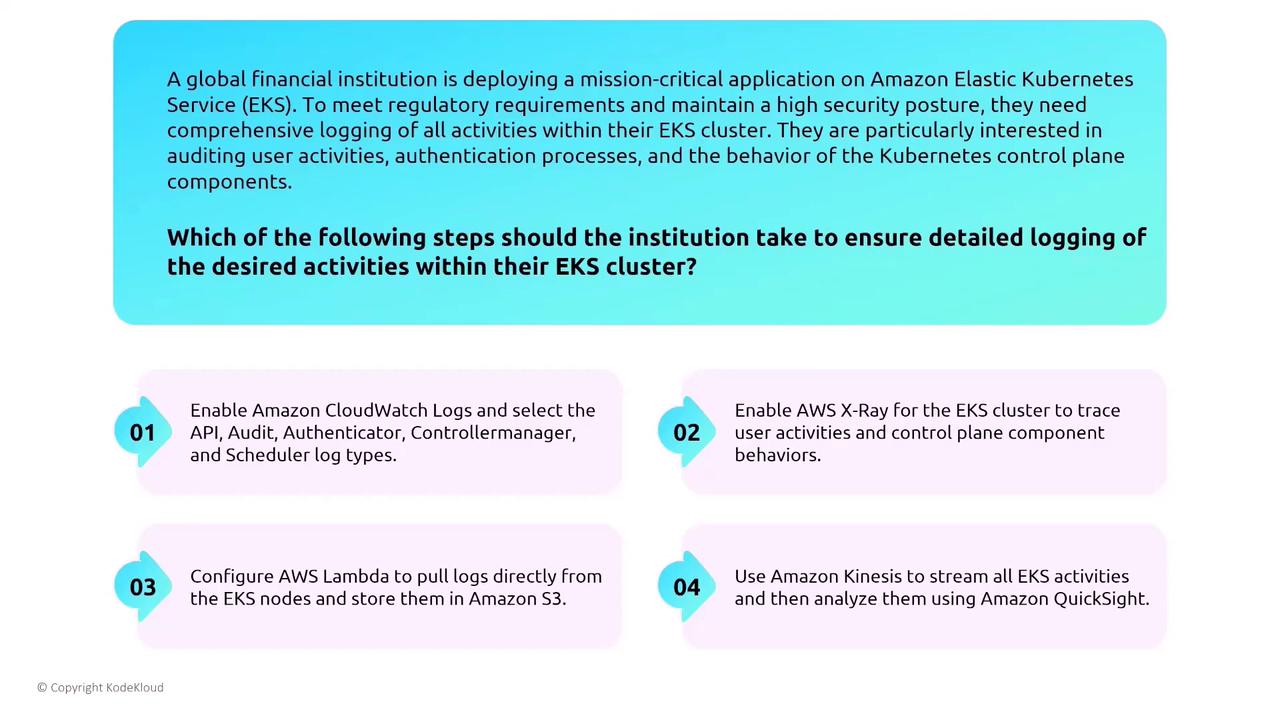

Logging and Auditing

Regulatory compliance and security best practices require detailed logging of all control plane activities. For EKS environments, consider the following steps:

- Enable logging for the API server, controller manager, and scheduler.

- Configure CloudWatch Logs to capture these events, simplifying auditing and monitoring.
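The steps above can be sketched as a small helper that builds a control plane logging configuration. The resulting dictionary follows the `clusterLogging` shape accepted by the EKS API (for example, the `logging` argument of boto3's `update_cluster_config`); the helper name is illustrative, and the `audit` and `authenticator` types are listed because they are also available log types, not because the steps above mention them.

```python
VALID_LOG_TYPES = {"api", "audit", "authenticator", "controllerManager", "scheduler"}


def build_cluster_logging(enabled_types):
    """Return a logging config enabling the given control plane log types."""
    requested = set(enabled_types)
    unknown = requested - VALID_LOG_TYPES
    if unknown:
        raise ValueError(f"unknown log types: {sorted(unknown)}")
    config = {"clusterLogging": [{"types": sorted(requested), "enabled": True}]}
    disabled = sorted(VALID_LOG_TYPES - requested)
    if disabled:
        # Explicitly disable the rest so the intent is visible in review.
        config["clusterLogging"].append({"types": disabled, "enabled": False})
    return config


logging_config = build_cluster_logging(["api", "controllerManager", "scheduler"])
print(logging_config)
```

For audit-focused compliance work, adding `"audit"` to the enabled list is usually worth considering.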

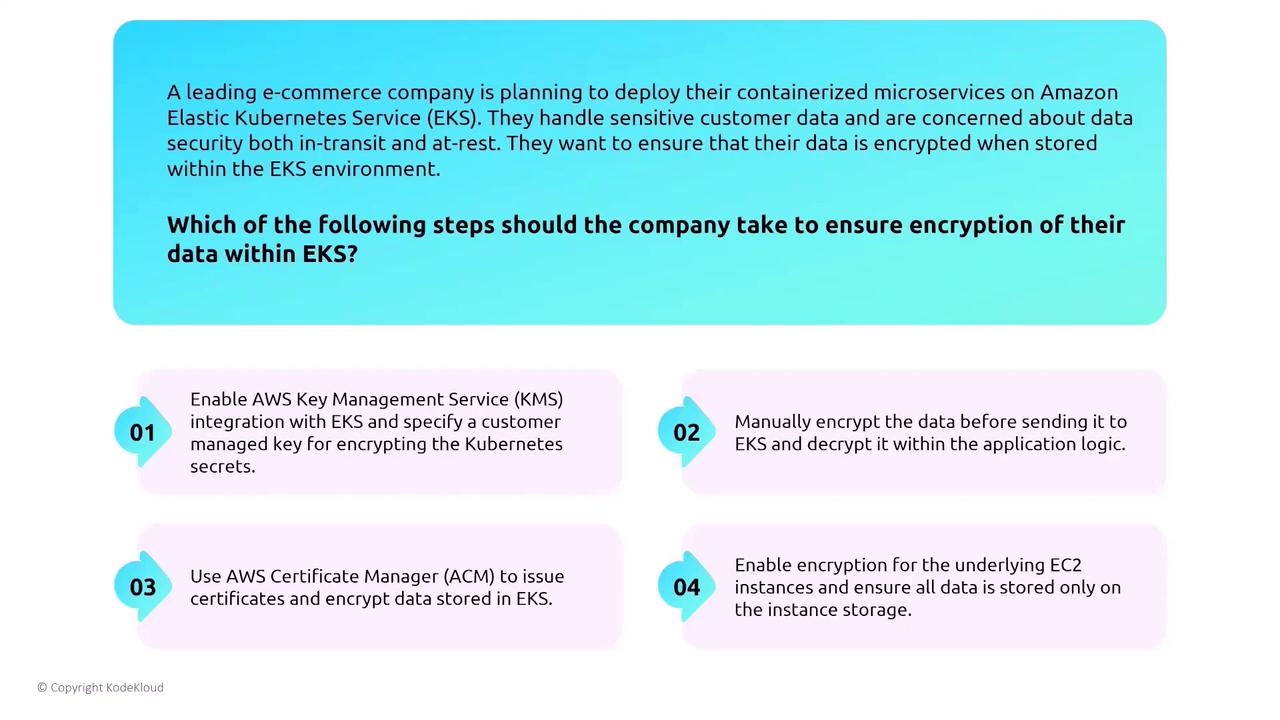

Data Protection

To secure data both in transit and at rest:

- Integrate with AWS Key Management Service (KMS) to encrypt Kubernetes secrets.

- Configure your storage class with the encrypted parameter set to true for EBS volumes.

- Use encrypted EFS configurations for secure shared file storage.

Without KMS integration, Kubernetes secrets are simply base64 encoded, which is an encoding and not encryption. Enabling proper encryption is critical for protecting sensitive data.
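A short demonstration shows why base64 offers no protection: anyone who can read the stored value can reverse it without a key. The secret value and key ARN below are hypothetical, and the final dictionary only sketches the shape of an EKS secrets encryption setting (`resources` plus a KMS `keyArn`).

```python
import base64

secret_value = "s3cr3t-password"  # hypothetical secret

# What a Kubernetes Secret stores in its data field.
encoded = base64.b64encode(secret_value.encode()).decode()

# Reversing it requires no key at all.
decoded = base64.b64decode(encoded).decode()

print(encoded)
print(decoded == secret_value)

# Sketch of an envelope encryption setting for secrets (placeholder key ARN).
encryption_config = [{
    "resources": ["secrets"],
    "provider": {
        "keyArn": "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID"
    },
}]
```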

Persistent Storage with CSI Drivers

For containerized storage:

- EBS Volumes: Use the EBS CSI driver with your Storage Class defined to enable encryption by default when persistent volumes are dynamically provisioned.

- EFS: Ensure storage classes or PersistentVolume definitions are configured to enforce data encryption.

- FSx for Lustre or OpenZFS: Although many FSx solutions are encrypted by default, review the CSI driver configuration to confirm proper encryption parameters.
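For the EBS case, a StorageClass along these lines would make dynamically provisioned volumes encrypted. This is a sketch: the class name, volume type, and optional KMS key are assumptions, while `ebs.csi.aws.com` and the `encrypted` parameter are the EBS CSI driver's provisioner name and setting.

```python
import json


def encrypted_ebs_storage_class(name: str, kms_key_id: str = "") -> dict:
    """StorageClass manifest that provisions encrypted EBS volumes."""
    parameters = {"type": "gp3", "encrypted": "true"}  # values must be strings
    if kms_key_id:
        # Optional customer managed key; otherwise the default EBS key is used.
        parameters["kmsKeyId"] = kms_key_id
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "ebs.csi.aws.com",
        "parameters": parameters,
        "volumeBindingMode": "WaitForFirstConsumer",
    }


storage_class = encrypted_ebs_storage_class("encrypted-gp3")  # hypothetical name
print(json.dumps(storage_class, indent=2))
```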

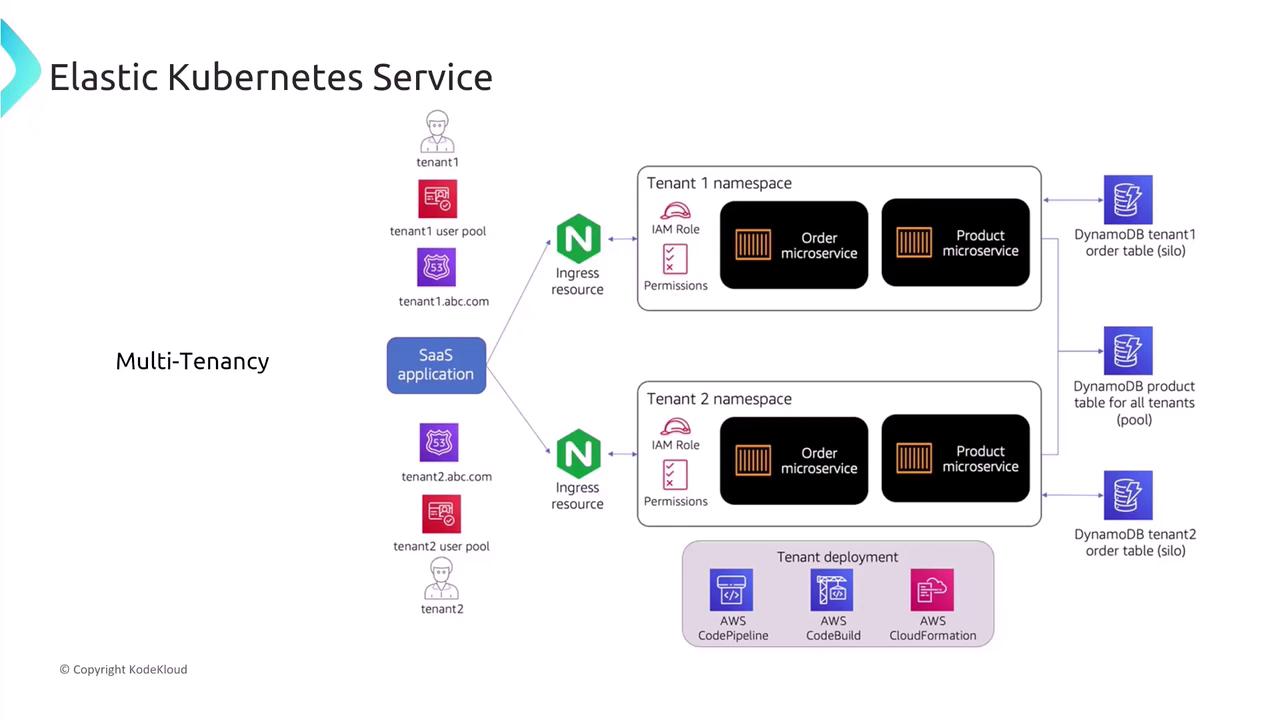

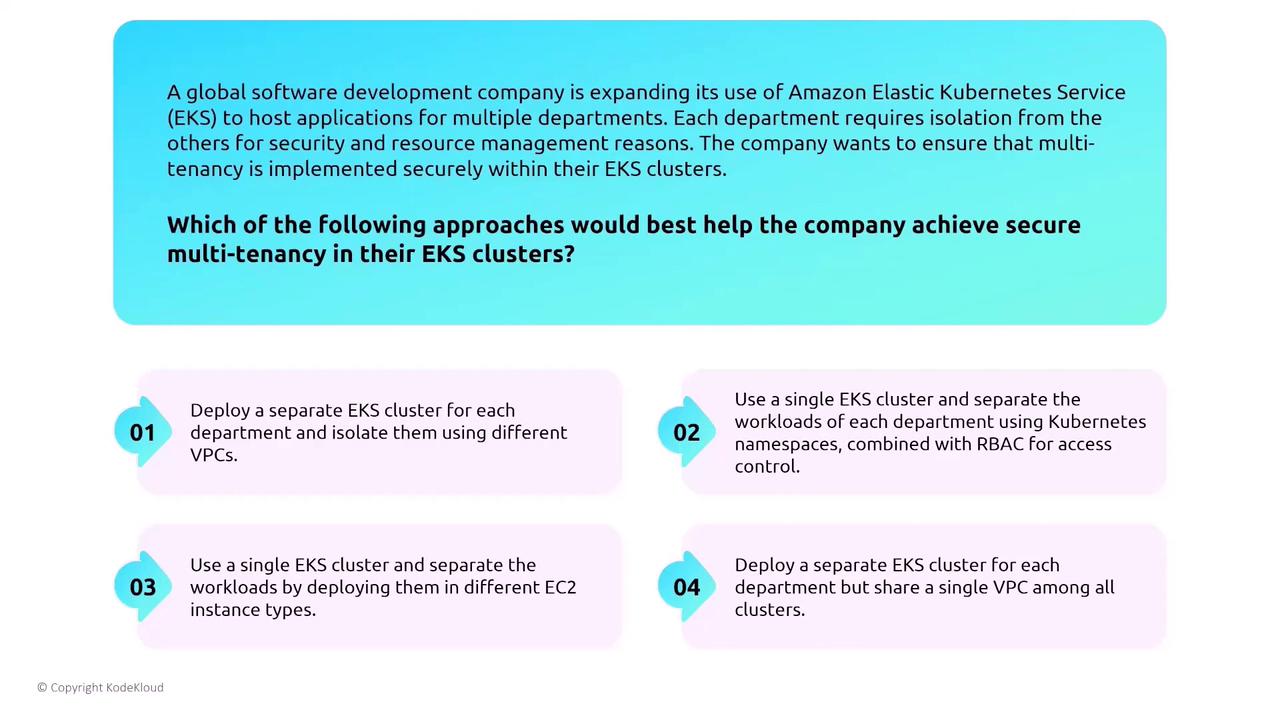

Multi-Tenancy in EKS

Kubernetes is not inherently multi-tenant because its control plane is shared by all workloads. However, you can simulate a multi-tenant environment by:

- Separating workloads using Kubernetes namespaces.

- Implementing role-based access control (RBAC) to isolate tenant resources.

- Applying resource quotas and limit ranges to manage resource consumption effectively.
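The three techniques above can be combined per tenant. The sketch below generates a Namespace, a ResourceQuota, and a RoleBinding for one tenant; the tenant name, group name, and quota values are hypothetical, and binding to the built-in `edit` ClusterRole is just one reasonable choice.

```python
def tenant_manifests(tenant: str, group: str) -> list:
    """Namespace, quota, and RBAC binding that isolate one tenant."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": tenant},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{tenant}-quota", "namespace": tenant},
        "spec": {"hard": {  # illustrative limits
            "requests.cpu": "4",
            "requests.memory": "8Gi",
            "limits.cpu": "8",
            "limits.memory": "16Gi",
            "pods": "20",
        }},
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{tenant}-edit", "namespace": tenant},
        "subjects": [{
            "kind": "Group",
            "name": group,
            "apiGroup": "rbac.authorization.k8s.io",
        }],
        # The namespaced binding limits the ClusterRole to this tenant only.
        "roleRef": {
            "kind": "ClusterRole",
            "name": "edit",
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return [namespace, quota, binding]


manifests = tenant_manifests("team-a", "team-a-developers")
for manifest in manifests:
    print(manifest["kind"], manifest["metadata"]["name"])
```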

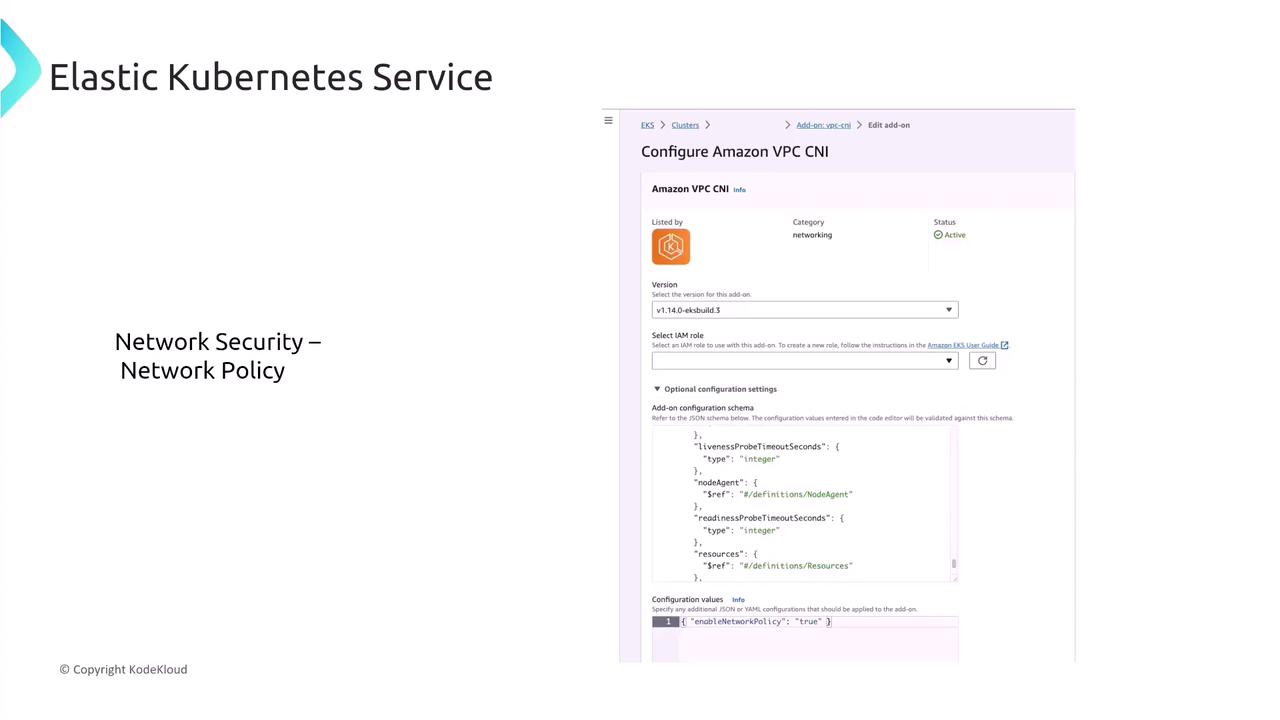

Network Security and Policies

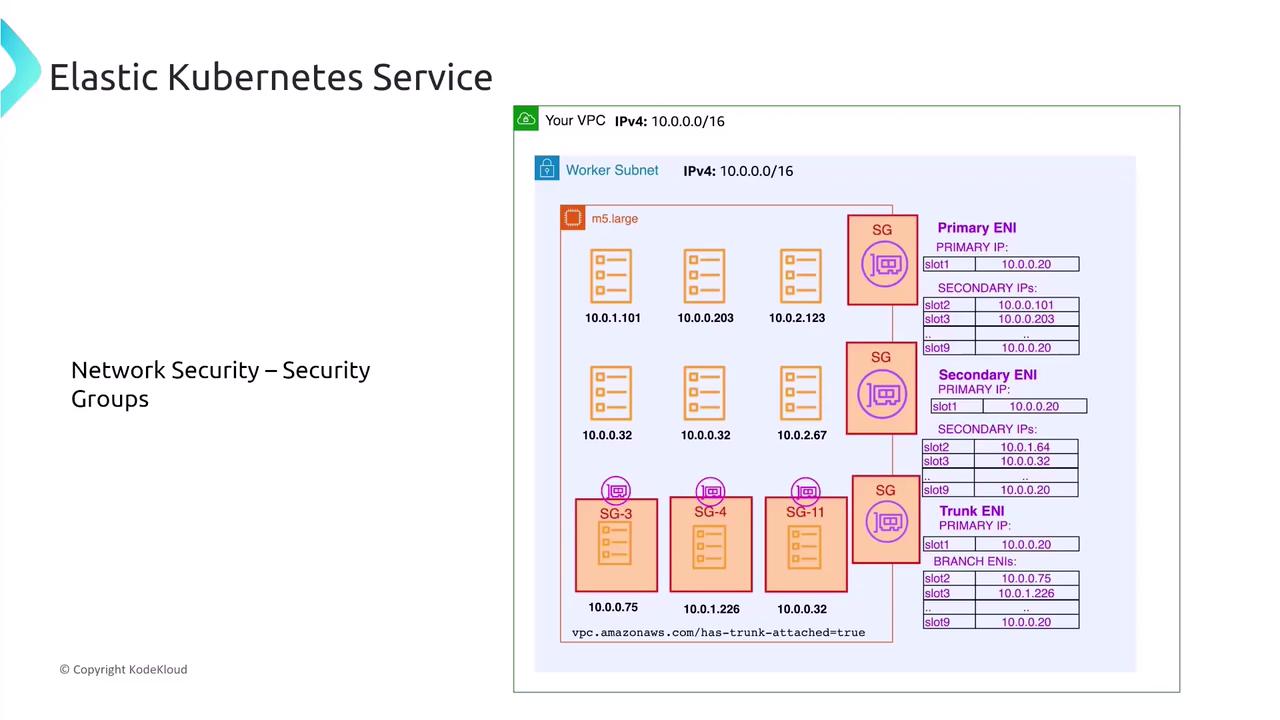

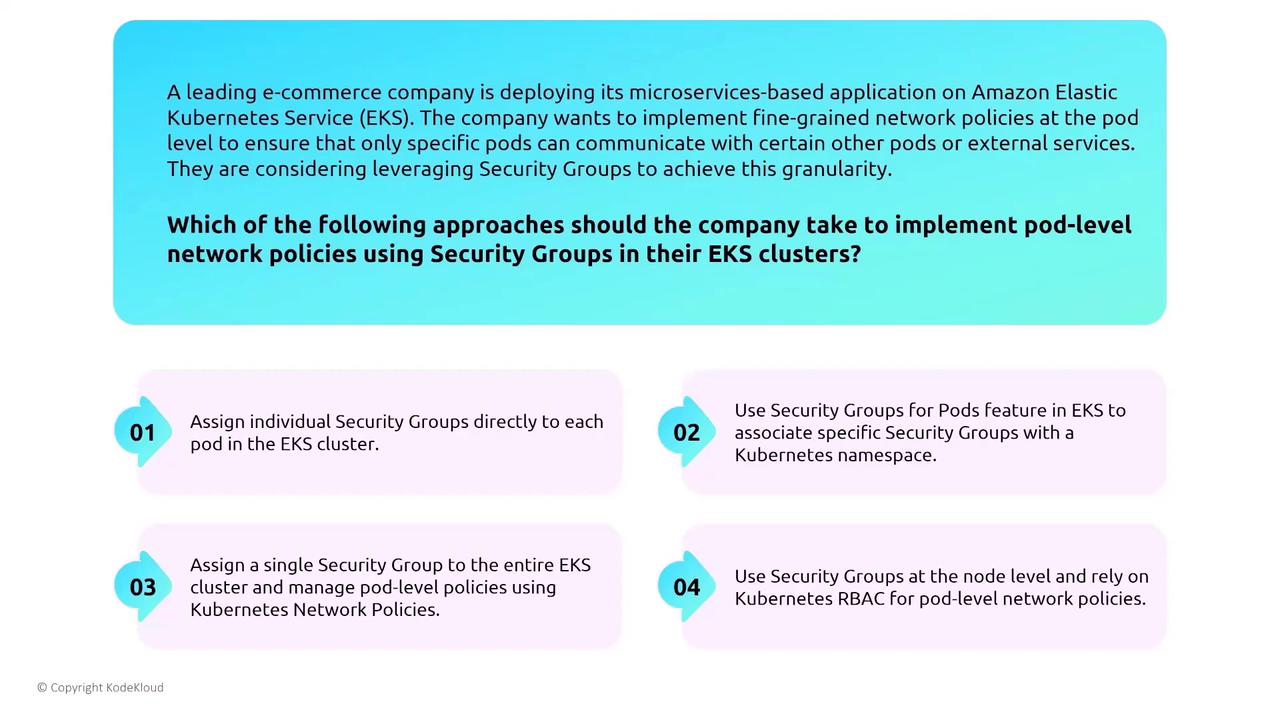

By default, Kubernetes allows all pod-to-pod communication within the cluster. To enforce restricted network boundaries, consider the following best practices:

- Leverage the Amazon VPC Container Network Interface (CNI) plugin that assigns each pod its own IP address.

- Implement Kubernetes Network Policies to limit both inbound and outbound communications between pods.

- Avoid assigning individual security groups directly to each pod (this is not yet the norm).

- Instead, group pods by namespace and combine Kubernetes Network Policies with VPC-level security groups.
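A common starting point for the namespace-based approach is a default-deny policy plus an explicit allowance for traffic inside the namespace. The sketch below builds both as manifests; the namespace and policy names are hypothetical, and the policies only take effect if the cluster's CNI has network policy enforcement enabled.

```python
def default_deny_policy(namespace: str) -> dict:
    """Deny all ingress and egress for every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector matches all pods
            "policyTypes": ["Ingress", "Egress"],
        },
    }


def allow_same_namespace_policy(namespace: str) -> dict:
    """Allow ingress only from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector stays in this namespace.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


policies = [default_deny_policy("team-a"), allow_same_namespace_policy("team-a")]
for policy in policies:
    print(policy["metadata"]["name"], policy["spec"]["policyTypes"])
```

Because network policies are additive, the second policy opens intra-namespace ingress while egress stays denied until another policy allows it.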

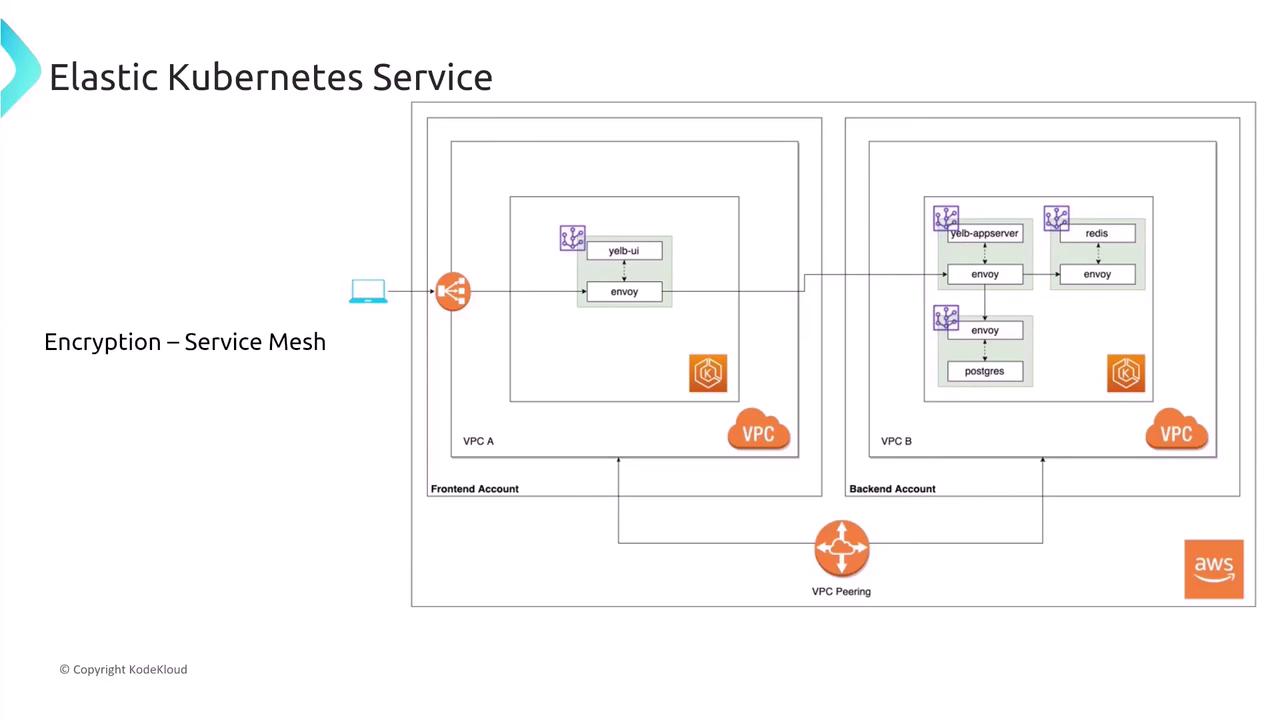

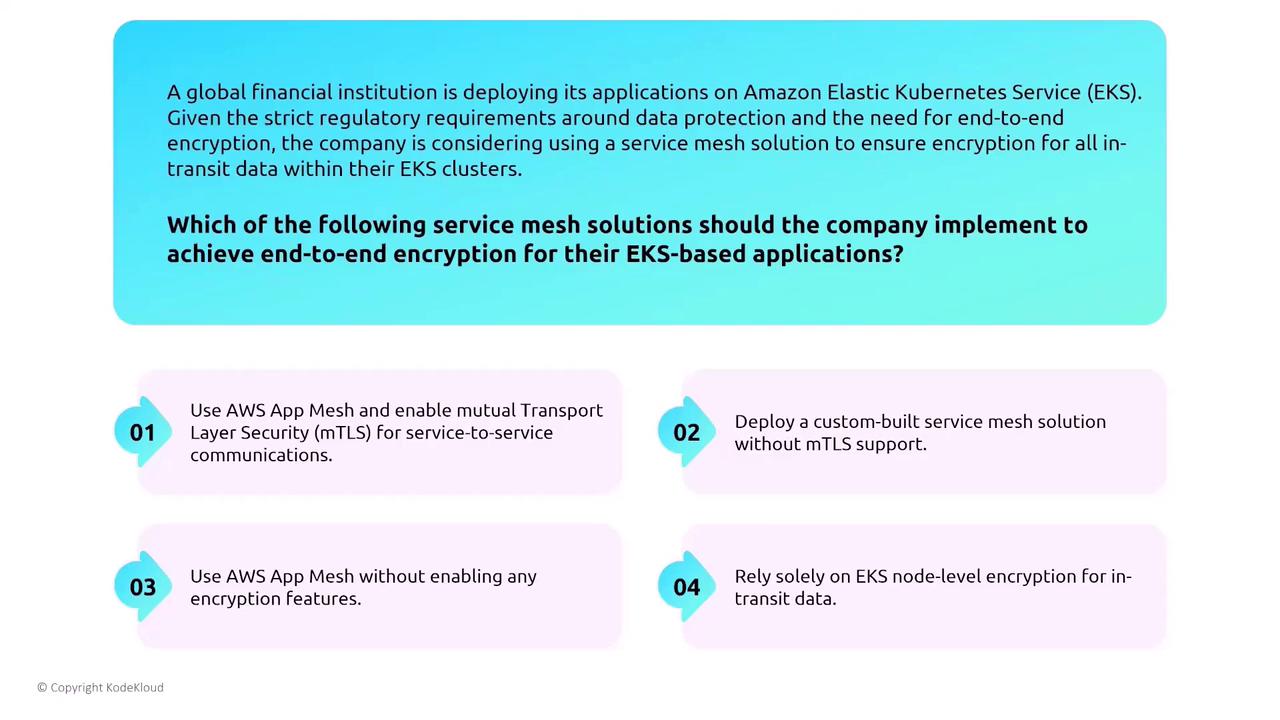

In-Transit Data Encryption with Service Mesh

To secure data in transit between services, many organizations deploy a service mesh. AWS App Mesh is one solution that implements mutual TLS (mTLS) for secure service-to-service communication within EKS. Sidecar proxies, such as Envoy, handle encryption seamlessly and protect all intra-cluster traffic without requiring significant changes to your application configurations.

- Enable AWS App Mesh and set up mTLS across all services.

- Avoid deploying without TLS support; relying solely on EKS-level encryption may not offer comprehensive protection.
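To show what "mutual" means in mTLS, the sketch below configures TLS contexts with Python's standard `ssl` module: the server side refuses clients that do not present a certificate, and the client side verifies the server. This is not App Mesh configuration; it only illustrates the handshake requirements that a sidecar proxy takes care of on the application's behalf. The function names and certificate paths are hypothetical.

```python
import ssl


def server_mtls_context(certfile=None, keyfile=None, client_ca=None):
    """Server-side context that requires a valid client certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # this is what makes the TLS mutual
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)
    if client_ca:
        ctx.load_verify_locations(cafile=client_ca)
    return ctx


def client_mtls_context(certfile=None, keyfile=None, server_ca=None):
    """Client-side context that verifies the server and presents its own cert."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=server_ca)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)
    return ctx


server_ctx = server_mtls_context()
client_ctx = client_mtls_context()
print(server_ctx.verify_mode == ssl.CERT_REQUIRED)
print(client_ctx.check_hostname)
```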

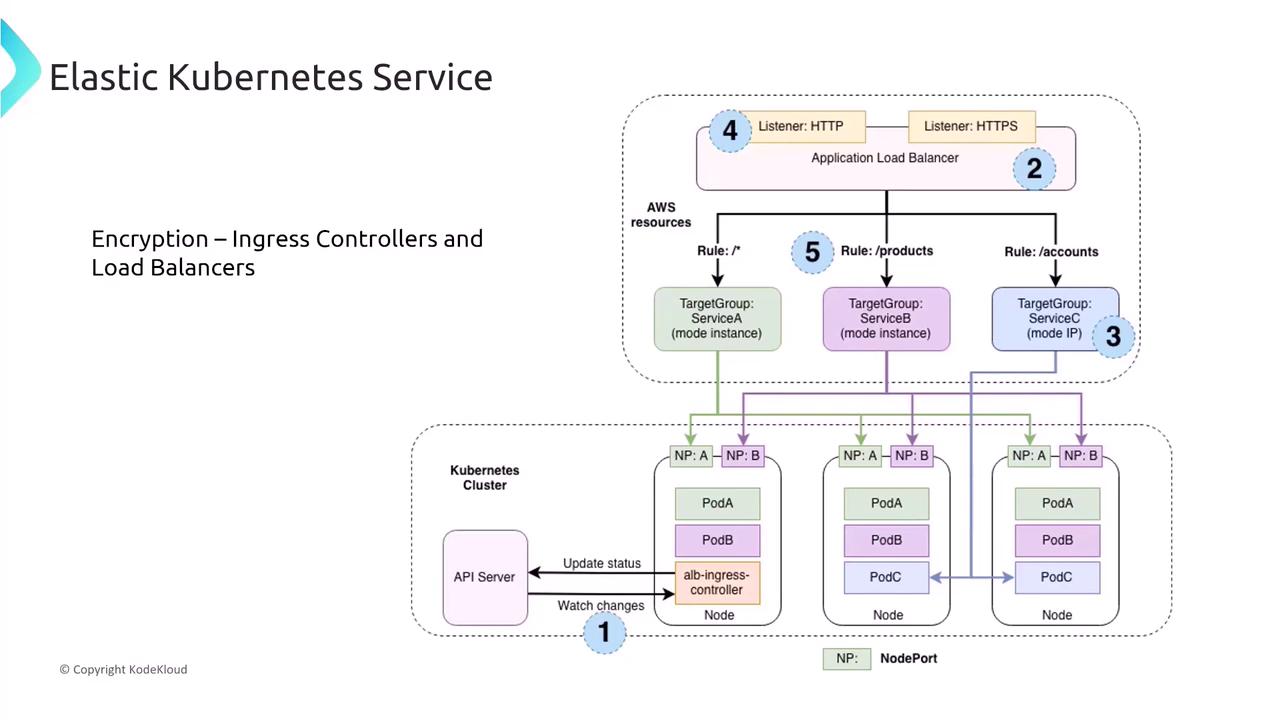

- Use an Application Load Balancer (ALB) with the AWS Load Balancer Controller (formerly the ALB Ingress Controller) for standard HTTP/HTTPS traffic with intelligent routing.

- For ultra-high throughput and minimal overhead, consider a Network Load Balancer (NLB), though ALB is generally recommended for its advanced routing capabilities.
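As a sketch of the ALB option, the following helper builds an Ingress manifest that terminates HTTPS at the load balancer. The service name, port, and certificate ARN are hypothetical; the annotations shown are ones the AWS Load Balancer Controller reads, but the full set you need depends on your environment.

```python
import json


def alb_ingress(name, namespace, service_name, service_port, certificate_arn):
    """Ingress manifest routing HTTPS traffic through an ALB to one service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                "alb.ingress.kubernetes.io/target-type": "ip",
                "alb.ingress.kubernetes.io/listen-ports": json.dumps([{"HTTPS": 443}]),
                "alb.ingress.kubernetes.io/certificate-arn": certificate_arn,
            },
        },
        "spec": {
            "ingressClassName": "alb",
            "rules": [{"http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {
                    "name": service_name,
                    "port": {"number": service_port},
                }},
            }]}}],
        },
    }


ingress = alb_ingress(
    "web", "default", "web-service", 80,
    "arn:aws:acm:us-east-1:111122223333:certificate/EXAMPLE",  # placeholder
)
print(json.dumps(ingress, indent=2))
```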

CSI Drivers and Storage Encryption

When working with Container Storage Interface (CSI) drivers for persistent storage, consider the following best practices:

- EBS Volumes: Ensure your Storage Class is defined with encryption enabled. The EBS CSI driver will provision encrypted volumes when the encrypted parameter is set to true.

- EFS: Configure the Storage Class or PersistentVolume definitions to require encryption for shared file systems.

- FSx for Lustre or OpenZFS: Although many FSx solutions are encrypted by default, verify the CSI driver configuration to ensure encryption settings are correctly specified.

Comprehensive Cluster Architecture

Review the following comprehensive diagram that combines all of the discussed components: EKS, its control plane, worker nodes, associated AWS services (like load balancers, databases, and storage), and various security and networking configurations. Key aspects include:

- Deployment across multiple Availability Zones with both public and private subnets.

- An EKS control plane and nodes with designated IAM roles.

- Integration with essential components like load balancers, NAT gateways, endpoints, and AWS services such as S3, SES, and CloudTrail.

- Inclusion of security services like AWS Config, Systems Manager, and Certificate Manager.

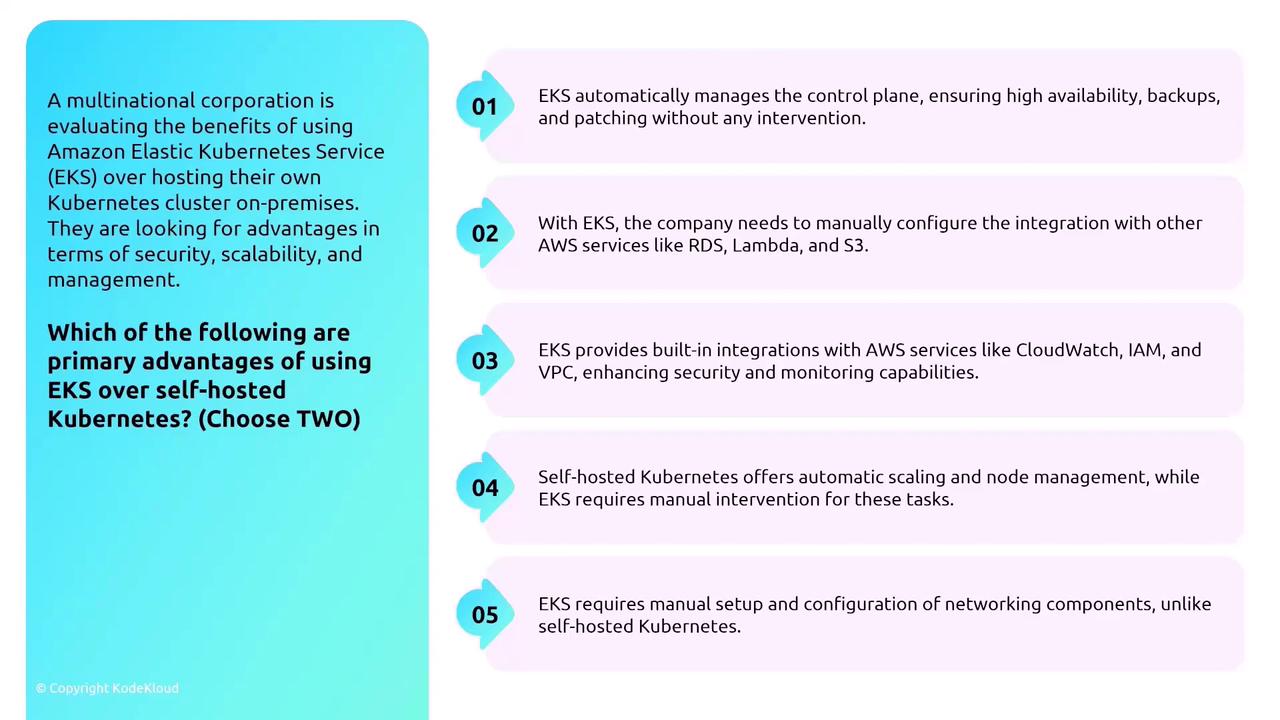

- Automatic patching and management of the control plane.

- Native integrations with CloudWatch, IAM, and VPCs for enhanced security and operational simplicity.

Conclusion

In this lesson, we covered the following key topics:

- The evolution of pod identity in EKS and the role of the Security Token Service (STS).

- Best practices for IAM integration for clusters, nodes, pods, and the connector role.

- Options for managing worker nodes (self-managed nodes, managed node groups, and Fargate pods) and their impact on security responsibilities.

- Techniques to secure data at rest and in transit using CSI drivers, encryption strategies, and service meshes.

- Methods to enforce network segmentation and secure multi-tenancy in an EKS environment.

- Logging, monitoring, and architectural best practices to ensure your EKS cluster meets security and compliance requirements.