AWS Solutions Architect Associate Certification

Batch

In this lesson, we explore AWS Batch, a fully managed service that runs batch computing jobs without requiring you to provision or manage the underlying compute infrastructure yourself.

Imagine a professional kitchen where every dish requires precise preparation: vegetables must be diced and peeled, meat marinated, soup simmered, and frozen ingredients thawed. A manager assigns the right number of staff to each task, ensuring that critical processes are never delayed while preventing resource waste on simpler tasks. AWS Batch works in a similar way for computing jobs. For instance, if you need to convert a large collection of videos, you simply submit a job and AWS Batch executes the conversion script on the most appropriate compute resources, all without constant oversight.

AWS Batch automates resource selection: when you specify your job requirements, the service provisions suitable EC2 instances or Fargate capacity based on CPU, memory, and other needs. It also queues jobs until the necessary resources are available, and it lets you assign priority levels so that critical tasks run first.

Key Benefit

AWS Batch eliminates the manual burden of managing compute resource allocation so you can focus on improving your application and workflows.

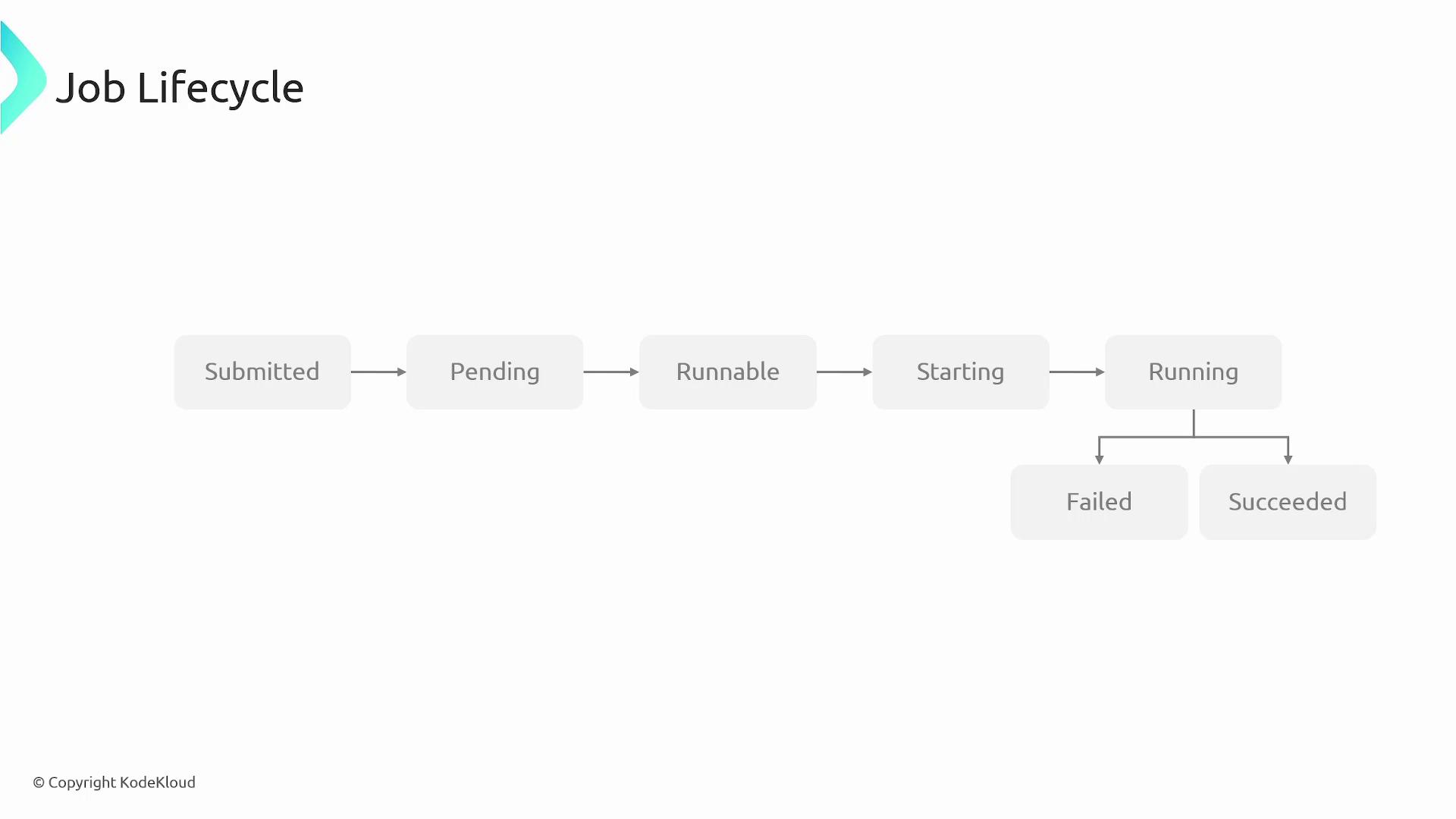

Job Lifecycle

The lifecycle of an AWS Batch job consists of several well-defined stages:

- Submitted: The job has been submitted to a queue but has not yet been evaluated by the scheduler.

- Pending: The job is in the queue but cannot run yet because it depends on another job or resource.

- Runnable: The job becomes ready for assignment and waits for the necessary compute resources (vCPUs, memory, etc.) to become available.

- Starting: The job is assigned to a compute resource, and its container begins to initialize.

- Running: The job is actively executed, processing the prescribed commands.

- Succeeded/Failed: The job concludes by entering a "succeeded" state if it completed successfully or "failed" if any issues arose during execution.

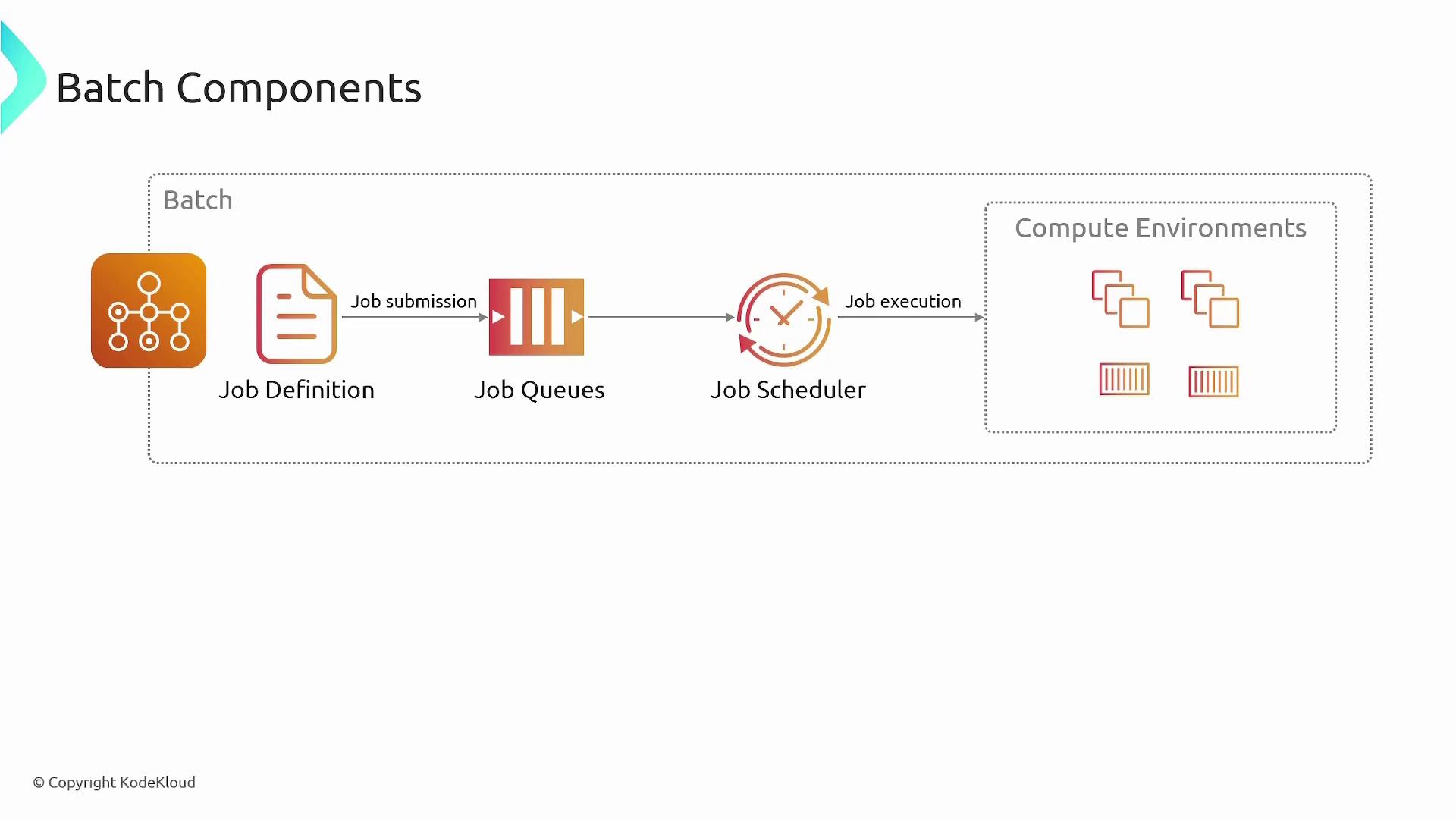
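
The stages above can be read as a small state machine. The following is a minimal sketch in plain Python, not AWS code: the status strings mirror the stage names in the list, while `can_transition` and the strictly linear ordering are simplifying assumptions made for illustration.

```python
from enum import Enum


class JobStatus(Enum):
    SUBMITTED = "SUBMITTED"
    PENDING = "PENDING"
    RUNNABLE = "RUNNABLE"
    STARTING = "STARTING"
    RUNNING = "RUNNING"
    SUCCEEDED = "SUCCEEDED"
    FAILED = "FAILED"


# Non-terminal stages in the order the lesson lists them.
LIFECYCLE = [
    JobStatus.SUBMITTED,
    JobStatus.PENDING,
    JobStatus.RUNNABLE,
    JobStatus.STARTING,
    JobStatus.RUNNING,
]
TERMINAL = {JobStatus.SUCCEEDED, JobStatus.FAILED}


def can_transition(current: JobStatus, target: JobStatus) -> bool:
    """Return True if the simplified model allows current -> target."""
    if current in TERMINAL:
        return False  # a finished job does not change state again
    if target is JobStatus.FAILED:
        return True  # assumption: any unfinished job may end up failed
    if current is JobStatus.RUNNING:
        return target is JobStatus.SUCCEEDED
    # Otherwise only the next stage in the list is allowed.
    return target is LIFECYCLE[LIFECYCLE.index(current) + 1]


if __name__ == "__main__":
    print(can_transition(JobStatus.RUNNABLE, JobStatus.STARTING))
    print(can_transition(JobStatus.SUBMITTED, JobStatus.RUNNING))
```

A model like this can help when writing a poller that watches job status and needs to tell progress apart from a terminal state.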

Key AWS Batch Components

AWS Batch is built around several core components that work together to streamline batch job processing:

Job Definition: Acts as a template that outlines how a job should run. It includes specifications such as the Docker image to use, vCPU count, memory requirements, commands to execute, and environment variables.
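
As an illustration, a job definition can be assembled as a plain dictionary before it is registered. This is a minimal sketch: the helper `build_job_definition`, the job name, the image URI, and the script name are hypothetical, and the dictionary keys follow the request shape of the Batch `RegisterJobDefinition` API as I understand it, so check them against the current API reference before use.

```python
def build_job_definition(name, image, command, vcpus, memory_mib, env=None):
    """Build a container job definition request as a plain dict."""
    env = env or {}
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": image,            # Docker image to run
            "command": list(command),  # command executed in the container
            "resourceRequirements": [
                # The API expects resource values as strings.
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
            "environment": [
                {"name": key, "value": value} for key, value in env.items()
            ],
        },
    }


if __name__ == "__main__":
    job_def = build_job_definition(
        name="video-convert",  # hypothetical name
        image="123456789012.dkr.ecr.us-east-1.amazonaws.com/video-convert:latest",
        command=["python", "convert.py"],  # hypothetical script
        vcpus=2,
        memory_mib=4096,
        env={"OUTPUT_FORMAT": "mp4"},
    )
    print(job_def["containerProperties"]["resourceRequirements"])
    # With boto3 installed and credentials configured, this could be sent as:
    #   boto3.client("batch").register_job_definition(**job_def)
```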

Job Submission: With a job definition in place, submissions create an instance of that template. Parameter overrides can be applied at this stage as needed.
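
A submission with overrides might look like the following sketch. The helper `build_submit_request` and all names passed to it are hypothetical; the keys (`jobName`, `jobQueue`, `jobDefinition`, `containerOverrides`, `dependsOn`) are intended to match the Batch `SubmitJob` request, which is worth confirming in the API reference.

```python
def build_submit_request(job_name, queue, job_definition,
                         command=None, env=None, depends_on=()):
    """Build a job submission request, applying optional overrides."""
    request = {
        "jobName": job_name,
        "jobQueue": queue,
        "jobDefinition": job_definition,
    }
    overrides = {}
    if command is not None:
        overrides["command"] = list(command)  # replaces the template command
    if env:
        overrides["environment"] = [
            {"name": key, "value": value} for key, value in env.items()
        ]
    if overrides:
        request["containerOverrides"] = overrides
    if depends_on:
        # The job waits until these job IDs have finished.
        request["dependsOn"] = [{"jobId": job_id} for job_id in depends_on]
    return request


if __name__ == "__main__":
    request = build_submit_request(
        job_name="convert-batch-001",    # hypothetical
        queue="high-priority",           # hypothetical queue name
        job_definition="video-convert",  # hypothetical definition name
        env={"INPUT_PREFIX": "uploads/2024/"},
    )
    print(request)
    # With boto3 installed and credentials configured, this could be sent as:
    #   boto3.client("batch").submit_job(**request)
```

Keeping the overrides optional means the same job definition can serve many submissions that differ only in their inputs.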

Job Queue: Submitted jobs are placed into queues that are linked to one or more compute environments. Each queue features its own priority setting to help determine execution order.

Job Scheduler: This component assesses job priorities and resource requirements to place jobs onto available compute resources. When suitable resources become available, the scheduler moves jobs from the runnable state to starting and then running.
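
To make the interplay of queue priority and resource requirements concrete, here is a toy model in plain Python. It is not the algorithm AWS Batch actually uses: `QueuedJob`, `pick_jobs_to_start`, and the rule of skipping jobs that do not fit are assumptions chosen to keep the sketch short. The one behavior it borrows from the service is that queues with a higher priority value are considered first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueuedJob:
    name: str
    queue_priority: int   # higher value is considered first
    submitted_order: int  # lower value was submitted earlier
    vcpus: int            # vCPUs the job requests


def pick_jobs_to_start(jobs, free_vcpus):
    """Return names of jobs that fit, highest queue priority first."""
    started = []
    ordered = sorted(jobs, key=lambda j: (-j.queue_priority, j.submitted_order))
    for job in ordered:
        if job.vcpus <= free_vcpus:
            started.append(job.name)
            free_vcpus -= job.vcpus
    return started


if __name__ == "__main__":
    jobs = [
        QueuedJob("nightly-report", queue_priority=1, submitted_order=0, vcpus=4),
        QueuedJob("urgent-encode", queue_priority=10, submitted_order=1, vcpus=4),
        QueuedJob("urgent-thumbs", queue_priority=10, submitted_order=2, vcpus=2),
    ]
    # Both urgent jobs fit in 6 vCPUs; the low-priority job keeps waiting.
    print(pick_jobs_to_start(jobs, free_vcpus=6))
```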

Container Execution: Jobs are executed within Docker containers. AWS Batch pulls the specified Docker image, sets up the container environment, and runs your job command, ensuring consistency and isolation.

Compute Environment: A set of compute resources (either EC2 instances or Fargate) where jobs run. This lesson covers three compute environment options:

- EC2 On-Demand

- EC2 Spot

- Fargate

AWS Batch automatically scales these environments based on the job queue, ensuring optimal resource utilization.
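
The three options above map to different `computeResources` settings when a managed compute environment is created. The sketch below builds such a request as a plain dictionary. The helper `build_compute_environment` and every value passed to it are hypothetical, the keys follow the Batch `CreateComputeEnvironment` request as I understand it, and a real request may need further fields (for example a service role or Spot settings) depending on the account setup.

```python
# Lesson option -> value assumed for the "type" field of computeResources.
RESOURCE_TYPES = {"EC2", "SPOT", "FARGATE"}


def build_compute_environment(name, resource_type, max_vcpus, subnets,
                              security_groups, instance_role=None,
                              instance_types=None, min_vcpus=0):
    """Build a managed compute environment request as a plain dict."""
    if resource_type not in RESOURCE_TYPES:
        raise ValueError(f"unsupported resource type: {resource_type}")
    resources = {
        "type": resource_type,
        "maxvCpus": max_vcpus,  # upper bound for automatic scaling
        "subnets": list(subnets),
        "securityGroupIds": list(security_groups),
    }
    if resource_type in {"EC2", "SPOT"}:
        if instance_role is None:
            raise ValueError("EC2-based environments need an instance role")
        # These settings apply only to EC2-based environments.
        resources["minvCpus"] = min_vcpus
        resources["instanceTypes"] = list(instance_types or ["optimal"])
        resources["instanceRole"] = instance_role
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",
        "state": "ENABLED",
        "computeResources": resources,
    }


if __name__ == "__main__":
    env = build_compute_environment(
        name="spot-encoders",                 # hypothetical
        resource_type="SPOT",
        max_vcpus=64,
        subnets=["subnet-0example"],          # placeholder ID
        security_groups=["sg-0example"],      # placeholder ID
        instance_role="ecsInstanceRole",      # assumed instance profile name
    )
    print(env["computeResources"]["type"])
    # With boto3 installed and credentials configured, this could be sent as:
    #   boto3.client("batch").create_compute_environment(**env)
```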

Features and Benefits

Leveraging AWS Batch can significantly optimize your workflow and resource management. Here are some key advantages:

- Dynamic Resource Provisioning: Automatically provisions the optimal amount and type of compute resources according to your batch job’s requirements.

- Job Queues and Prioritization: Efficiently manages job queues, ensuring that critical jobs are prioritized.

- Cost Efficiency: Uses EC2 Spot Instances and automatic scaling to minimize over-provisioning, which reduces operational costs.

- Seamless AWS Integration: Conveniently integrates with other AWS services such as EC2, ECS, ECR, CloudWatch, and IAM.

- Customizable Compute Environments: Allows you to define compute environments tailored to specific needs—including instance types, vCPU limits, and launch templates.

Efficiency Tip

By leveraging AWS Batch’s automated scaling and priority-based job scheduling, you can enhance both performance and resource utilization while keeping costs under control.

For more detailed insights, consider exploring additional AWS documentation or related AWS Batch tutorials.