SageMaker

Welcome, Solutions Architects and future Solutions Architects! In this article, you'll learn about Amazon SageMaker, AWS's managed machine learning service for building, training, and deploying ML models. With SageMaker you can build models that detect patterns in data and make predictions, which makes it a natural choice for ML-driven projects on AWS.

Machine learning and artificial intelligence rely on mathematical algorithms to identify trends and patterns. These algorithms analyze input data to make predictions or decisions. For example, an algorithm might analyze demographic data to predict a person's likelihood of purchasing an item, such as a hat.

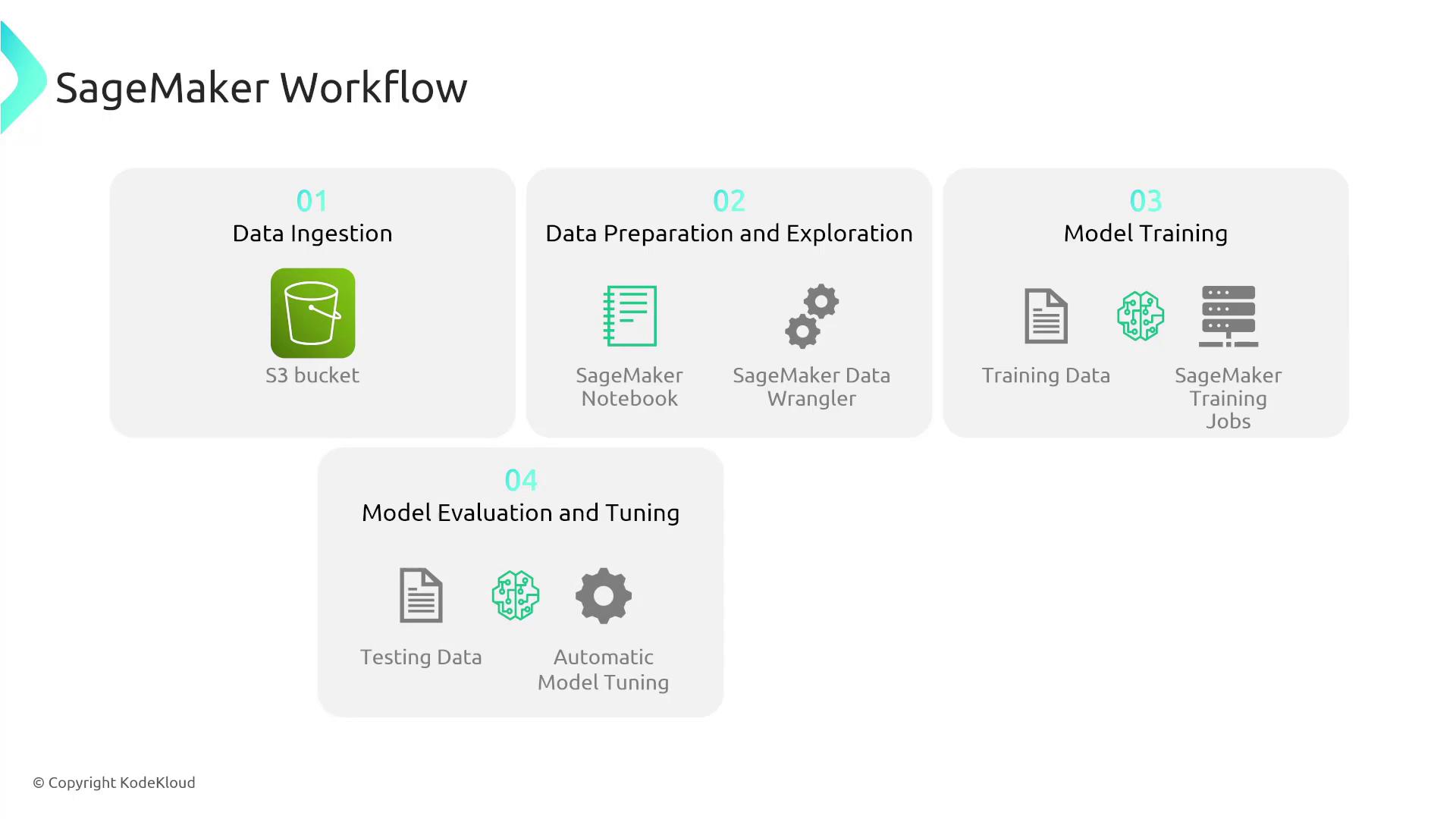

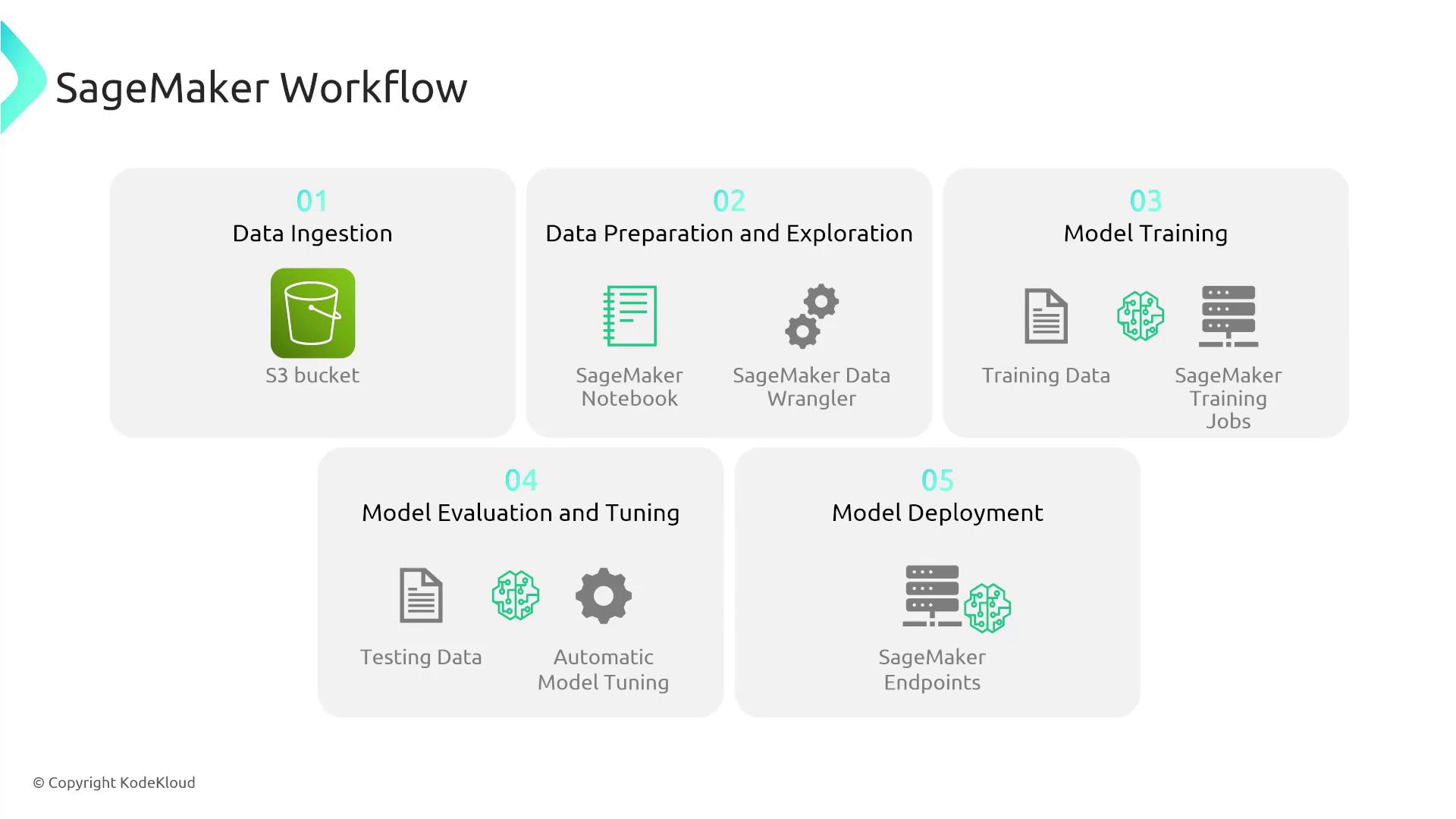

SageMaker supports the entire ML workflow with a comprehensive suite of tools and services. Below is an overview of the key steps in the machine learning lifecycle with SageMaker.

Key Steps in the Machine Learning Workflow

1. Data Ingestion and Preparation

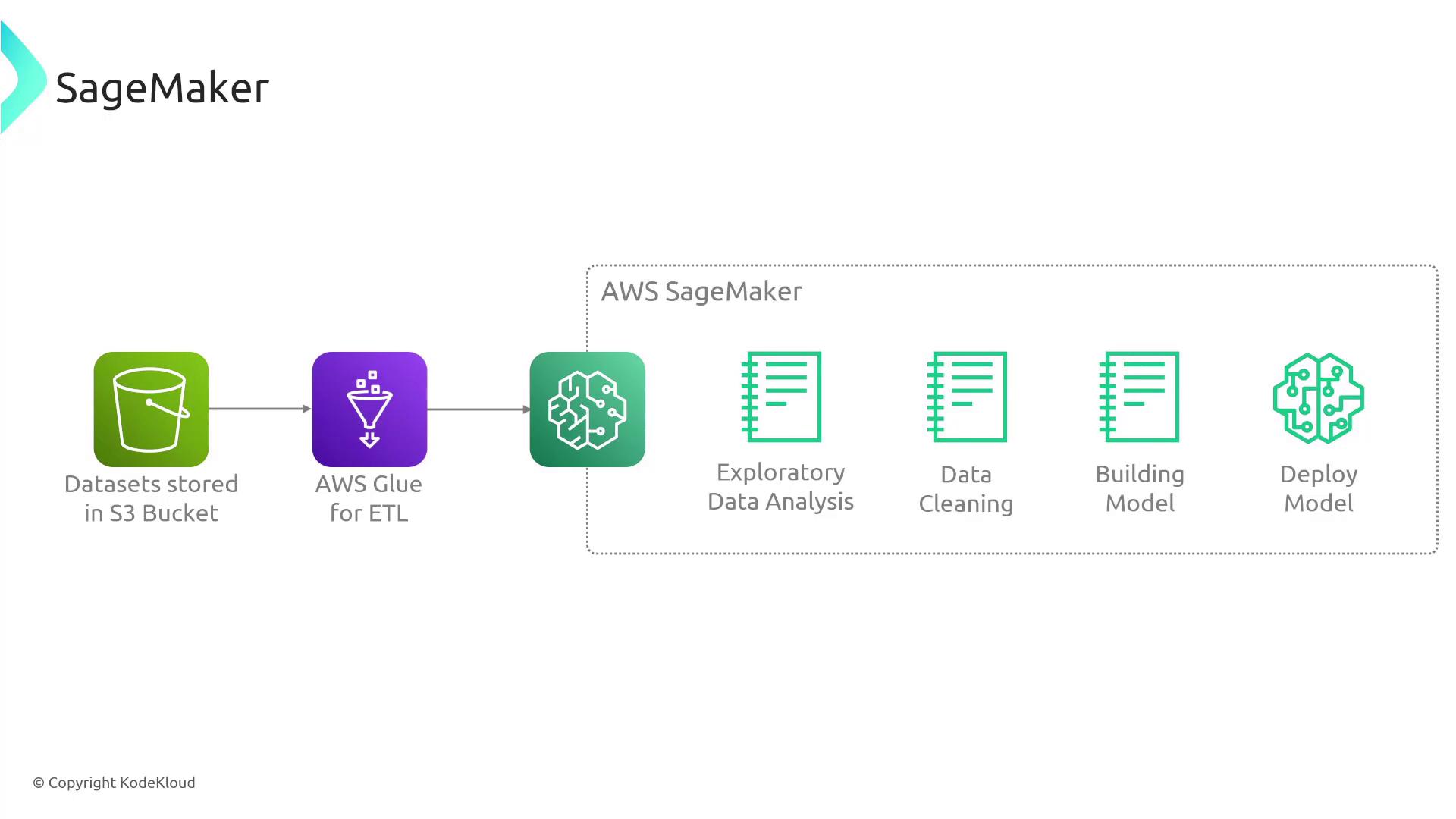

Begin by gathering relevant data that addresses your business or technical problem. For example, you might store demographic data and historical purchase information in an Amazon S3 bucket. SageMaker works with AWS Glue DataBrew, SageMaker Data Wrangler, and SageMaker Notebooks (Jupyter Notebooks) to help you explore, clean, and pre-process your data. Data Wrangler, for instance, offers a visual interface for removing outliers and transforming data so that model training starts from high-quality input.
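To make the cleaning step concrete, here is a minimal sketch of outlier removal using the interquartile range rule. It is plain Python, not the Data Wrangler interface or API, and the `ages` values and the `remove_outliers_iqr` helper are made up for illustration.

```python
import statistics


def remove_outliers_iqr(values, k=1.5):
    """Keep only values inside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartiles of the column
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


# Hypothetical "age" column with one obviously bad entry (230).
ages = [23, 25, 31, 35, 38, 41, 44, 52, 230]
cleaned = remove_outliers_iqr(ages)
print(cleaned)
```

The multiplier `k=1.5` is a common convention rather than a rule; a stricter or looser value may suit your data better.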

2. Model Training

Once your data is prepared, you can train your machine learning model. During training, the model learns relationships within the data from examples with known outcomes, so that it can later make predictions for new cases it has not seen. The objective is a model that predicts accurately, for instance one that can tell with high confidence whether a person is likely to purchase a hat.
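What "learning from known outcomes" means can be sketched with a tiny logistic regression trained by gradient descent. This is an illustration of the idea only, not how a SageMaker training job is implemented; the data, the single scaled feature, and the function names are all hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(xs, ys, learning_rate=1.0, epochs=2000):
    """Fit p(buy) = sigmoid(w * x + b) with batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example with a known outcome.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= learning_rate * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * sum(errors) / n
    return w, b


def predict(w, b, x):
    """Estimated probability of a purchase for a new, unseen case."""
    return sigmoid(w * x + b)


# Hypothetical data: one scaled demographic feature in [0, 1] and
# whether that person bought a hat (1) or not (0).
xs = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train(xs, ys)
print(predict(w, b, 0.9), predict(w, b, 0.1))
```

After training, a high feature value should yield a probability above 0.5 and a low value one below 0.5.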

3. Model Evaluation and Tuning

After training, SageMaker provides tools to validate and fine-tune your model. This phase involves adjusting hyperparameters (settings chosen before training, such as the learning rate) to improve accuracy and reliability. Evaluating the model on data it was not trained on helps confirm that it will perform well in production.
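Evaluation usually comes down to comparing predictions against known labels on held-out data. The sketch below computes three common metrics in plain Python; the labels and predictions are invented for the example, and which metric matters most depends on your problem.

```python
def evaluate(y_true, y_pred):
    """Return accuracy, precision, and recall for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    return {
        "accuracy": (tp + tn) / len(pairs) if pairs else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical held-out labels (did the person buy a hat?) and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = evaluate(y_true, y_pred)
print(metrics)
```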

4. Model Deployment

When your model is tuned and validated, you can deploy it to production using SageMaker Endpoints. This enables near real-time predictions and supports advanced deployment options such as A/B testing, auto scaling, and concurrent serving of multiple models.
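Once an endpoint exists, an application requests predictions through the SageMaker runtime API. The sketch below wraps the boto3 `invoke_endpoint` call in a small helper. The endpoint name, the CSV feature layout, and the response value are assumptions for illustration, and a stub client stands in for `boto3.client("sagemaker-runtime")` so the example runs without AWS credentials.

```python
def predict_with_endpoint(runtime_client, endpoint_name, features):
    """Send one CSV row to an endpoint and return the raw response text."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=",".join(str(value) for value in features).encode("utf-8"),
    )
    return response["Body"].read().decode("utf-8")


class StubBody:
    """Mimics the streaming body of a real response."""

    def __init__(self, payload):
        self._payload = payload

    def read(self):
        return self._payload


class StubRuntimeClient:
    """Offline stand-in for boto3.client("sagemaker-runtime")."""

    def invoke_endpoint(self, EndpointName, ContentType, Body):
        self.last_request = {
            "EndpointName": EndpointName,
            "ContentType": ContentType,
            "Body": Body,
        }
        return {"Body": StubBody(b"0.87")}  # made-up purchase probability


stub = StubRuntimeClient()
# Hypothetical features: age, income, past hat purchases.
result = predict_with_endpoint(stub, "hat-purchase-endpoint", [34, 52000, 1])
print(result)
```

With real credentials you would pass `boto3.client("sagemaker-runtime")` instead of the stub. The format of the response depends on the model container behind the endpoint.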

Deep Dive into SageMaker Components

Notebook Instances

SageMaker offers interactive Jupyter Notebooks that allow you to write, run, and visualize Python code. This environment is ideal for exploring data and developing code in a web-based setting.

Training Jobs

You can launch training jobs that leverage various machine learning algorithms suitable for diverse data scenarios. SageMaker also supports distributed training across multiple instances, which can significantly reduce the time required to train your models.
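As a rough sketch, a training job that spans several instances can be described as a request for the boto3 `create_training_job` call. Every value below (job name, image URI, role ARN, bucket paths, instance type) is a placeholder, and whether training is actually distributed depends on the algorithm in the training image.

```python
def build_training_job_request(job_name, image_uri, role_arn,
                               train_s3_uri, output_s3_uri, instance_count=2):
    """Build keyword arguments for the SageMaker create_training_job call."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "ContentType": "text/csv",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                # Give each instance a different shard of the training data.
                "S3DataDistributionType": "ShardedByS3Key",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": "ml.m5.xlarge",
            "InstanceCount": instance_count,  # more than 1 spreads the job
            "VolumeSizeInGB": 30,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


request = build_training_job_request(
    job_name="hat-purchase-training-001",                        # placeholder
    image_uri="<training-image-uri>",                            # placeholder
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",  # placeholder
    train_s3_uri="s3://example-bucket/hat-data/train/",          # placeholder
    output_s3_uri="s3://example-bucket/hat-data/output/",        # placeholder
)
# To submit it for real: boto3.client("sagemaker").create_training_job(**request)
print(request["ResourceConfig"])
```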

Endpoints for Deployment

Deploy your trained and tuned models to SageMaker Endpoints to integrate machine learning predictions into your live applications. This feature includes support for auto scaling to handle varying workloads.
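Endpoint auto scaling is configured through Application Auto Scaling. The sketch below builds the two requests involved, one to register the endpoint variant as a scalable target and one to attach a target-tracking policy. The endpoint name, variant name, capacity limits, target value, and cooldowns are illustrative assumptions, not recommendations.

```python
def build_endpoint_scaling_requests(endpoint_name, variant_name,
                                    min_instances, max_instances,
                                    invocations_per_instance):
    """Build kwargs for register_scalable_target and put_scaling_policy."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    target = {**common, "MinCapacity": min_instances, "MaxCapacity": max_instances}
    policy = {
        **common,
        "PolicyName": f"{endpoint_name}-invocations-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Add or remove instances to hold this many invocations per instance.
            "TargetValue": float(invocations_per_instance),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance",
            },
            "ScaleInCooldown": 300,   # seconds, assumed value
            "ScaleOutCooldown": 60,   # seconds, assumed value
        },
    }
    return target, policy


target, policy = build_endpoint_scaling_requests(
    "hat-purchase-endpoint", "AllTraffic",
    min_instances=1, max_instances=4, invocations_per_instance=100,
)
# To apply them for real:
#   client = boto3.client("application-autoscaling")
#   client.register_scalable_target(**target)
#   client.put_scaling_policy(**policy)
print(target["ResourceId"])
```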

Note

SageMaker integrates seamlessly with other AWS services. For example, AWS Glue can be used for ETL tasks, while S3 serves as secure and scalable data storage.

Advanced Features of SageMaker

SageMaker offers several advanced capabilities that make it a complete ML platform:

- Integrated Jupyter Notebooks: Use a familiar environment for interactive development.

- Distributed Training: Scale training across multiple instances to speed up the process.

- Automatic Model Tuning: Search over hyperparameter values to find the combination that gives the best model performance.

- SageMaker Studio: Access a unified IDE covering every stage of the ML lifecycle, from data preparation to deployment.

- Built-in Algorithms and Bring Your Own Algorithm (BYOA): Flexibility to choose from pre-built algorithms or integrate your custom algorithms within SageMaker’s containerized environment.
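The idea behind automatic model tuning from the list above can be sketched as a search over hyperparameter combinations. The example uses an exhaustive grid search for simplicity, which is a stand-in technique and not a description of how SageMaker searches; the hyperparameter names and the `toy_validation_score` function are hypothetical placeholders for "train a model with these settings and score it on validation data".

```python
import itertools


def grid_search(objective, grid):
    """Try every combination in the grid and return the best one."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params):
    # Made-up score that peaks at learning_rate=0.1 and max_depth=5.
    return (1.0
            - abs(params["learning_rate"] - 0.1)
            - 0.01 * abs(params["max_depth"] - 5))


grid = {"learning_rate": [0.01, 0.1, 0.3], "max_depth": [3, 5, 7]}
best_params, best_score = grid_search(toy_validation_score, grid)
print(best_params, best_score)
```

A grid grows quickly as hyperparameters are added, which is one reason managed tuning services offer smarter search strategies.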

Summary

If you want to train machine learning models with managed notebooks, distributed training, automatic model tuning, and a choice of built-in or custom algorithms, Amazon SageMaker is a strong fit. It supports every phase of the ML workflow, from data preparation to deployment, which makes it well suited to building scalable ML applications on AWS.

For more detailed information, check out the AWS Documentation and the Amazon SageMaker Developer Guide.

I'm Michael Forrester. If you have any questions, please reach out on Slack. Otherwise, see you in the next article!
