Introduction to PyTorch

Artificial intelligence and machine learning are more than buzzwords: they are reshaping industries, changing everyday life, and pushing the boundaries of technology. Frameworks like PyTorch drive much of this progress by letting developers and researchers build and experiment with deep learning models efficiently.


These technologies already power virtual assistants and personalized recommendations, and they are central to autonomous vehicles and advances in medical research.

Many of these innovations are built on PyTorch, an open-source machine learning library developed by Facebook's AI Research lab (FAIR) and first released in 2016. PyTorch pairs a Python-first interface with a high-performance C++ core, and it is known for its ease of use, flexibility, and speed. Its dynamic computation graph lets developers change model architectures on the fly, which makes it especially popular for research and experimental projects.

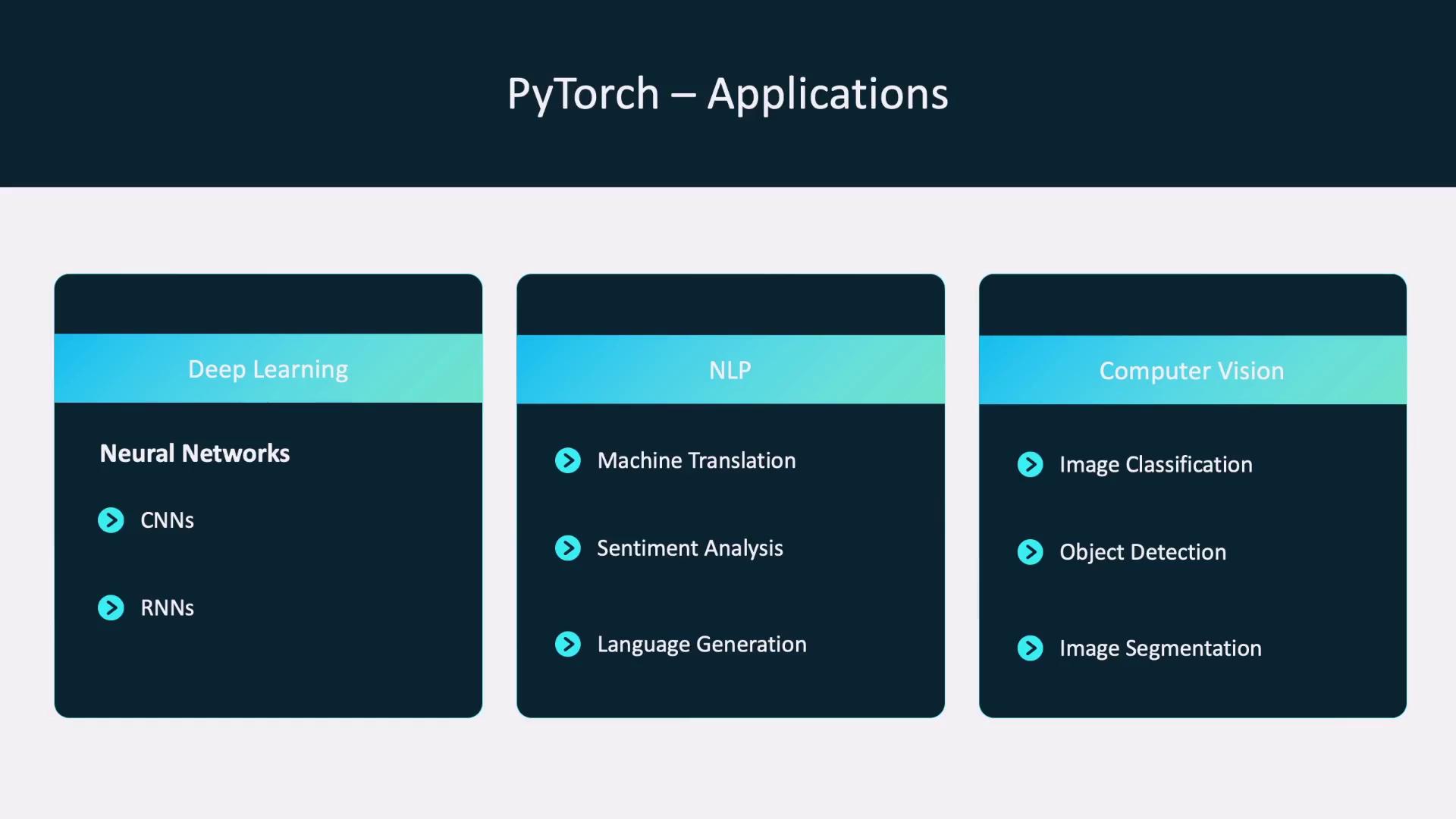

Applications of PyTorch

PyTorch is used across many machine learning domains. In deep learning, it is commonly used to build convolutional neural networks (CNNs) and recurrent neural networks (RNNs). It is also well suited to natural language processing tasks such as machine translation, sentiment analysis, and language generation. Paired with the TorchVision library, it handles computer vision tasks such as image classification, object detection, and image segmentation.

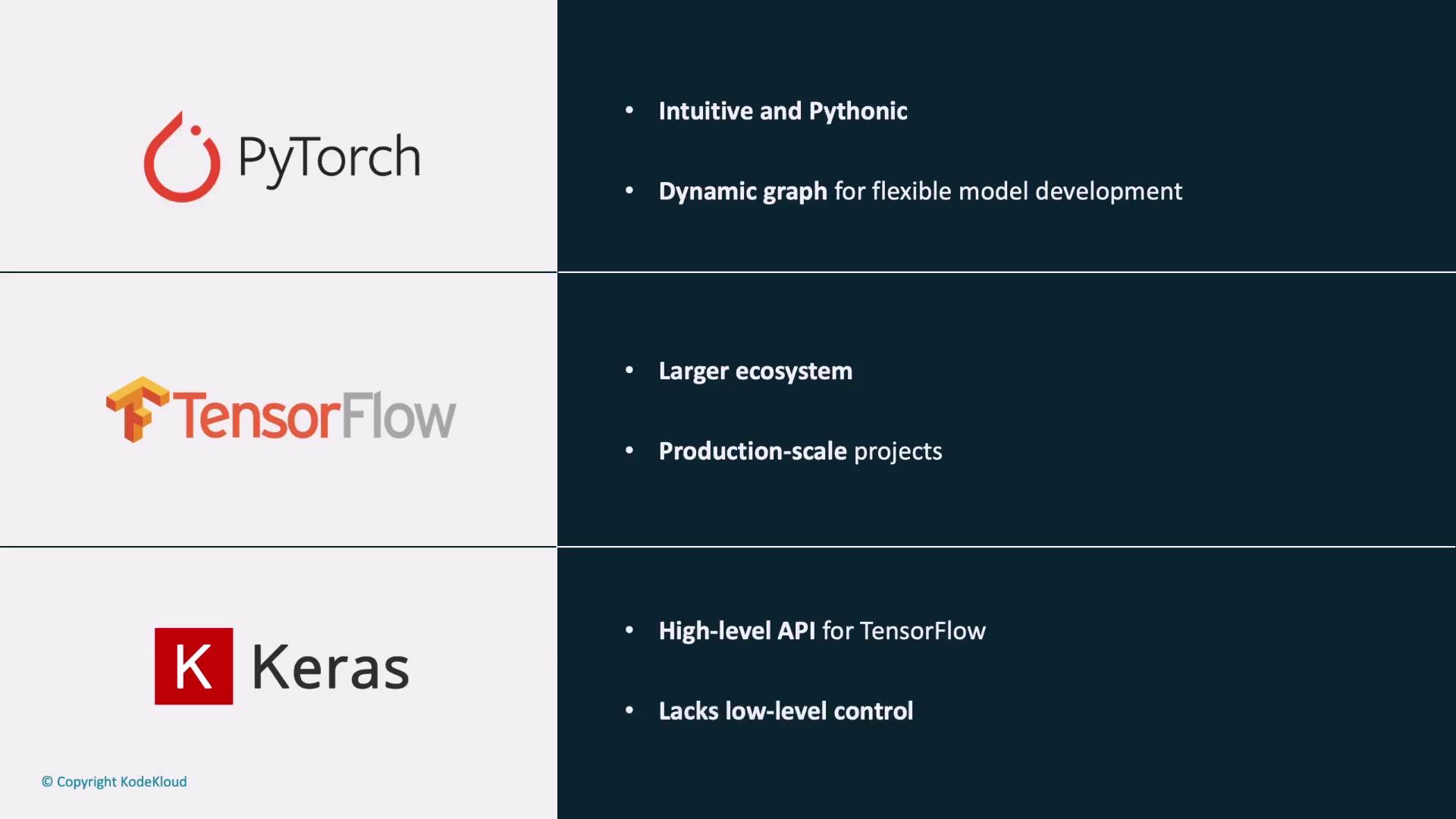

Compared with frameworks such as TensorFlow and Keras, PyTorch stands out for its intuitive, Pythonic syntax and its dynamic approach to computation. TensorFlow is known for its extensive ecosystem and its strengths in production-scale deployment. Its original static-graph design (TensorFlow 1.x) was less flexible, although TensorFlow 2 runs eagerly by default and compiles graphs on request with tf.function. Keras, a high-level API long associated with TensorFlow, deliberately hides much of the low-level control that PyTorch exposes.

PyTorch also boasts native GPU support and seamless integration with the Python ecosystem, making it a versatile option for both academic research and industrial applications.
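As a minimal sketch of what native GPU support looks like in practice, the snippet below selects a CUDA GPU when one is available and otherwise falls back to the CPU, so the same code runs unchanged on either. It only checks for CUDA; other accelerator backends (such as Apple's MPS) are not covered here.

```python
import torch

# Pick the GPU if PyTorch can see one; otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Create a tensor directly on the chosen device.
x = torch.ones(2, 3, device=device)

# Build a tensor on the CPU, then move it to the same device before combining.
y = torch.arange(6, dtype=torch.float32).reshape(2, 3).to(device)

# The addition runs on whichever device holds both tensors.
z = x + y
print(z.device, z.cpu().tolist())
```

Tensors involved in one operation must live on the same device, which is why y is moved with .to(device) before the addition.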

Key Advantages of PyTorch

One of PyTorch's standout features is its dynamic computation graph, which is built through eager execution: operations run immediately as Python executes them, and the graph is recorded on the fly. This simplifies debugging and speeds up iterative experimentation. PyTorch's Pythonic design also integrates smoothly with existing Python libraries, easing the learning curve for new users.
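The sketch below illustrates eager execution and the dynamic graph. The function scaled_sum is a hypothetical example: ordinary Python if/else decides which operations run on each call, and intermediate results can be printed immediately because nothing is deferred to a separate graph-compilation step.

```python
import torch

def scaled_sum(x: torch.Tensor) -> torch.Tensor:
    # Plain Python control flow picks the operations; the graph reflects
    # only what actually executes on this particular call.
    if x.sum() > 0:
        out = x * 2
    else:
        out = x * -1
    # The operation has already run, so its result can be inspected with
    # print() or a debugger breakpoint.
    print("intermediate:", out)
    return out.sum()

a = torch.tensor([1.0, 2.0, 3.0])   # sum is positive -> doubled
b = torch.tensor([-4.0, 1.0])       # sum is negative -> negated
print(scaled_sum(a))  # tensor(12.)
print(scaled_sum(b))  # tensor(3.)
```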

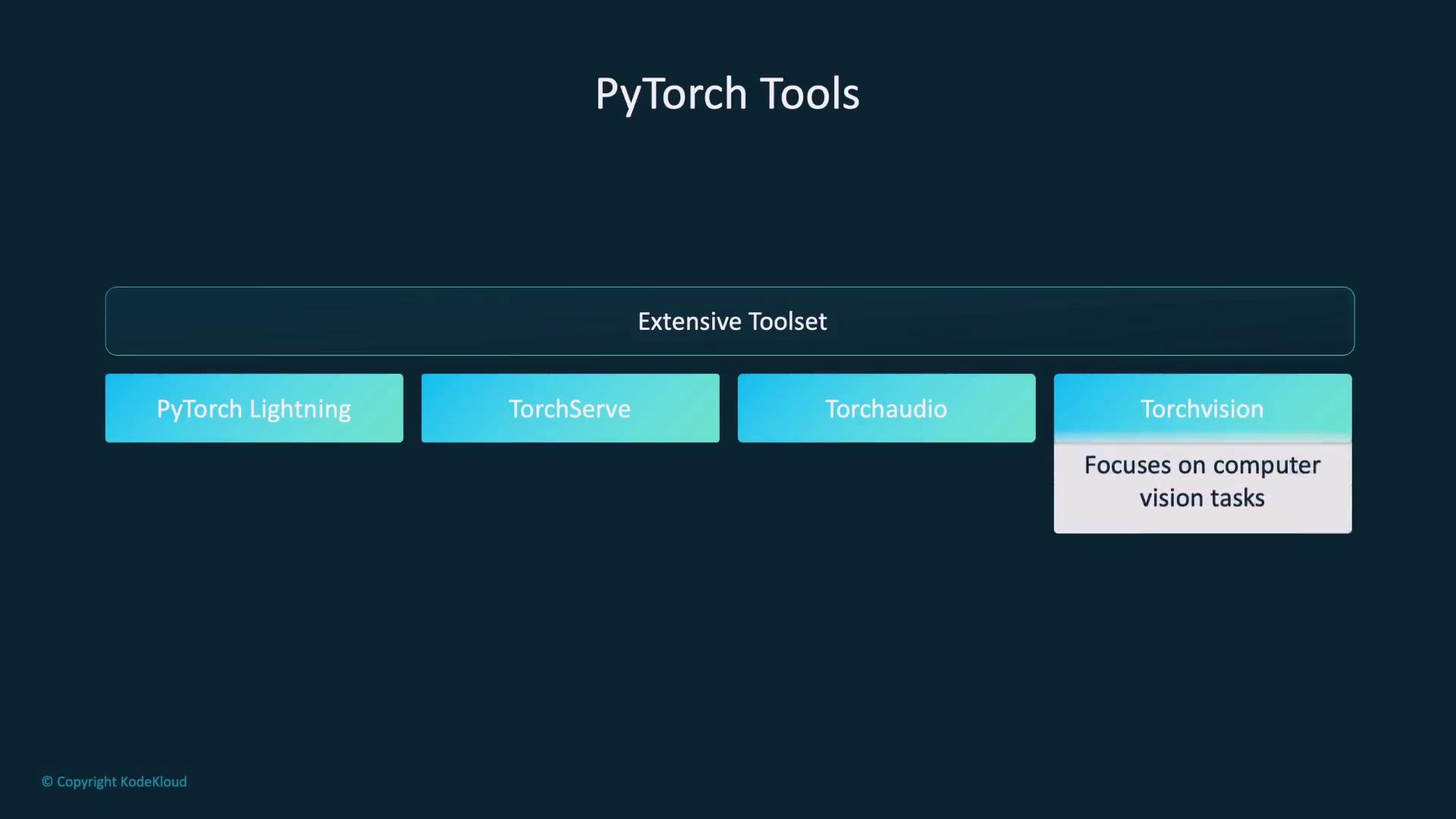

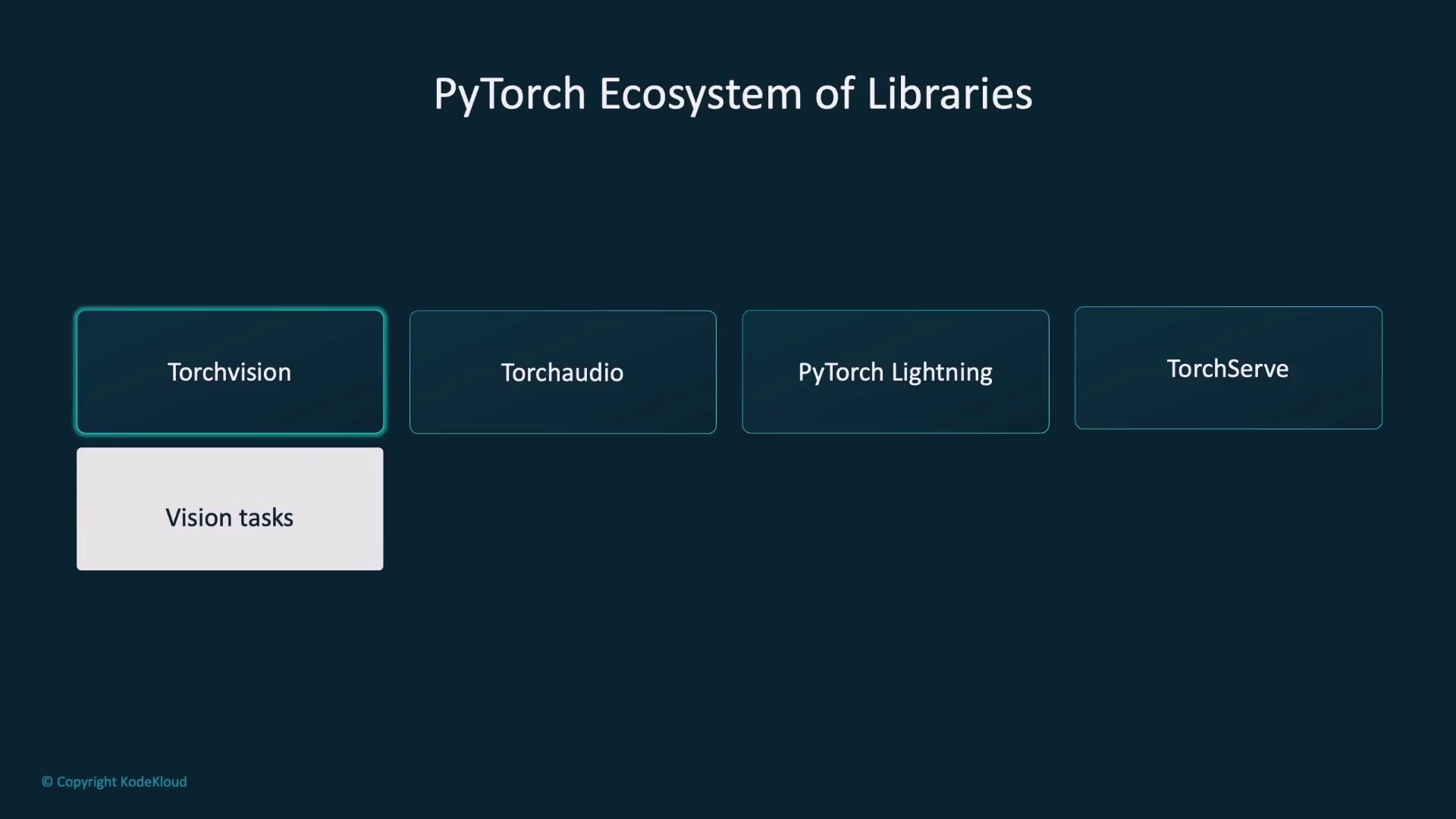

The extensive PyTorch ecosystem further enhances its functionality:

- PyTorch Lightning: Simplifies the training process and reduces boilerplate code.

- TorchServe: Eases the deployment of trained models into production environments.

- TorchAudio: Provides transforms, datasets, and pre-trained models for audio and speech processing.

- TorchVision: Provides utilities and pre-trained models for computer vision tasks.

With support for both CPU and GPU acceleration and a large, active community, PyTorch continues to evolve through regular releases and community contributions.

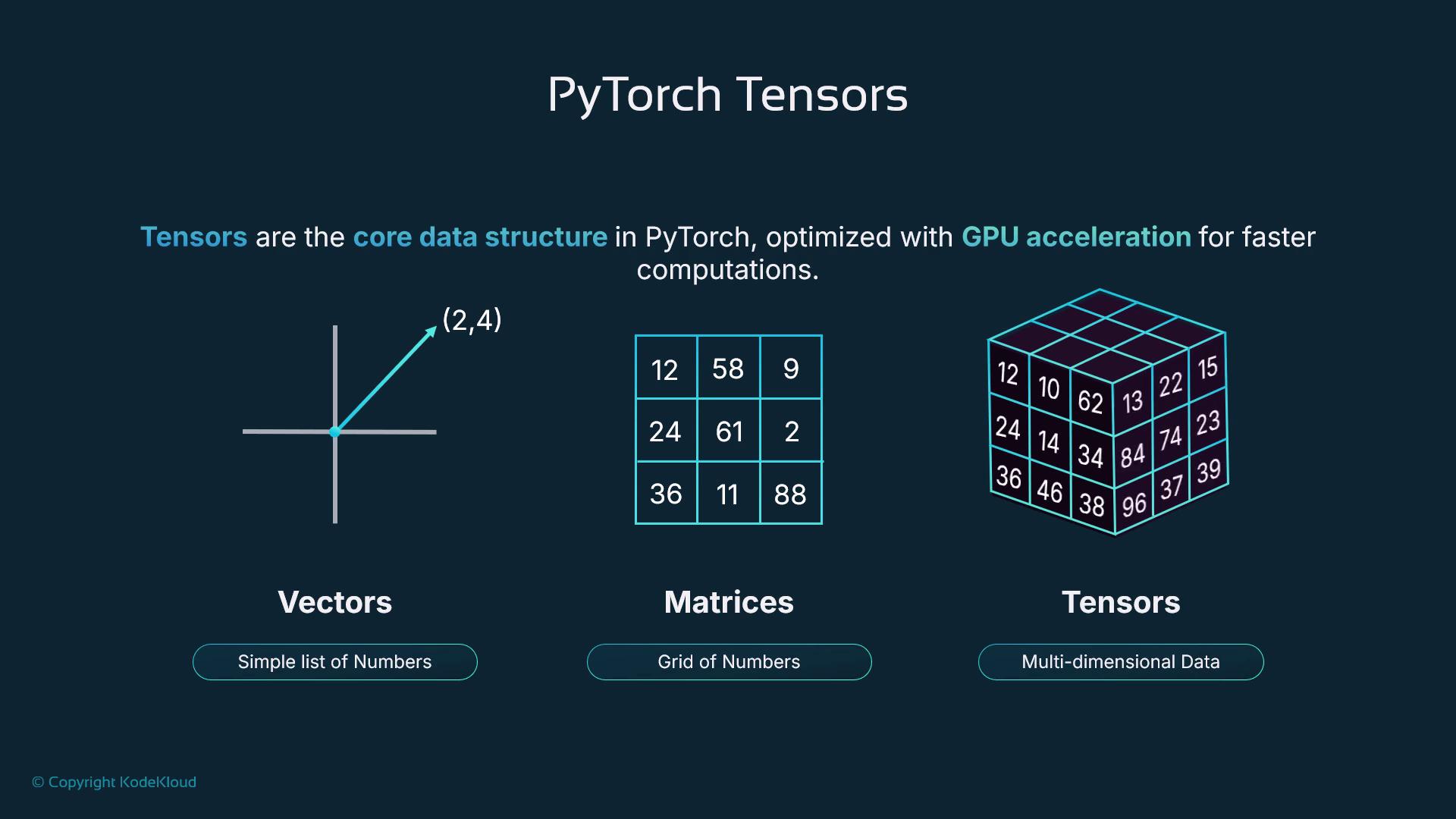

PyTorch Tensors

A fundamental component of PyTorch is the tensor. A tensor is similar to a NumPy array, but it can also run on GPUs and supports automatic differentiation. Tensors are versatile data containers that can represent anything from a single value to a multi-dimensional array, a flexibility that is essential in deep learning, where efficient data manipulation is critical.
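The short sketch below creates tensors of different dimensionality and moves data between NumPy and PyTorch. The values and shapes are arbitrary examples. Note that torch.from_numpy shares memory with the source array, so a change to one is visible in the other.

```python
import numpy as np
import torch

scalar = torch.tensor(3.14)               # 0-D tensor: a single value
vector = torch.tensor([1.0, 2.0, 3.0])    # 1-D tensor
matrix = torch.zeros(2, 3)                # 2-D tensor filled with zeros
images = torch.rand(4, 3, 32, 32)         # 4-D: batch, channels, height, width

print(scalar.ndim, vector.shape, matrix.shape, images.shape)

# From NumPy: torch.from_numpy reuses the array's memory instead of copying.
arr = np.array([[1, 2], [3, 4]], dtype=np.float32)
t = torch.from_numpy(arr)
arr[0, 0] = 100.0
print(t[0, 0])  # tensor(100.), because the edit to arr shows through t

# Back to NumPy: .numpy() works for CPU tensors that do not require grad.
back = t.numpy()
```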

PyTorch tensors support operations ranging from element-wise addition and multiplication to matrix multiplication, and they can be reshaped and sliced with little effort. For example, image data stored in a tensor often needs to be reshaped to match the input shape a neural network expects, which PyTorch handles efficiently.
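The operations described above might look like this in code. The batch of eight 28x28 grayscale images is a made-up, MNIST-sized example chosen only to show how an image tensor is flattened before it is fed to a fully connected layer.

```python
import torch

a = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
b = torch.tensor([[5.0, 6.0], [7.0, 8.0]])

elementwise_sum = a + b    # [[6, 8], [10, 12]]
elementwise_prod = a * b   # [[5, 12], [21, 32]]
matrix_prod = a @ b        # [[19, 22], [43, 50]]

# Slicing: every row, column 0.
first_column = a[:, 0]     # [1, 3]

# Hypothetical batch of 8 grayscale images: (batch, channels, height, width).
images = torch.rand(8, 1, 28, 28)

# Keep the batch dimension and flatten the rest into 1 * 28 * 28 = 784 features.
flat = images.reshape(images.shape[0], -1)
print(flat.shape)          # torch.Size([8, 784])
```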

Further Learning

For an in-depth exploration of tensors and their operations, look out for our detailed guide on PyTorch Tensors in the upcoming sections.

Autograd: Automatic Differentiation

A pivotal feature of PyTorch is Autograd, its automatic differentiation engine. Autograd records the operations performed on tensors that have requires_grad=True and, when backward() is called, computes the gradients needed to optimize a model. This automates backpropagation, streamlining training and avoiding the errors that often creep into manual gradient calculations.
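Here is a minimal Autograd sketch. It computes the gradient of y = x^2 + 3x, whose derivative 2x + 3 equals 7 at x = 2, and the gradient of a small squared-sum loss with respect to a weight vector. The torch.no_grad() block shows that operations run inside it are not recorded.

```python
import torch

# Scalar example: y = x^2 + 3x, so dy/dx = 2x + 3.
x = torch.tensor(2.0, requires_grad=True)
y = x ** 2 + 3 * x
y.backward()              # fills x.grad with dy/dx evaluated at x = 2
print(x.grad)             # tensor(7.)

# Vector example: loss = (w . inputs)^2, so dloss/dw = 2 * (w . inputs) * inputs.
w = torch.tensor([1.0, -1.0], requires_grad=True)
inputs = torch.tensor([3.0, 4.0])     # plain data; gradients are not needed
loss = (w * inputs).sum() ** 2        # (3 - 4)^2 = 1
loss.backward()
print(w.grad)             # tensor([-6., -8.])

# Inside no_grad, operations are not tracked, so no graph is built.
with torch.no_grad():
    z = x * 2
print(z.requires_grad)    # False
```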

Tip

Keep an eye on upcoming sections where we delve deeper into Autograd and its critical role in model training and optimization.

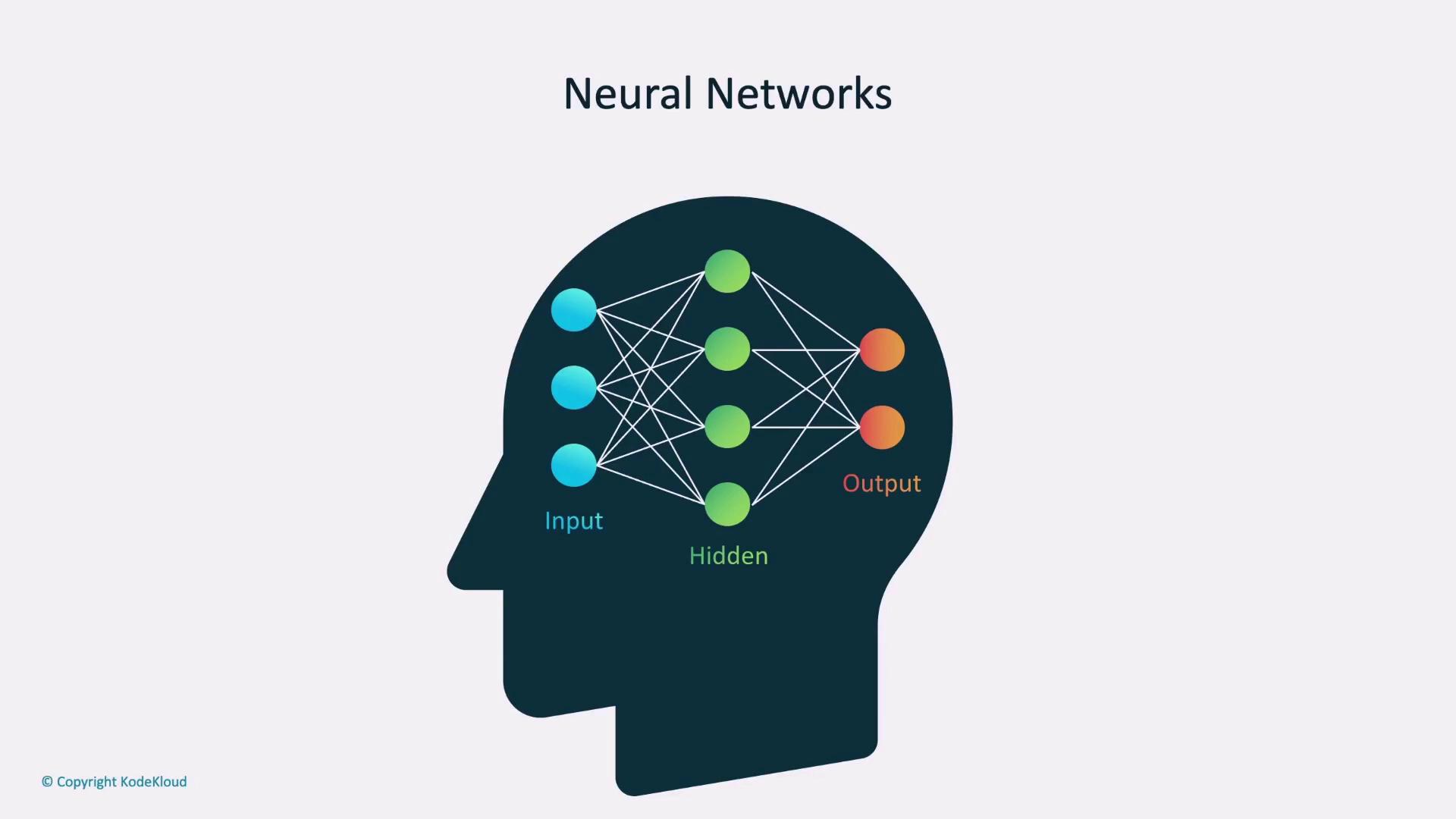

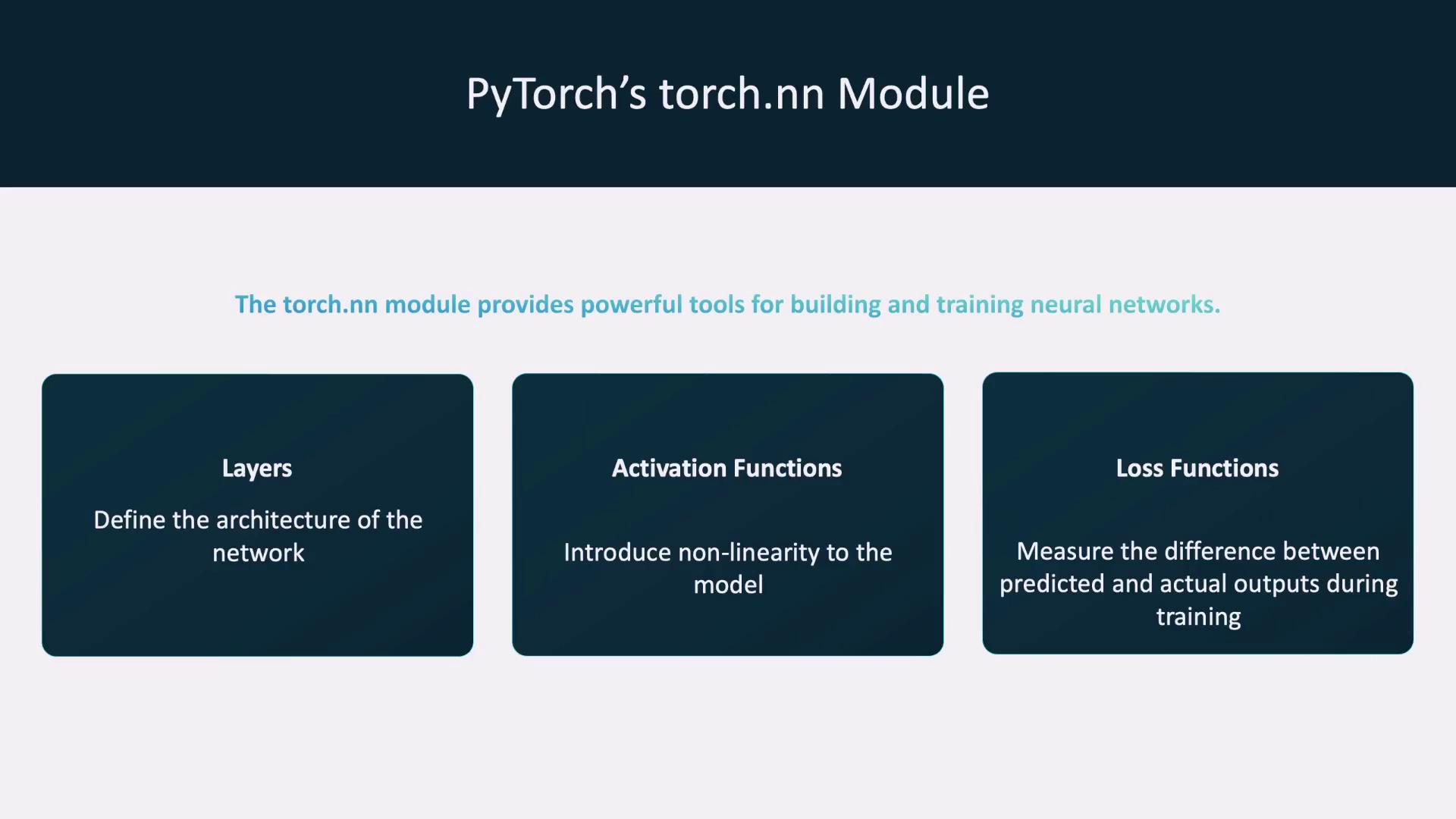

Building Neural Networks with PyTorch

PyTorch simplifies the creation of neural networks, computational models loosely inspired by the human brain that process data through interconnected layers. Its torch.nn module provides pre-built components, including layers, activation functions, and loss functions, that make building and training networks straightforward.

Using torch.nn, developers can build a simple feedforward network by stacking layers and choosing appropriate activation and loss functions. The same modular approach scales from simple models to sophisticated architectures.
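The sketch below builds a small feedforward classifier from torch.nn components and trains it for a few steps on random toy data. The class name FeedForward, the layer sizes, the learning rate, and the data are all illustrative assumptions rather than recommended settings.

```python
import torch
from torch import nn

class FeedForward(nn.Module):
    """Hypothetical two-layer network: Linear -> ReLU -> Linear."""

    def __init__(self, in_features: int, hidden: int, num_classes: int):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(in_features, hidden),
            nn.ReLU(),
            nn.Linear(hidden, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Return raw logits; CrossEntropyLoss applies log-softmax internally.
        return self.layers(x)

torch.manual_seed(0)
model = FeedForward(in_features=4, hidden=16, num_classes=3)
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# Toy data: 32 random samples with 4 features and class labels in {0, 1, 2}.
inputs = torch.randn(32, 4)
targets = torch.randint(0, 3, (32,))

losses = []
for step in range(100):
    optimizer.zero_grad()              # clear gradients from the previous step
    logits = model(inputs)             # forward pass, shape (32, 3)
    loss = loss_fn(logits, targets)
    loss.backward()                    # Autograd computes parameter gradients
    optimizer.step()                   # update weights using those gradients
    losses.append(loss.item())

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

Returning raw logits from forward and letting nn.CrossEntropyLoss apply log-softmax is the usual pattern, since it is more numerically stable than applying softmax inside the model and taking the log afterward.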

The PyTorch Ecosystem

Beyond the core framework, PyTorch features a vibrant ecosystem of libraries and tools that address specialized tasks:

| Library | Purpose | Example Use |
|---|---|---|
| TorchVision | Pre-trained models and utilities for computer vision tasks | Image classification and object detection |
| TorchAudio | Tools for efficient audio processing | Speech recognition and sound classification |
| PyTorch Lightning | High-level interface to streamline model training | Simplifying complex training procedures |
| TorchServe | Deploying trained models into production | Serving models in production environments |

These libraries, among others, extend PyTorch’s functionality and make it a comprehensive solution for tasks ranging from research to deployment.

Adoption and Community Support

PyTorch is trusted by major companies and academic institutions alike. Some notable adopters include:

- Meta (Facebook): Utilizing PyTorch for advanced AI research and development.

- Tesla: Leveraging the framework for designing deep learning models for autopilot systems and self-driving technology.

- Stanford University: Employing PyTorch in cutting-edge research projects.

- Uber: Applying PyTorch in predictive modeling and autonomous driving research.

The PyTorch Foundation, established in 2022 under the guidance of the Linux Foundation, plays a crucial role in fostering community development and supporting the ecosystem’s growth. A robust open-source community, active forums, and continuous contributions further ensure that PyTorch remains on the cutting edge of technological innovation.

Summary

In this article, we introduced the core components and advantages of PyTorch, from tensors and automatic differentiation with Autograd to the torch.nn neural network modules and the wider ecosystem. Whether you are a researcher or an industry practitioner, these fundamentals provide a solid foundation as you dive deeper into machine learning with PyTorch.

Next Steps

Explore our upcoming tutorials for detailed insights into PyTorch’s tensor operations, model building, and optimization techniques.
