PyTorch Ecosystem

This lesson provides an overview of the PyTorch ecosystem: its essential tools, opportunities for community engagement, and resources that can extend your learning and streamline your development process.

The lesson is organized around three topics: the main ecosystem tools, the community channels where you can get help, and additional developer resources.

The PyTorch ecosystem integrates a diverse array of tools, libraries, and projects contributed by researchers, developers, and machine learning practitioners worldwide. Its mission is to empower you with innovative resources to simplify experimentation, boost performance, and accelerate development with PyTorch.

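The ecosystem benefits both the people who use it and the people who build for it: users gain complementary tools, and tool authors gain visibility for their projects.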

Since PyTorch's inception, numerous complementary tools have emerged. If you develop a tool that enhances the PyTorch experience, you can apply to have your project featured among the ecosystem tools. This inclusion significantly increases its visibility within the community.

Among the standout ecosystem tools are PyTorch Lightning, Ray, Accelerate, DeepSpeed, Stable Baselines 3, and Transformers, all widely used in modern machine learning and AI workflows. Each is summarized below.

PyTorch Lightning

PyTorch Lightning is a high-level framework built on top of PyTorch that abstracts much of the boilerplate code involved in training loops, optimization steps, and device management. By simplifying these repetitive tasks, Lightning empowers developers to focus on refining model logic and experimentation. It is especially useful for managing complex codebases and scaling models seamlessly across multiple GPUs or nodes.

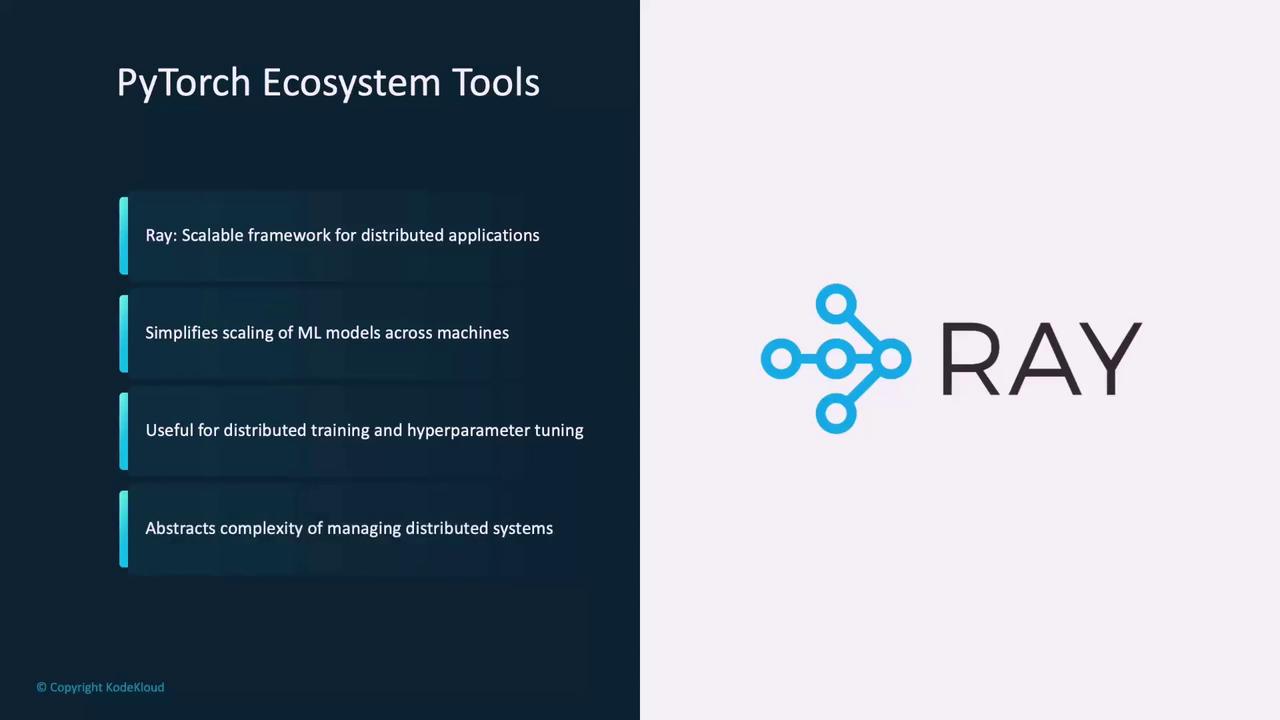

Ray

Ray is a versatile, scalable framework that simplifies distributed application development. Within the context of PyTorch, Ray excels at distributed training and hyperparameter tuning. By leveraging parallelism, Ray accelerates model optimization while abstracting the complexity of managing multi-node environments.

Accelerate

Accelerate is a Python library that lets the same PyTorch training code run on different hardware configurations, including multiple GPUs, TPUs, or several machines. It manages device placement, parallelism, and distributed training behind a small interface, so you can concentrate on model development instead of hardware details.

DeepSpeed

DeepSpeed is designed to accelerate the training of large-scale machine learning models. It is particularly valuable for models that exceed the memory of a single GPU or require lengthy training times. DeepSpeed reduces per-GPU memory consumption, for example through its ZeRO optimizer, which partitions optimizer states, gradients, and parameters across devices, and it distributes the workload across multiple GPUs or machines. Like Ray, it supports distributed training, but its focus is on memory and throughput optimization for large models.
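DeepSpeed is usually driven by a JSON configuration file. The sketch below builds one using only the standard library; the batch size, learning rate, and other values are hypothetical placeholders. Setting `zero_optimization.stage` to 2 asks ZeRO to partition optimizer states and gradients across data-parallel workers, and `fp16.enabled` turns on mixed-precision training.

```python
# Sketch of a DeepSpeed configuration file, written with the standard library.
import json

# Hypothetical values for illustration only.
ds_config = {
    "train_batch_size": 32,
    "gradient_accumulation_steps": 1,
    "fp16": {"enabled": True},           # mixed-precision training
    "zero_optimization": {"stage": 2},   # partition optimizer states + gradients
    "optimizer": {"type": "AdamW", "params": {"lr": 3e-5}},
}


def write_config(path: str = "ds_config.json") -> str:
    """Write the configuration to `path` and return the path."""
    with open(path, "w") as f:
        json.dump(ds_config, f, indent=2)
    return path
```

In a training script you would then pass the file to `deepspeed.initialize(model=model, model_parameters=model.parameters(), config="ds_config.json")`, which returns the engine, optimizer, data loader, and learning-rate scheduler, and start the script with the `deepspeed` launcher (for example, `deepspeed train.py`).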

Stable Baselines 3

Stable Baselines 3 streamlines the implementation of reinforcement learning algorithms using PyTorch. It provides easy-to-use implementations of popular reinforcement learning techniques, making it particularly beneficial for beginners. With Stable Baselines 3, you can implement and experiment with robust, well-tested reinforcement learning methods without the need to build algorithms from scratch.

Transformers

Developed by Hugging Face, Transformers is a widely adopted library that offers ready access to state-of-the-art machine learning models, particularly optimized for natural language processing tasks such as text classification, translation, and question answering. In addition to its comprehensive support for PyTorch, Transformers includes numerous pre-trained models that allow you to rapidly deploy powerful NLP solutions without extensive training.
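A minimal sketch, assuming `transformers` and PyTorch are installed and the machine can download weights from the Hugging Face Hub. The `pipeline` helper loads a pre-trained checkpoint together with its tokenizer in one call. The model ID below is one public sentiment-analysis checkpoint chosen for illustration; any compatible text-classification model would work.

```python
# Minimal sketch, assuming `transformers` and PyTorch are installed
# and network access to the Hugging Face Hub is available.
from transformers import pipeline


def build_classifier():
    # Downloads (and caches) the pre-trained checkpoint on first use.
    return pipeline(
        "sentiment-analysis",
        model="distilbert-base-uncased-finetuned-sst-2-english",
    )


def classify(texts):
    classifier = build_classifier()
    # Returns one {"label": ..., "score": ...} dict per input string.
    return classifier(texts)
```

No training is needed here: the checkpoint was already fine-tuned for sentiment classification, which is the "rapid deployment" benefit described above.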

PyTorch Community Resources

The vibrant PyTorch community offers extensive resources to help you overcome challenges and deepen your understanding. Engage with peers on the official discussion forum at discuss.pytorch.org, where you can ask questions, share insights, and contribute to the collective knowledge base.

Community Discussion

Consider signing up for the PyTorch forum and Slack channel to stay updated on advanced topics, network with industry experts, and discover emerging projects.

Additionally, a dedicated Slack channel provides a platform for discussing advanced topics and fostering collaboration with peers. Request access through the available community link to join the conversation.

Additional Developer Resources

Beyond community support, PyTorch offers an array of resources to empower both beginners and seasoned developers:

- The PyTorch Examples GitHub repository features a variety of examples that highlight different use cases, making it an excellent starting point for learning or exploring advanced functionalities.

- PyTorch is now governed by the PyTorch Foundation, part of the Linux Foundation, which gives you access to both free and paid training courses designed to further your expertise.

- The PyTorch website is a rich resource for discovering how to contribute to the project, understanding its governance structure, and learning more about its design philosophy and maintainers.

For more detailed information and updates, visit PyTorch.org. The ecosystem is continuously evolving, so we encourage you to explore and experiment with these tools to harness the full potential of PyTorch.

Keep Exploring

This lesson only scratches the surface of the PyTorch ecosystem. Conduct further research and explore additional resources to gain a deeper understanding of the powerful tools and vibrant community that PyTorch offers.
