- Architecture Selection

- Compute and Hardware Performance

- Data Management Performance

- Networking and Content Delivery Performance

- Process and Culture

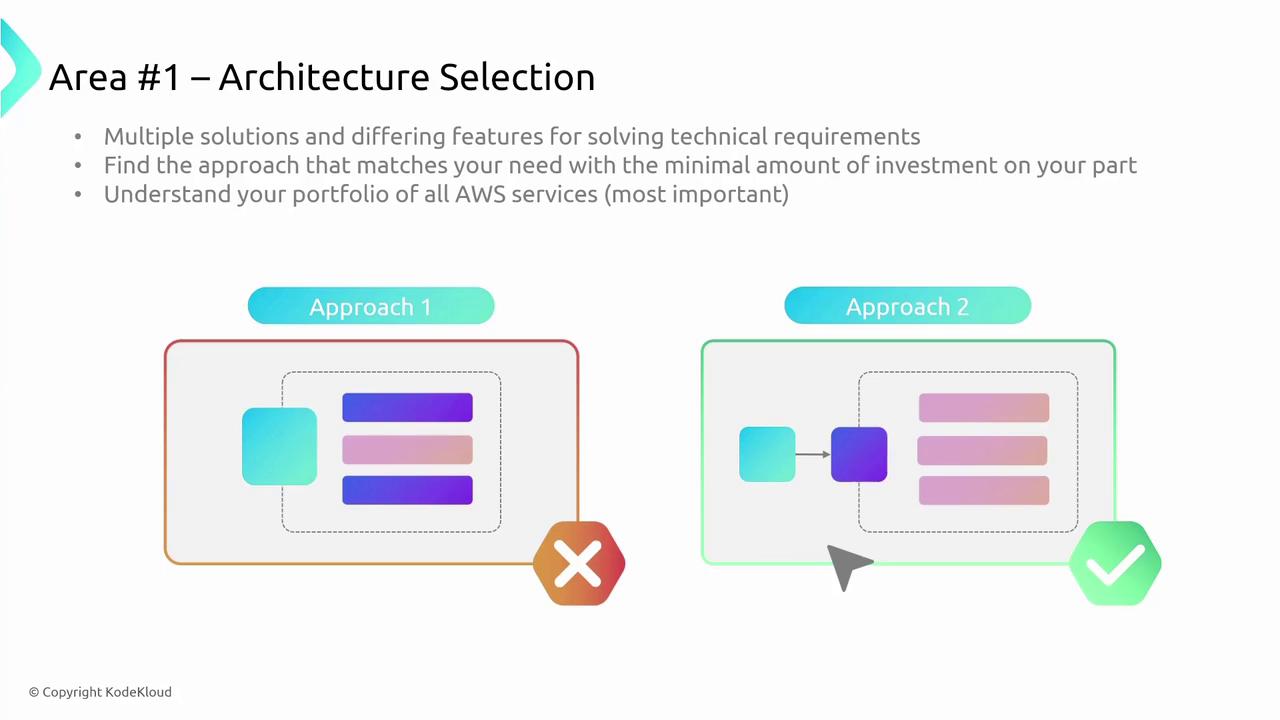

1. Architecture Selection

The optimal solution for a workload depends on its technical requirements. A well-architected workload often combines multiple solutions and AWS resource configurations to boost performance while minimizing investment. Architectural decisions determine your compute, storage, and networking environments. For instance, where one approach falls short on latency, another built on a service such as DynamoDB, a fully managed NoSQL database, can deliver single-digit millisecond latency with no servers to manage. Knowing AWS’s extensive service portfolio is crucial for matching your workload requirements to the best solution.

Always consider cost, trade-offs, and reference architectures. Use data-driven benchmarking and proofs of concept (POCs) to confirm that your chosen approach meets your performance needs.
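As a rough illustration of data-driven benchmarking, the sketch below times a candidate operation repeatedly and checks its 99th-percentile latency against a target. The function names (`percentile`, `benchmark`, `meets_target`) and the target value are assumptions made for this example; in a real POC the callable would wrap a request to the service under evaluation.

```python
import math
import time
from typing import Callable, List


def percentile(samples: List[float], q: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least q% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def benchmark(candidate: Callable[[], object], runs: int = 100) -> List[float]:
    """Call `candidate` `runs` times and return per-call latencies in milliseconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        candidate()
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def meets_target(latencies: List[float], p99_target_ms: float) -> bool:
    """True when the 99th-percentile latency is within the target."""
    return percentile(latencies, 99) <= p99_target_ms


# Stand-in workload (an assumption): replace with a call to the candidate service.
samples = benchmark(lambda: sum(range(1000)), runs=50)
print(f"p50={percentile(samples, 50):.3f} ms, p99={percentile(samples, 99):.3f} ms")
```

Comparing tail percentiles rather than averages is a deliberate choice here, since a small number of slow requests can hide behind a healthy mean.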

2. Compute and Hardware Performance

Choosing the right compute resources is vital and depends on your application’s design, usage patterns, and configuration settings. Different components of your workload might require distinct compute options; for example, a dynamic website needs a different host configuration than a static one. Reassess your compute choices regularly to keep pace with evolving performance needs. Monitoring metrics and using auto-scaling lets your AWS architecture adapt to workload fluctuations. Key practices include:

- Periodically reassessing and selecting the optimal compute instance.

- Utilizing monitoring and metrics for data-driven adjustments.

- Implementing auto-scaling to respond dynamically to varying demand.
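The scaling practice above can be sketched as a simplified proportional model: capacity is adjusted so that a per-instance metric moves toward a target value. This is an illustrative approximation, not the exact algorithm any AWS service uses, and the function name and parameters are assumptions for this example.

```python
import math


def desired_capacity(
    current_capacity: int,
    current_metric: float,
    target_metric: float,
    min_capacity: int,
    max_capacity: int,
) -> int:
    """Scale capacity in proportion to how far the metric is from its target.

    Example: 4 instances at 75% average CPU with a 50% target suggests
    ceil(4 * 75 / 50) = 6 instances, clamped to the allowed range.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_capacity * current_metric / target_metric)
    return max(min_capacity, min(max_capacity, raw))


print(desired_capacity(4, 75.0, 50.0, min_capacity=1, max_capacity=10))
```

The minimum and maximum bounds matter as much as the formula: they cap cost during a spike and keep a baseline of capacity when demand drops.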

3. Data Management Performance

Data management performance is influenced by the storage type (block, file, or object) and by specific access patterns such as random versus sequential access, throughput requirements, and data update frequency. AWS advises using purpose-built data stores to match your durability, availability, and performance needs. Effective data management strategies involve:

- Selecting storage solutions that align with your workflow.

- Optimizing for distinct data access patterns and frequency.

- Leveraging metadata to monitor and fine-tune performance.

Remember, there is no one-size-fits-all storage solution. Customize your data management approach using purpose-built tools that best suit your application’s needs.
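One way to ground a storage decision in data is to measure the access pattern first. The sketch below estimates how sequential a trace of reads is, assuming you can capture each access as an `(offset, length)` pair; the trace format and the function name are assumptions for illustration.

```python
from typing import List, Tuple


def sequential_fraction(accesses: List[Tuple[int, int]]) -> float:
    """Fraction of accesses that start exactly where the previous one ended.

    `accesses` holds (offset, length) pairs in the order they were issued.
    Values near 1.0 suggest a sequential pattern; values near 0.0 suggest random access.
    """
    if len(accesses) < 2:
        return 0.0
    sequential = sum(
        1
        for (prev_offset, prev_length), (offset, _) in zip(accesses, accesses[1:])
        if offset == prev_offset + prev_length
    )
    return sequential / (len(accesses) - 1)


# Hypothetical trace: two contiguous reads, then a jump to a distant offset.
trace = [(0, 4096), (4096, 4096), (1_000_000, 4096)]
print(sequential_fraction(trace))
```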

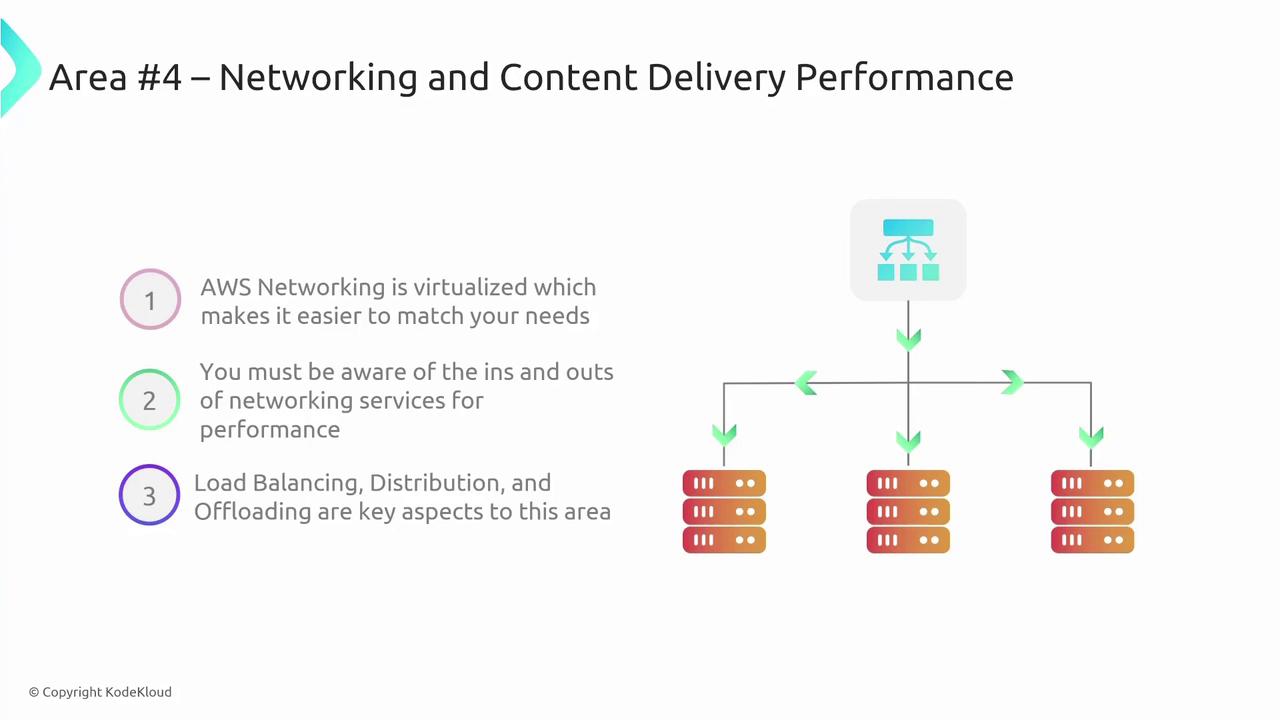

4. Networking and Content Delivery Performance

Effective networking and content delivery are crucial for maintaining optimal performance on AWS. Factors like latency, throughput, jitter, and bandwidth must be carefully considered. AWS’s virtualized networking environment provides the flexibility to scale and adjust your network infrastructure without the complexities of physical hardware. When scaling your AWS infrastructure, consider that instance sizing often influences networking performance. For example, upgrading from a small to a larger EC2 instance can enhance networking capabilities, even if CPU or memory resources are not the primary constraints. An additional advantage is AWS’s integrated load balancing, which distributes traffic across multiple compute resources to maintain efficient performance.
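To make the idea of distributing traffic concrete, here is a minimal round-robin sketch. Round robin is only one common routing strategy, and this code does not represent how any particular AWS load balancer is implemented; the instance names and request IDs are hypothetical.

```python
from collections import Counter
from itertools import cycle
from typing import Dict, Iterable, List


def round_robin(requests: Iterable[str], targets: List[str]) -> Dict[str, str]:
    """Assign each request to the next target in a fixed rotation."""
    if not targets:
        raise ValueError("at least one target is required")
    rotation = cycle(targets)
    return {request: next(rotation) for request in requests}


# Hypothetical instance names and request IDs, for illustration only.
targets = ["instance-a", "instance-b", "instance-c"]
assignments = round_robin([f"req-{i}" for i in range(6)], targets)
per_target = Counter(assignments.values())
print(per_target)
```

With six requests and three targets, each target receives two requests. A production load balancer would also account for target health and in-flight request counts.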

5. Process and Culture

The final performance area focuses on the processes and cultural practices that drive continuous improvement within an organization. Although this aspect may not be directly tested in certification exams, it is crucial for sustaining high-performing cloud workloads. Key practices include:

- Implementing Infrastructure as Code (IaC) to create reusable deployment templates.

- Establishing secure deployment pipelines (“paved roads”) for efficient application releases.

- Defining and tracking metrics tied to both technical and business key performance indicators (KPIs).

- Conducting rigorous performance testing and consistently reviewing operational metrics.

- Adopting a culture of continuous improvement at both the organizational and individual levels.

Embracing a culture of continuous improvement not only drives performance but also fosters innovation and efficiency across your organization.

Conclusion

In summary, achieving exceptional performance on AWS involves a deep understanding and careful optimization of the five key areas: architecture selection, compute and hardware performance, data management performance, networking and content delivery performance, and process and culture. These categories integrate seamlessly with AWS design principles, ensuring that you choose the right services and configurations to meet your workload demands.