AWS Solutions Architect Associate Certification

Designing for Performance

Performance Optimization Concepts with Various AWS Services

Welcome, Solutions Architects. This final installment of the "Designing for Performance" series delves into tuning performance across AWS services. In this lesson, we explore autoscaling, offloading, and serverless architectures to enhance performance as defined by the AWS Well-Architected Framework.

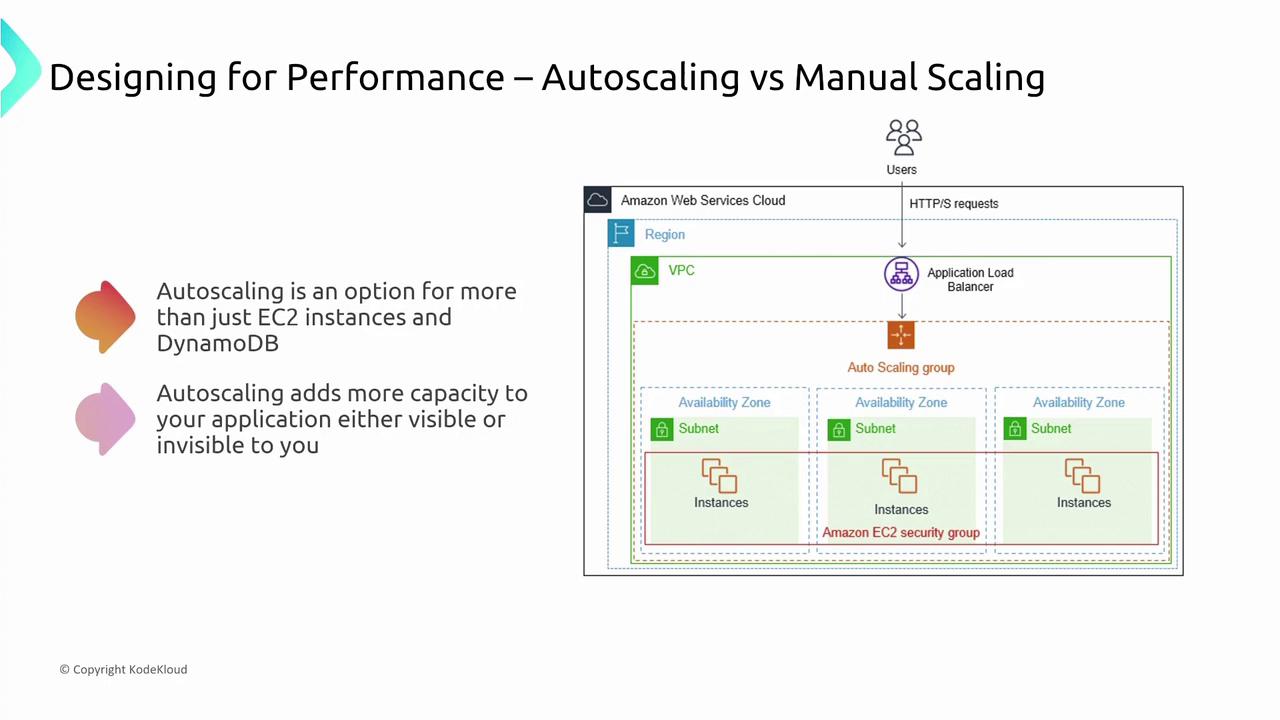

Autoscaling in AWS

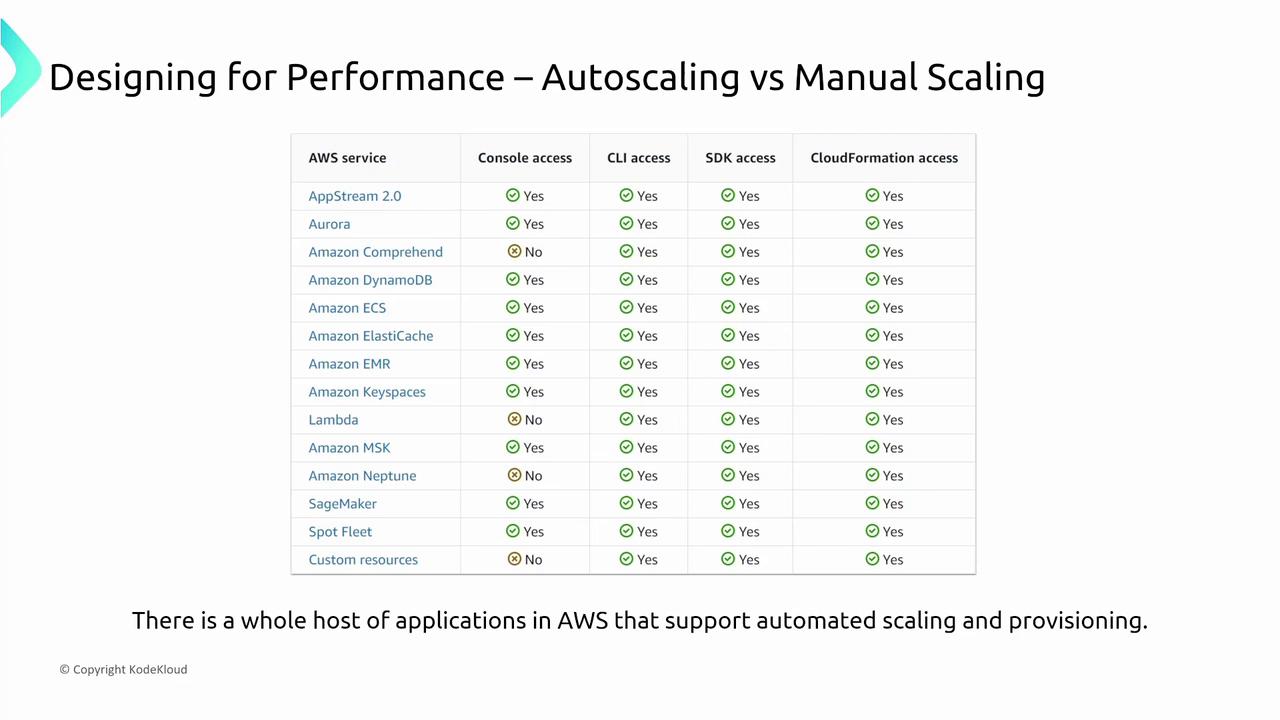

Autoscaling automatically adjusts resource capacity based on demand. It is most commonly associated with EC2, where Auto Scaling groups add or remove instances based on load, but AWS extends this capability to many other services. For example, DynamoDB adjusts capacity behind the scenes, with changes reflected in performance metrics rather than visible infrastructure modifications.
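To make the idea concrete, here is a minimal sketch of a proportional scaling rule of the kind a target-tracking policy is built around. It is an illustration only, not AWS's actual algorithm: the function name, parameters, and numbers are all assumptions chosen for the example.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Hypothetical helper: size the fleet so the average metric
    (for example, CPU percent) lands near the target value."""
    if current <= 0 or target <= 0:
        raise ValueError("current and target must be positive")
    # Scale the fleet in proportion to how far the metric is from target.
    proposed = math.ceil(current * metric / target)
    # Never go below the group's minimum or above its maximum.
    return max(min_size, min(max_size, proposed))


# 4 instances at 80% CPU with a 50% target: 4 * 80 / 50 = 6.4, rounded up.
print(desired_capacity(4, 80.0, 50.0, min_size=2, max_size=10))  # 7
# Load drops to 20% CPU: the rule proposes 2, which is also the minimum.
print(desired_capacity(4, 20.0, 50.0, min_size=2, max_size=10))  # 2
```

The minimum and maximum bounds matter as much as the rule itself: they cap cost during a spike and keep a baseline of capacity when load is low.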

Keep in mind that numerous AWS hosting services, including EC2, ECS, Lambda, SageMaker, and Spot Fleet, support autoscaling. Additionally, managed data services like Aurora, DynamoDB, ElastiCache, Keyspaces, Neptune, and Amazon MSK (managed Kafka) can scale automatically. When an exam question compares manual scaling with autoscaling, favor the option that uses the automated scaling AWS supports for that service.

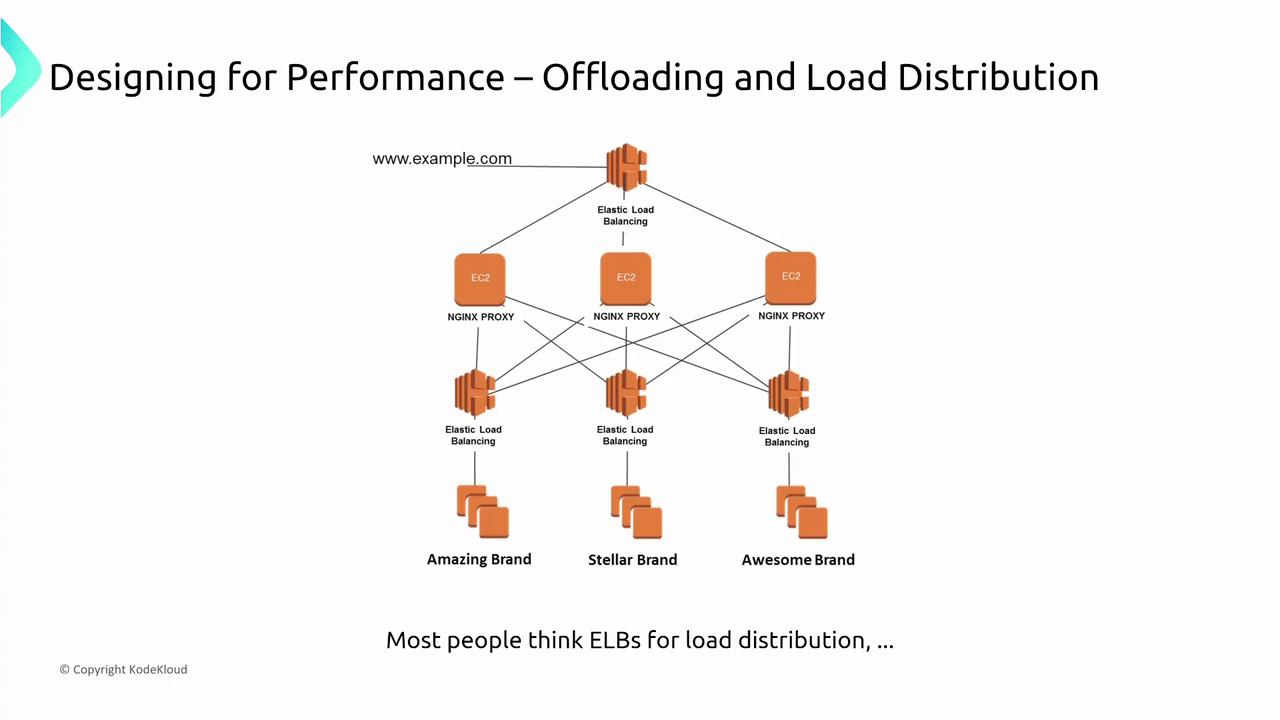

Offloading and Load Distribution

Offloading shifts tasks to auxiliary systems, reducing the processing load on a primary server. A common approach pairs offloading with load balancers. Instead of routing all traffic to a single web server, Elastic Load Balancing (ELB) distributes incoming requests. In some setups, an NGINX proxy layer routes traffic before it reaches the servers, and services like S3 handle static content so that web servers can focus on dynamic content.
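The routing decision described above can be sketched in a few lines. This is a toy model, not how ELB or NGINX is implemented: the `Router` class, the server names, and the `static-bucket` label are hypothetical, and round-robin is just one simple distribution strategy.

```python
from itertools import cycle

# File types treated as static content in this sketch (an assumption).
STATIC_SUFFIXES = (".css", ".js", ".png", ".jpg")


class Router:
    """Hypothetical router: static paths are offloaded to object storage,
    dynamic paths are spread across web servers in round-robin order."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = cycle(servers)

    def route(self, path: str) -> str:
        if path.lower().endswith(STATIC_SUFFIXES):
            # Offload: the web servers never see this request.
            return "static-bucket"
        # Distribute: each dynamic request goes to the next server in turn.
        return next(self._servers)


router = Router(["web-1", "web-2"])
for path in ["/logo.png", "/cart", "/checkout", "/app.js", "/orders"]:
    print(path, "->", router.route(path))
```

In this run, only three of the five requests reach a web server, which is the point of combining offloading with load distribution.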

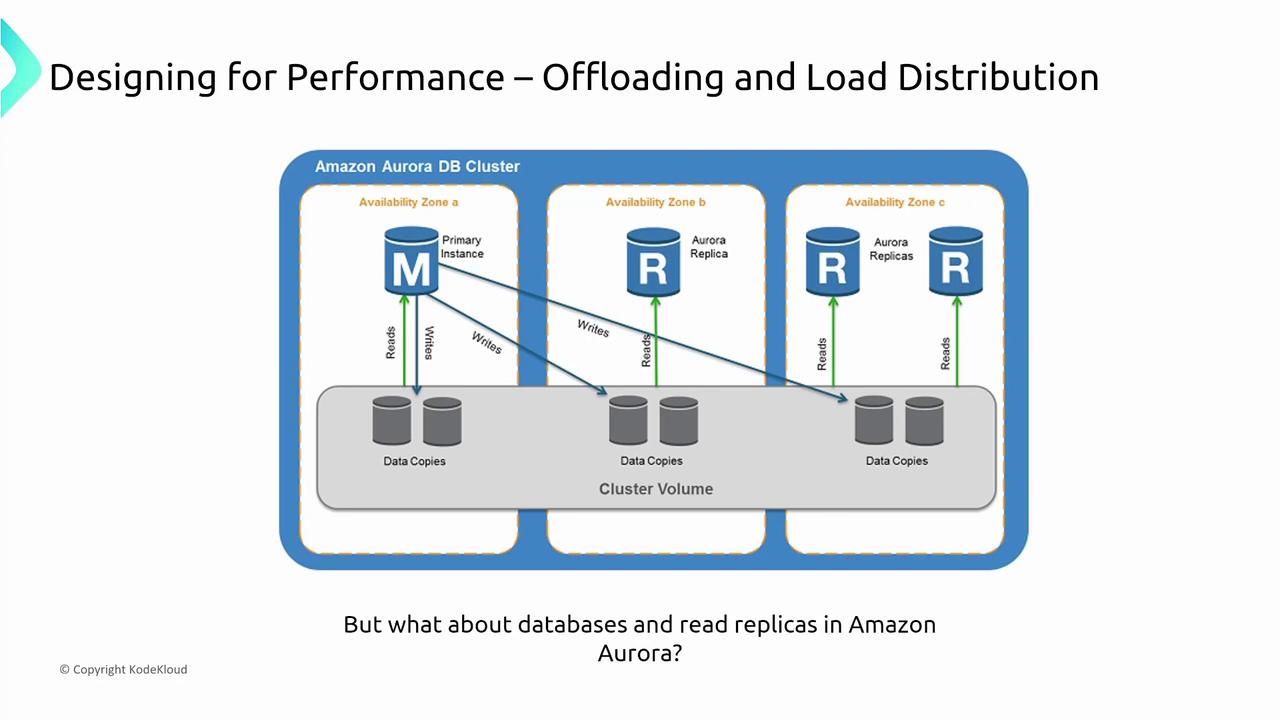

Offloading is equally critical in database architectures. Traditional designs often rely on a primary instance for both reads and writes. Modern architectures frequently use read replicas to absorb the bulk of read traffic, since many workloads are read-heavy (a read-to-write ratio of roughly 80/20 is commonly cited). For instance, in an Aurora setup, the primary instance handles writes while one or more replicas serve read requests.
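A minimal sketch of read/write splitting at the application layer follows. The class and endpoint names are hypothetical placeholders, and the `SELECT` check is deliberately naive; a real application would more likely point its read-only connections at the database's reader endpoint and account for replica lag.

```python
import random


class ReadWriteRouter:
    """Hypothetical router: writes go to the primary, reads to a replica."""

    def __init__(self, writer, readers, rng=None):
        self.writer = writer
        self.readers = list(readers)
        self._rng = rng or random.Random()

    def endpoint_for(self, sql: str) -> str:
        # Naive classification: anything starting with SELECT is a read.
        # Statements such as SELECT ... FOR UPDATE would need the writer.
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self.readers:
            return self._rng.choice(self.readers)
        # Writes, and reads when no replica exists, use the primary.
        return self.writer


# Placeholder endpoint names, not real hosts.
router = ReadWriteRouter("writer.example", ["reader-1.example", "reader-2.example"])
print(router.endpoint_for("INSERT INTO orders VALUES (1)"))  # writer.example
print(router.endpoint_for("SELECT * FROM orders"))           # one of the readers
```

With a read-heavy workload, most statements take the replica path, so adding replicas raises read capacity without touching the primary.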

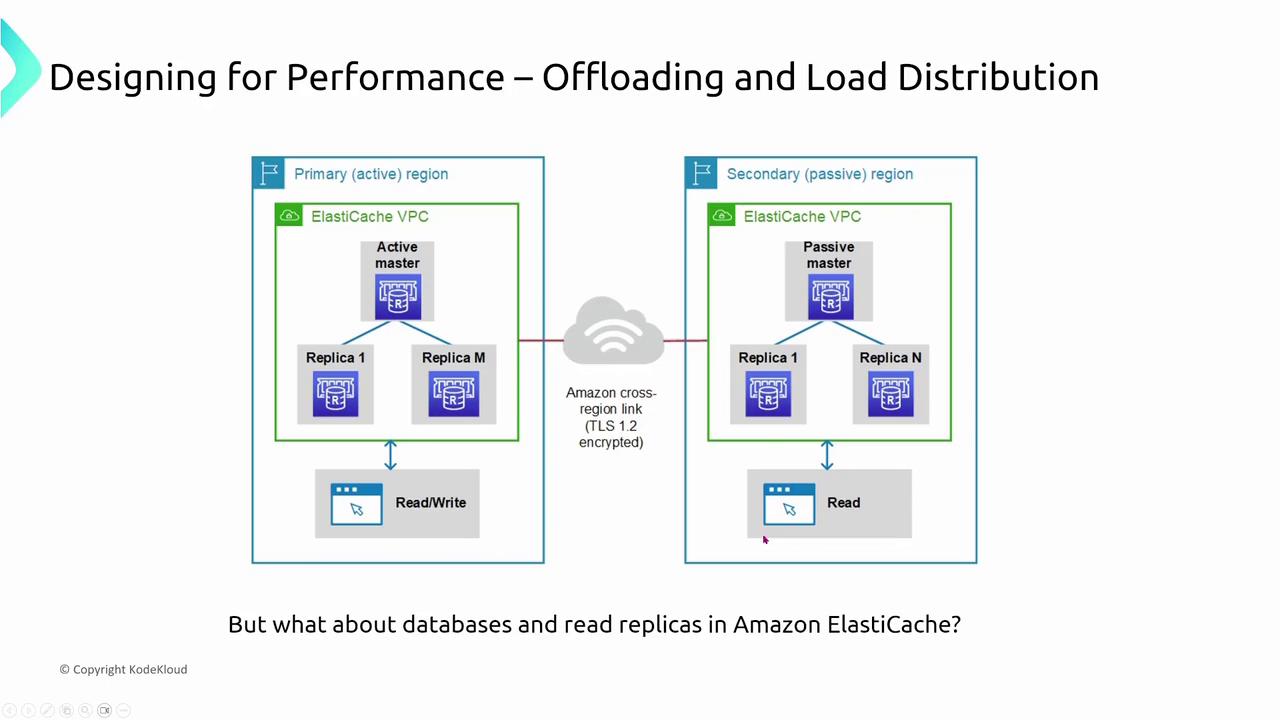

Similarly, Amazon ElastiCache supports replication, including cross-Region replication. In a typical configuration, a primary node manages writes (and some reads), while replica nodes handle additional read traffic. In the event of a failure, a replica is promoted and the endpoint used for writes is updated to point to it, typically without impacting reads.

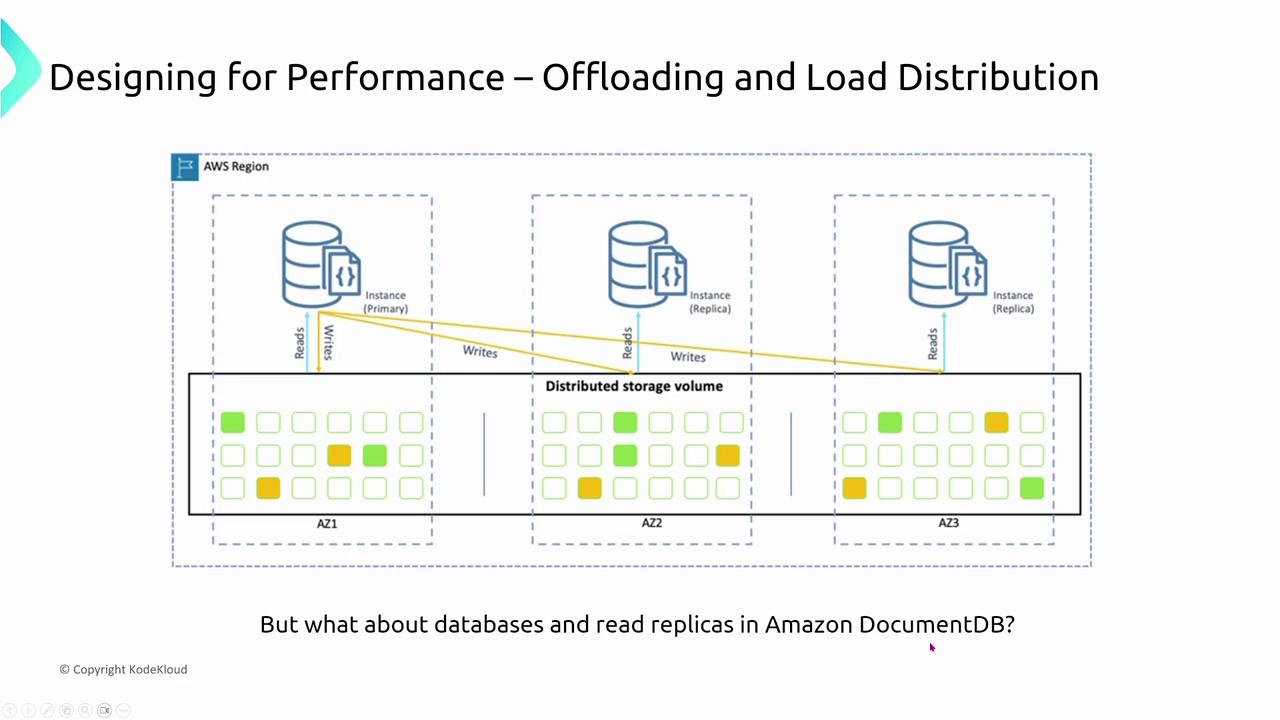

Amazon DocumentDB follows a similar pattern by supporting instance replicas that help redirect read traffic, just like Aurora.

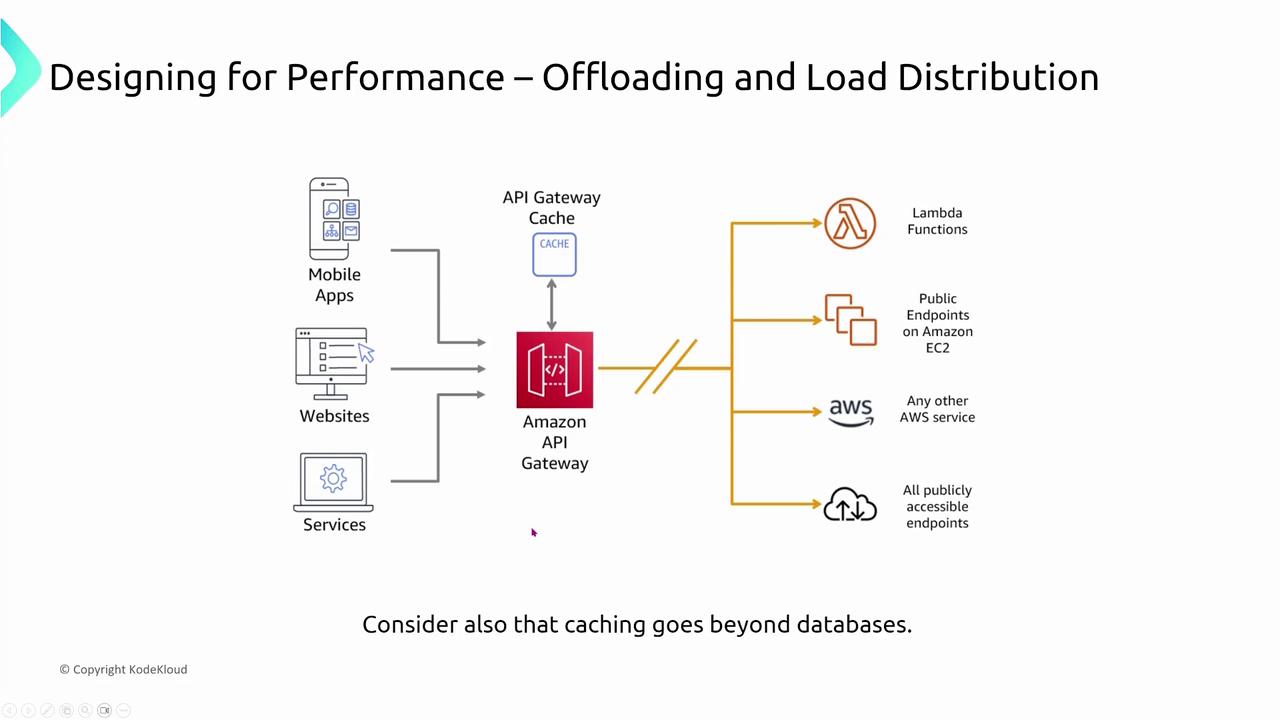

Beyond databases, offloading enhances the performance of distributed caches and content delivery networks. For example:

- API Gateway: Offers caching to improve response times for read-only endpoints.

- CloudFront: Distributes static content across hundreds of edge locations worldwide, decreasing the load on the origin servers.
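Both services in the list above rely on the same basic mechanism: serve a stored response until it expires, and only then go back to the origin. The sketch below shows that mechanism with a time-to-live (TTL) cache. The `TTLCache` class and the 300-second TTL are illustrative assumptions, not the internals of API Gateway or CloudFront.

```python
import time


class TTLCache:
    """Hypothetical cache: entries are reused until their TTL expires."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expiry time, cached value)

    def get_or_fetch(self, key, fetch):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the origin is not called
        value = fetch()      # cache miss or expired entry: call the origin
        self._store[key] = (now + self._ttl, value)
        return value


origin_calls = 0


def fetch_product_page():
    """Stand-in for an expensive request to the origin server."""
    global origin_calls
    origin_calls += 1
    return "<html>product</html>"


cache = TTLCache(ttl_seconds=300)
for _ in range(1000):
    cache.get_or_fetch("/products/42", fetch_product_page)
print(origin_calls)  # 1
```

A thousand requests reach the cache but only the first reaches the origin. The trade-off is staleness: a longer TTL offloads more traffic but delays the appearance of updates.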

Key Takeaway

Offloading improves performance by distributing work across replicas, caches, and edge locations, so that primary servers handle only the requests that require them.

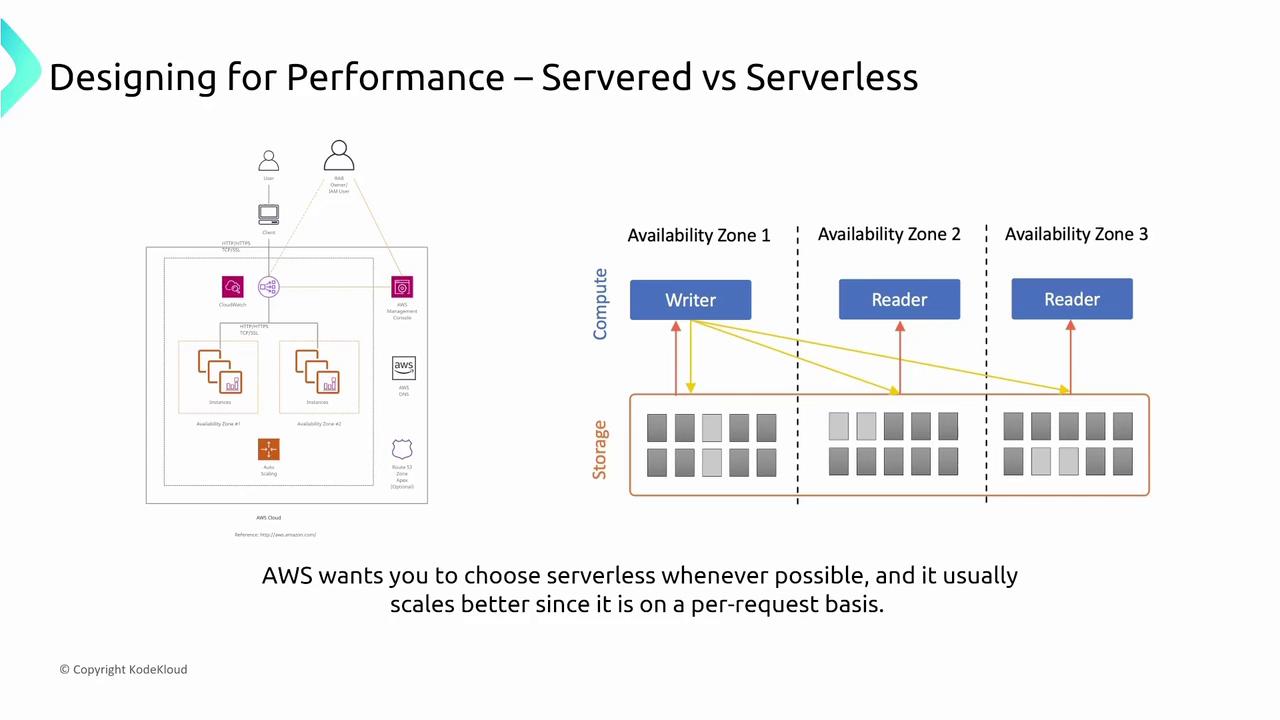

Embracing Serverless Architectures

Transitioning from a server-based model to a serverless architecture can dramatically enhance performance by automating scaling at granular levels. AWS serverless services, such as Lambda, Fargate, Aurora Serverless, Redshift Serverless, and EMR Serverless, reduce the overhead associated with server provisioning and autoscaling. For workloads with variable or unpredictable demand, serverless options typically offer better cost efficiency, more consistent performance under load, and reduced operational complexity compared to traditional server-based setups.
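As a rough illustration of how little infrastructure code a serverless function needs, here is a minimal Lambda-style handler in Python. It assumes the function sits behind an API Gateway proxy integration; the `name` query parameter and the greeting are made up for the example.

```python
import json


def handler(event, context):
    """Minimal handler sketch. There is no server, port, or thread pool
    to configure: the platform runs as many copies as traffic requires."""
    # queryStringParameters can be missing or None when no query is sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}"}),
    }


# Local invocation with a hand-built event; context is unused here.
print(handler({"queryStringParameters": {"name": "Ada"}}, None))
```

Scaling, patching, and capacity planning are absent from the code because the platform owns them, which is the operational saving the lesson describes.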

Advantages of Serverless

Serverless architectures deliver benefits including automatic scaling, minimized management effort, and improved resource utilization.

Summary

This lesson covered three essential AWS performance improvement concepts:

- Autoscaling: AWS services such as EC2, DynamoDB, Aurora, DocumentDB, Amazon MSK (managed Kafka), and Lambda offer robust autoscaling, which is crucial for maintaining performance as demand changes.

- Offloading and Load Distribution: By delegating tasks like read operations to replicas and caches, offloading reduces the load on primary systems across both application and database layers.

- Serverless Architectures: Employing serverless solutions like AWS Lambda and serverless database services minimizes operational overhead while enhancing scalability and performance.

When preparing for the AWS exam or addressing real-world scenarios, remember to prioritize solutions that incorporate autoscaling, offloading, and serverless architectures, as AWS favors these approaches for enhanced performance and operational efficiency.

I'm Michael Forrester. Feel free to join the discussions in the forums or connect on Slack or Discord. I look forward to engaging with you in the next lesson.
