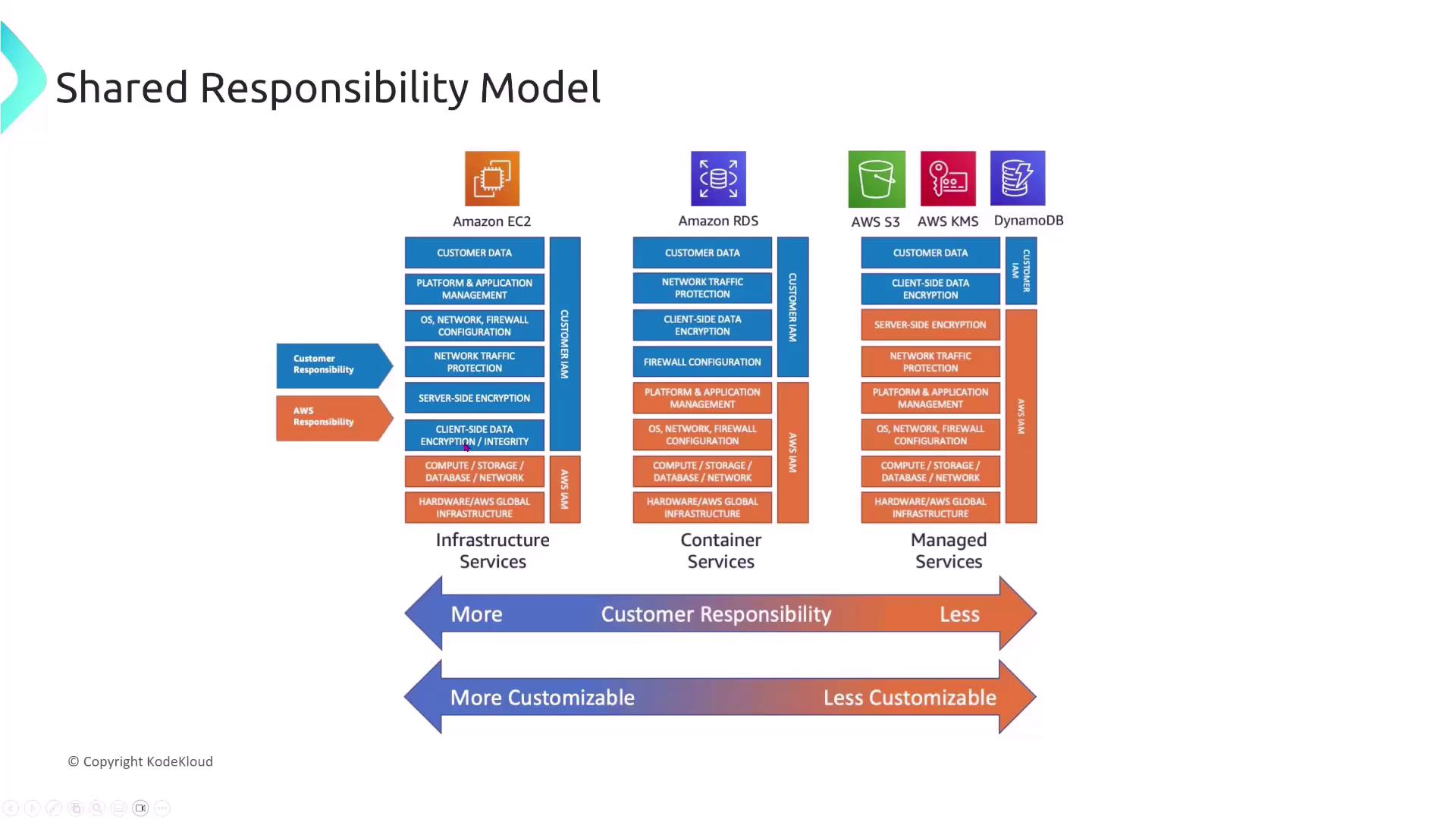

The Shared Responsibility Model

Before we dive into compute services, let’s revisit the AWS Shared Responsibility Model. In this framework, AWS is responsible for security “of” the cloud, covering the infrastructure, hardware, and managed services. You are responsible for security “in” the cloud, which includes configuring your applications, data, and security settings. Where the line falls depends on the type of service. For example:

- Infrastructure Services (e.g., EC2): You secure the virtual machines, including the guest operating system, applications, and security group configuration.

- Container Services (e.g., ECS, EKS): Security responsibilities are more evenly distributed.

- Managed Services (e.g., Lambda): AWS manages most security aspects on your behalf.

A Scenario on the Shared Responsibility Model

Imagine a rapidly growing startup migrating its infrastructure to AWS. The Chief Information Security Officer (CISO), who is new to cloud computing, wants to adhere to best practices under the AWS Shared Responsibility Model. Which of the following best describes this model?

- AWS is responsible for the security of the cloud while customers are responsible for security in the cloud.

- AWS is responsible for both security of the cloud and security in the cloud.

- Customers are responsible for security of the cloud while AWS is responsible for security in the cloud.

- AWS and customers share equal responsibility for all aspects of security.

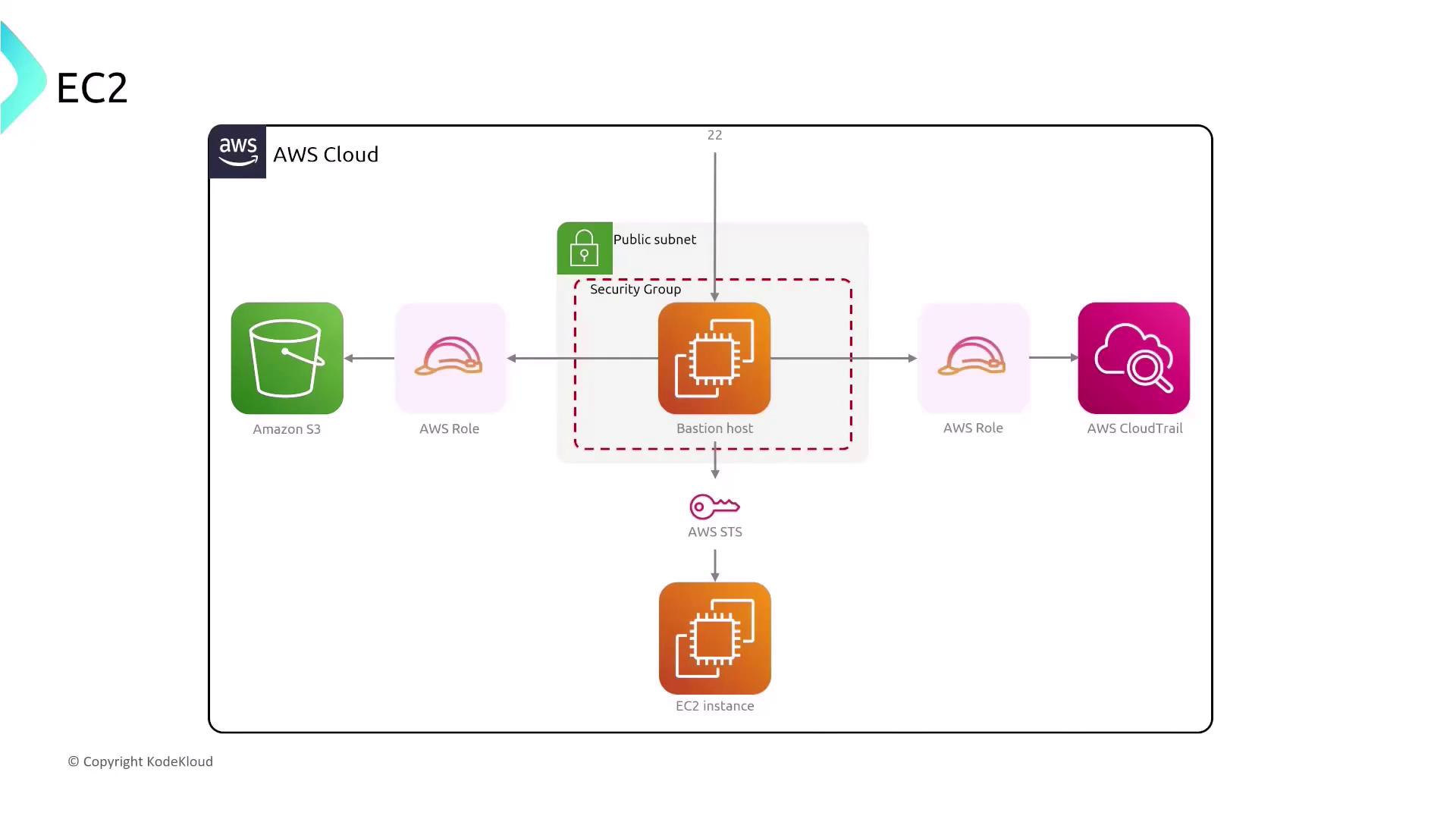

Securing EC2 and the Role of Bastion Hosts

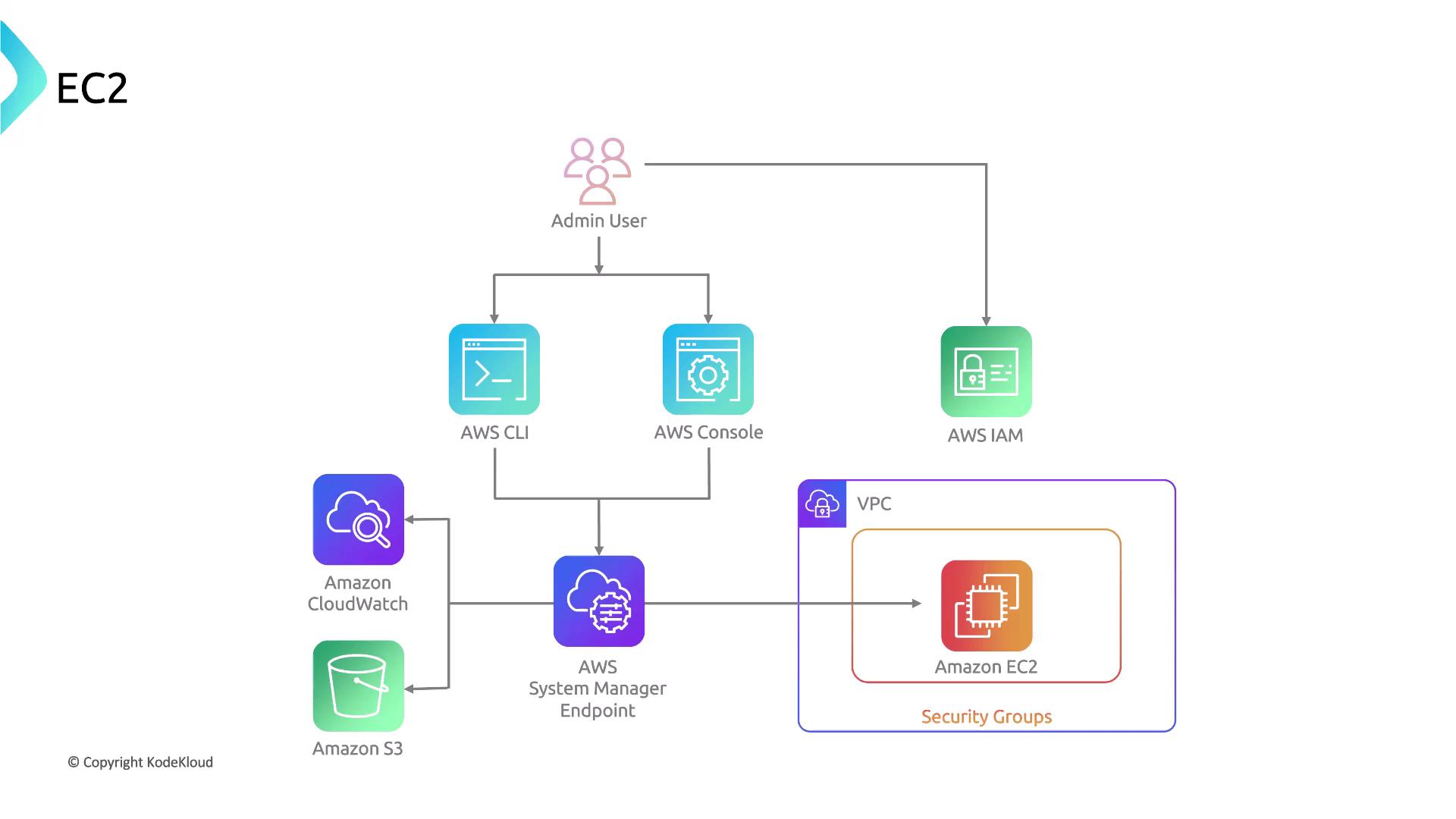

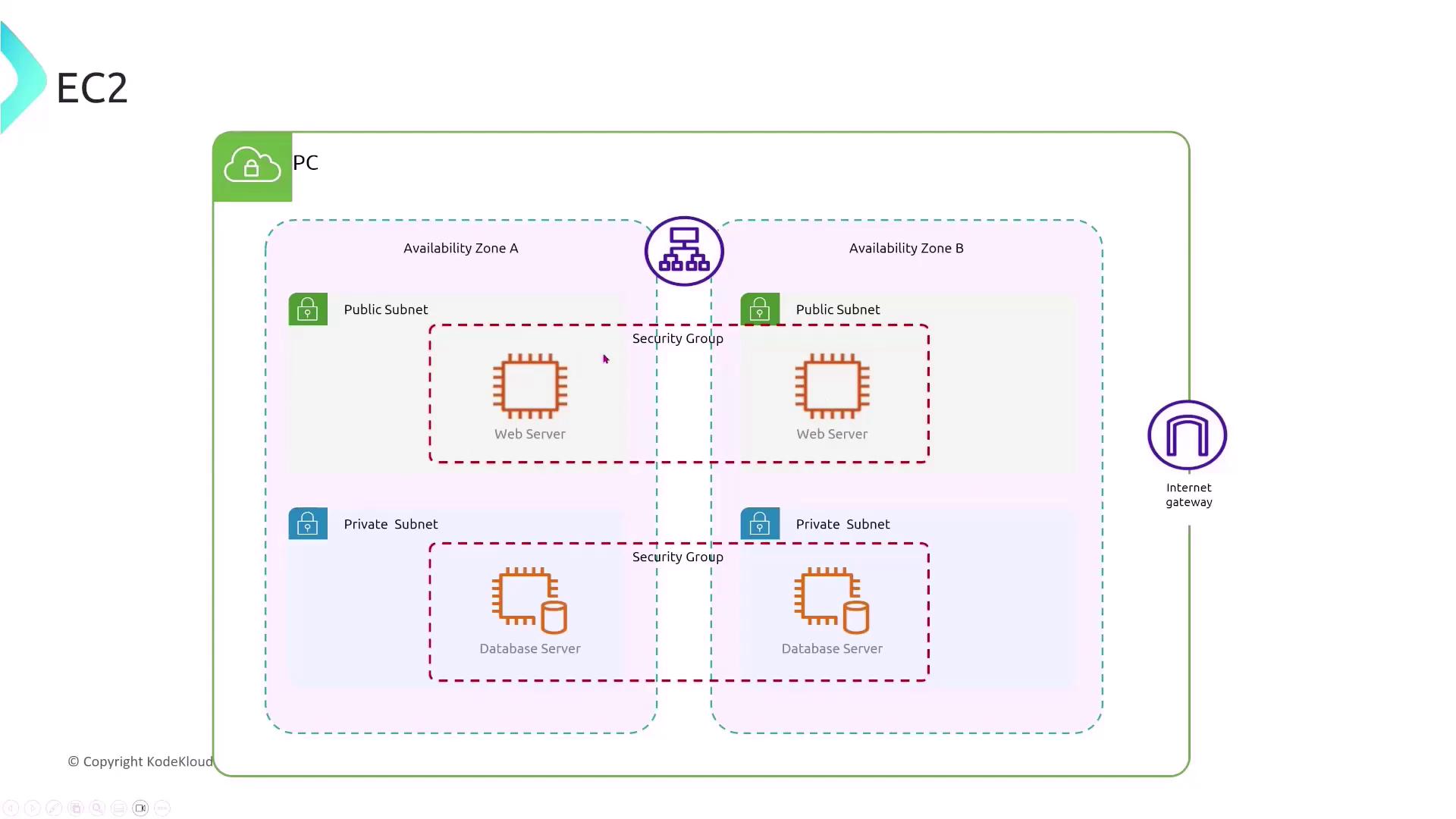

Amazon EC2, AWS’s virtual machine service, is a key component of your compute environment. A common practice is to use a bastion host (or jump box) to manage remote access securely. In a basic setup, the bastion host is an EC2 instance in a public subnet. It is protected by a security group that allows inbound SSH (port 22), and administrators reach other instances by connecting through it. IAM roles attached to the instances further restrict what they can do by granting only the specific permissions needed for services like CloudTrail and S3. Recommended practices include:

- Place the bastion host in a public subnet.

- Restrict SSH access to known IP addresses and use the bastion host as a gateway for accessing instances in private subnets.

- Minimize direct exposure of numerous instances to the internet by consolidating access points.
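The security group rules behind this pattern can be sketched as plain Python data. The dictionaries below follow the `IpPermissions` shape that boto3's `authorize_security_group_ingress` accepts. The function names, the admin CIDR, and the security group ID are hypothetical placeholders, and the sketch handles IPv4 only. It refuses world-open rules and lets private instances accept SSH only from members of the bastion's security group.

```python
import ipaddress

SSH_PORT = 22

def bastion_ssh_ingress(admin_cidrs):
    """IpPermissions allowing SSH to the bastion only from known admin networks."""
    ip_ranges = []
    for cidr in admin_cidrs:
        network = ipaddress.ip_network(cidr)  # raises ValueError on malformed input
        if network.prefixlen == 0:
            # 0.0.0.0/0 or ::/0 would expose SSH to the entire internet.
            raise ValueError(f"refusing world-open SSH rule: {cidr}")
        if network.version != 4:
            raise ValueError(f"this sketch handles IPv4 only: {cidr}")
        ip_ranges.append({"CidrIp": str(network), "Description": "admin SSH"})
    return [{
        "IpProtocol": "tcp",
        "FromPort": SSH_PORT,
        "ToPort": SSH_PORT,
        "IpRanges": ip_ranges,
    }]

def private_ssh_from_bastion(bastion_sg_id):
    """IpPermissions for private instances: SSH only from the bastion's security group."""
    return [{
        "IpProtocol": "tcp",
        "FromPort": SSH_PORT,
        "ToPort": SSH_PORT,
        "UserIdGroupPairs": [{"GroupId": bastion_sg_id}],
    }]

# Example (hypothetical IDs; needs boto3 and credentials, so left commented out):
# ec2.authorize_security_group_ingress(GroupId="sg-bastion-example",
#                                      IpPermissions=bastion_ssh_ingress(["203.0.113.10/32"]))
```

The private-subnet rule references the bastion's security group rather than its IP address. Because of this, the rule keeps working even if the bastion is replaced and receives a new address.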

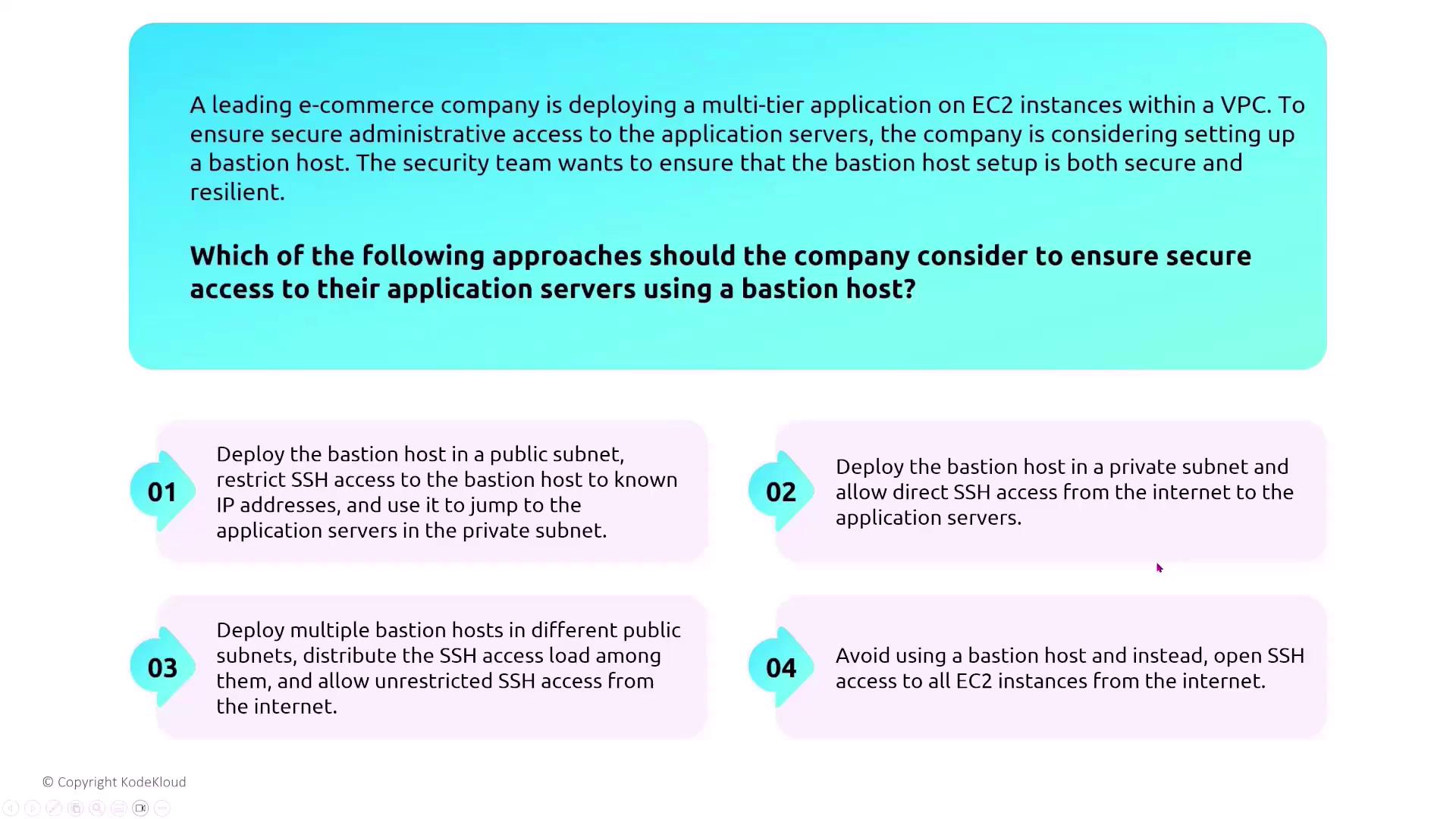

Bastion Host Security Options

An e-commerce company deploying a multi-tier application on EC2 is evaluating the best approach to secure administrative access. Which option best enhances security?

- Deploy the bastion host in a public subnet, restrict SSH access to known IP addresses, and use it as a jump box to access private application servers.

- Deploy the bastion host in a private subnet and allow direct SSH access from the internet.

- Deploy multiple bastion hosts across different public subnets while distributing SSH access load with unrestricted access.

- Avoid using a bastion host that allows open SSH access to all EC2 instances.

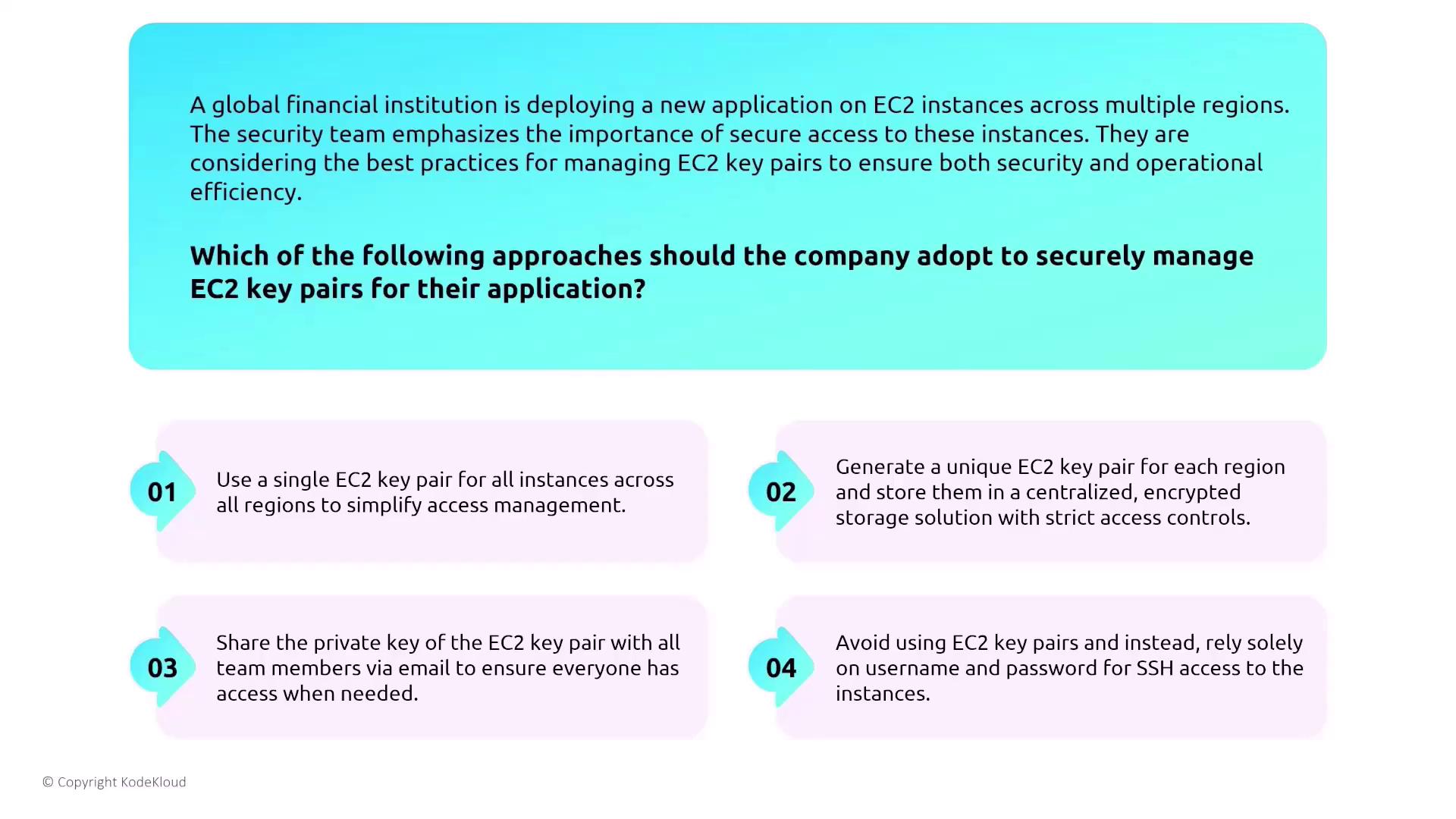

Managing EC2 Key Pairs Securely

Secure key pair management is crucial when deploying EC2 instances across multiple regions. Consider the following approaches:

- Use a single EC2 key pair across all regions.

- Generate a unique EC2 key pair for each region and store them in a centralized, encrypted storage solution with strict access controls.

- Share the private key among team members via email.

- Avoid using EC2 key pairs and rely solely on usernames and passwords.

In many deployments, a dedicated key pair is used during initial setup. Afterward, assign each user their own key pair to enforce one-to-one accountability.
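A minimal sketch of the per-region, per-user scheme follows. It assumes a hypothetical naming convention (`<project>-<region>-<owner>`) and a hypothetical secret path for storing each private key. Each entry could become a boto3 `create_key_pair(KeyName=..., KeyType="ed25519")` call on a client for that region, with the returned `KeyMaterial` written to an encrypted store such as AWS Secrets Manager. Those calls are left out so the sketch runs offline.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class KeyPairSpec:
    region: str
    key_name: str     # hypothetical naming convention: <project>-<region>-<owner>
    secret_name: str  # hypothetical path in an encrypted secret store

def plan_key_pairs(project, regions, users):
    """Plan one bootstrap key per region plus one personal key per user per region."""
    owners = ["bootstrap"] + list(dict.fromkeys(users))  # de-duplicate, keep order
    specs = []
    for region in regions:
        for owner in owners:
            name = f"{project}-{region}-{owner}"
            specs.append(KeyPairSpec(region, name, f"ec2-keys/{name}"))
    return specs
```

Because every key name embeds both the region and the owner, no key is shared across regions or people. Revoking one person's access in one region then touches exactly one key pair.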

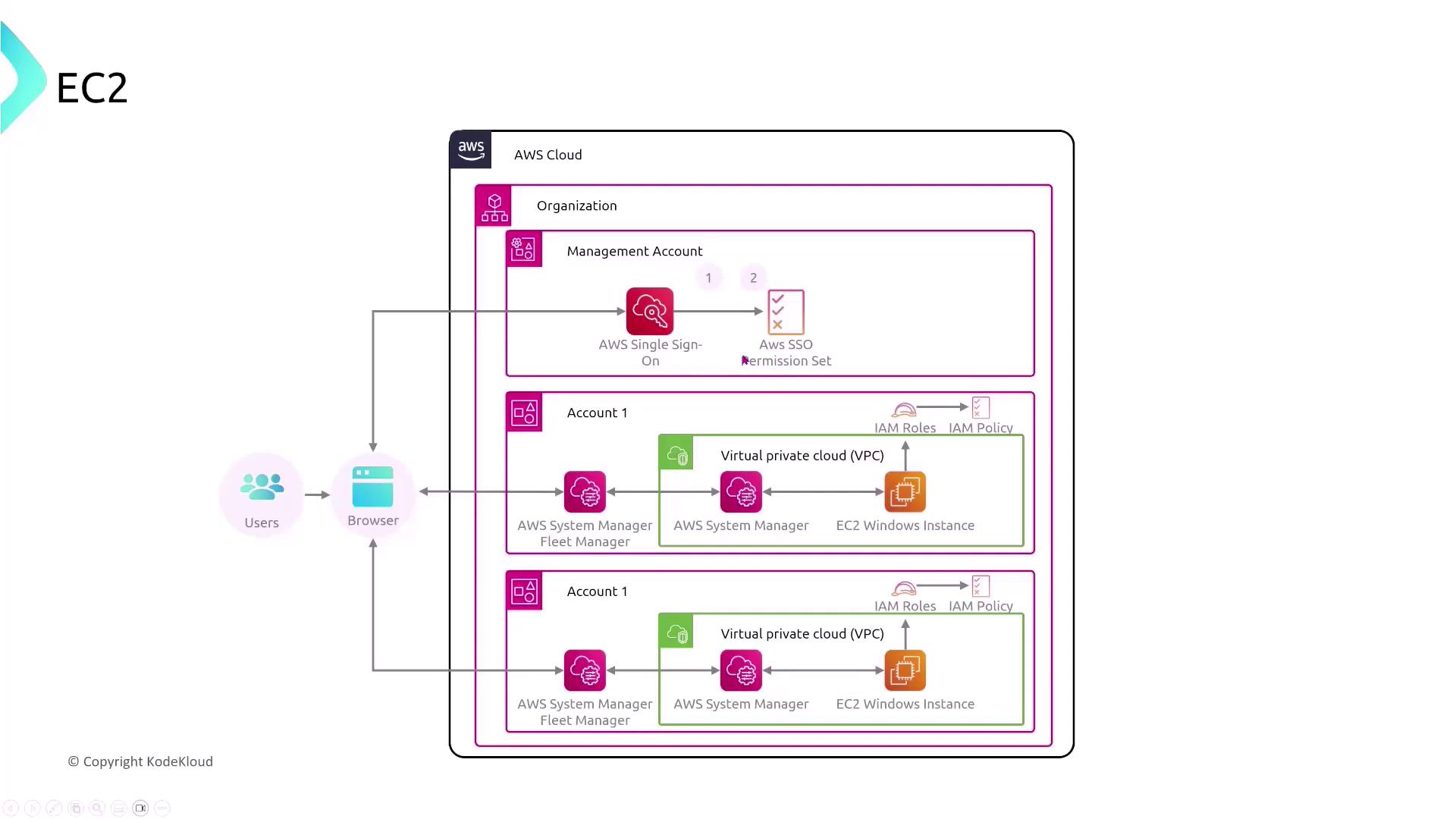

Replacing Bastion Hosts with AWS Systems Manager

AWS Systems Manager Session Manager offers a modern alternative to traditional bastion hosts. This service allows administrators to securely access EC2 instances without the need to open inbound ports or manage SSH keys. For instance, a healthcare company managing critical applications with sensitive patient data can leverage Systems Manager. Which statement is accurate regarding this service?

- Systems Manager enables direct access to the underlying hardware for diagnostics.

- Systems Manager provides a unified interface to access EC2 instances without opening inbound ports or managing SSH keys.

- Using Systems Manager requires EC2 instances to be publicly accessible.

- Systems Manager replaces EC2 virtual machines.
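With Session Manager, access is controlled through IAM rather than SSH keys. The policy below is a sketch that follows the shape of AWS's published Session Manager sample policies. The `Environment` tag key and its value are hypothetical. The instances themselves would still need the SSM Agent and an instance role, such as one with the `AmazonSSMManagedInstanceCore` managed policy.

```python
def session_manager_user_policy(region, account_id, env_value):
    """IAM policy letting an operator open Session Manager shells on tagged instances only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Only instances tagged Environment=<env_value> (hypothetical tag convention).
                "Effect": "Allow",
                "Action": "ssm:StartSession",
                "Resource": f"arn:aws:ec2:{region}:{account_id}:instance/*",
                "Condition": {"StringEquals": {"ssm:resourceTag/Environment": env_value}},
            },
            {
                # The default shell document that Session Manager runs.
                "Effect": "Allow",
                "Action": "ssm:StartSession",
                "Resource": f"arn:aws:ssm:{region}:{account_id}:document/SSM-SessionManagerRunShell",
            },
            {
                # Users may terminate or resume only their own sessions.
                "Effect": "Allow",
                "Action": ["ssm:TerminateSession", "ssm:ResumeSession"],
                "Resource": "arn:aws:ssm:*:*:session/${aws:username}-*",
            },
        ],
    }
```

No statement mentions port 22 or key material. The instance's security group can therefore have no inbound rules at all, as long as the agent can reach the Systems Manager endpoints outbound.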

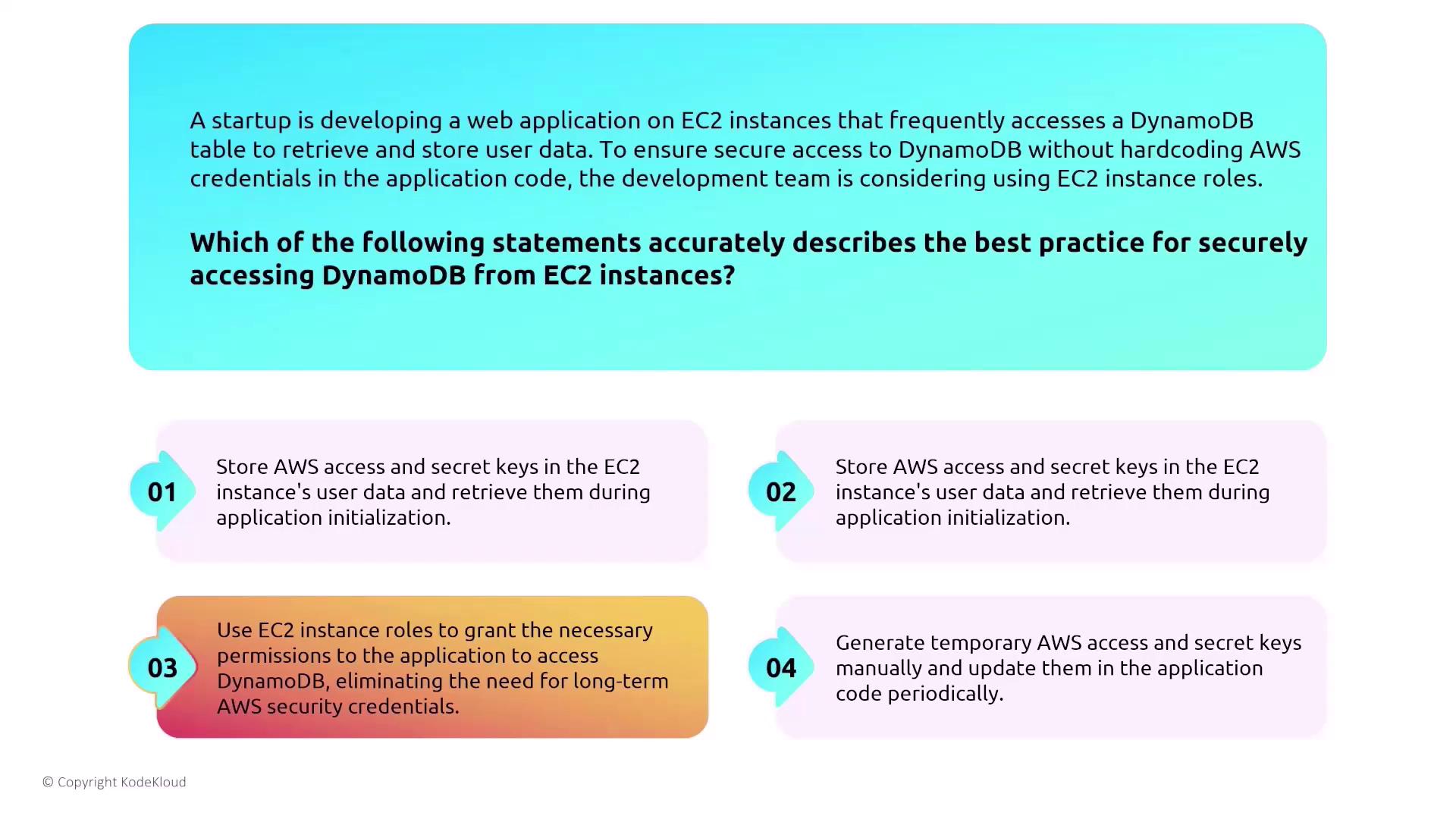

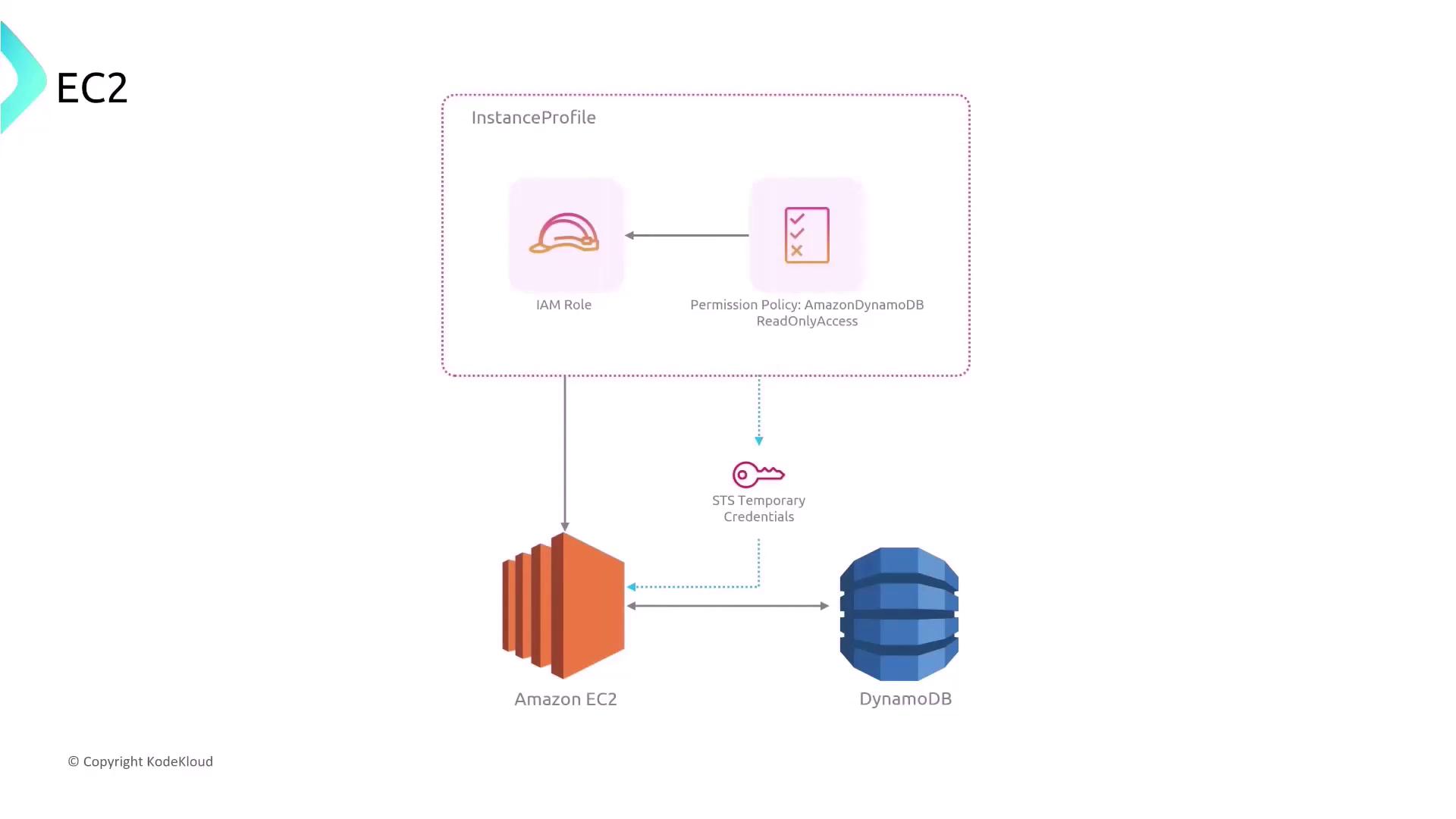

Securing Access to DynamoDB from EC2

For applications utilizing DynamoDB, it is imperative to avoid embedding AWS credentials in your code. Instead, attach an IAM role to the EC2 instance through an instance profile. The AWS SDKs then retrieve temporary credentials for that role from the instance metadata service and refresh them automatically. Consider these approaches:

- Store AWS access and secret keys in the user data of an EC2 instance.

- Store the keys in user data and retrieve them during application initialization.

- Use the EC2 instance role to provide necessary permissions without requiring long-term credentials.

- Manually generate temporary access keys.
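A minimal sketch of the two IAM documents behind the instance-role approach follows. It assumes a hypothetical `Orders` table and the placeholder account ID `111122223333`. The trust policy lets EC2 assume the role, and the permissions policy grants only a few item-level actions on one table and its indexes.

```python
def ec2_role_trust_policy():
    """Trust policy that lets the EC2 service assume the role on behalf of an instance."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }

def dynamodb_access_policy(region, account_id, table_name):
    """Least-privilege permissions for one table (and its secondary indexes)."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": [
                "dynamodb:GetItem",
                "dynamodb:PutItem",
                "dynamodb:UpdateItem",
                "dynamodb:Query",
            ],
            "Resource": [table_arn, f"{table_arn}/index/*"],
        }],
    }
```

Once the role is attached through an instance profile, application code creates its client without any keys, for example `boto3.client("dynamodb")`. The SDK's default credential chain then picks up the role's temporary credentials.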

IAM Roles Anywhere for External Workloads

For workloads running outside AWS, AWS IAM Roles Anywhere offers a solution for obtaining temporary security credentials safely. Consider these approaches:

- Use long-term AWS credentials and bypass X.509 certificates.

- Register your certificate authority with IAM Roles Anywhere as a trust anchor.

- Use self-signed X.509 certificates with a trust anchor.

- Avoid using IAM roles by managing separate IAM users with static credentials.
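The sketch below shows a role trust policy that pairs with a registered trust anchor, following the shape AWS documents for IAM Roles Anywhere. The trust anchor ARN and the optional certificate common name are hypothetical. Adding a condition on `aws:PrincipalTag/x509Subject/CN` limits the role to workloads that present a certificate with that subject.

```python
def roles_anywhere_trust_policy(trust_anchor_arn, allowed_cn=None):
    """Trust policy letting IAM Roles Anywhere issue sessions for one trust anchor."""
    statement = {
        "Effect": "Allow",
        "Principal": {"Service": "rolesanywhere.amazonaws.com"},
        "Action": ["sts:AssumeRole", "sts:TagSession", "sts:SetSourceIdentity"],
        # Only certificates validated by this specific trust anchor are accepted.
        "Condition": {"ArnEquals": {"aws:SourceArn": trust_anchor_arn}},
    }
    if allowed_cn is not None:
        # Optionally pin the role to one certificate subject common name.
        statement["Condition"]["StringEquals"] = {
            "aws:PrincipalTag/x509Subject/CN": allowed_cn
        }
    return {"Version": "2012-10-17", "Statement": [statement]}
```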

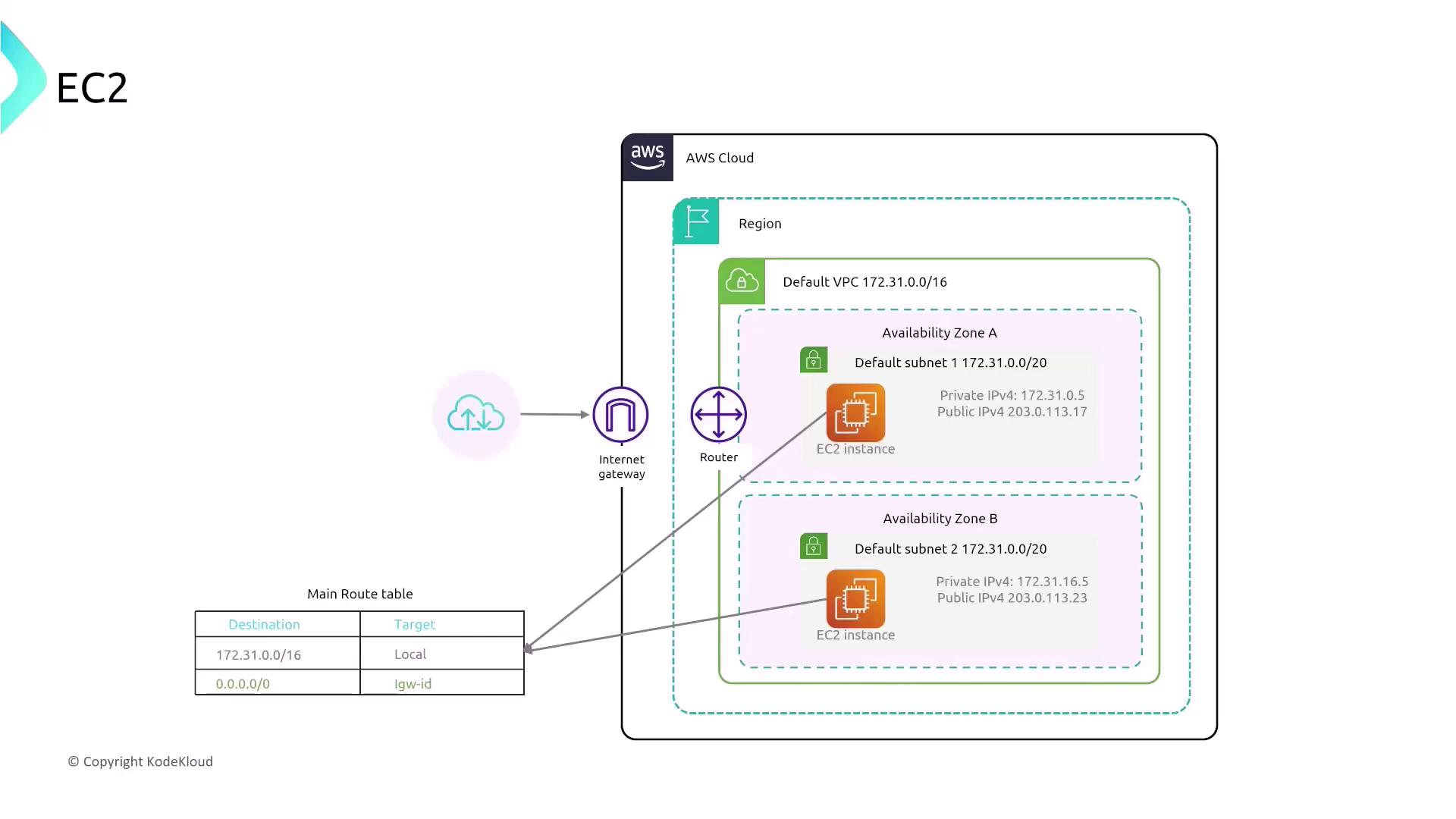

Public versus Private Subnets and Routing Considerations

Understanding the difference between public and private subnets is essential when architecting your EC2 environment. In a typical VPC setup:

- Public Subnets: These subnets have a route directing 0.0.0.0/0 traffic to an Internet Gateway (IGW), and instances here usually receive a public IP address.

- Private Subnets: These subnets have no route to an IGW. Instead, outbound internet traffic passes through a NAT gateway or NAT instance located in a public subnet.

Regardless of subnet type, apply these hardening practices:

- Tighten security group rules to block unnecessary inbound and outbound traffic.

- Regularly update operating systems and software patches.

- Avoid practices such as storing sensitive data on the root volume or using the root user for day-to-day operations.
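The public/private distinction comes down to where a subnet's default route points. The classifier below is a minimal sketch that takes a route table as hypothetical `(destination, target)` pairs. It treats `igw-` targets as an Internet Gateway and `nat-` targets as a NAT gateway. As an assumption, it treats instance (`i-`) or network interface (`eni-`) targets on the default route as a NAT instance.

```python
def classify_subnet(routes):
    """Classify a subnet from its route table, given as (destination_cidr, target_id) pairs."""
    default_targets = [target for destination, target in routes if destination == "0.0.0.0/0"]
    if any(t.startswith("igw-") for t in default_targets):
        return "public"
    if any(t.startswith("nat-") for t in default_targets):
        return "private (outbound via NAT gateway)"
    if any(t.startswith(("i-", "eni-")) for t in default_targets):
        # Assumption: an instance or ENI on the default route is acting as a NAT instance.
        return "private (outbound via NAT instance)"
    return "private (no internet route)"
```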

Enhancing Security with Private Subnets and NAT

To reduce exposure to threats such as DDoS attacks, consider re-evaluating your routing strategy. Instead of exposing every instance to the internet, place instances in private subnets and send their outbound traffic through a NAT gateway or NAT instance. A NAT device only forwards connections initiated from inside the VPC, so the instances cannot be reached directly from the internet. This minimizes your public attack surface.

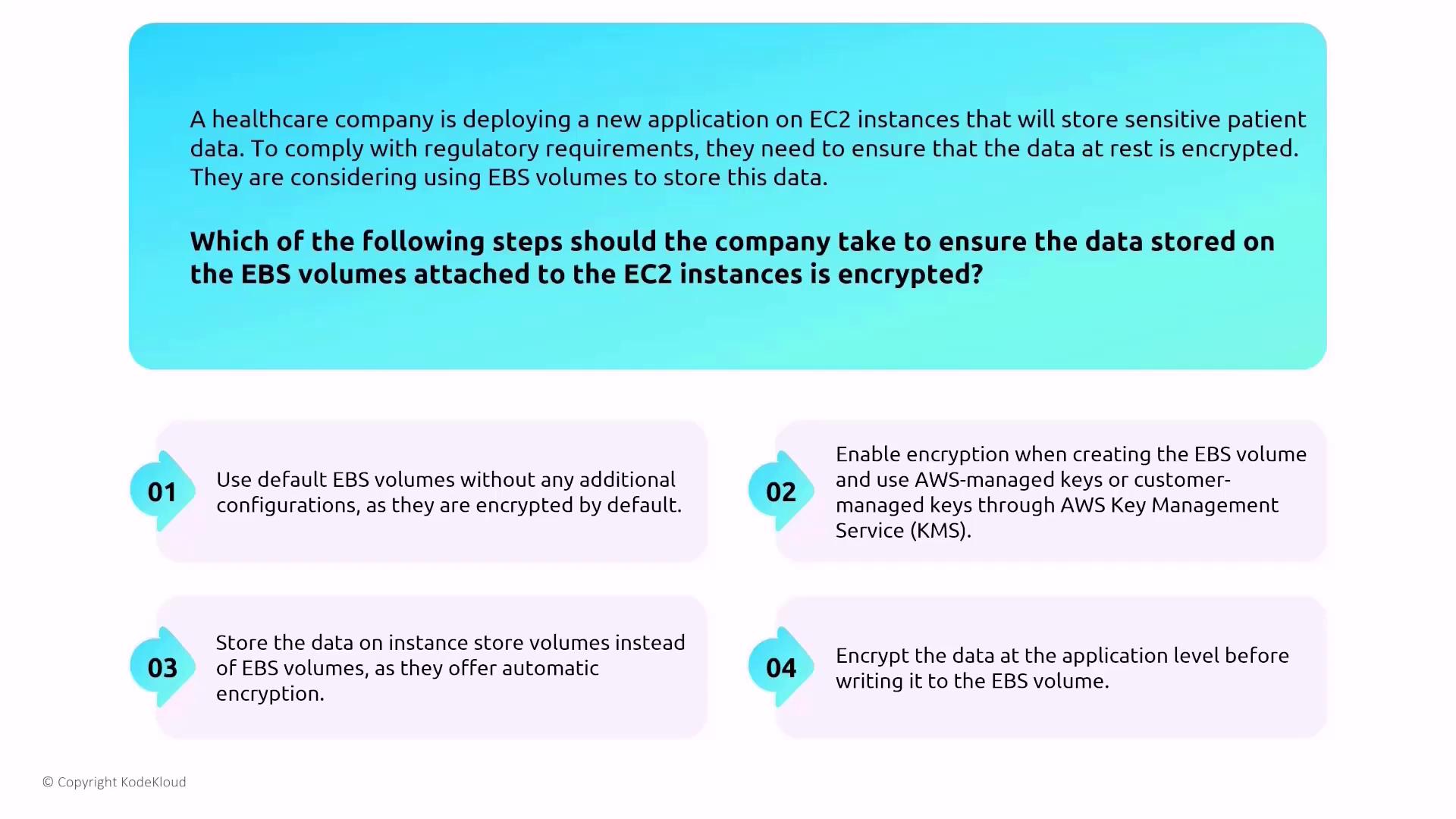

EBS Encryption and Data Security

Data stored on EBS volumes must be secured. New volumes are not encrypted unless you request encryption when creating them or turn on EBS encryption by default for your account in that Region. To protect data at rest, consider the following practices:

- Enable encryption when creating your EBS volume.

- Choose between the AWS managed key for EBS and a customer managed key in AWS KMS.
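Here is a minimal sketch of an encrypted volume request. It assumes the keyword arguments are later passed to boto3's `ec2.create_volume(**request)`. The Availability Zone, size, and key alias are placeholders. As an alternative, `ec2.enable_ebs_encryption_by_default()` turns on encryption for all new volumes in the client's Region.

```python
def encrypted_volume_request(availability_zone, size_gib, kms_key_id=None, volume_type="gp3"):
    """Keyword arguments for ec2.create_volume that always request encryption."""
    if size_gib <= 0:
        raise ValueError("size_gib must be positive")
    request = {
        "AvailabilityZone": availability_zone,
        "Size": size_gib,
        "VolumeType": volume_type,
        "Encrypted": True,
    }
    if kms_key_id is not None:
        # Customer managed key, given as a key ID, key ARN, or alias.
        request["KmsKeyId"] = kms_key_id
    # Without KmsKeyId, EBS uses the account's default key for EBS encryption,
    # which is the AWS managed key (aws/ebs) unless you have changed it.
    return request
```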

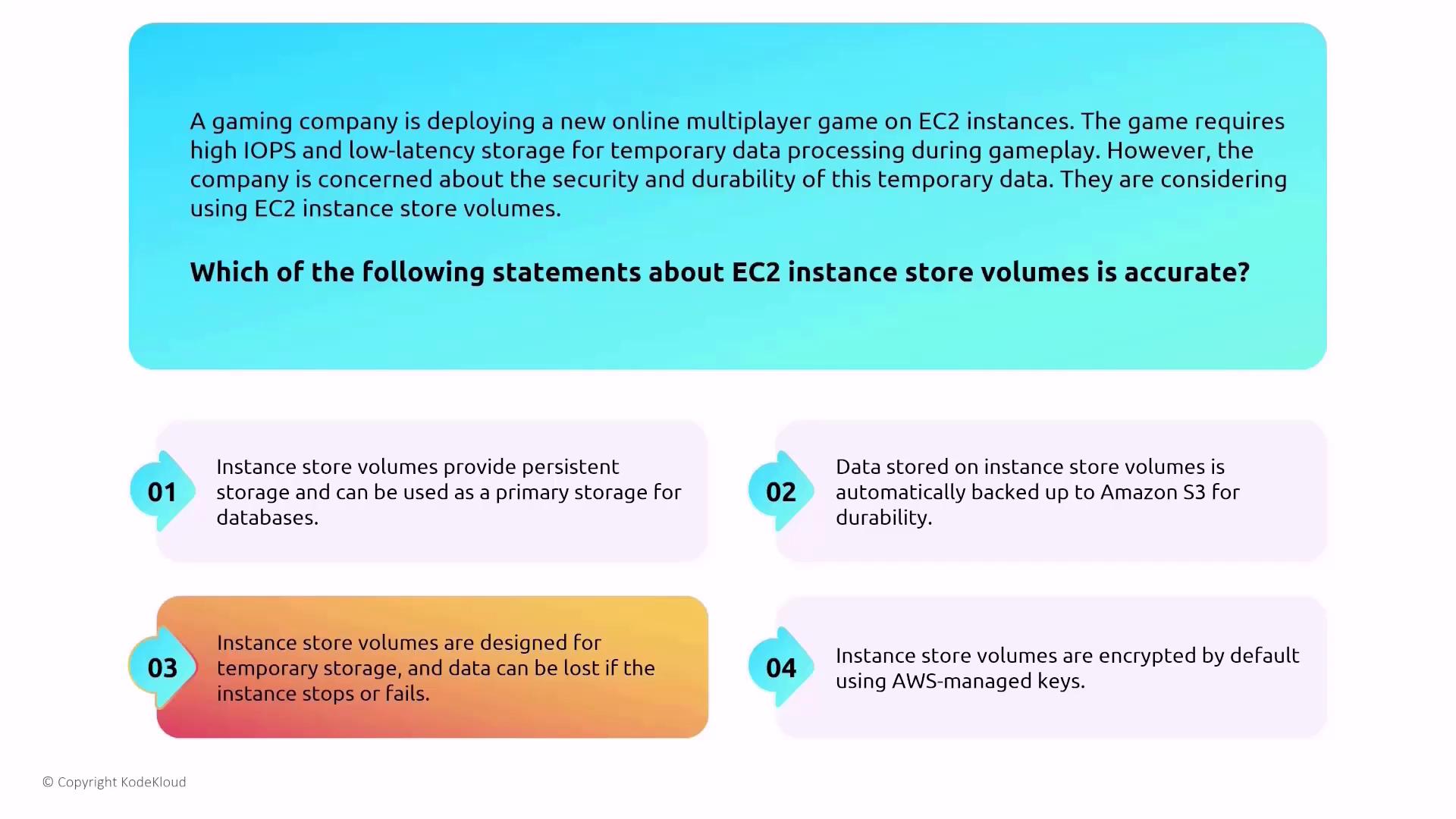

Instance Storage versus EBS

For workloads that demand high IOPS and low latency, such as an online multiplayer game, EC2 instance store volumes might be considered. However, keep in mind:

- Instance store volumes are intended for temporary storage.

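- Data is lost if the instance is stopped, hibernated, or terminated, or if the underlying disk fails. The data does survive a reboot.

- Instance store volumes cannot be encrypted with AWS KMS keys. NVMe instance store volumes are encrypted at the hardware level with keys you do not control, so if you need your own keys, implement encryption at the operating system or application level.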

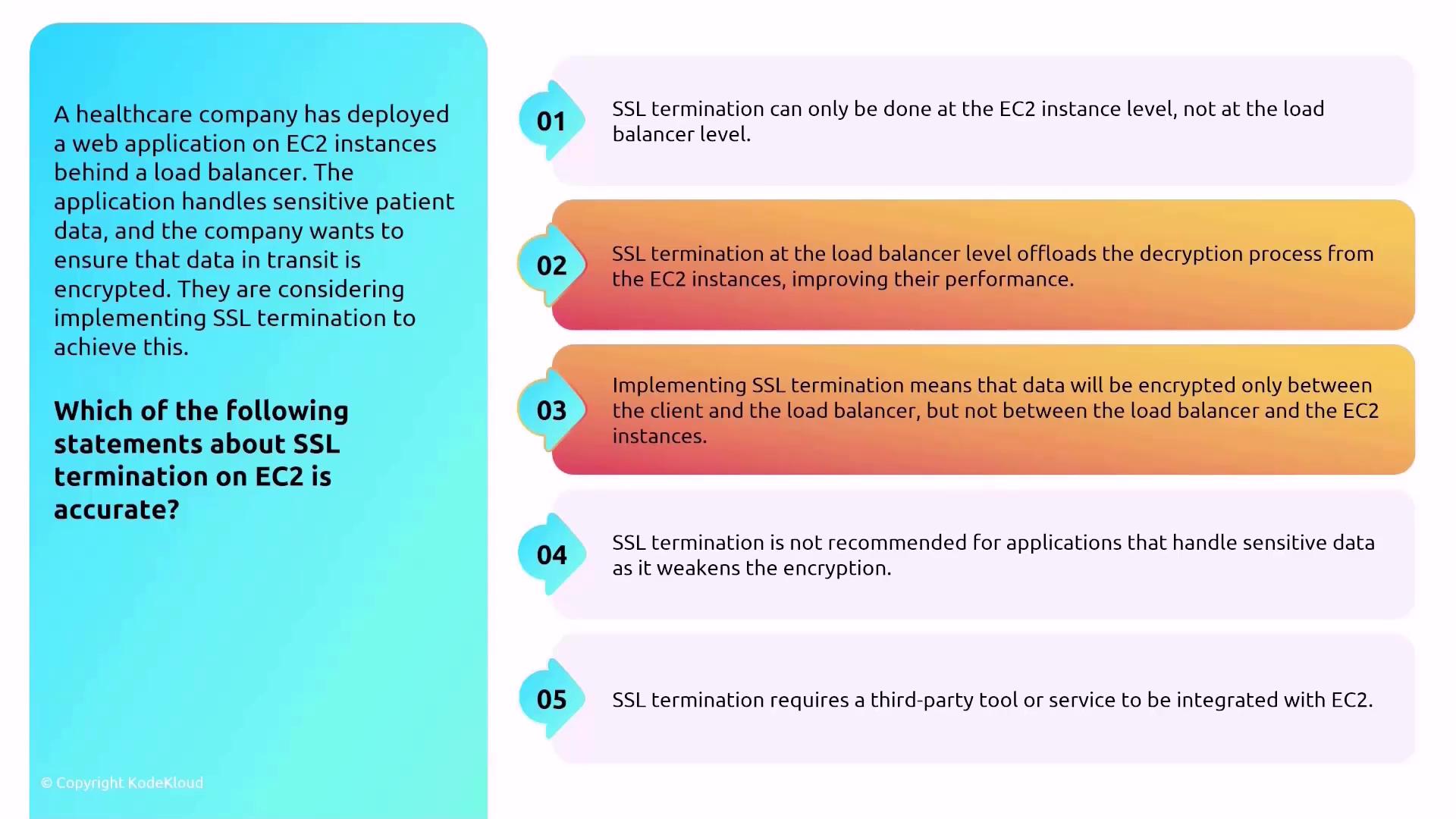

SSL Termination at the Load Balancer

An Application Load Balancer (ALB) can manage SSL termination, which offloads the decryption process from your EC2 instances. Key points include:

- Traffic between the client and the load balancer is encrypted.

- Communication between the load balancer and the EC2 instance can be configured to be unencrypted or re-encrypted using a different certificate.

- Offloading SSL termination improves EC2 performance by reducing the processing load.
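The two halves of SSL termination can be expressed as request parameters for boto3's Elastic Load Balancing v2 `create_listener` and `create_target_group` calls, as in the sketch below. All ARNs, names, and the VPC ID are hypothetical. `ELBSecurityPolicy-TLS13-1-2-2021-06` is one of AWS's predefined TLS policies and is used here only as a default.

```python
def https_listener_request(load_balancer_arn, certificate_arn, target_group_arn,
                           ssl_policy="ELBSecurityPolicy-TLS13-1-2-2021-06"):
    """create_listener parameters: client TLS terminates at the ALB on port 443."""
    return {
        "LoadBalancerArn": load_balancer_arn,
        "Protocol": "HTTPS",
        "Port": 443,
        "SslPolicy": ssl_policy,
        "Certificates": [{"CertificateArn": certificate_arn}],
        "DefaultActions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }

def target_group_request(name, vpc_id, reencrypt):
    """create_target_group parameters: plain HTTP to instances, or HTTPS to re-encrypt."""
    return {
        "Name": name,
        "Protocol": "HTTPS" if reencrypt else "HTTP",
        "Port": 443 if reencrypt else 80,
        "VpcId": vpc_id,
        "TargetType": "instance",
    }
```

With `reencrypt=True`, the instances must serve TLS themselves. This brings back part of the CPU cost that terminating at the load balancer was meant to offload.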

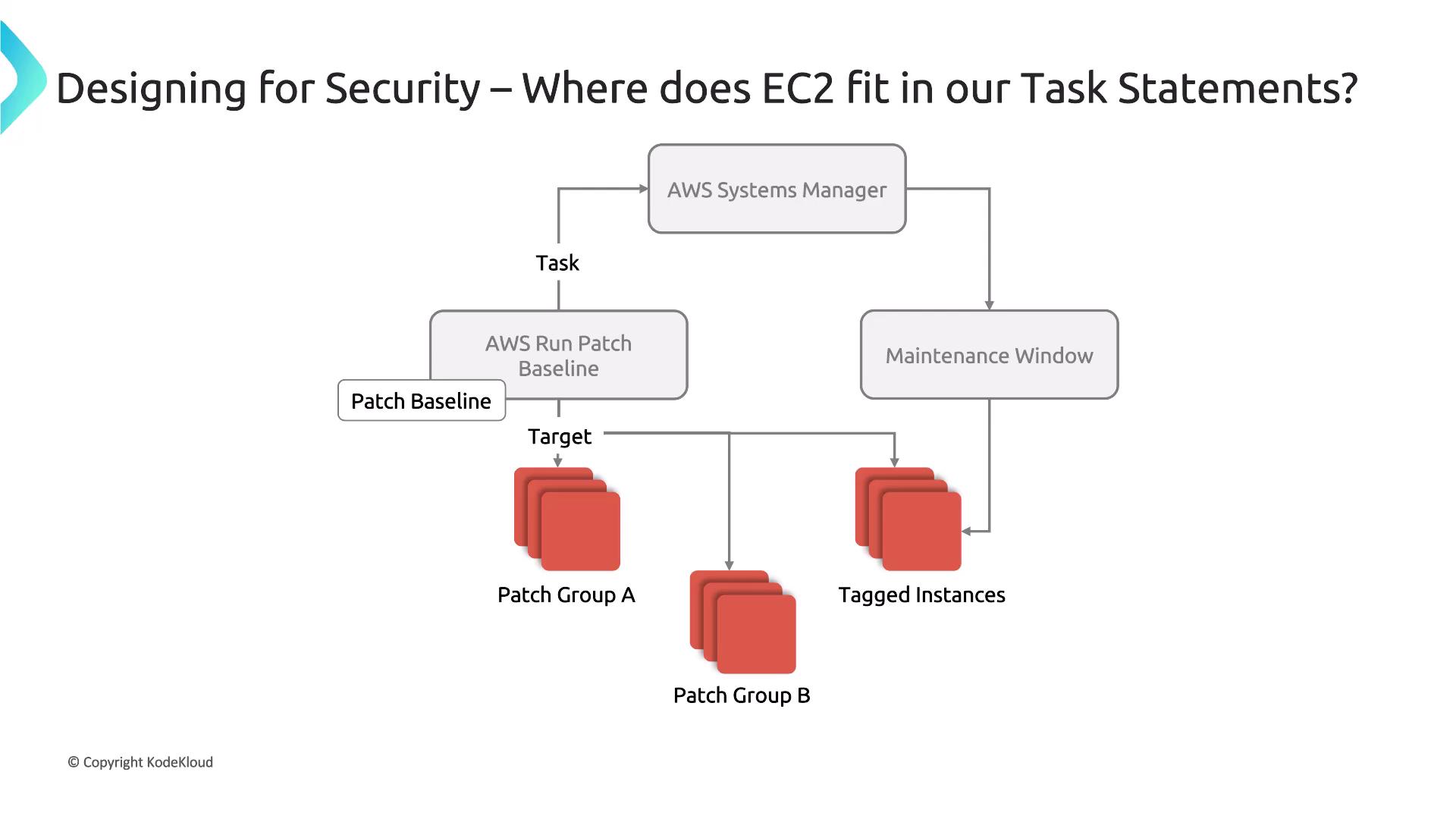

Securing EC2 with Patch Management

Ongoing patch management is critical for maintaining the security of your EC2 instances. AWS Systems Manager Patch Manager allows you to automate the patching process by:

- Defining patch baselines that specify approved updates.

- Patching both operating systems and application software.

- Automating the patching process (including reboots) without manual intervention.

- Eliminating the need to expose instances to the public internet during patching.
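A baseline that auto-approves only security updates might look like the following sketch of parameters for boto3's `ssm.create_patch_baseline`. The baseline name, the seven-day delay, and the choice of Amazon Linux 2 are assumptions. The patching itself would then typically run through the `AWS-RunPatchBaseline` document from a maintenance window.

```python
def security_patch_baseline(name, approve_after_days=7):
    """Parameters for ssm.create_patch_baseline approving critical/important security patches."""
    if approve_after_days < 0:
        raise ValueError("approve_after_days cannot be negative")
    return {
        "Name": name,
        "OperatingSystem": "AMAZON_LINUX_2",
        "Description": "Auto-approve critical and important security updates",
        "ApprovalRules": {
            "PatchRules": [{
                "PatchFilterGroup": {
                    "PatchFilters": [
                        {"Key": "CLASSIFICATION", "Values": ["Security"]},
                        {"Key": "SEVERITY", "Values": ["Critical", "Important"]},
                    ]
                },
                # Wait this many days after a patch's release before approving it.
                "ApproveAfterDays": approve_after_days,
                "ComplianceLevel": "CRITICAL",
            }]
        },
    }
```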

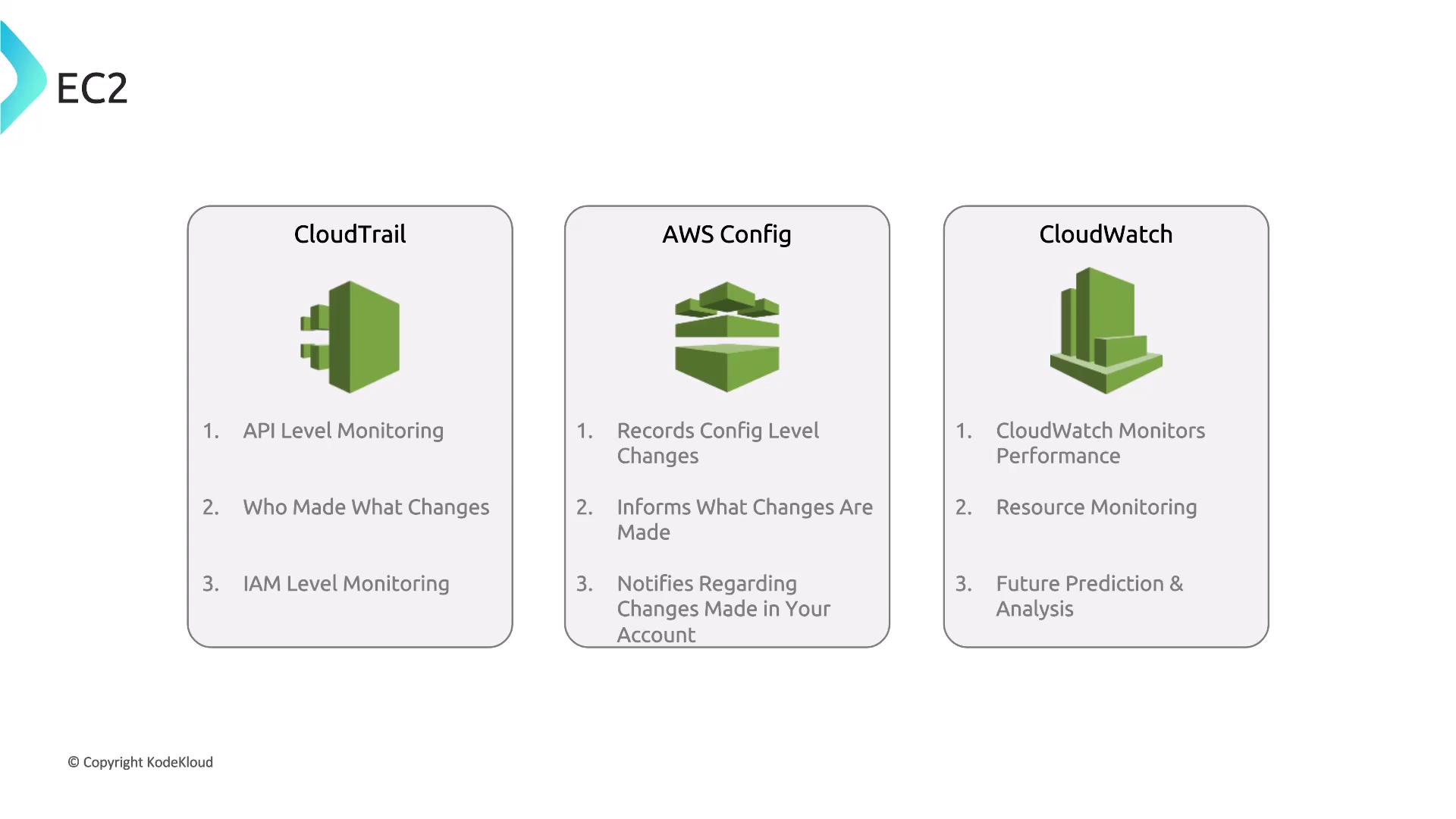

Integrating CloudTrail and CloudWatch

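Integrating AWS CloudTrail with CloudWatch is essential for monitoring API calls and system changes:

- CloudTrail records API calls made to the EC2 service, such as launching, stopping, or modifying instances and security groups.

- A trail can deliver these events to a CloudWatch Logs log group, where metric filters and alarms provide near-real-time monitoring and analysis.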
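Once a trail delivers events to CloudWatch Logs, a metric filter can count specific EC2 API calls so that an alarm can fire on them. The sketch below builds parameters for boto3's `logs.put_metric_filter`. The log group, filter, metric, and namespace names are hypothetical, and the pattern watches security group ingress changes as one example.

```python
def security_group_change_filter(log_group_name):
    """Parameters for logs.put_metric_filter counting security group ingress changes."""
    pattern = (
        '{ ($.eventSource = "ec2.amazonaws.com") && '
        '(($.eventName = "AuthorizeSecurityGroupIngress") || '
        '($.eventName = "RevokeSecurityGroupIngress")) }'
    )
    return {
        "logGroupName": log_group_name,
        "filterName": "ec2-sg-ingress-changes",
        "filterPattern": pattern,
        "metricTransformations": [{
            "metricName": "SecurityGroupIngressChanges",
            "metricNamespace": "Security/CloudTrail",
            "metricValue": "1",  # each matching event adds 1 to the metric
        }],
    }
```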

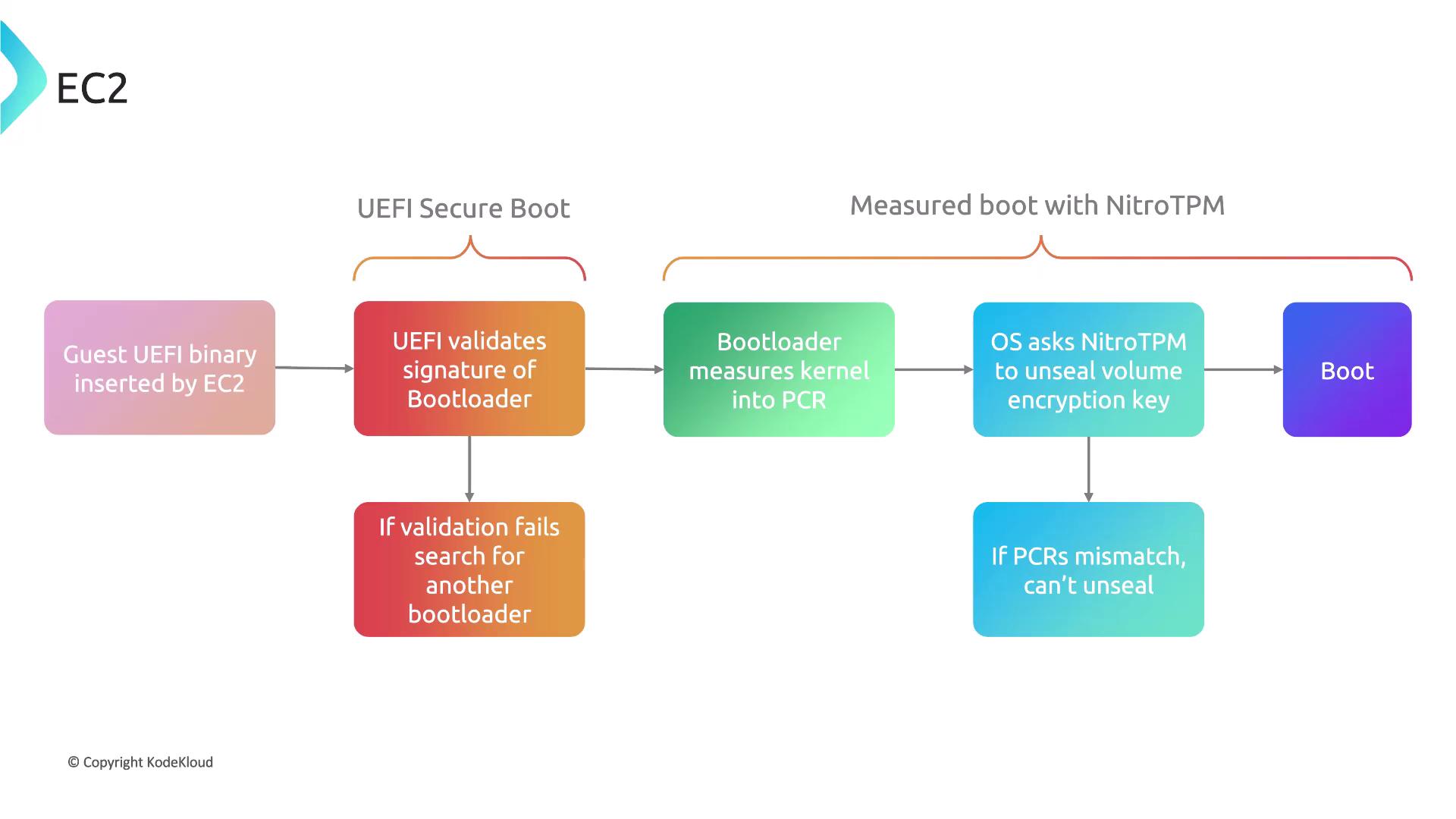

UEFI Secure Boot and Nitro TPM

Amazon EC2 integrates advanced security features such as UEFI Secure Boot and Nitro TPM. Here’s how they work together to enhance security:

- The UEFI Secure Boot process validates the bootloader’s signature.

- After validation, the bootloader securely measures and loads the kernel.

- Nitro TPM unseals the boot volume only when the measurements match expected values, ensuring the integrity of the operating system.
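The measure-then-unseal idea can be illustrated with a toy model. The sketch below uses the TPM 2.0 extend rule for a SHA-256 bank: the new PCR value is SHA-256(old PCR || SHA-256(measurement)). A Python class stands in for TPM sealing. This is purely illustrative: a real Nitro TPM enforces the policy in hardware, and the component names used in the example are made up.

```python
import hashlib
import hmac

def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM 2.0-style extend for a SHA-256 bank: SHA-256(old PCR || SHA-256(measurement))."""
    return hashlib.sha256(pcr + hashlib.sha256(measurement).digest()).digest()

def measure_boot(components) -> bytes:
    """Fold each boot component, in order, into a PCR that starts at all zeros."""
    pcr = bytes(32)
    for component in components:
        pcr = extend(pcr, component)
    return pcr

class SealedSecret:
    """Toy stand-in for TPM sealing: the secret is released only for the expected PCR value."""

    def __init__(self, secret: bytes, expected_pcr: bytes):
        self._secret = secret
        self._expected_pcr = expected_pcr

    def unseal(self, current_pcr: bytes) -> bytes:
        if not hmac.compare_digest(current_pcr, self._expected_pcr):
            raise PermissionError("PCR mismatch: boot chain differs from the sealed state")
        return self._secret
```

Because each extend hashes the previous value, changing any component, or even the order in which components are measured, yields a different final PCR. As a result, a tampered kernel cannot recover the volume key.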

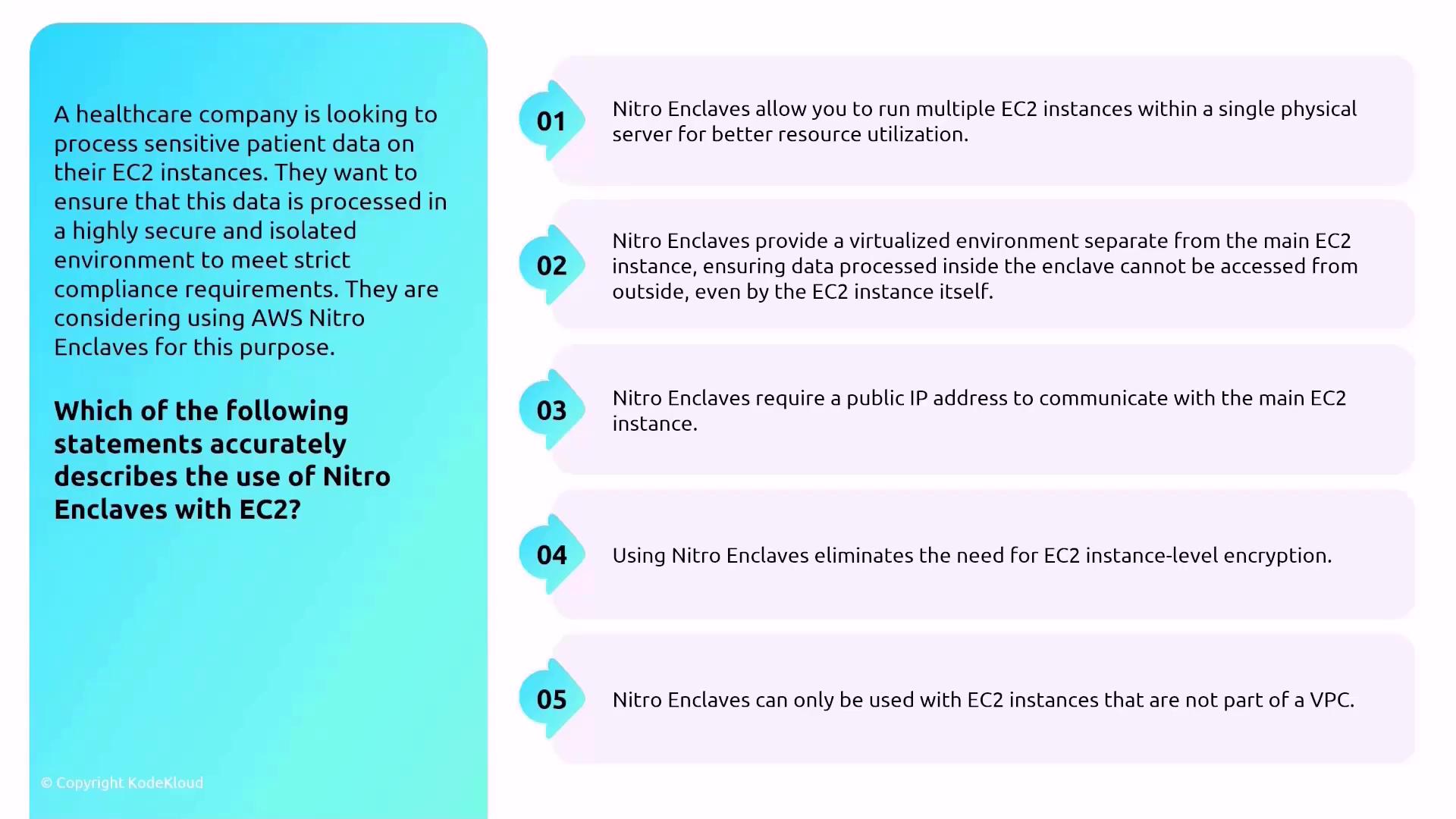

AWS Nitro Enclaves

AWS Nitro Enclaves offer a way to process highly sensitive data within a highly isolated environment. Key considerations include:

- Nitro Enclaves create an isolated, secure computing environment within your EC2 instance.

- Data processed inside an enclave remains inaccessible to the parent instance.

- Communication between the enclave and the parent instance is limited to a virtual socket (vsock).
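The vsock channel is a plain stream socket, so the parent instance and the enclave need their own message framing. The sketch below assumes a simple 4-byte length prefix, which is our own choice and not something Nitro Enclaves prescribes. It also assumes a hypothetical enclave CID and port. Python exposes `socket.AF_VSOCK` on Linux.

```python
import socket
import struct

HEADER = struct.Struct("!I")  # 4-byte big-endian length prefix (our own framing choice)

def send_msg(sock: socket.socket, payload: bytes) -> None:
    """Send one length-prefixed message."""
    sock.sendall(HEADER.pack(len(payload)) + payload)

def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since a stream socket may return partial chunks."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)

def recv_msg(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return _recv_exact(sock, length)

def connect_to_enclave(enclave_cid: int, port: int) -> socket.socket:
    """Parent side: open a vsock stream to the enclave (Linux only; CID and port are hypothetical)."""
    sock = socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM)
    sock.connect((enclave_cid, port))
    return sock
```

The framing functions work on any stream socket. They can therefore be exercised locally with `socket.socketpair()` before running inside an enclave.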

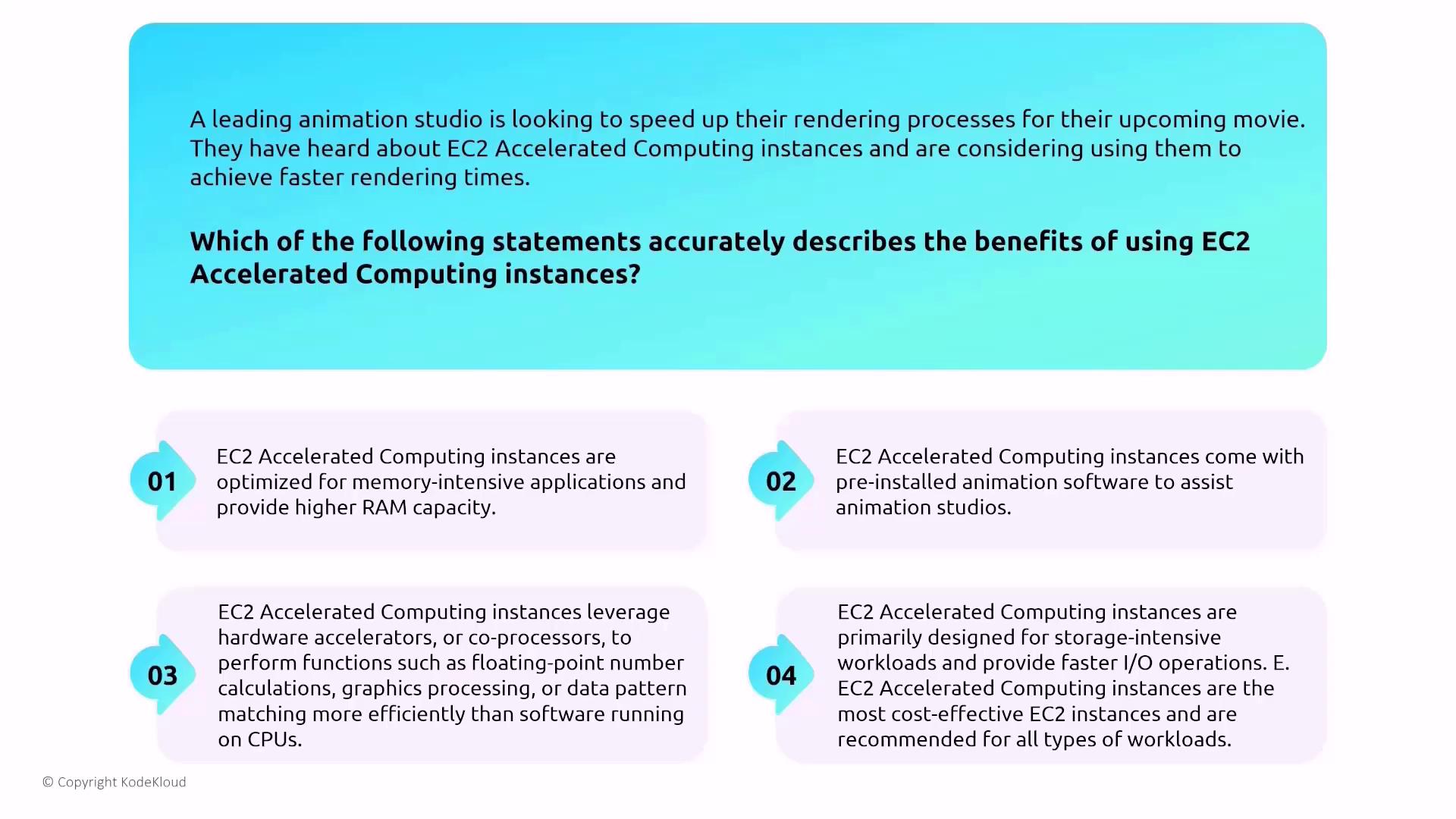

EC2 Instance Types Overview

When choosing an EC2 instance, AWS offers several instance families to suit different workloads:

- General Purpose: Includes T and M family instances.

- Compute Optimized: Denoted by instance types starting with C (e.g., C5, C6, C7).

- Memory Optimized: Designed for memory-intensive tasks; instance types typically begin with R.

- Storage Optimized: Often indicated by instance types such as I, D, or H.

- Accelerated Computing: Equipped with GPUs (e.g., G and P families) for tasks like rendering or machine learning.

Which statement best describes accelerated computing instances?

- Accelerated computing instances are optimized for memory-intensive applications.

- They come with pre-installed animation software.

- They leverage hardware accelerators (GPUs) to perform computations more efficiently than CPUs.

- They are primarily designed for storage-intensive workloads.
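The naming convention for instance types (family letters, generation digit, optional attribute letters, then a size) can be decoded with a short parser. This is a minimal sketch that maps only the family letters listed in this lesson. Other families, such as X, Inf, or Trn, are reported as not covered rather than guessed.

```python
import re

# Only the family letters mentioned in this lesson; anything else is "not covered".
FAMILY_CATEGORIES = {
    "t": "General Purpose", "m": "General Purpose",
    "c": "Compute Optimized",
    "r": "Memory Optimized",
    "i": "Storage Optimized", "d": "Storage Optimized", "h": "Storage Optimized",
    "g": "Accelerated Computing", "p": "Accelerated Computing",
}

# family letters, generation digits, optional attribute letters, ".", size
_PATTERN = re.compile(r"^([a-z]+?)(\d+)([a-z-]*)\.([a-z0-9-]+)$")

def describe_instance_type(instance_type):
    """Split an instance type such as 'c7g.large' into its parts and category."""
    match = _PATTERN.match(instance_type.lower())
    if match is None:
        raise ValueError(f"unrecognized instance type: {instance_type}")
    family, generation, attributes, size = match.groups()
    return {
        "family": family,
        "generation": int(generation),
        "attributes": attributes,
        "size": size,
        "category": FAMILY_CATEGORIES.get(family, "not covered by this sketch"),
    }
```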

This lesson has covered key aspects of securing compute services on AWS—from understanding the shared responsibility model to configuring secure access for EC2, managing key pairs, leveraging Systems Manager, ensuring data encryption on EBS, handling instance storage correctly, and integrating advanced features such as Nitro TPM and Nitro Enclaves. By applying these best practices and understanding AWS’s security mechanisms, you can significantly enhance the security posture of your compute workloads. Happy architecting!