AWS Solutions Architect Associate Certification

Designing for Security

Turning up Security on Storage Services Part 3

In this lesson, we delve deeper into secure storage by exploring storage manipulation services. Beyond merely storing files, these services back up, replicate, and manage your data—actively enhancing both reliability and security.

AWS Backup

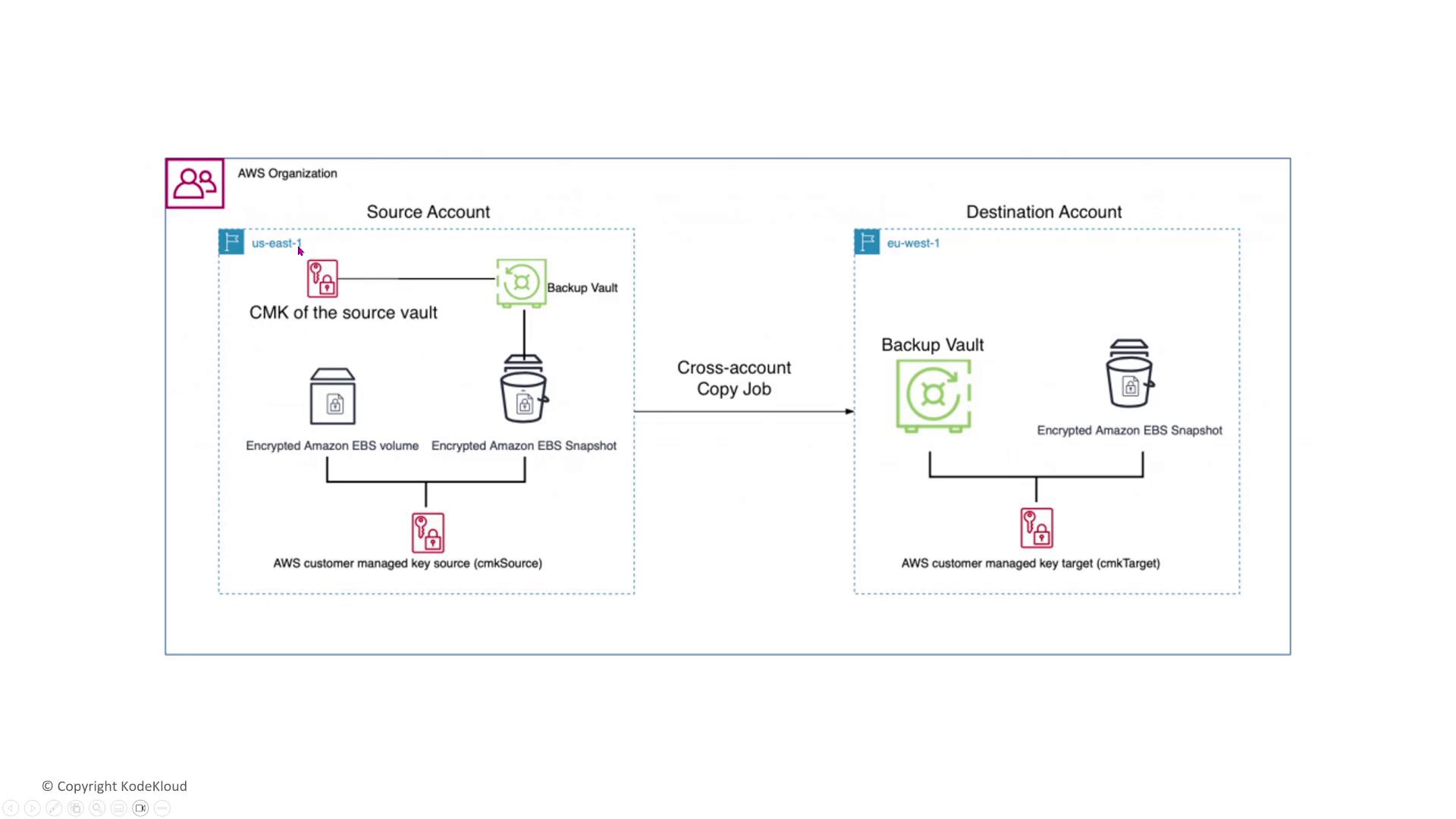

AWS Backup, launched in 2019, centralizes and automates backups across AWS services. For example, it can back up EBS volumes as snapshots encrypted with customer-managed keys. In a typical scenario, one AWS account backs up data to a vault, and copy jobs replicate those backups to an AWS Backup vault in another account or Region. For instance, backups taken in us-east-1 can be copied to eu-west-1.
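The copy scenario above can be sketched as the parameters for a copy job. This is a minimal illustration, not a complete implementation: the account IDs, vault names, role name, and snapshot ID are hypothetical placeholders, and the dictionary keys follow the parameter names of the AWS Backup `StartCopyJob` API as best understood here.

```python
def backup_vault_arn(region: str, account_id: str, vault_name: str) -> str:
    """Build a backup vault ARN in the standard AWS Backup ARN layout."""
    return f"arn:aws:backup:{region}:{account_id}:backup-vault:{vault_name}"


def copy_job_request(recovery_point_arn: str, source_vault: str,
                     dest_region: str, dest_account: str,
                     dest_vault: str, role_arn: str) -> dict:
    """Assemble parameters for a cross-account, cross-Region copy job."""
    return {
        "RecoveryPointArn": recovery_point_arn,
        "SourceBackupVaultName": source_vault,
        "DestinationBackupVaultArn": backup_vault_arn(
            dest_region, dest_account, dest_vault
        ),
        "IamRoleArn": role_arn,
    }


# Hypothetical values: source in us-east-1, destination in eu-west-1.
request = copy_job_request(
    recovery_point_arn="arn:aws:ec2:us-east-1::snapshot/snap-0123456789abcdef0",
    source_vault="primary-vault",
    dest_region="eu-west-1",
    dest_account="222222222222",
    dest_vault="dr-vault",
    role_arn="arn:aws:iam::111111111111:role/ExampleBackupRole",
)
# With boto3 installed and credentials configured, this dictionary could be
# passed as boto3.client("backup").start_copy_job(**request).
```

The destination vault's access policy must also allow the source account to copy into it; that policy is outside the scope of this sketch.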

AWS Backup is secure by design, but for added protection, consider these best practices:

- Encrypt all items stored in the backup vault.

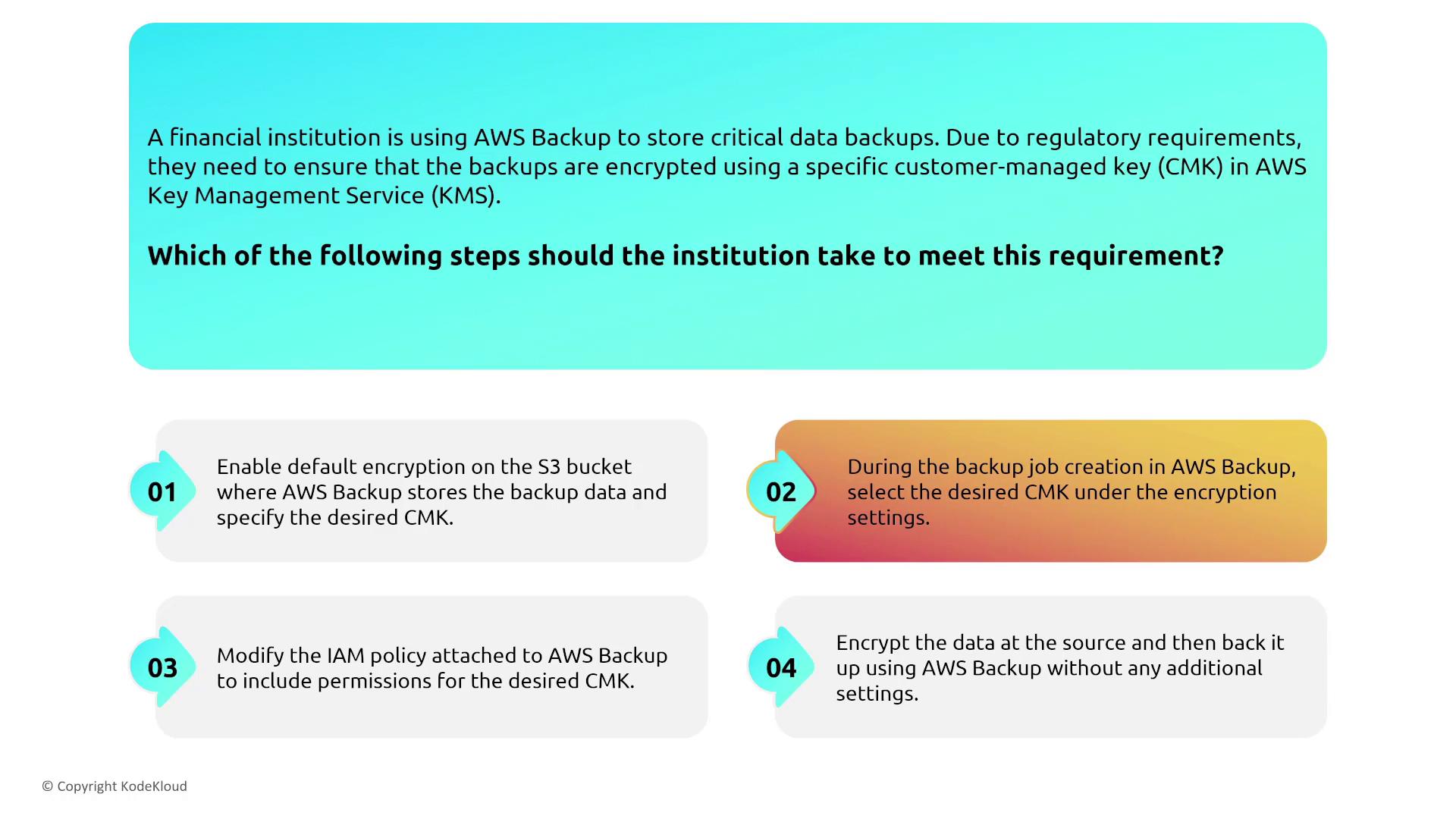

- Configure the backup job’s encryption settings to use a specific AWS Key Management Service (KMS) customer-managed key (CMK) when required by regulatory standards.

- Avoid relying solely on the default S3 encryption (SSE-S3) if a CMK is necessary; instead, opt for SSE-KMS.

- Adjust IAM policies for AWS Backup to grant the proper permissions over the CMK. Note that this does not automatically attach the CMK to your backup job.
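To illustrate that granting permissions and selecting the key are separate steps, the sketch below builds both pieces as plain dictionaries. The key ARN, vault name, and statement ID are hypothetical, and the list of KMS actions is an assumption; the exact set needed depends on the resource types being backed up.

```python
import json


def kms_policy_for_backup_role(key_arn: str) -> dict:
    """IAM policy document letting a backup role use one specific CMK.

    The action list is an illustrative assumption, not an authoritative set.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowBackupRoleToUseCmk",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": [
                    "kms:Decrypt",
                    "kms:GenerateDataKey",
                    "kms:DescribeKey",
                    "kms:CreateGrant",
                ],
                "Resource": key_arn,
            }
        ],
    }


def vault_request(vault_name: str, key_arn: str) -> dict:
    """Parameters that actually attach the CMK to a new backup vault."""
    return {"BackupVaultName": vault_name, "EncryptionKeyArn": key_arn}


# Hypothetical key ARN used for both steps.
key_arn = "arn:aws:kms:us-east-1:111111111111:key/EXAMPLE-KEY-ID"
policy = kms_policy_for_backup_role(key_arn)   # step 1: permissions only
vault_params = vault_request("regulated-vault", key_arn)  # step 2: select the key
policy_json = json.dumps(policy, indent=2)
```

The policy alone changes nothing about how backups are encrypted; the key takes effect only where it is named explicitly, here in `EncryptionKeyArn`.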

Example for Regulatory Compliance

For example, a financial institution that needs to comply with regulatory requirements can select the desired CMK during job creation to enforce encryption at both the source and in the backup vault.

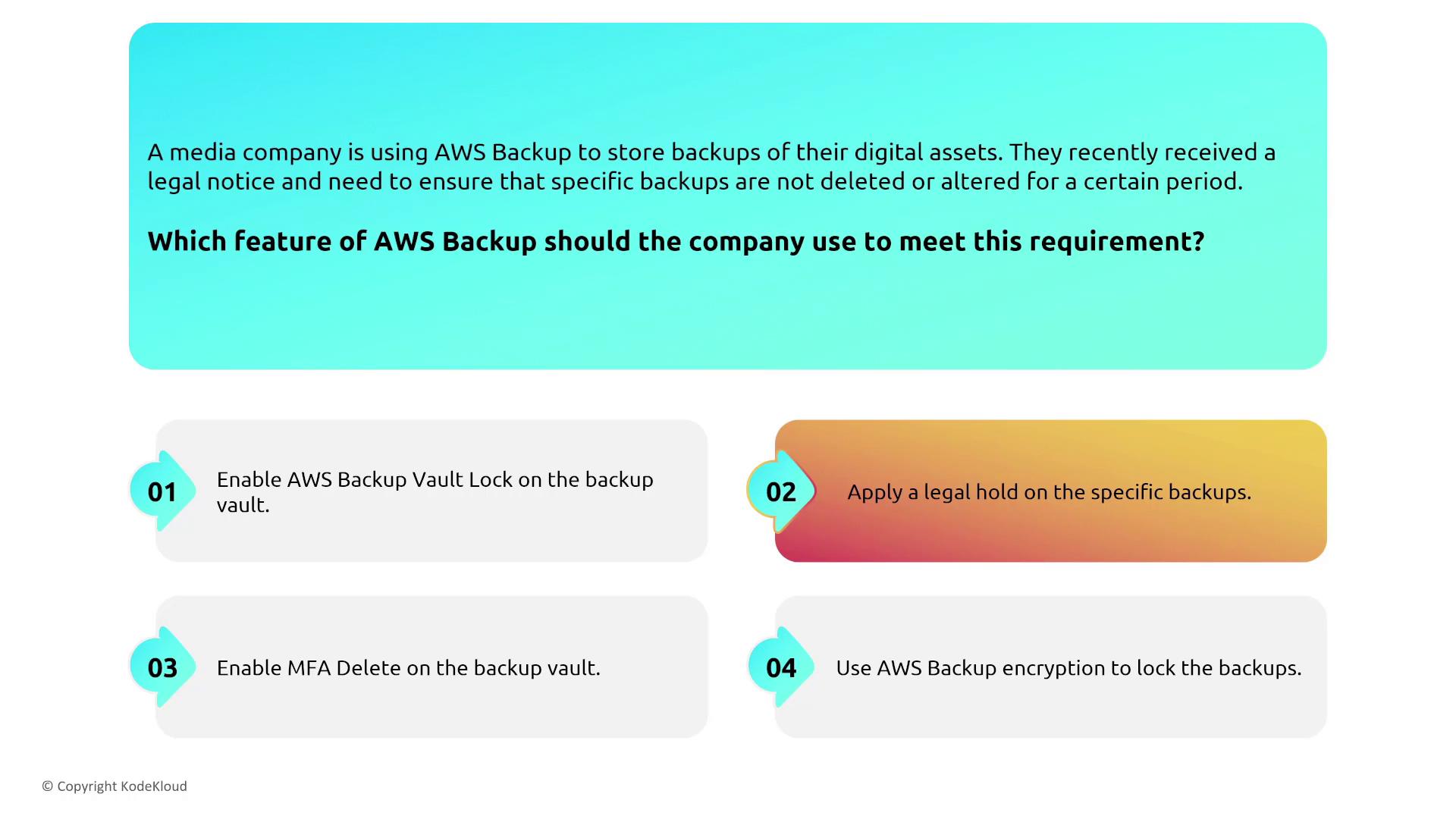

Legal Holds and Vault Locks

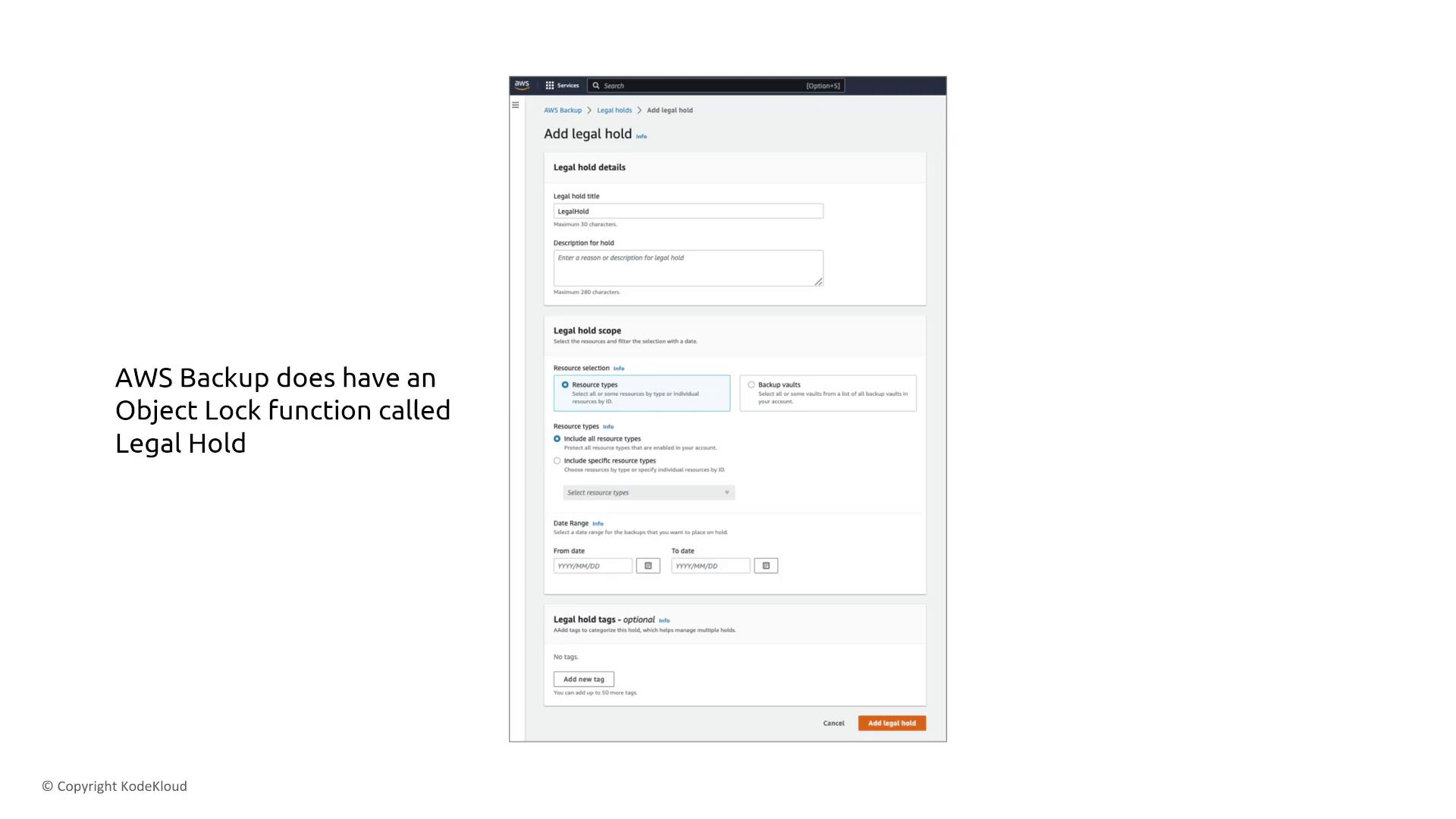

Imagine a media company that receives a legal notice requiring certain backups to remain unaltered for a specified period. AWS Backup provides two mechanisms to support this requirement:

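- Legal Hold: Prevents the backups it covers from being deleted until an authorized user releases the hold. A single hold can span multiple vaults and resource types across the AWS Backup deployment.

- Vault Lock: Secures a single backup vault with write-once, read-many (WORM) controls in either governance or compliance mode, preventing deletion of backups and changes to their retention.

While a legal hold spans multiple vaults, a vault lock functions similarly to S3 Object Lock but is confined to one vault.

For scenarios such as a pharmaceutical company needing to lock data for seven years, even from the root user, use a vault lock in compliance mode on each vault involved; once its cooling-off period ends, no user can remove the lock. A legal hold is the better fit when backups across several vaults must be preserved for an open-ended period, because users with sufficient permissions can release it.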
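As a rough sketch of the seven-year case, the helper below assembles vault lock parameters. The vault name is hypothetical, the year-to-day conversion ignores leap days, and the keys follow the `PutBackupVaultLockConfiguration` parameter names as understood here, where supplying `ChangeableForDays` starts the cooling-off period that leads to compliance mode.

```python
from typing import Optional

MIN_COOLING_OFF_DAYS = 3  # assumed minimum for ChangeableForDays


def vault_lock_request(vault_name: str, retention_years: int,
                       cooling_off_days: Optional[int] = None) -> dict:
    """Build vault lock parameters for a minimum retention period.

    Without cooling_off_days the lock stays in governance mode and can be
    removed by users with sufficient permissions. With it, the lock becomes
    immutable (compliance mode) once the cooling-off period expires.
    """
    params = {
        "BackupVaultName": vault_name,
        "MinRetentionDays": retention_years * 365,  # approximation, no leap days
    }
    if cooling_off_days is not None:
        if cooling_off_days < MIN_COOLING_OFF_DAYS:
            raise ValueError("cooling-off period must be at least 3 days")
        params["ChangeableForDays"] = cooling_off_days
    return params


# Hypothetical vault locked for seven years in compliance mode.
compliance_lock = vault_lock_request("clinical-trials-vault", 7, cooling_off_days=3)
# Same retention in governance mode (no cooling-off period supplied).
governance_lock = vault_lock_request("clinical-trials-vault", 7)
```

With boto3, either dictionary could be passed to `put_backup_vault_lock_configuration`; test the governance form first, since a compliance lock cannot be undone after the cooling-off period.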

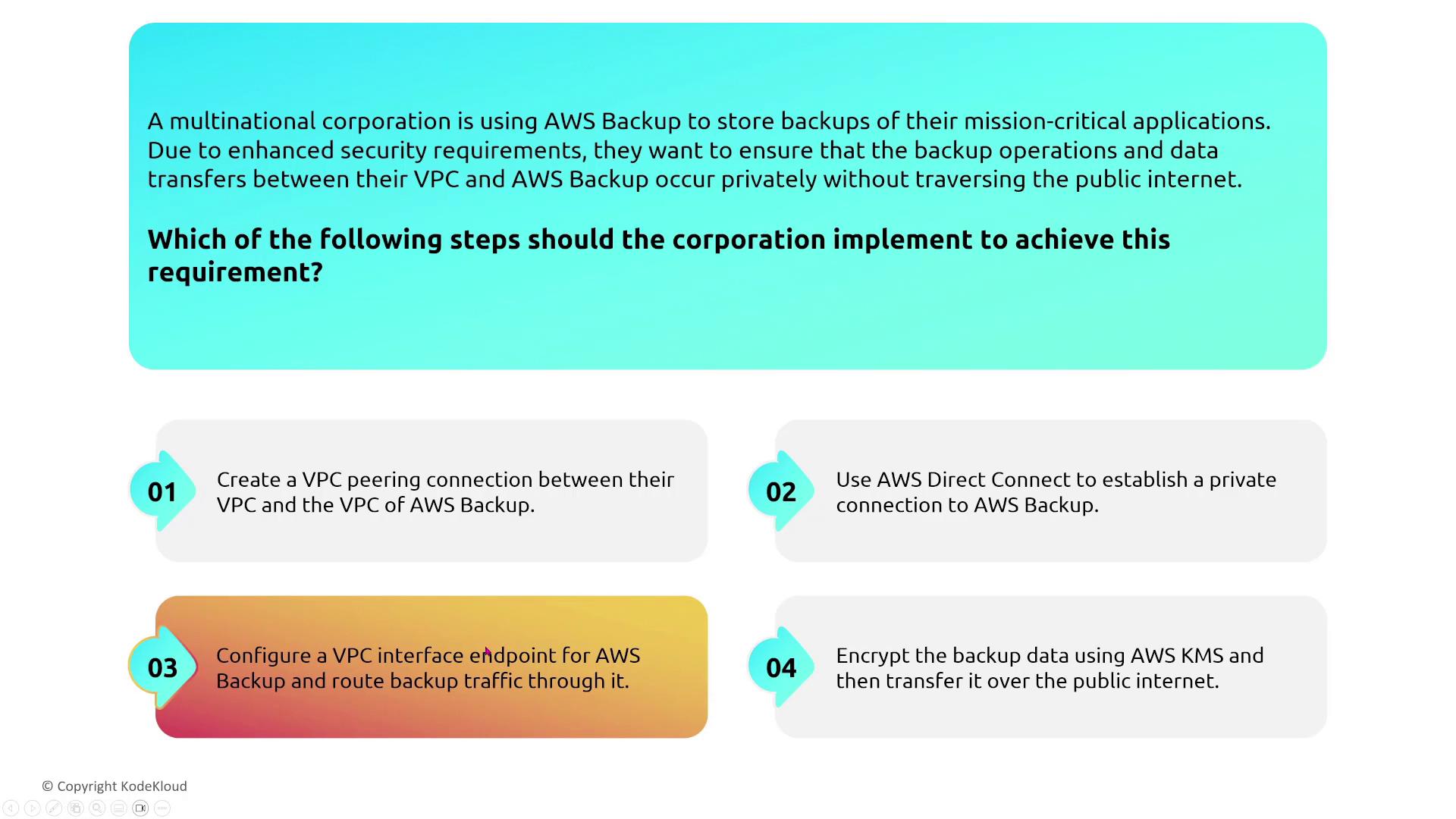

Securing Data Transfers with VPC Interface Endpoints

AWS Backup traffic can be secured using VPC interface endpoints. These endpoints assign an IP address from your subnet, ensuring that traffic flows over AWS’s private backbone instead of the public internet. This configuration is particularly beneficial for multinational corporations that require secure backup operations between their VPCs and AWS Backup.

The recommended approach is to configure a VPC interface endpoint for AWS Backup. Keep in mind that only S3 and DynamoDB support gateway endpoints; all other services, including AWS Backup, require interface endpoints.
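The endpoint choice can be sketched as follows. The VPC, subnet, and security group IDs are hypothetical placeholders, and the dictionary keys mirror the EC2 `CreateVpcEndpoint` parameters as understood here.

```python
# Services that offer gateway endpoints; everything else needs an interface endpoint.
GATEWAY_ENDPOINT_SERVICES = {"s3", "dynamodb"}


def supports_gateway_endpoint(service: str) -> bool:
    """Return True only for the two services with gateway endpoints."""
    return service.lower() in GATEWAY_ENDPOINT_SERVICES


def backup_interface_endpoint_request(region: str, vpc_id: str,
                                      subnet_ids: list,
                                      security_group_ids: list) -> dict:
    """Assemble parameters for an interface endpoint to AWS Backup."""
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.backup",
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
        # Resolve the regular service hostname to the endpoint's private IPs.
        "PrivateDnsEnabled": True,
    }


# Hypothetical identifiers for illustration only.
endpoint_request = backup_interface_endpoint_request(
    region="eu-west-1",
    vpc_id="vpc-0example",
    subnet_ids=["subnet-0examplea", "subnet-0exampleb"],
    security_group_ids=["sg-0example"],
)
# With boto3: boto3.client("ec2").create_vpc_endpoint(**endpoint_request)
```

The attached security group needs to allow inbound HTTPS (port 443) from the resources that call AWS Backup.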

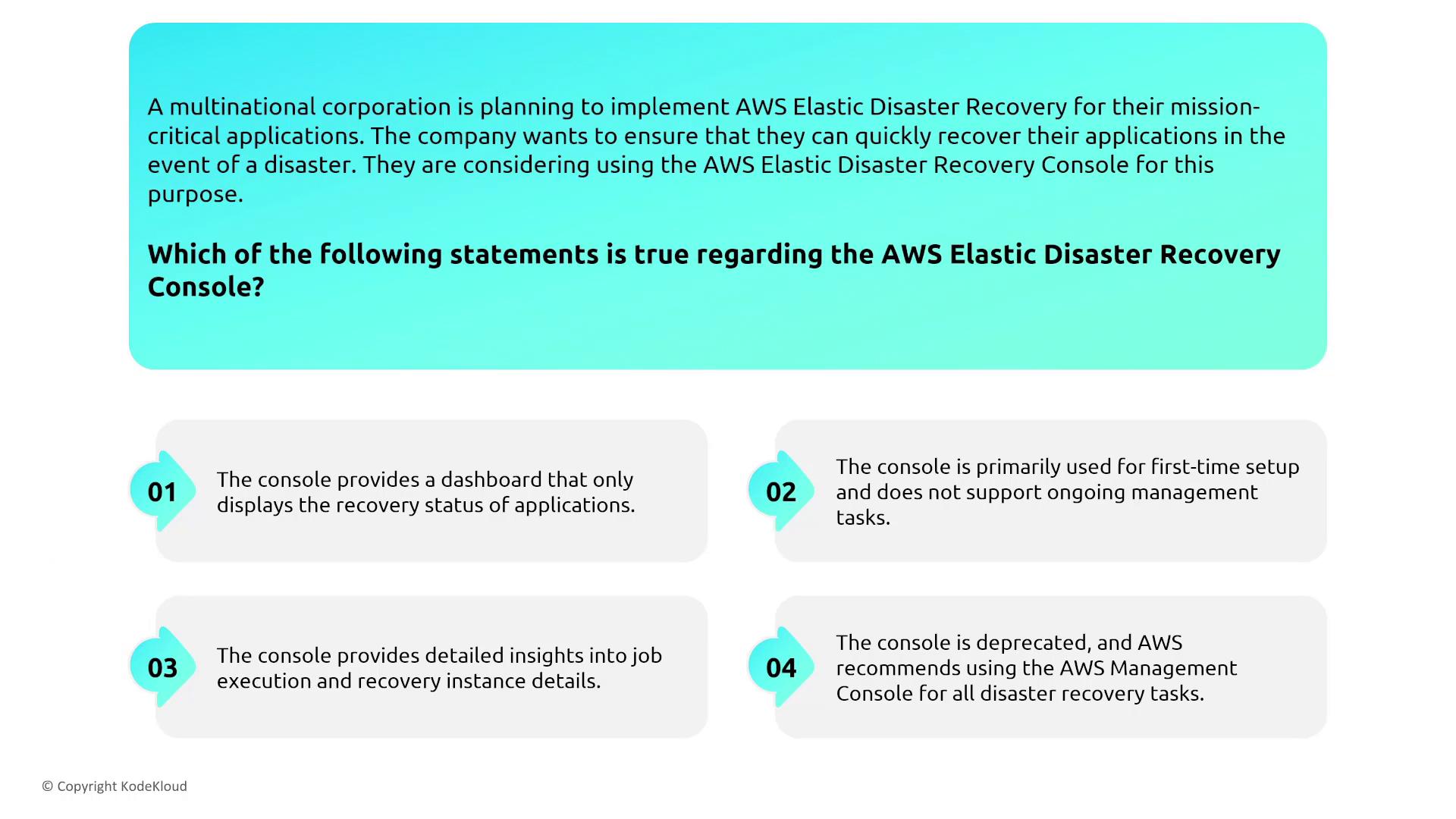

Elastic Disaster Recovery (EDR)

Formerly known as CloudEndure Disaster Recovery, AWS Elastic Disaster Recovery (called EDR in this lesson; AWS documentation abbreviates it as AWS DRS) replicates storage from any location, including non-AWS environments, into AWS on a block-by-block basis. Because replication is continuous, recovery can happen in near real time, which is essential for mission-critical applications during a disaster.

The EDR console is a dedicated interface that provides detailed insights into job executions, recovery instance details, and overall disaster recovery operations. It is used during both the initial setup and subsequent management phases.

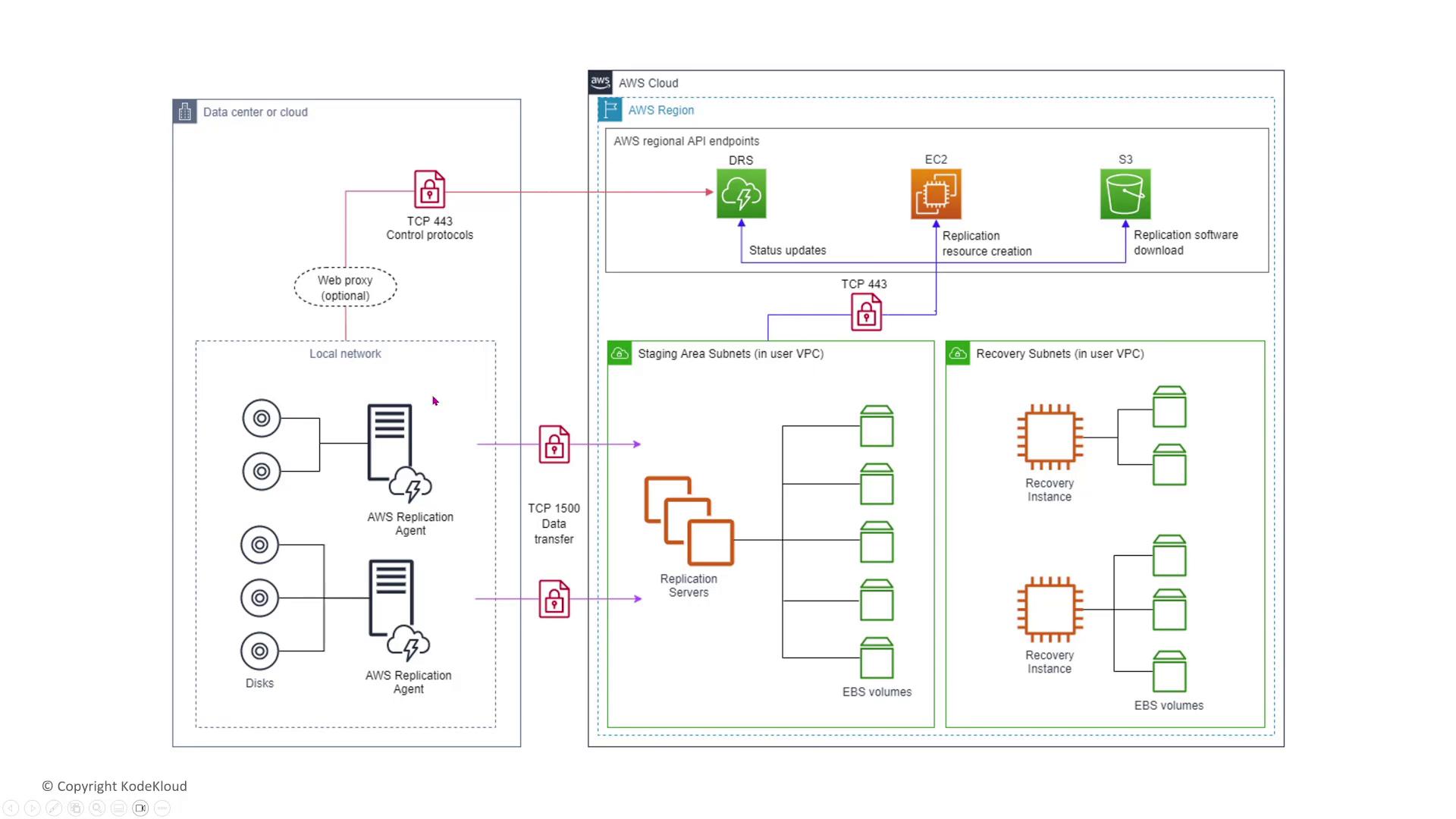

A typical EDR process involves:

- Data sourced from an external data center (or another cloud provider) is identified.

- An AWS Replication Agent installed at the source transfers the data to replication servers.

- Replication servers pre-stage the data in volumes before copying it to the target virtual machines in AWS.

- Synchronization is maintained on a block-by-block basis, ensuring that even the smallest changes are securely replicated.
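The idea of block-by-block synchronization can be illustrated with a toy example. This is not how the AWS Replication Agent is implemented; it is only a sketch of block-level change detection, with an arbitrary block size and hypothetical function names.

```python
import hashlib

BLOCK_SIZE = 8  # tiny block size so the example is easy to follow


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Split a byte string into fixed-size blocks (the last may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def changed_blocks(source: bytes, replica: bytes,
                   block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return only the source blocks that differ from the replica, keyed by index."""
    src = split_blocks(source, block_size)
    dst = split_blocks(replica, block_size)
    changes = {}
    for index, block in enumerate(src):
        is_new = index >= len(dst)
        if is_new or hashlib.sha256(block).digest() != hashlib.sha256(dst[index]).digest():
            changes[index] = block
    return changes


def apply_changes(replica: bytes, changes: dict[int, bytes],
                  source_length: int, block_size: int = BLOCK_SIZE) -> bytes:
    """Patch the replica with the changed blocks and trim it to the source length."""
    blocks = split_blocks(replica, block_size)
    for index, block in sorted(changes.items()):
        if index < len(blocks):
            blocks[index] = block
        else:
            blocks.append(block)
    return b"".join(blocks)[:source_length]


source = b"A" * 8 + b"B" * 8 + b"C" * 8
replica = b"A" * 8 + b"X" * 8 + b"C" * 8   # the middle block is stale
delta = changed_blocks(source, replica)     # only block 1 needs to be sent
synced = apply_changes(replica, delta, len(source))
```

Only the one stale block crosses the wire here, which is why block-level replication keeps bandwidth low once the initial sync has finished.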

For example, a healthcare organization using EDR might conduct a failover test and then perform a "failback" to restore systems to the primary environment after a disaster.

Storage Gateway

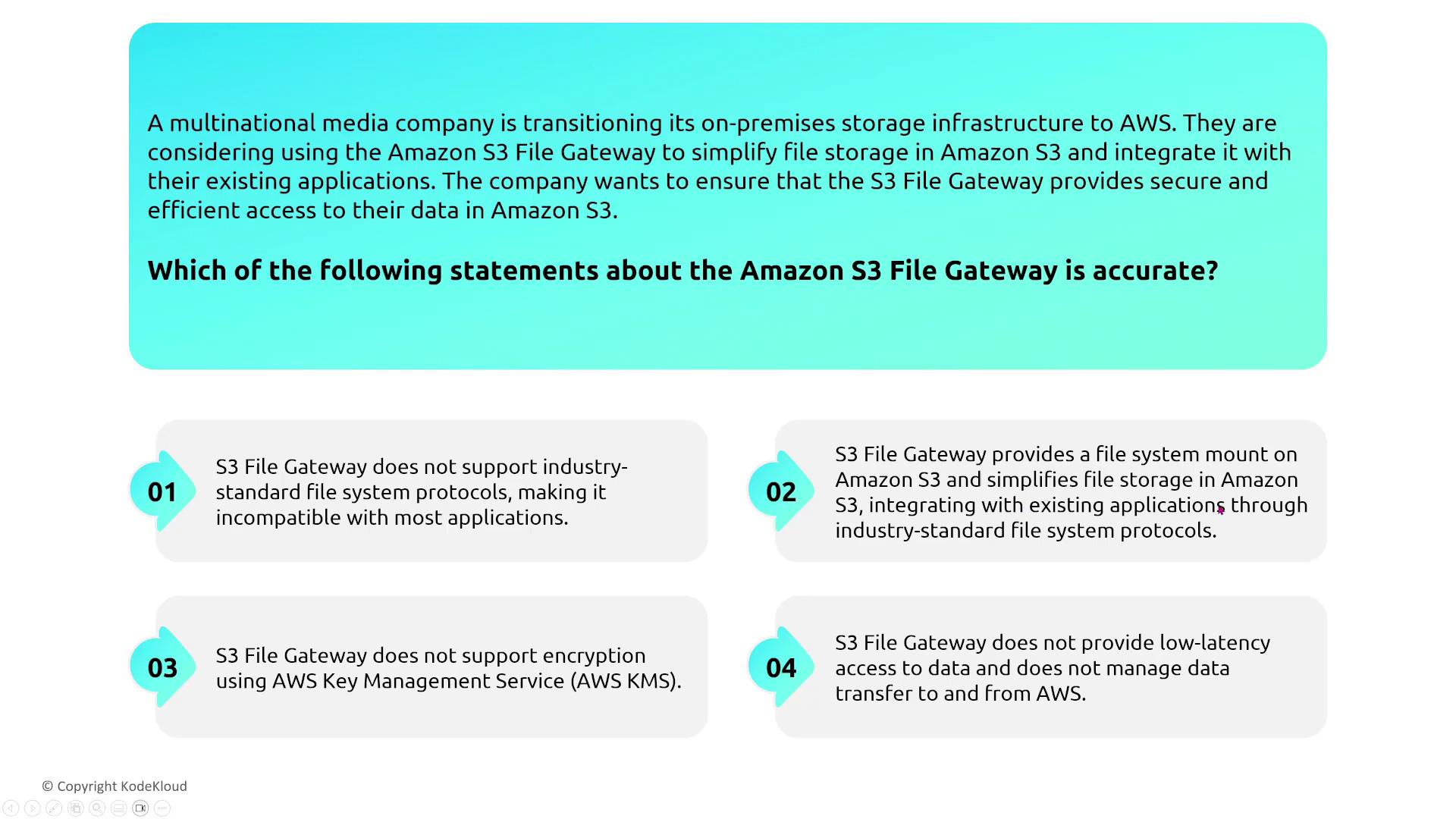

Storage Gateway is a longstanding AWS service that integrates on-premises environments with AWS cloud storage. It is available in several modes:

- S3 File Gateway: Provides a file system mount on Amazon S3 that supports standard file system protocols (NFS and SMB) with encryption enabled by default.

- Volume Gateway: Offers iSCSI-based storage volumes. Volume Gateway can operate in:

  - Cached Mode: Frequently accessed data is stored on-premises, while the complete dataset remains in Amazon S3.

  - Stored Mode: The entire dataset is stored locally and in Amazon S3, with asynchronous backups.

- Tape Gateway: Emulates a physical tape backup infrastructure using a virtual tape library (VTL), storing backups in S3 and optionally archiving them in the S3 Glacier storage classes.

- FSx File Gateway: Specifically designed for Windows-based file systems using SMB. This gateway integrates with FSx for Windows File Server and supports on-premises caching with SSDs for optimal performance.

S3 File Gateway

A media company considering an S3 File Gateway will benefit from industry-standard file protocols that simplify integration with existing applications while ensuring data encryption.

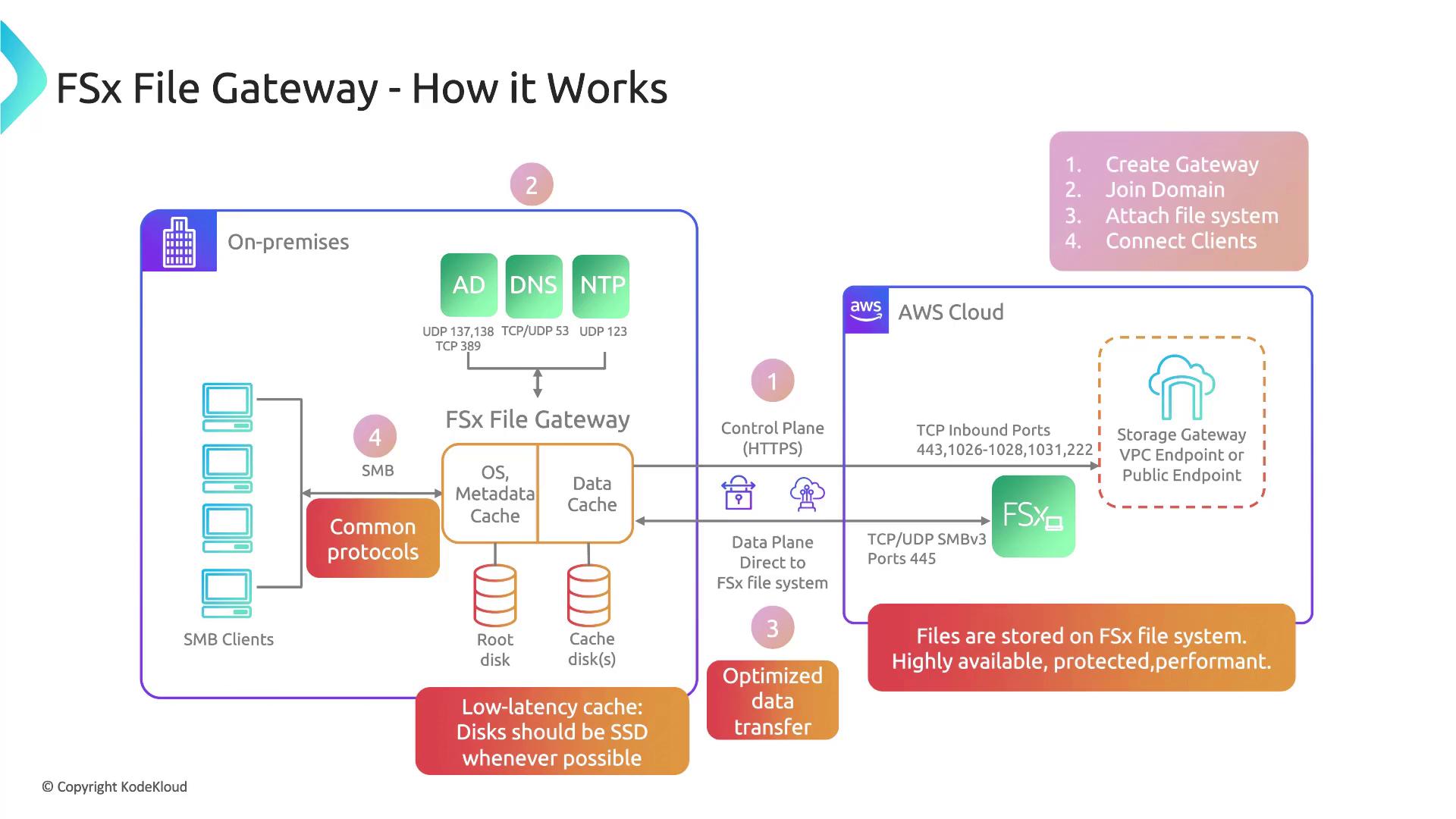

FSx File Gateway

The FSx File Gateway enables low-latency access to Windows file systems via the SMB protocol. It connects on-premises systems to an FSx for Windows File Server in the cloud and uses both data and metadata caches to optimize performance. Successful implementation requires proper setup of components such as DNS, time servers, and secure VPC endpoints.

This gateway can be deployed on-premises as a virtual machine using standard hypervisors (e.g., ESXi, Hyper-V, or KVM) and can join a Microsoft domain, ensuring seamless integration with enterprise environments.

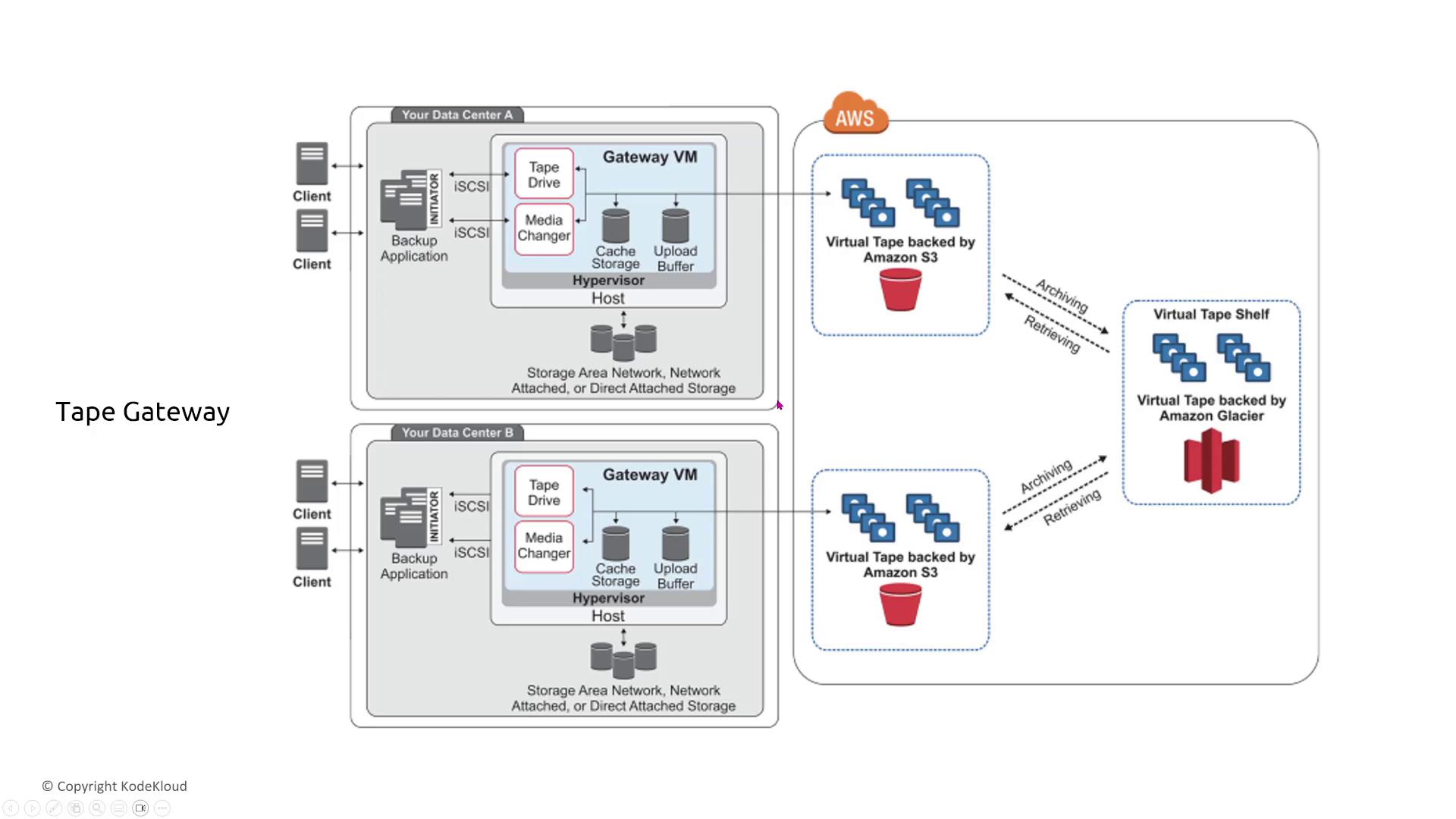

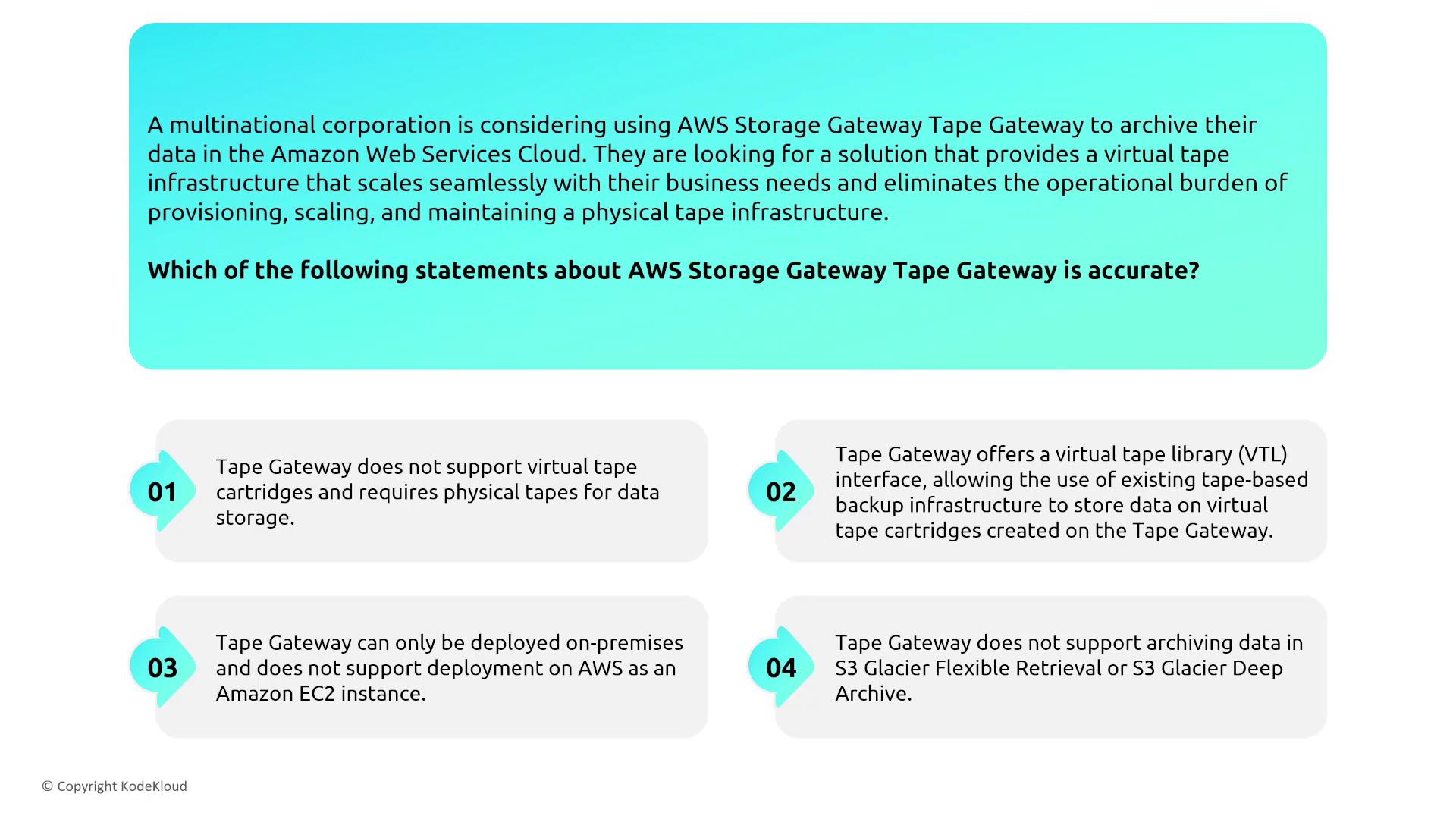

Tape Gateway

Tape Gateway replaces traditional tape backup infrastructure with a virtual tape library (VTL). Data from on-premises backup applications is transmitted via iSCSI to the tape gateway appliance, which then stores virtual tapes in S3 or archives them in the S3 Glacier storage classes.

When evaluating Tape Gateway, consider the following features:

- Supports a virtual tape library (VTL) interface.

- Seamlessly integrates with your existing backup infrastructure.

- Can be deployed virtually on-premises or as an EC2 instance.

- Fully supports archiving with both S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive.

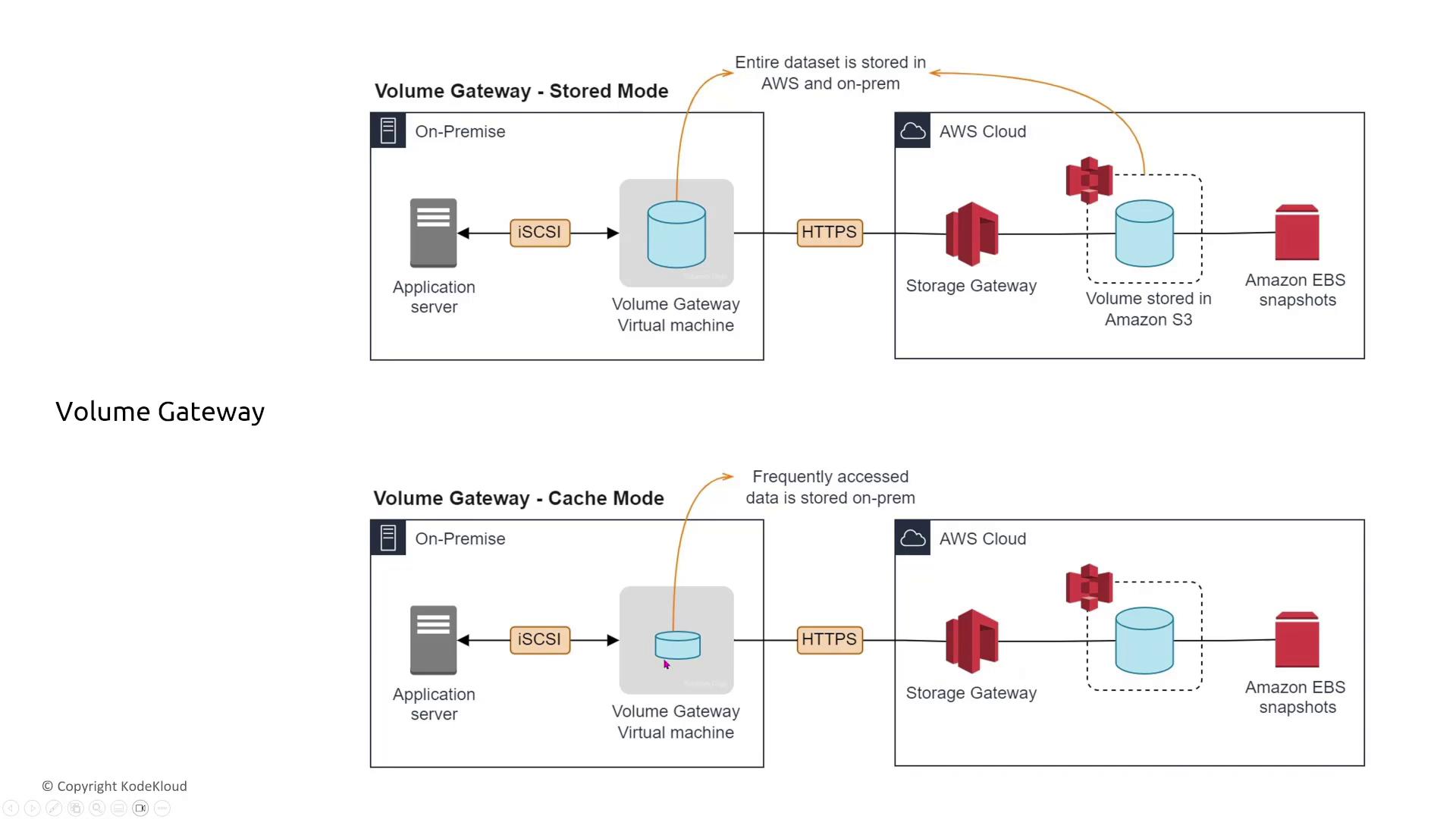

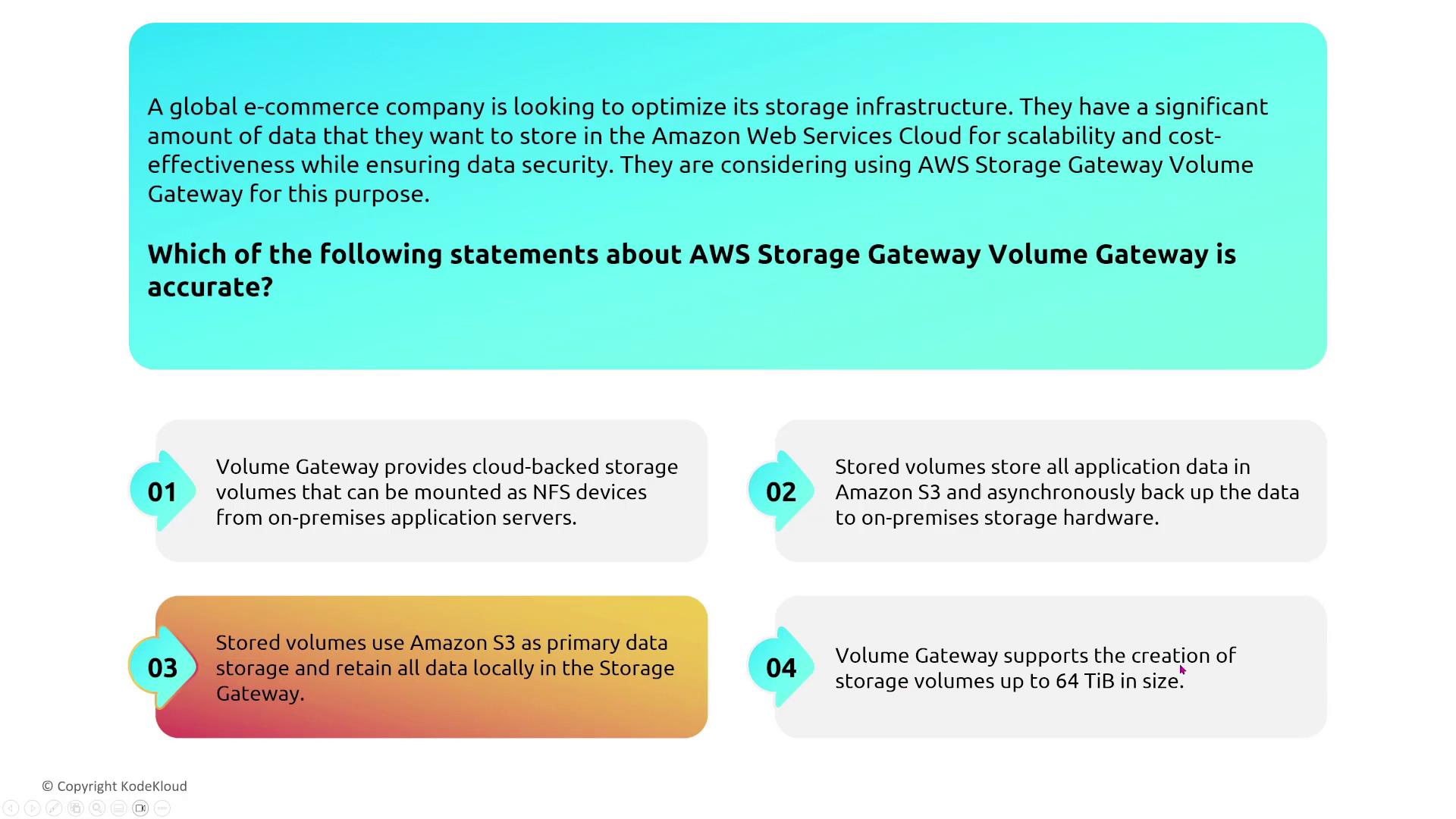

Volume Gateway

Volume Gateway delivers cloud-backed iSCSI storage for on-premises servers, configurable in two modes:

- Cached Mode: Frequently accessed data is cached on-premises while the complete dataset remains in Amazon S3.

- Stored Mode: The entire dataset is maintained locally as well as in Amazon S3 with asynchronous backups.

This gateway is engineered for fast provisioning and efficient snapshot creation, ensuring encryption both in transit and at rest.

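For example, a global e-commerce company that needs a complete local copy of its data for low-latency access might choose Volume Gateway in stored mode, where the on-premises copy is primary and point-in-time snapshots are backed up asynchronously to S3. If the priority is instead to keep primary data in S3 and minimize local storage, cached mode is the better fit. Volume size limits differ by mode: per the AWS quotas at the time of writing, a cached volume can be up to 32 TiB and a stored volume up to 16 TiB, so verify the current limits before sizing.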
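A small sizing check can make the mode trade-off concrete. The limits below (32 TiB per cached volume, 16 TiB per stored volume) are assumptions taken from the published Storage Gateway quotas at the time of writing and may change; the function name is hypothetical.

```python
TIB = 1024 ** 4  # bytes in one tebibyte

# Assumed per-volume limits; confirm against the current AWS quotas.
MAX_VOLUME_BYTES = {
    "cached": 32 * TIB,  # primary data in S3, hot data cached on-premises
    "stored": 16 * TIB,  # primary data on-premises, snapshots sent to S3
}


def validate_volume(mode: str, size_bytes: int) -> bool:
    """Return True if a volume of this size fits the assumed limit for the mode."""
    if mode not in MAX_VOLUME_BYTES:
        raise ValueError(f"unknown Volume Gateway mode: {mode!r}")
    if size_bytes <= 0:
        raise ValueError("volume size must be positive")
    return size_bytes <= MAX_VOLUME_BYTES[mode]


# A 20 TiB volume fits cached mode but exceeds the assumed stored-mode limit.
fits_cached = validate_volume("cached", 20 * TIB)
fits_stored = validate_volume("stored", 20 * TIB)
```

A dataset larger than one volume's limit can still be spread across several volumes on the same gateway.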

Summary

- This module focused on storage services that enable manipulation of data through backup, replication, and disaster recovery.

- Newer AWS storage services include robust logging and built-in data-at-rest protection by default.

- Nearly all storage services support encryption at rest through AWS KMS, using either AWS-managed or customer-managed keys.

- AWS provides monitoring through CloudWatch, with additional oversight from CloudTrail (API activity), AWS Config (configuration changes), and Trusted Advisor, whose full set of checks requires a Business-tier or higher support plan.

Thank you for joining this comprehensive 90-minute discussion on storage services. In upcoming sessions, we will explore additional topics, including compute services.

Happy securing your data!
