Demo: Kinesis Real-Time Consumption and Production

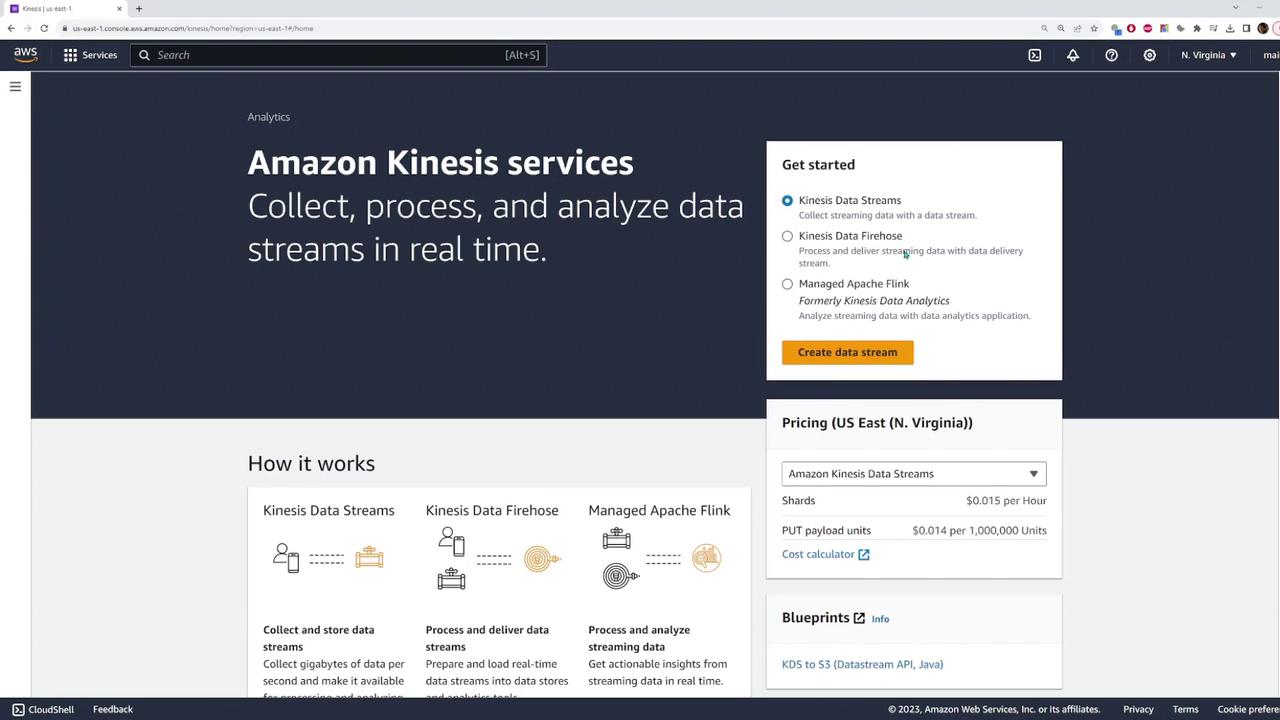

In this lesson, you'll learn how to work with Amazon Kinesis by sending dummy data (simulated crypto or stock trading prices) to a Kinesis data stream. A Kinesis Data Firehose delivery stream then reads from the data stream and delivers the records to an S3 bucket. Follow the steps and diagrams below to set up your streaming data pipeline.

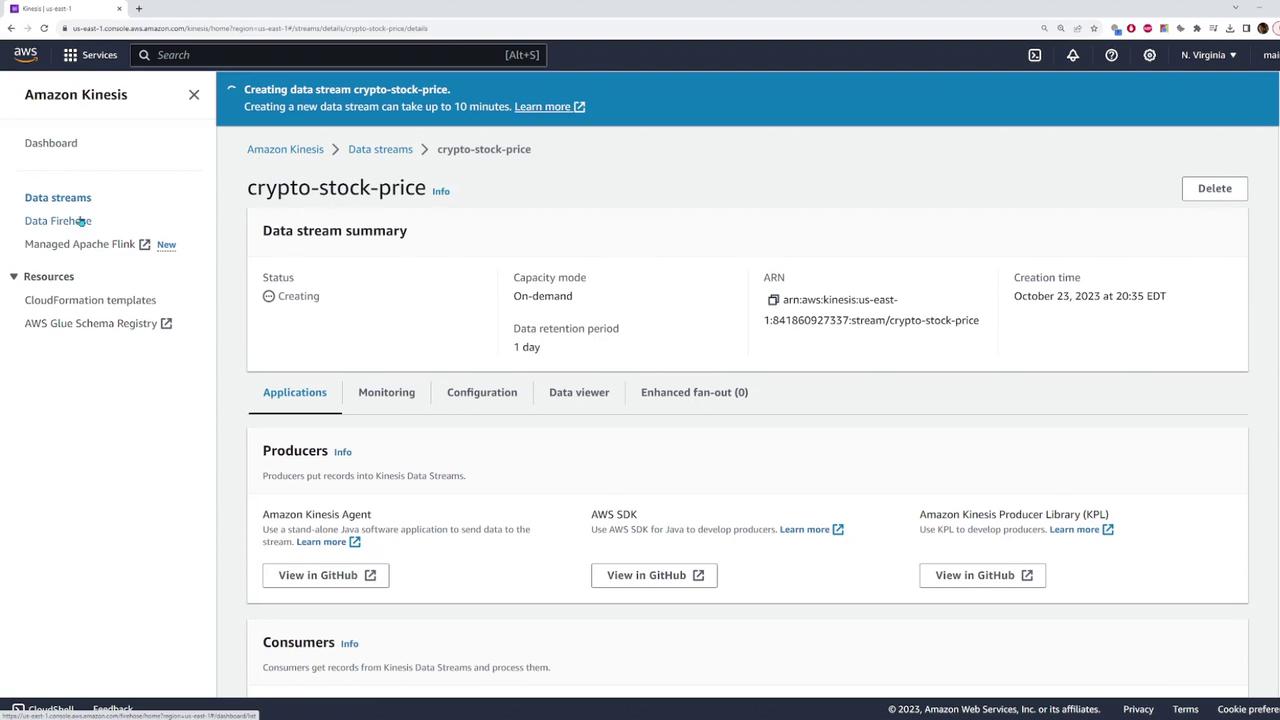

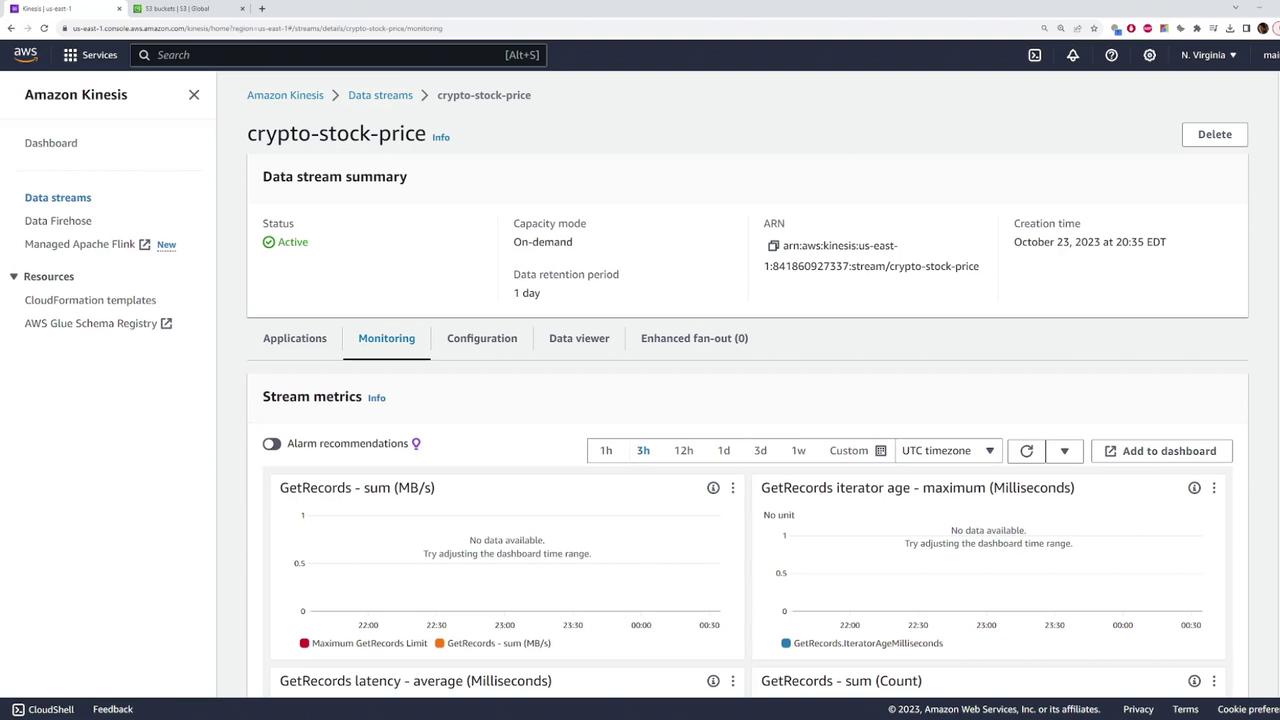

Creating a Kinesis Data Stream

Start by navigating to the Kinesis service page in the AWS console and creating a new data stream.

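Enter a stream name (for example, "crypto-stock-price", the name used by the sample code later in this lesson). Stream names cannot contain spaces; only letters, numbers, hyphens, underscores, and periods are allowed.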

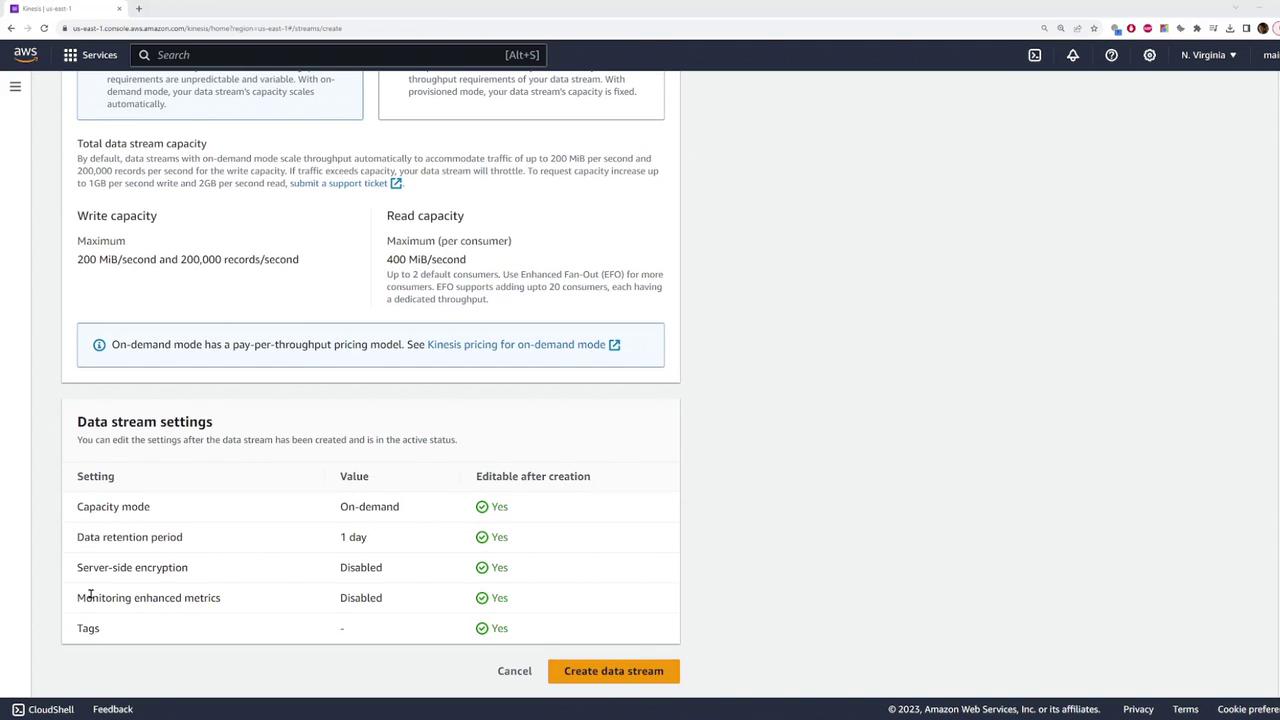

Choose the capacity mode:

- Select Provisioned if you know your overall throughput requirements; you then specify the number of shards yourself.

- Select On-Demand to let Kinesis scale capacity automatically as traffic changes.

Pricing Consideration

Note that On-Demand may cost more than Provisioned capacity, particularly when traffic is steady and predictable.
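If you choose Provisioned mode, a rough shard estimate can help. The sketch below assumes the commonly documented per-shard limits (about 1 MB/s or 1,000 records/s for writes, about 2 MB/s for reads); `estimateShards` is a hypothetical helper written for this lesson, not part of any AWS SDK, and the 50-byte record size is an assumption about the demo payload.

```javascript
// Approximate per-shard limits for a provisioned Kinesis data stream.
const WRITE_BYTES_PER_SHARD = 1_000_000; // about 1 MB/s ingest
const WRITE_RECORDS_PER_SHARD = 1000; // 1,000 records/s ingest
const READ_BYTES_PER_SHARD = 2_000_000; // about 2 MB/s shared read throughput

// Hypothetical helper: returns the smallest shard count that satisfies
// the write-bytes, write-records, and read-bytes limits at the same time.
function estimateShards({ recordsPerSecond, avgRecordBytes, consumers = 1 }) {
  const writeBytes = recordsPerSecond * avgRecordBytes;
  const readBytes = writeBytes * consumers; // each consumer reads every record
  return Math.max(
    1,
    Math.ceil(writeBytes / WRITE_BYTES_PER_SHARD),
    Math.ceil(recordsPerSecond / WRITE_RECORDS_PER_SHARD),
    Math.ceil(readBytes / READ_BYTES_PER_SHARD)
  );
}

// The demo producer sends one small record every 50 ms (20 records/s).
console.log(estimateShards({ recordsPerSecond: 20, avgRecordBytes: 50 })); // 1
```

Under these assumptions the demo workload fits comfortably in a single shard.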

Configure additional stream settings as required.

After configuring your settings, click Create data stream to complete this step.
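The same stream can also be created from code instead of the console. The following is a minimal sketch using `CreateStreamCommand` from the AWS SDK for JavaScript v3; the helper names `buildCreateStreamInput` and `createStream` are hypothetical, and the SDK is loaded lazily so the request builder can be inspected without credentials.

```javascript
// Build the CreateStream request. ShardCount only applies in PROVISIONED mode.
function buildCreateStreamInput(streamName, mode = "ON_DEMAND", shardCount = 1) {
  const input = {
    StreamName: streamName,
    StreamModeDetails: { StreamMode: mode },
  };
  if (mode === "PROVISIONED") {
    input.ShardCount = shardCount;
  }
  return input;
}

// Sends the request. Requires the @aws-sdk/client-kinesis package and
// AWS credentials; region and credentials come from your AWS configuration.
async function createStream(input) {
  const { KinesisClient, CreateStreamCommand } = await import("@aws-sdk/client-kinesis");
  const client = new KinesisClient({});
  return client.send(new CreateStreamCommand(input));
}

// Inspect the request without calling AWS.
console.log(buildCreateStreamInput("crypto-stock-price", "PROVISIONED", 1));

// To actually create the stream:
// createStream(buildCreateStreamInput("crypto-stock-price")).then(console.log);
```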

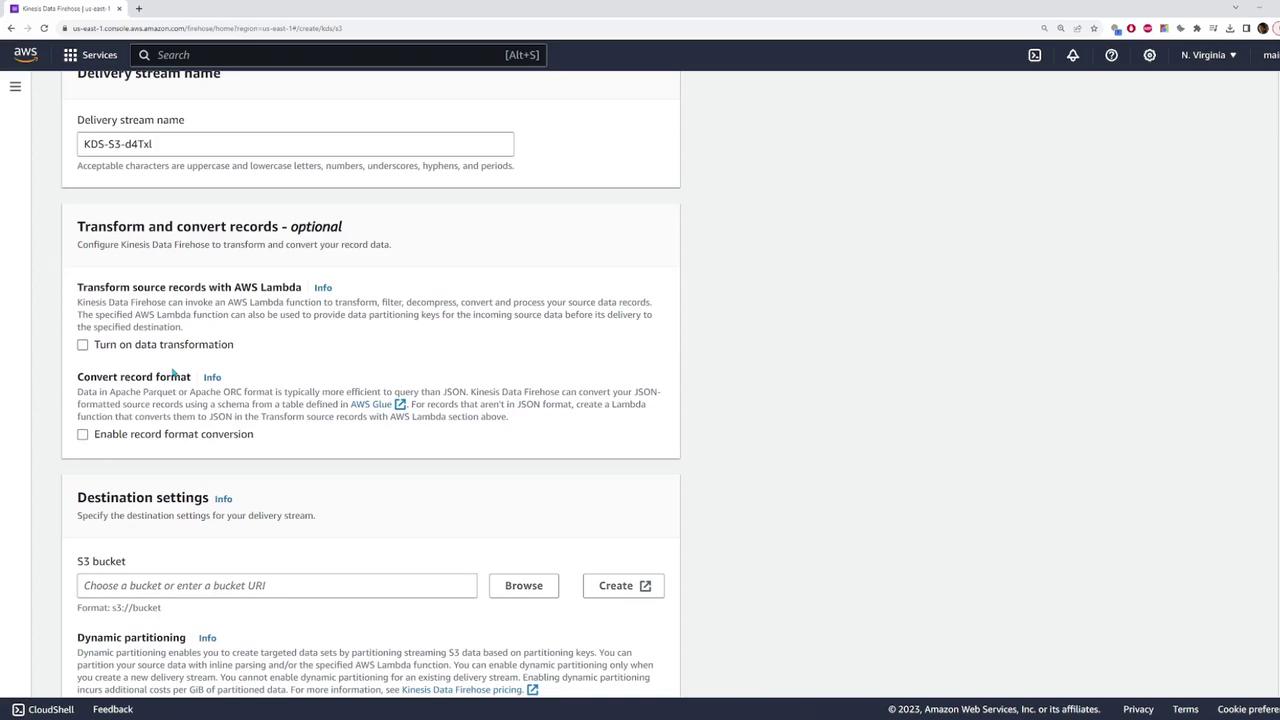

Configuring the Kinesis Data Firehose

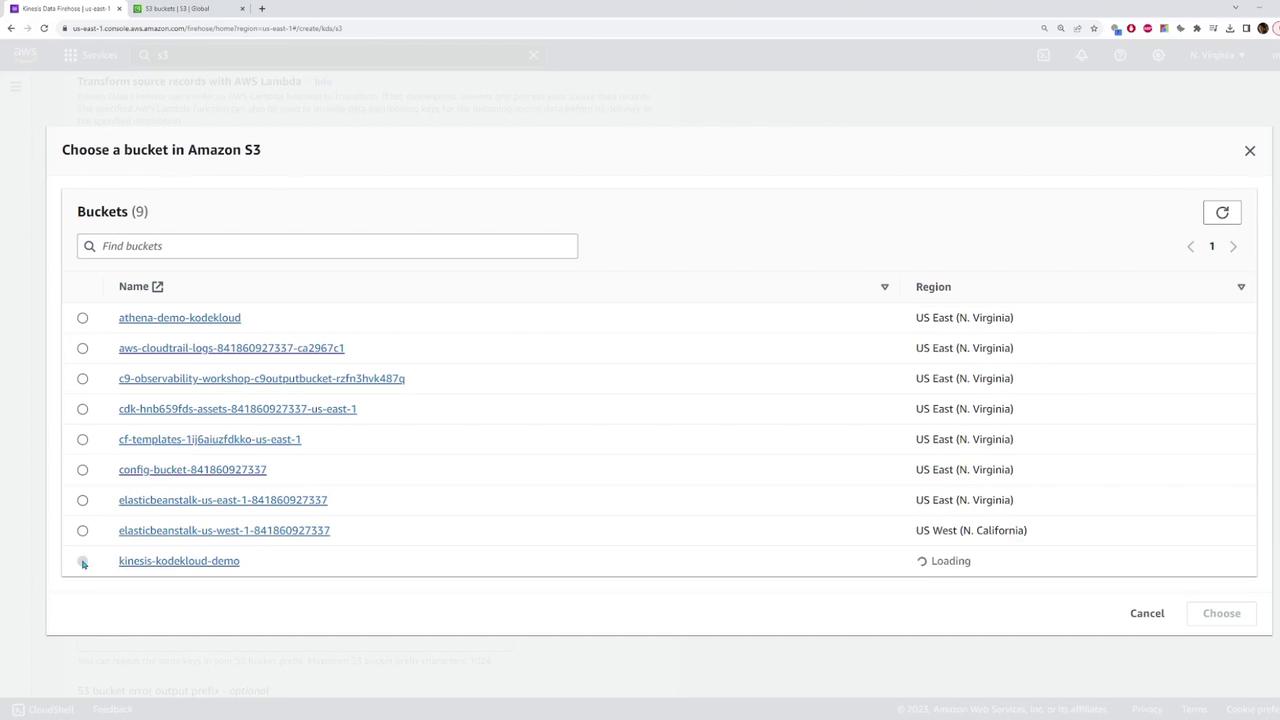

Next, configure a Kinesis Data Firehose to channel data from the Kinesis data stream to an S3 bucket.

- Choose the data stream you just created as your source.

- For the destination, select or create an S3 bucket. In this example, a new bucket named "kinesis-code-cloud-demo" is created (S3 bucket names must be lowercase and cannot contain spaces).

- Optionally, enable data transformation by attaching a Lambda function. For this demo, leave the transformation settings at their defaults.

- Review your settings and create the delivery stream.
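For reference, the console choices above correspond roughly to a `CreateDeliveryStreamCommand` request in the AWS SDK for JavaScript v3. This is a sketch under stated assumptions: every name and ARN below (the delivery stream name, the account ID 123456789012, the region, and the IAM role) is a hypothetical placeholder, and the role must already allow Firehose to read the stream and write to the bucket.

```javascript
// Build a CreateDeliveryStream request that reads from a Kinesis data stream
// and writes to S3. No transformation is configured, matching the demo.
function buildDeliveryStreamInput({ name, streamArn, bucketArn, roleArn }) {
  return {
    DeliveryStreamName: name,
    DeliveryStreamType: "KinesisStreamAsSource",
    KinesisStreamSourceConfiguration: {
      KinesisStreamARN: streamArn,
      RoleARN: roleArn,
    },
    ExtendedS3DestinationConfiguration: {
      BucketARN: bucketArn,
      RoleARN: roleArn,
    },
  };
}

// Requires the @aws-sdk/client-firehose package and AWS credentials.
async function createDeliveryStream(input) {
  const { FirehoseClient, CreateDeliveryStreamCommand } = await import("@aws-sdk/client-firehose");
  const client = new FirehoseClient({});
  return client.send(new CreateDeliveryStreamCommand(input));
}

// Placeholder values for illustration only.
const deliveryInput = buildDeliveryStreamInput({
  name: "crypto-stock-price-to-s3",
  streamArn: "arn:aws:kinesis:us-east-1:123456789012:stream/crypto-stock-price",
  bucketArn: "arn:aws:s3:::kinesis-code-cloud-demo",
  roleArn: "arn:aws:iam::123456789012:role/firehose-demo-role",
});
console.log(JSON.stringify(deliveryInput, null, 2));

// To actually create it: createDeliveryStream(deliveryInput).then(console.log);
```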

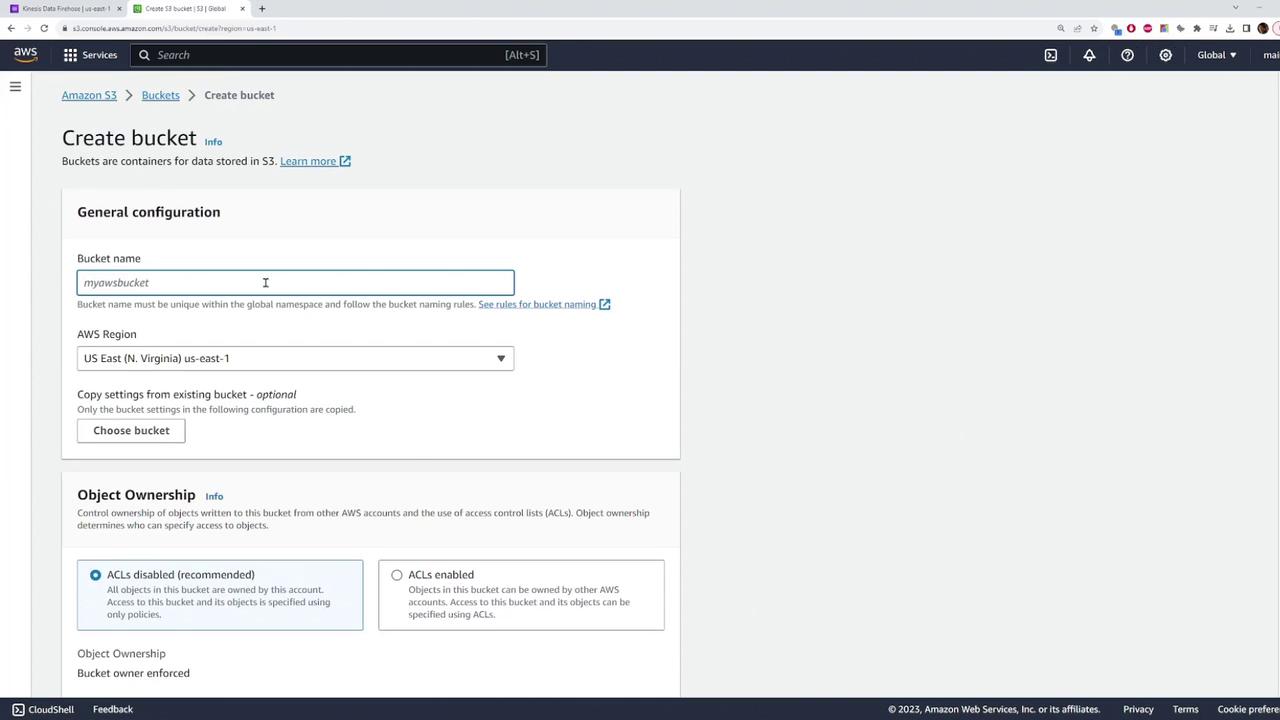

Setting Up an S3 Bucket

If you do not already have an S3 bucket, follow these steps to create one:

- Enter the bucket name ("kinesis-code-cloud-demo" in this example). Bucket names must be globally unique, lowercase, and free of spaces.

- Select your AWS region and configure additional settings as needed.

- Click Create bucket to finalize the setup.

After creation, verify the bucket's availability by browsing your bucket list.
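S3 rejects bucket names that contain spaces or uppercase letters, so it can be worth checking a name before you type it into the console. The sketch below covers only the main naming rules (length, allowed characters, alphanumeric first and last character, no adjacent periods, not shaped like an IP address); `isValidBucketName` is a hypothetical helper and does not check reserved prefixes or global uniqueness.

```javascript
// Partial check of the S3 general-purpose bucket naming rules.
function isValidBucketName(name) {
  return (
    // 3 to 63 characters: lowercase letters, digits, hyphens, periods,
    // starting and ending with a letter or digit.
    /^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$/.test(name) &&
    // No two adjacent periods.
    !name.includes("..") &&
    // Must not look like an IPv4 address.
    !/^\d+\.\d+\.\d+\.\d+$/.test(name)
  );
}

console.log(isValidBucketName("Kinesis Code Cloud Demo")); // false
console.log(isValidBucketName("kinesis-code-cloud-demo")); // true
```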

Sending Test Data to the Data Stream

With the Kinesis data stream and Firehose set up, send test data using the AWS SDK for JavaScript. The sample code provided below sends a test record every 50 milliseconds.

Code Example: Sending a Single Record

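    // Send a single record with the Kinesis PutRecordCommand (AWS SDK for JavaScript v3).
    import { KinesisClient, PutRecordCommand } from "@aws-sdk/client-kinesis";

    // Region and credentials are resolved from your AWS configuration.
    const client = new KinesisClient({});

    // `input` is a PutRecordInput object: StreamName, PartitionKey, and Data.
    const command = new PutRecordCommand(input);
    const response = await client.send(command);
    console.log(response);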

Complete Snippet: Generating Dummy Data

This complete snippet generates dummy data with timestamps and simulated prices:

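    import { KinesisClient, PutRecordCommand } from "@aws-sdk/client-kinesis";

    // Region and credentials are resolved from your AWS configuration.
    const client = new KinesisClient({});

    // Send one dummy price record every 50 ms until the process is stopped.
    setInterval(async () => {
      const input = {
        // PutRecordInput
        StreamName: "crypto-stock-price",
        // PartitionKey is required by PutRecord. The value "demo" is an
        // arbitrary choice; a fixed key is enough for a single-shard demo.
        PartitionKey: "demo",
        Data: Buffer.from(
          JSON.stringify({
            date: Date.now(), // epoch milliseconds
            price: "$" + (Math.floor(Math.random() * 40000) + 1), // "$1" to "$40000"
          })
        ),
      };

      try {
        // Create and send the PutRecordCommand using your AWS SDK client
        const command = new PutRecordCommand(input);
        const response = await client.send(command);
        console.log(response);
      } catch (err) {
        // Log and keep going so one failed request does not end the demo.
        console.error(err);
      }
    }, 50);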

Run this code with Node.js from your working directory (for example, C:\Users\sanje\Documents\scratch\kinesis). It sends a record every 50 milliseconds until you stop the process (for example, with Ctrl+C), so let it run for several minutes to build up data in the stream.
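Before pointing the producer at a live stream, it can help to inspect the payload it would send. The sketch below pulls the record construction into a hypothetical `buildPriceRecord` helper with an injectable clock and random source, so the output is reproducible; the `PartitionKey` value "demo" is an arbitrary assumption (PutRecord requires some partition key).

```javascript
// Build a PutRecordInput for one dummy price record.
// `now` and `random` are injectable so the result can be reproduced.
function buildPriceRecord(streamName, now = Date.now(), random = Math.random) {
  const payload = {
    date: now, // epoch milliseconds
    price: "$" + (Math.floor(random() * 40000) + 1), // "$1" to "$40000"
  };
  return {
    StreamName: streamName,
    PartitionKey: "demo", // arbitrary fixed key, fine for a single-shard demo
    Data: Buffer.from(JSON.stringify(payload)),
  };
}

// Dry run with a fixed timestamp and a fixed "random" value.
const record = buildPriceRecord("crypto-stock-price", 1700000000000, () => 0.5);
console.log(record.Data.toString()); // {"date":1700000000000,"price":"$20001"}
```

The returned object can be passed directly to `new PutRecordCommand(...)` once you are satisfied with the payload.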

Verifying Data Delivery in S3

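Once the test data has been sent, verify delivery by checking your S3 bucket. Firehose buffers incoming records before writing them, so the first objects can take a few minutes to appear. By default, Firehose organizes files under date-based folders in UTC (year, then month, day, and hour), so the top-level folder is the year (e.g., "2023"). Opening one of these files should reveal JSON objects with the same date and price fields as the records you sent. The exact values will differ because the prices are random, but the records should look similar to the examples below: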

Example 1

    {"date":169810779318,"price":"$46941"}
    {"date":169810793259,"price":"$342561"}
    {"date":169810793368,"price":"$301171"}
    {"date":169810793418,"price":"$274001"}
    {"date":169810793469,"price":"$6611"}
    {"date":169810793518,"price":"$94176"}

Example 2

    {"date":1689188257344,"price":"115.48"}
    {"date":1689188257345,"price":"348.47"}
    {"date":1689188257346,"price":"519.32"}

These records confirm that data is successfully transmitted from your Kinesis data stream through the Firehose and stored in the S3 bucket.
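To check a delivered file programmatically, you can parse its contents back into objects. Depending on the delivery configuration, Firehose may write records back to back with no newline between them, so the sketch below accepts both layouts. It assumes flat JSON records like the ones in this demo (no nested objects and no `}{` sequence inside string values); `parseFirehoseObject` is a hypothetical helper, and the sample text reuses the Example 2 records.

```javascript
// Split the text of a delivered S3 object into individual JSON records.
// Works for flat records separated by newlines, by nothing at all, or a mix.
function parseFirehoseObject(text) {
  const trimmed = text.trim();
  if (trimmed === "") {
    return [];
  }
  return trimmed
    .split(/(?<=\})\s*(?=\{)/) // cut between a closing and an opening brace
    .map((chunk) => JSON.parse(chunk));
}

// Two records written back to back, then one on its own line.
const sample =
  '{"date":1689188257344,"price":"115.48"}{"date":1689188257345,"price":"348.47"}\n' +
  '{"date":1689188257346,"price":"519.32"}\n';

const records = parseFirehoseObject(sample);
console.log(records.length); // 3
console.log(records[2].date - records[0].date); // 2 (milliseconds between first and last record)
```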

Conclusion

This demonstration has shown you how to set up a real-time data ingestion and delivery pipeline using Amazon Kinesis. The steps covered include:

- Creating a Kinesis data stream

- Configuring a Kinesis Data Firehose

- Setting up an S3 bucket

- Sending test data using the AWS SDK

- Verifying data delivery in S3

This setup supports real-time data processing and can be extended with data transformation via Lambda functions, which makes it a scalable starting point for a range of applications. Happy streaming, and enjoy exploring further real-time data processing techniques!
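As a pointer for the Lambda transformation option, here is a minimal sketch of a transformation function. It follows the Firehose transformation contract (a batch of base64-encoded records in, one result per `recordId` out) and appends a newline to each record so that delivered files hold one JSON object per line. The function names are hypothetical, and this demo itself leaves transformation disabled.

```javascript
// Transform a Firehose batch: decode each record, append a newline, re-encode.
function transformRecords(event) {
  return event.records.map((record) => {
    const text = Buffer.from(record.data, "base64").toString("utf8");
    return {
      recordId: record.recordId, // must match the incoming record
      result: "Ok", // other accepted values: "Dropped", "ProcessingFailed"
      data: Buffer.from(text + "\n", "utf8").toString("base64"),
    };
  });
}

// Lambda entry point; export it in whatever form your Lambda runtime expects.
async function handler(event) {
  return { records: transformRecords(event) };
}

// Local check with a hand-built event.
const sampleEvent = {
  records: [
    { recordId: "1", data: Buffer.from('{"date":1,"price":"$5"}').toString("base64") },
  ],
};
const [transformed] = transformRecords(sampleEvent);
console.log(JSON.stringify(Buffer.from(transformed.data, "base64").toString("utf8")));
```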
