What Is Vector Search?

Vector search is a retrieval technique that uses high-dimensional embeddings to find semantically relevant data. Traditional keyword-based search can miss terms that are close in meaning but worded differently. Vector search instead transforms words or phrases into numerical vectors (embeddings) that capture their meaning, so items can be compared by meaning rather than by exact keyword match.

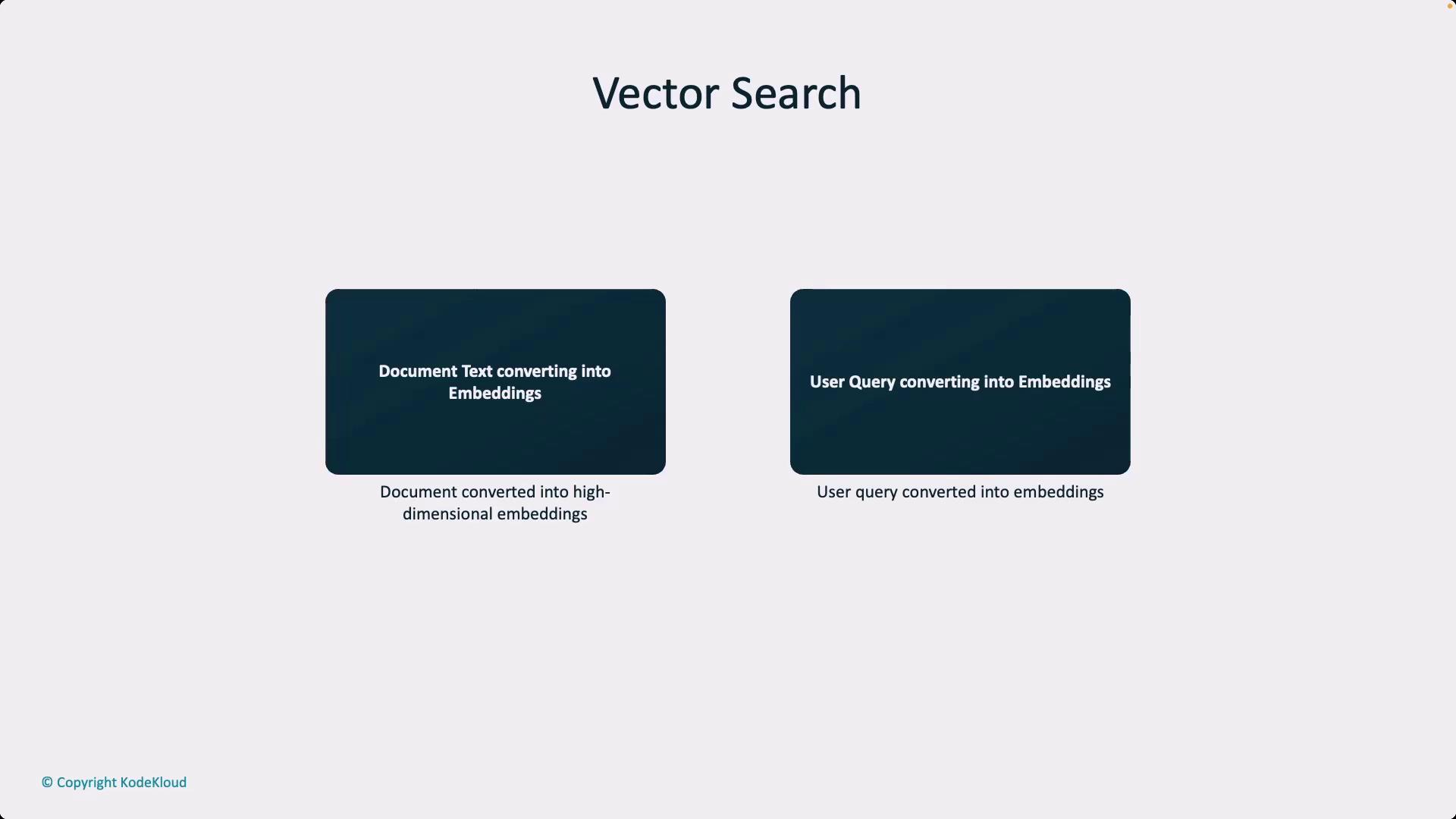

Converting Text to Embeddings

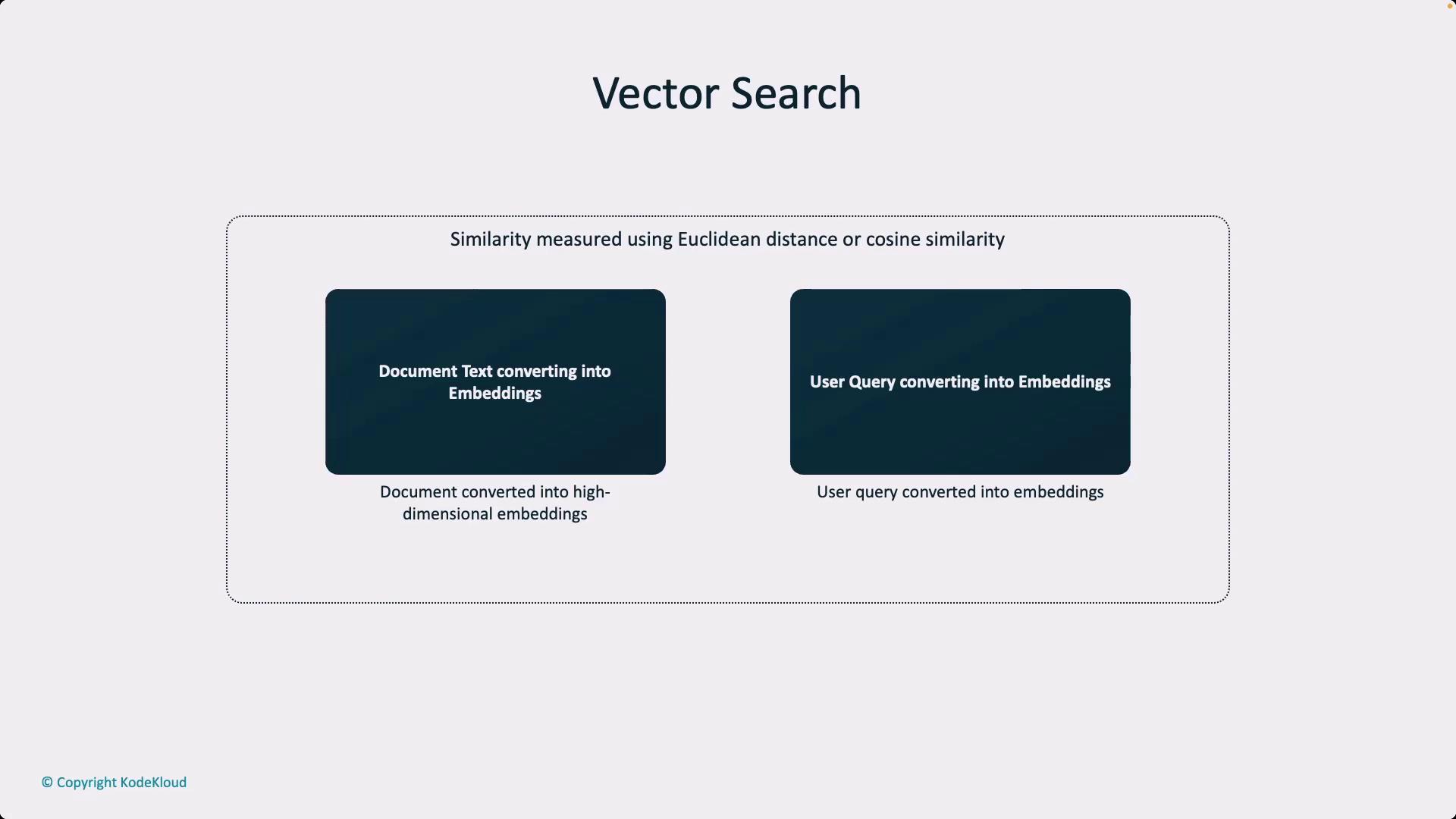

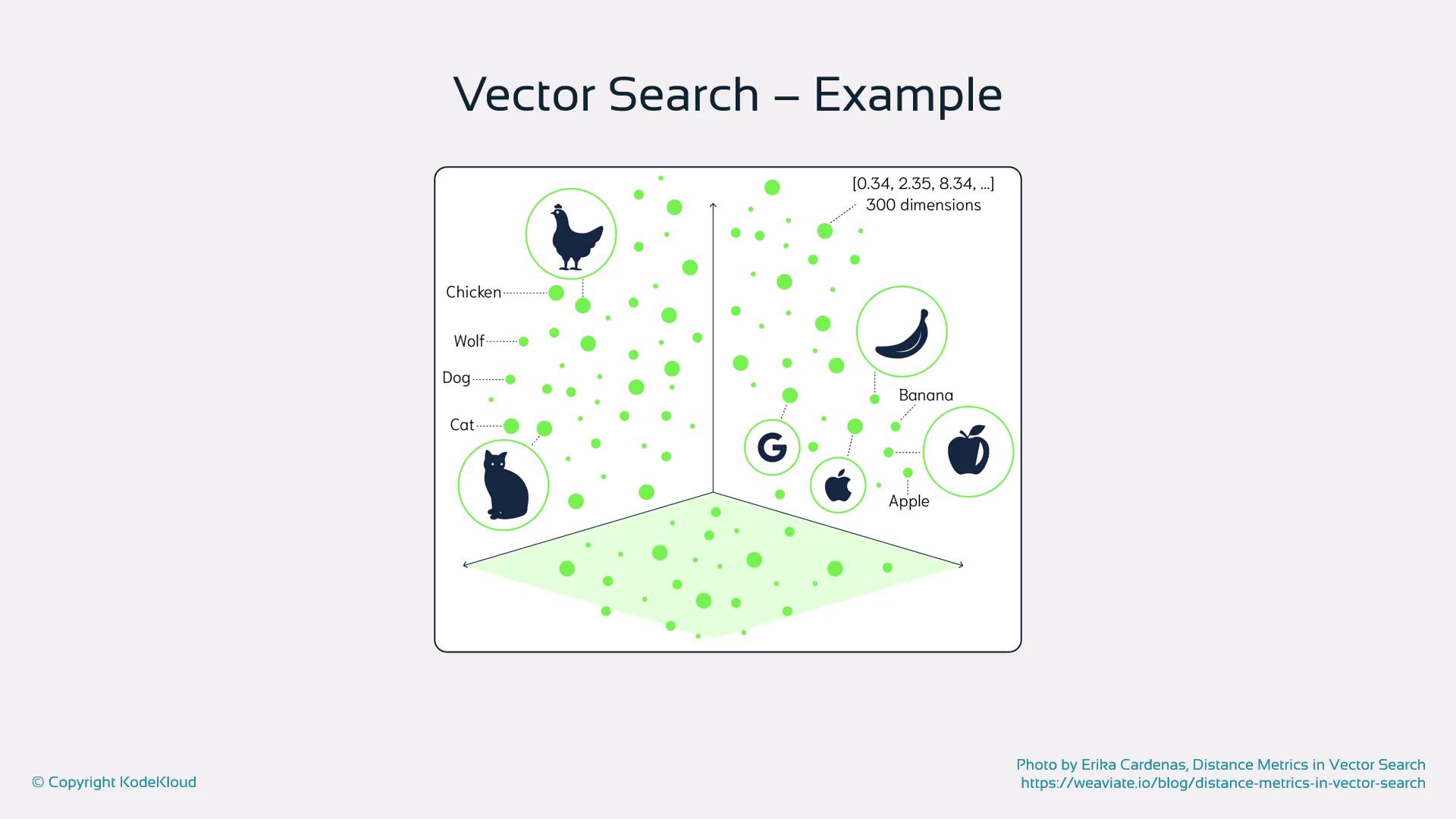

To capture subtle meanings, AI models transform text into embeddings: multi-dimensional numerical vectors that represent the semantic content of words, sentences, or entire documents. Once converted, these embeddings can be compared with mathematical measures such as Euclidean distance, cosine similarity, or dot product.
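As a rough illustration of the three measures named above, here is a minimal Python sketch. The 3-dimensional vectors are hand-made for the example and do not come from any embedding model; real embeddings typically have hundreds or thousands of dimensions.

```python
import math

def dot(a, b):
    """Dot product: sum of element-wise products."""
    return sum(x * y for x, y in zip(a, b))

def euclidean_distance(a, b):
    """Straight-line distance between two vectors (smaller = closer)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (larger = closer)."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# Hand-made toy vectors (an assumption for illustration, not model output).
cat = [0.9, 0.8, 0.1]
kitten = [0.8, 0.9, 0.2]
car = [0.1, 0.2, 0.9]

for name, other in [("kitten", kitten), ("car", car)]:
    print(
        f"cat vs {name}: "
        f"euclidean={euclidean_distance(cat, other):.3f}, "
        f"cosine={cosine_similarity(cat, other):.3f}, "
        f"dot={dot(cat, other):.3f}"
    )
```

With these toy values, "kitten" scores as closer to "cat" than "car" does under all three measures, although the three do not always agree on vectors of different lengths.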

Measuring Similarity in the Vector Space

Once both the query and the documents are represented as embeddings, their similarity is measured by computing how close the vectors are. With a distance measure such as Euclidean distance, a smaller value indicates higher similarity; with cosine similarity or dot product, a larger value does. This makes it possible to retrieve documents that are contextually related even when they share no exact keywords with the query.
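The ranking step can be sketched as a brute-force nearest-neighbor search. This is only a minimal illustration: the document IDs, the vectors, and the helper name `nearest` are hypothetical, and production systems usually rely on an approximate index rather than scanning every vector.

```python
import heapq
import math

def nearest(query_vec, doc_vecs, k=2):
    """Return the k (doc_id, distance) pairs with the smallest Euclidean distance."""
    scored = (
        (doc_id, math.dist(query_vec, vec))  # math.dist requires Python 3.8+
        for doc_id, vec in doc_vecs.items()
    )
    return heapq.nsmallest(k, scored, key=lambda pair: pair[1])

# Hypothetical, hand-made document embeddings.
doc_vecs = {
    "pet-care": [0.9, 0.8, 0.1],
    "kitten-adoption": [0.8, 0.9, 0.2],
    "car-repair": [0.1, 0.2, 0.9],
}
query_vec = [0.85, 0.85, 0.1]  # stand-in embedding for a query about cats

for doc_id, distance in nearest(query_vec, doc_vecs):
    print(f"{doc_id}: {distance:.3f}")
```

The query vector sits near the two pet-related documents, so those are returned first even though no keywords are compared at any point.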

Because matching no longer depends on shared keywords, vector-based similarity measures can improve the recall of relevant documents and the overall search accuracy when queries and documents express the same idea in different words.

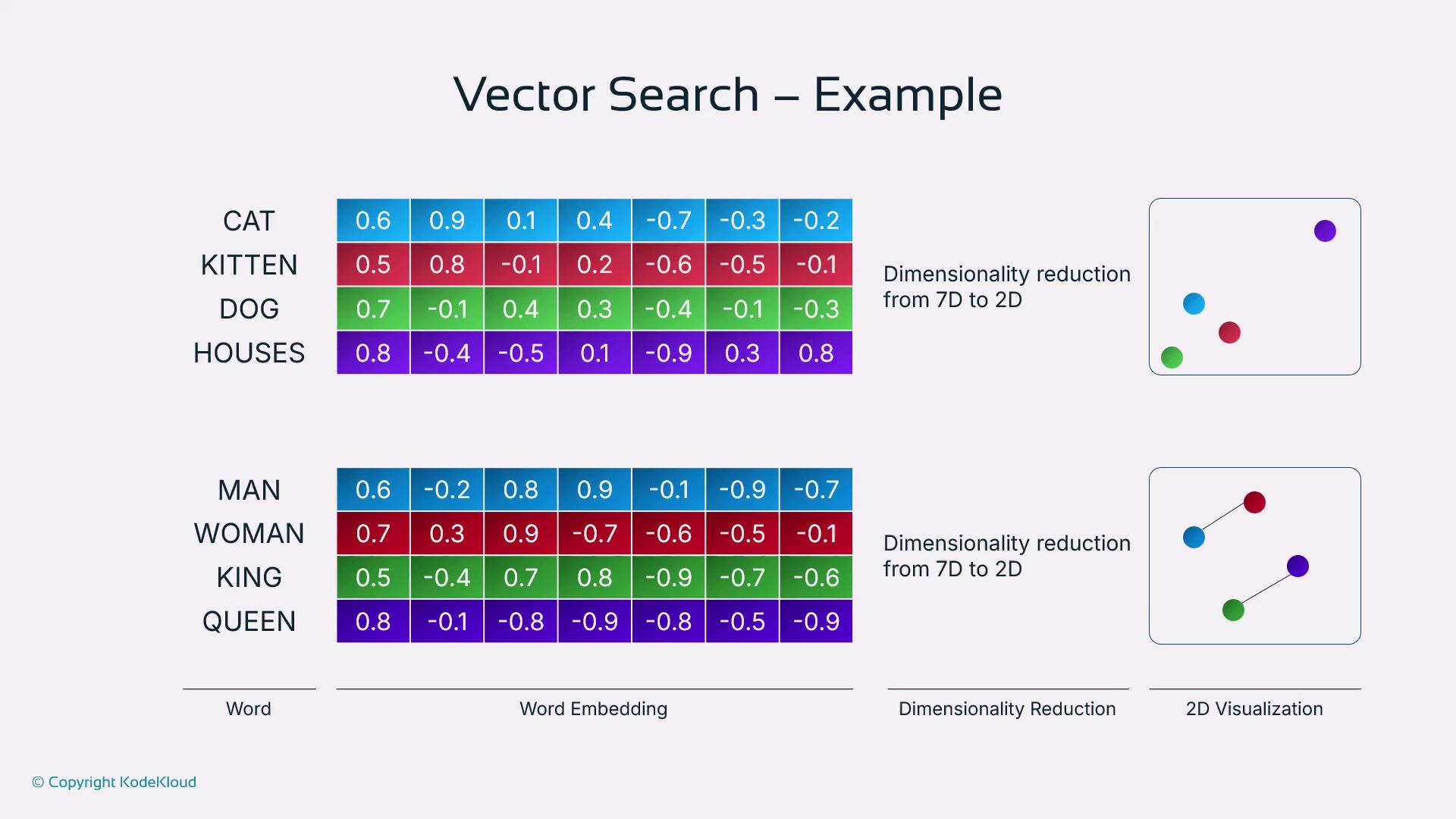

Visualizing Embeddings

Visualizing embeddings often involves reducing their dimensions to two or three, which reveals clusters of semantically related words. For example, words like “cat” and “kitten” may cluster closely together, as may other related pairs such as “man” and “woman.” This technique helps to highlight the underlying structure in language data.
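One common way to do this reduction is principal component analysis (PCA); the text above does not name a specific method, so PCA is an assumption here. The sketch below implements it with plain power iteration on hand-made 4-dimensional vectors. In practice a numerical library would normally do this work.

```python
import math

def center(rows):
    """Subtract the per-dimension mean from every row."""
    dims = len(rows[0])
    means = [sum(row[j] for row in rows) / len(rows) for j in range(dims)]
    return [[row[j] - means[j] for j in range(dims)] for row in rows]

def covariance(centered):
    """Sample covariance matrix of already-centered rows."""
    n, dims = len(centered), len(centered[0])
    return [
        [sum(row[i] * row[j] for row in centered) / (n - 1) for j in range(dims)]
        for i in range(dims)
    ]

def mat_vec(matrix, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

def dominant_eigenpair(matrix, iterations=500):
    """Power iteration: approximate the largest eigenvalue and its eigenvector."""
    # Non-uniform start, so it is unlikely to be orthogonal to the answer.
    vec = [float(i + 1) for i in range(len(matrix))]
    for _ in range(iterations):
        nxt = mat_vec(matrix, vec)
        norm = math.sqrt(sum(x * x for x in nxt))
        if norm == 0.0:
            break
        vec = [x / norm for x in nxt]
    eigenvalue = sum(v * w for v, w in zip(vec, mat_vec(matrix, vec)))
    return eigenvalue, vec

def pca_2d(rows):
    """Project rows onto their two leading principal axes."""
    centered = center(rows)
    cov = covariance(centered)
    axes = []
    for _ in range(2):
        eigenvalue, axis = dominant_eigenpair(cov)
        axes.append(axis)
        # Deflation: remove the found component so the next pass finds the runner-up.
        size = len(cov)
        cov = [
            [cov[i][j] - eigenvalue * axis[i] * axis[j] for j in range(size)]
            for i in range(size)
        ]
    return [[sum(x * a for x, a in zip(row, axis)) for axis in axes] for row in centered]

# Hand-made toy vectors (illustrative only, not model output).
words = ["cat", "kitten", "man", "woman"]
vectors = [
    [0.9, 0.8, 0.1, 0.0],
    [0.8, 0.9, 0.2, 0.1],
    [0.1, 0.0, 0.9, 0.8],
    [0.0, 0.1, 0.8, 0.9],
]
points = pca_2d(vectors)
for word, (x, y) in zip(words, points):
    print(f"{word:>7}: ({x:+.2f}, {y:+.2f})")
```

Plotting the resulting 2D points would place “cat” near “kitten” and “man” near “woman”, with a wide gap between the two pairs.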

Real-World Application: Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation (RAG) is a practical application of vector search. In RAG systems, the same embedding model is used to encode both documents and queries, so that both live in the same vector space and can be compared directly. By measuring the distance between these embeddings, the system retrieves the pieces of information most relevant to a given query.
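The retrieval half of such a system might look roughly like the sketch below. Everything in it is hypothetical: `embed` is a toy word-count stand-in (it is not semantic, and a real system would call a trained embedding model at that point), and `build_prompt` only assembles text for a generative model without calling one.

```python
import math

# Hypothetical toy vocabulary; a real embedding model has no such list.
VOCAB = ["cat", "kitten", "pet", "engine", "car", "oil"]

def embed(text):
    """Toy stand-in for an embedding model: word counts over VOCAB."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # treat all-zero vectors as unrelated

def retrieve(query, documents, k=1):
    """Return the k documents whose embeddings are most similar to the query's."""
    query_vec = embed(query)  # same embed() as used for the documents
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, passages):
    """Assemble retrieved passages and the question into one prompt string."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

documents = [
    "a kitten is a young cat and a popular pet",
    "change the engine oil in your car regularly",
]
query = "how do I care for my cat"
passages = retrieve(query, documents)
print(build_prompt(query, passages))
```

The key design point is that `retrieve` passes the query through the same `embed` function as the documents; mixing two different embedding models would make the similarity scores meaningless.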

RAG combines vector-search retrieval with a generative model: the retrieved passages are supplied to the model as context for its response, which makes the approach useful for building systems that answer from a specific body of documents.