Ethical Considerations in Generative AI

Generative AI systems like GPT-4 and DALL·E are transforming how we create text, images, audio, and video. As their outputs become more realistic, it is critical to understand the ethical implications, which range from bias and misinformation to privacy and intellectual property. In this article, we'll cover:

- Why ethics matter in AI development

- How bias and fairness impact generative systems

- The rise of deepfakes and misinformation

- Intellectual property challenges

- Privacy risks and surveillance concerns

- Frameworks for responsible AI governance

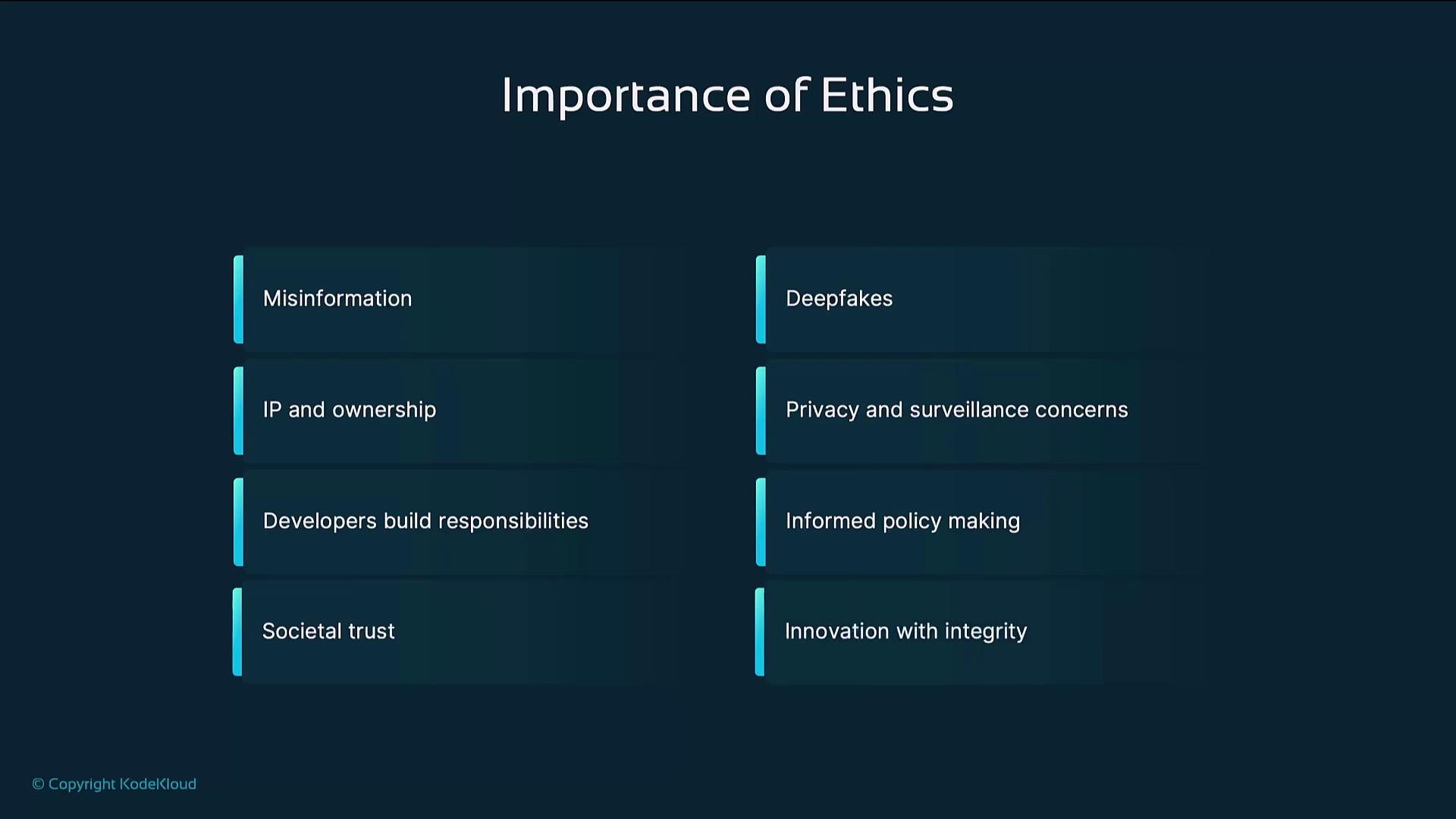

Importance of Ethics in AI

Ethical awareness is the foundation for building AI that benefits society. By embedding principles of fairness, transparency, and accountability, developers, businesses, and policymakers can ensure trust and innovation go hand in hand.

Key benefits of prioritizing ethics:

- Responsible development: Incorporate fairness checks and clear documentation throughout the model lifecycle.

- Informed policymaking: Align regulations with technical realities to protect public interest.

- Public trust: Transparent practices foster confidence and encourage broader adoption.

- Sustainable innovation: Ethical frameworks drive creative solutions that respect human rights and IP.

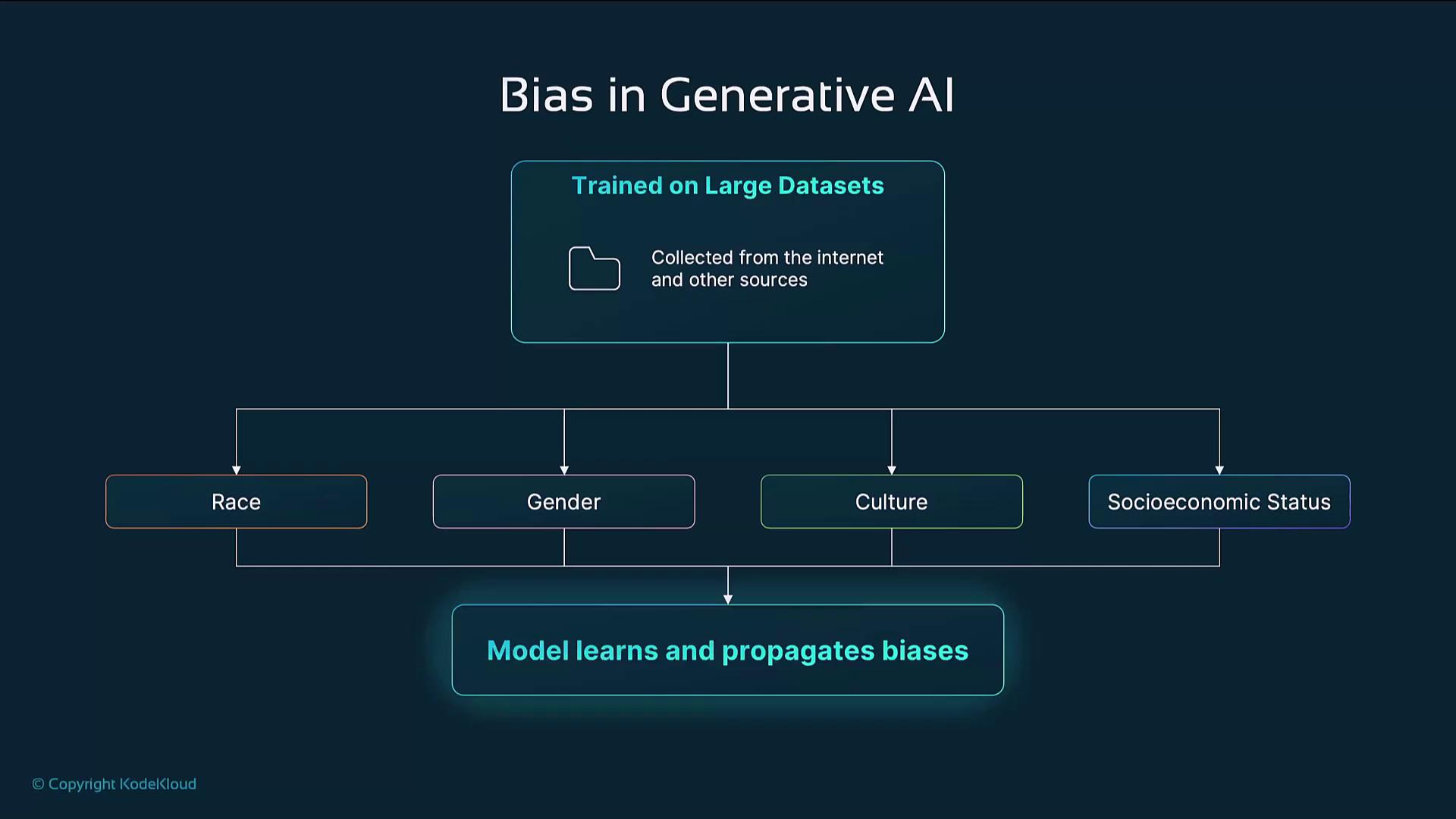

Bias and Fairness in Generative AI

AI models learn from large, real-world datasets that often carry social and historical biases. Without corrective measures, these systems risk reinforcing stereotypes and unfair treatment.

Sources of Bias

- Data imbalance: Overrepresentation of certain demographics

- Historical prejudice: Legacy content that reflects past inequities

- Cultural blind spots: Underrepresented languages, regions, or viewpoints

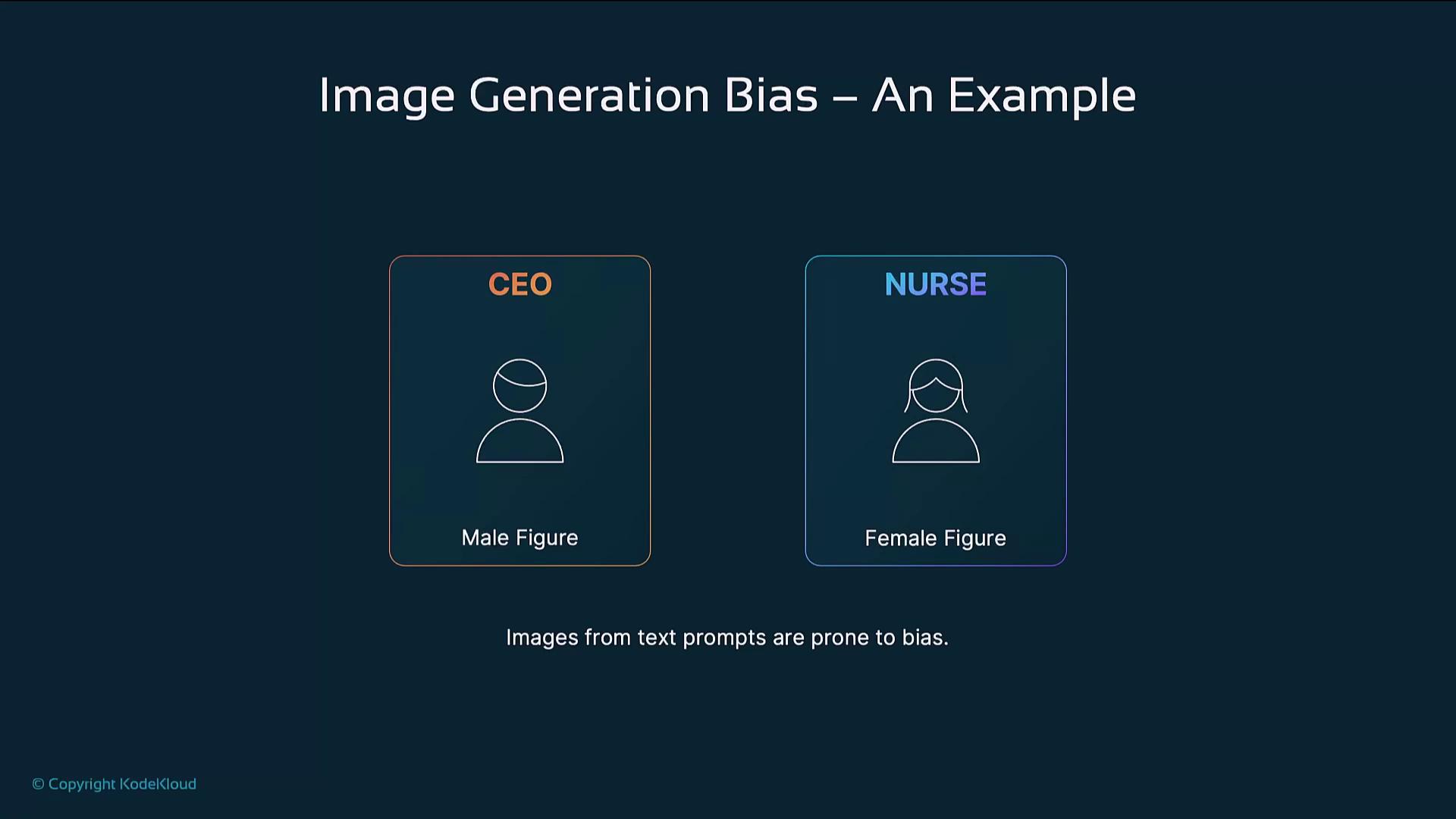
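
A data-imbalance check can start with something as simple as counting how often each group appears. The sketch below is a minimal illustration, not a full audit: the function names, the `min_share` threshold, and the sample language tags are all assumptions made for this example.

```python
from collections import Counter


def representation_shares(labels):
    """Return each group's share of the dataset as a fraction of all records."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, min_share=0.10):
    """List groups whose share falls below min_share (an assumed threshold)."""
    shares = representation_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical language tags for eight training documents.
sample_languages = ["en", "en", "en", "en", "en", "en", "es", "sw"]

shares = representation_shares(sample_languages)
flagged = underrepresented(sample_languages, min_share=0.2)

print(shares)   # en: 0.75, es: 0.125, sw: 0.125
print(flagged)  # ['es', 'sw']
```

Raw counts only surface imbalance. Historical prejudice and cultural blind spots usually need qualitative review of the content itself.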

Impact on Output

- Text Generation: Subtle word associations (e.g., leadership→men; caregiving→women)

- Image Generation: Gender and racial stereotypes, such as CEOs depicted as men and nurses as women

Note

Regular bias audits and diverse evaluation sets are essential to detect subtle discriminatory behaviors.

Mitigation Techniques

| Technique | Description | Example |
|---|---|---|
| Diverse fine-tuning | Retrain on balanced datasets | Add underrepresented voices in prompts and labels |
| Fairness metrics | Track parity across demographic groups | Measure Equal Opportunity Difference (EOD) |
| Continuous bias auditing | Schedule periodic reviews | Quarterly automated test suites |
| Content moderation | Block or flag policy-violating outputs | Reject prompts containing hate speech |

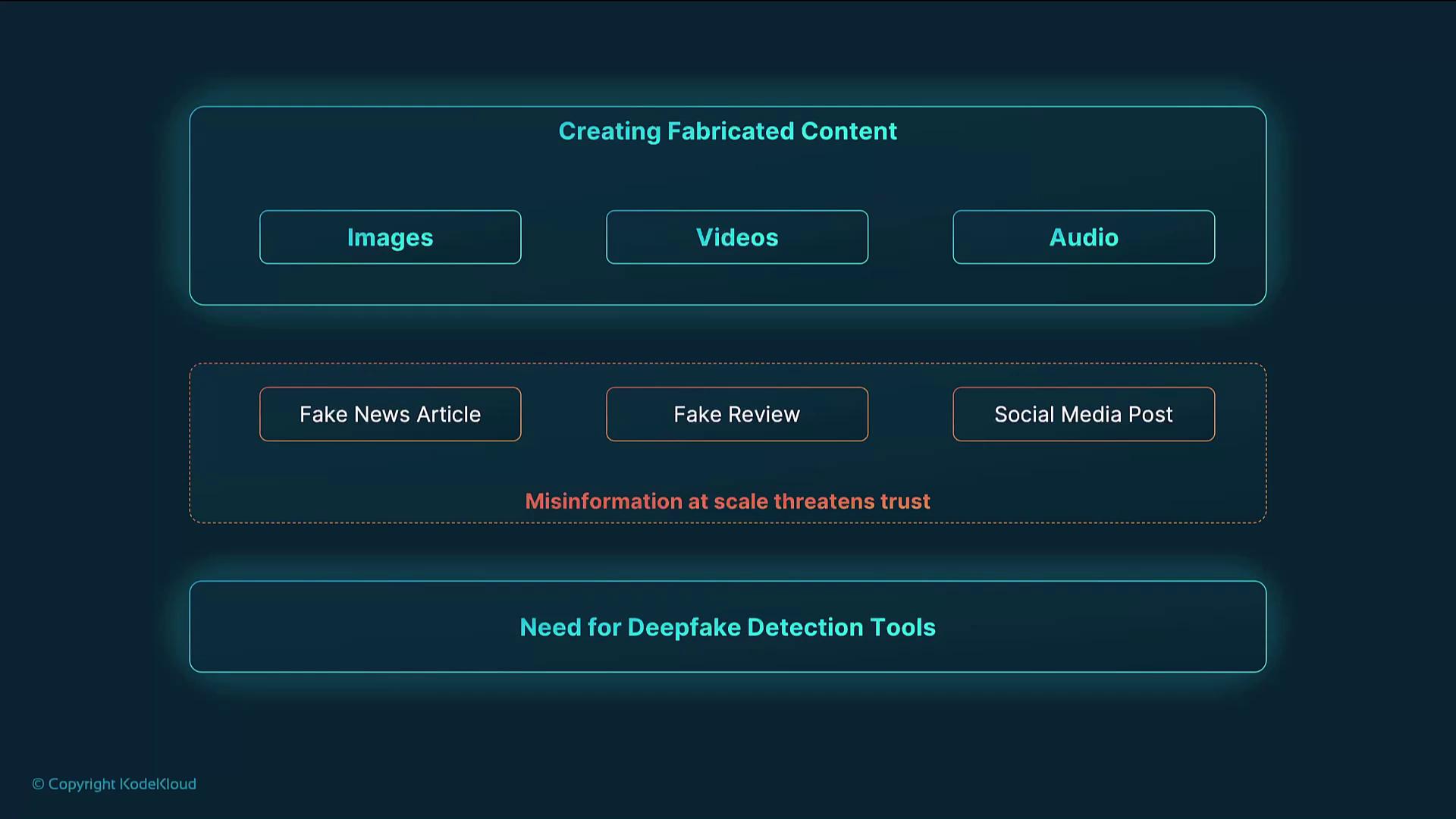
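
To make the fairness-metrics row concrete, here is a minimal sketch of Equal Opportunity Difference, taken here as the true positive rate of the unprivileged group minus that of the privileged group (sign conventions vary between toolkits). It assumes model behavior has already been reduced to binary labels, for example by a downstream classifier or a human rating. The data and group names are hypothetical.

```python
def true_positive_rate(y_true, y_pred):
    """Fraction of actual positives (label 1) that were predicted positive."""
    predictions_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not predictions_for_positives:
        raise ValueError("group has no positive examples; TPR is undefined")
    return sum(predictions_for_positives) / len(predictions_for_positives)


def equal_opportunity_difference(y_true, y_pred, groups, unprivileged, privileged):
    """TPR of the unprivileged group minus TPR of the privileged group."""
    def tpr_for(group):
        rows = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == group]
        return true_positive_rate([t for t, _ in rows], [p for _, p in rows])

    return tpr_for(unprivileged) - tpr_for(privileged)


# Hypothetical audit data: 1 = favorable outcome, groups "a" and "b".
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

eod = equal_opportunity_difference(
    y_true, y_pred, groups, unprivileged="b", privileged="a"
)
print(f"EOD = {eod:+.2f}")  # EOD = -0.25
```

A value near zero means both groups receive favorable outcomes at similar rates when they qualify for them. Here group "b" has a true positive rate of 0.50 against 0.75 for group "a", which an audit would flag for review.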

Misinformation and Deepfakes

Generative AI can fabricate realistic text, audio, images, and video—creating significant misinformation risks.

- Fake news and reviews: Automated generation of false claims

- Deepfake media: Synthetic audio/video impersonations of public figures

Warning

Deepfakes can undermine elections, incite panic, and erode trust. Always verify sources and employ detection tools.

Key safeguards

- Watermark or cryptographically sign AI-generated media

- Develop and deploy deepfake detection models

- Enforce clear labeling policies on platforms
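
As a rough illustration of the signing safeguard, the sketch below tags generated media with an HMAC-SHA256 value and verifies it later. This is an assumption-laden simplification: it uses a shared secret key, whereas public provenance schemes generally rely on public-key signatures so that anyone can verify without holding the secret. The key, payload, and function names are hypothetical.

```python
import hashlib
import hmac


def sign_media(media_bytes, secret_key):
    """Return a hex HMAC-SHA256 tag computed over the media bytes."""
    return hmac.new(secret_key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes, secret_key, tag):
    """Check the tag in constant time; False means altered content or wrong key."""
    expected = sign_media(media_bytes, secret_key)
    return hmac.compare_digest(expected, tag)


# Hypothetical key and payload; a real key would come from a secrets manager.
SECRET_KEY = b"example-key-do-not-use-in-production"
image_bytes = b"\x89PNG...generated image bytes..."

tag = sign_media(image_bytes, SECRET_KEY)
original_ok = verify_media(image_bytes, SECRET_KEY, tag)
tampered_ok = verify_media(image_bytes + b"edited", SECRET_KEY, tag)

print(original_ok, tampered_ok)  # True False
```

A tag like this proves the bytes are unchanged since signing. It does not survive re-encoding or cropping, which is why it is usually paired with watermarking and detection models rather than used alone.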

Intellectual Property and Ownership

When AI generates creative works, questions arise about authorship, rights, and compensation.

| Stakeholder | Ownership Question | Potential Outcome |
|---|---|---|
| AI Developer | Does the model creator hold copyright? | Licenses specifying model-output usage |
| End User (Prompter) | Can prompters claim authorship of the result? | Terms of service granting user rights |
| Original Creators | Are artists' works scraped without consent? | Licensing fees, opt-out mechanisms, or data rights |

Collaboration among legal experts, policymakers, and developers is crucial to:

- Define clear ownership and copyright rules

- Create compensation and attribution frameworks

- Increase transparency of training datasets

Privacy and Surveillance

Generative AI can fabricate personal data, impersonate voices/faces, or manipulate video evidence, posing severe privacy risks.

- Identity theft: Fake IDs or profiles for fraud

- Voice/face cloning: Unauthorized access or social engineering

- Surveillance manipulation: Altered CCTV footage or biometric spoofing

Note

Adopt “privacy by design” principles and comply with regulations like GDPR to secure personal data.

Companies and governments must implement strong encryption, access controls, and audit trails to prevent misuse.
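
One way to make an audit trail tamper-evident is to chain entries by hash, so that editing any past record invalidates every later one. The following is a minimal sketch under simplifying assumptions: entries hold only an actor and an action, timestamps are omitted, and all names are hypothetical. It does not protect against someone who can rewrite the entire log, which in practice calls for storing the latest hash somewhere the log's writers cannot modify.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(actor, action, prev_hash):
    """Hash an entry's content together with the previous entry's hash."""
    body = {"actor": actor, "action": action, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, actor, action):
    """Append an entry linked to the current end of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "actor": actor,
        "action": action,
        "prev_hash": prev_hash,
        "hash": _digest(actor, action, prev_hash),
    })


def verify_log(log):
    """Recompute every hash; return False if any entry was altered or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        expected = _digest(entry["actor"], entry["action"], prev_hash)
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "analyst_1", "viewed voice sample 17")
append_entry(audit_log, "analyst_2", "exported face embeddings")
intact = verify_log(audit_log)

audit_log[0]["action"] = "no access recorded"  # simulate tampering
tampered = verify_log(audit_log)

print(intact, tampered)  # True False
```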

Ethical Frameworks and Governance

Building trust in AI requires governance structures that keep pace with rapid technological advances.

- Transparency: Openly document model capabilities, limitations, and data sources.

- Accountability: Establish channels for reporting and remedying harmful outputs.

- Fairness: Integrate bias detection and mitigation into every development stage.

- Safety: Implement guardrails against malicious or unintended use.

Global cooperation—across industry, academia, and regulators—is essential to create dynamic policies that reflect societal values and technological progress.

Links and References

- General Data Protection Regulation (GDPR)

- OpenAI Usage Policies

- Deepfake Detection Challenge

- AI Ethics Guidelines Global Inventory
