Introduction to OpenAI

Introduction to AI

Exploring Bias and Fairness in Language Models

As Large Language Models (LLMs) like GPT-4 power chatbots, virtual assistants, and content generation tools, understanding bias and ensuring fairness is critical. Training data sourced from books, articles, and the web may contain stereotypes and unbalanced representations, leading to unintended, harmful outputs. This article covers:

Defining Bias vs. Fairness

Bias refers to systematic errors or prejudices learned during training, while fairness is the deliberate effort to counteract these biases and achieve equitable outcomes.

What Is Bias in LLMs?

Bias in LLMs occurs when models exhibit systematic preferences, associations, or prejudicial patterns based on their training data.

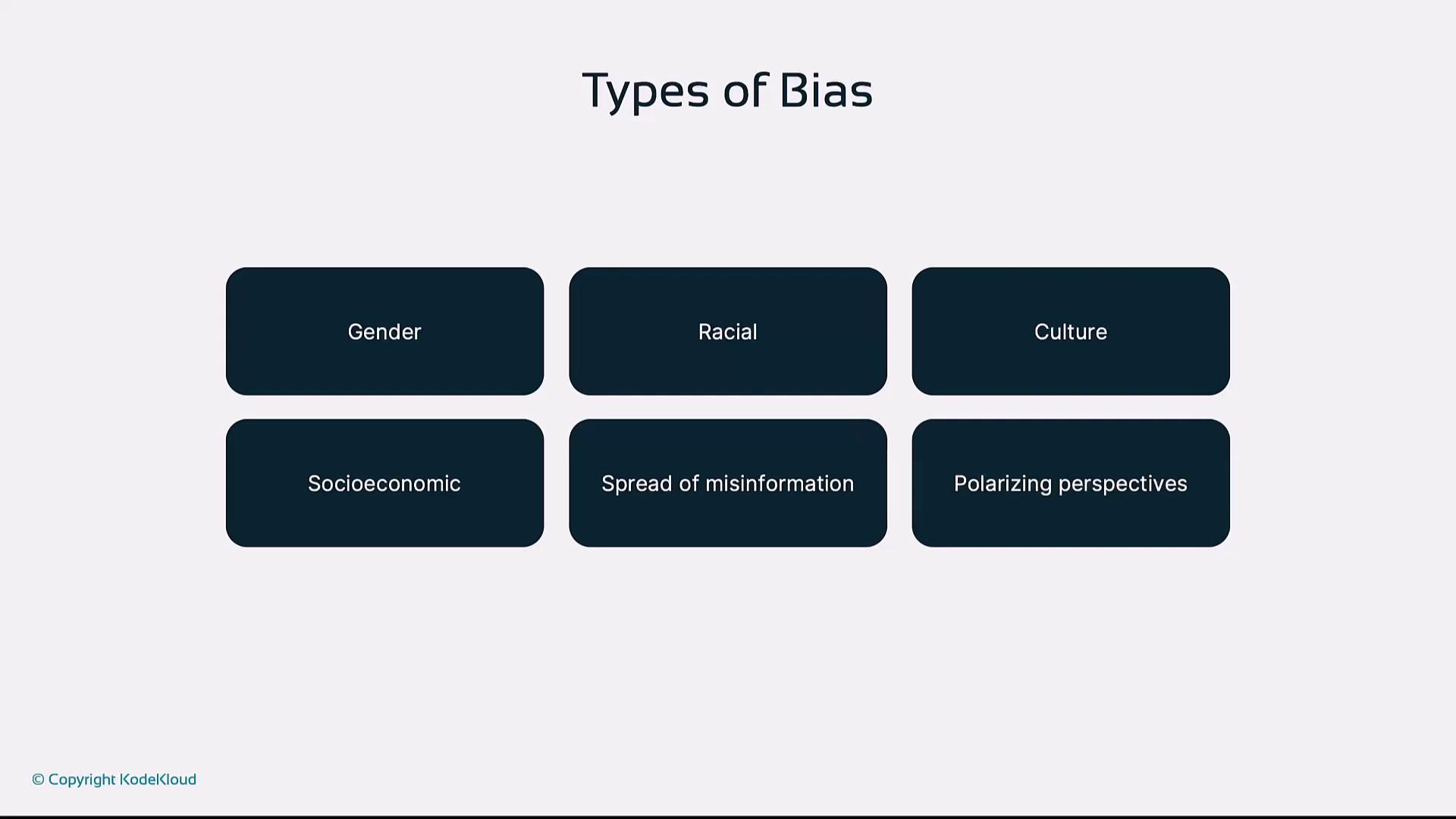

Common Types of Bias

| Bias Type | Description | Example |

|---|---|---|

| Gender Bias | Assigns roles or attributes based on gender stereotypes | Completing “The nurse” with “she” and “The engineer” with “he” |

| Racial Bias | Associates negative traits or criminality with ethnic groups | Suggesting certain groups are more prone to crime |

| Cultural Bias | Prioritizes content from specific regions or cultures | Favoring Western idioms over non-Western expressions |

| Socioeconomic Bias | Overrepresents affluent perspectives, underrepresents low-income experiences | Generating luxury-focused scenarios |

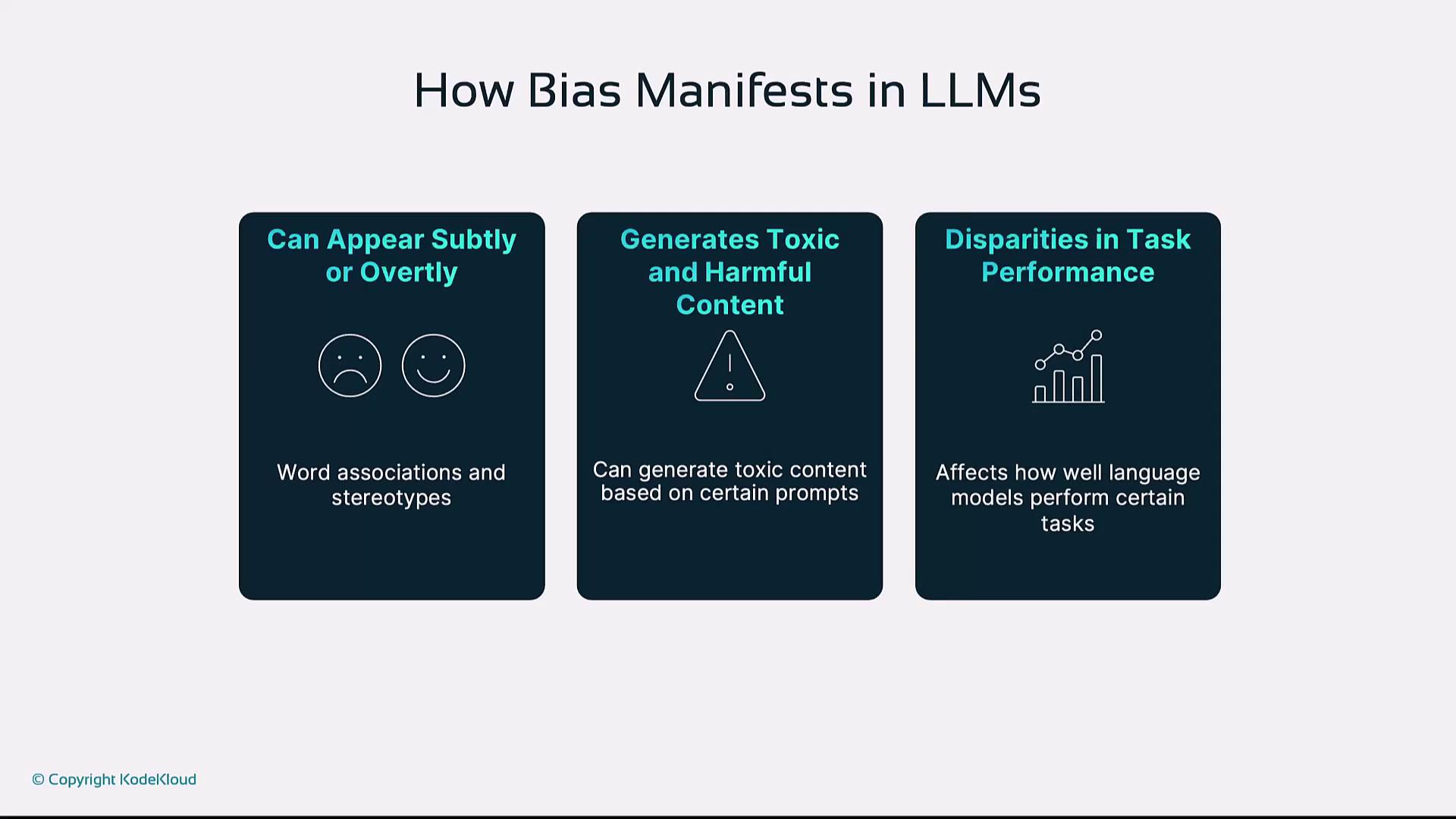

How Bias Manifests in LLM Outputs

Bias can surface in subtle word choices, explicit toxic content, or uneven model performance:

- Word association stereotypes (e.g., “The doctor said” → “he”; “The nurse said” → “she”)

- Harmful or toxic responses under ambiguous prompts

- Lower accuracy or fluency on non-Western dialects or languages

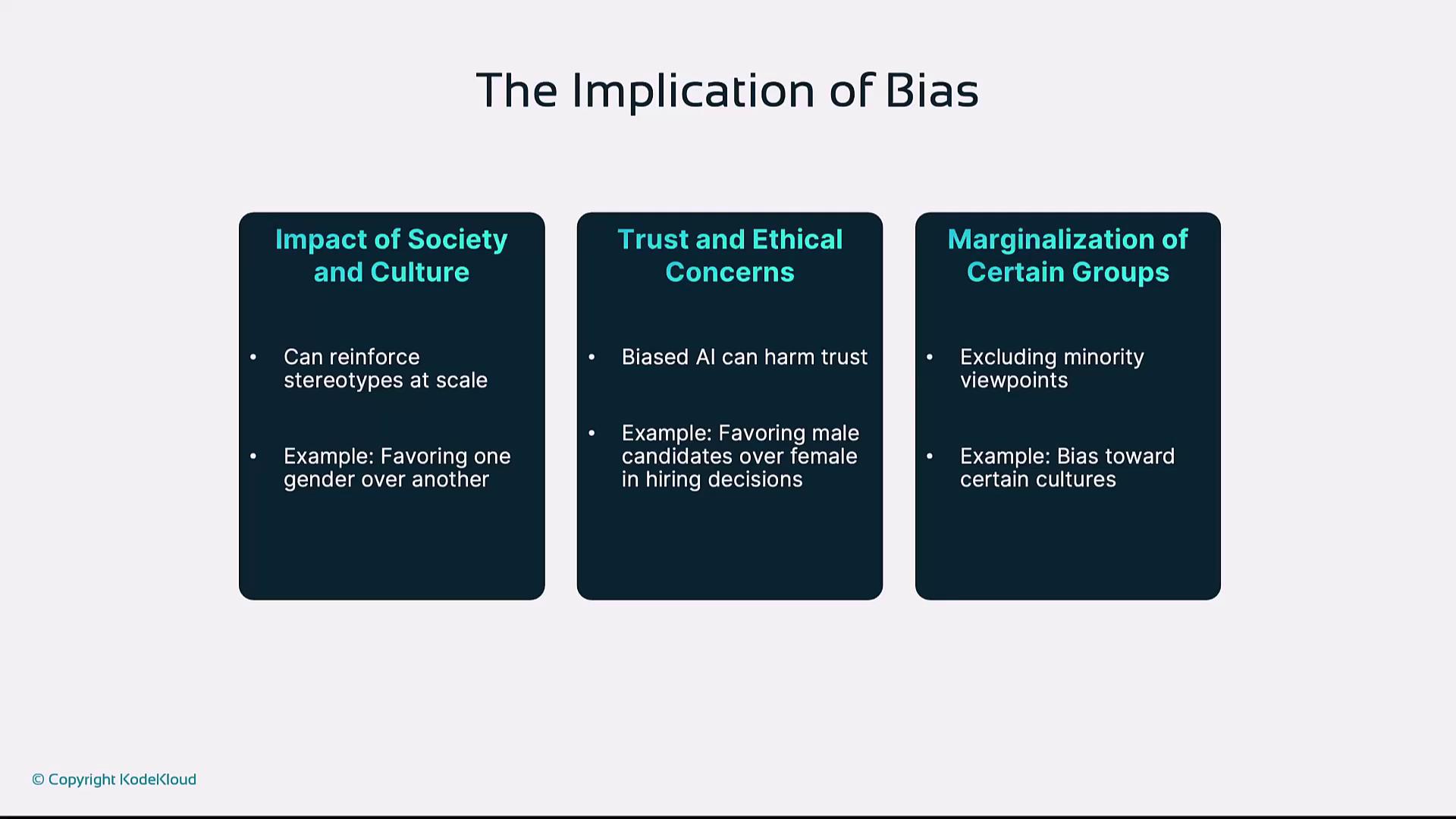

Implications of LLM Bias

- Reinforcing social stereotypes at scale (e.g., in recruitment tools)

- Eroding user trust and raising ethical or legal concerns

- Marginalizing underrepresented communities and perspectives

Warning

Biased AI systems deployed without audit can perpetuate harmful narratives and expose organizations to reputational and compliance risks.

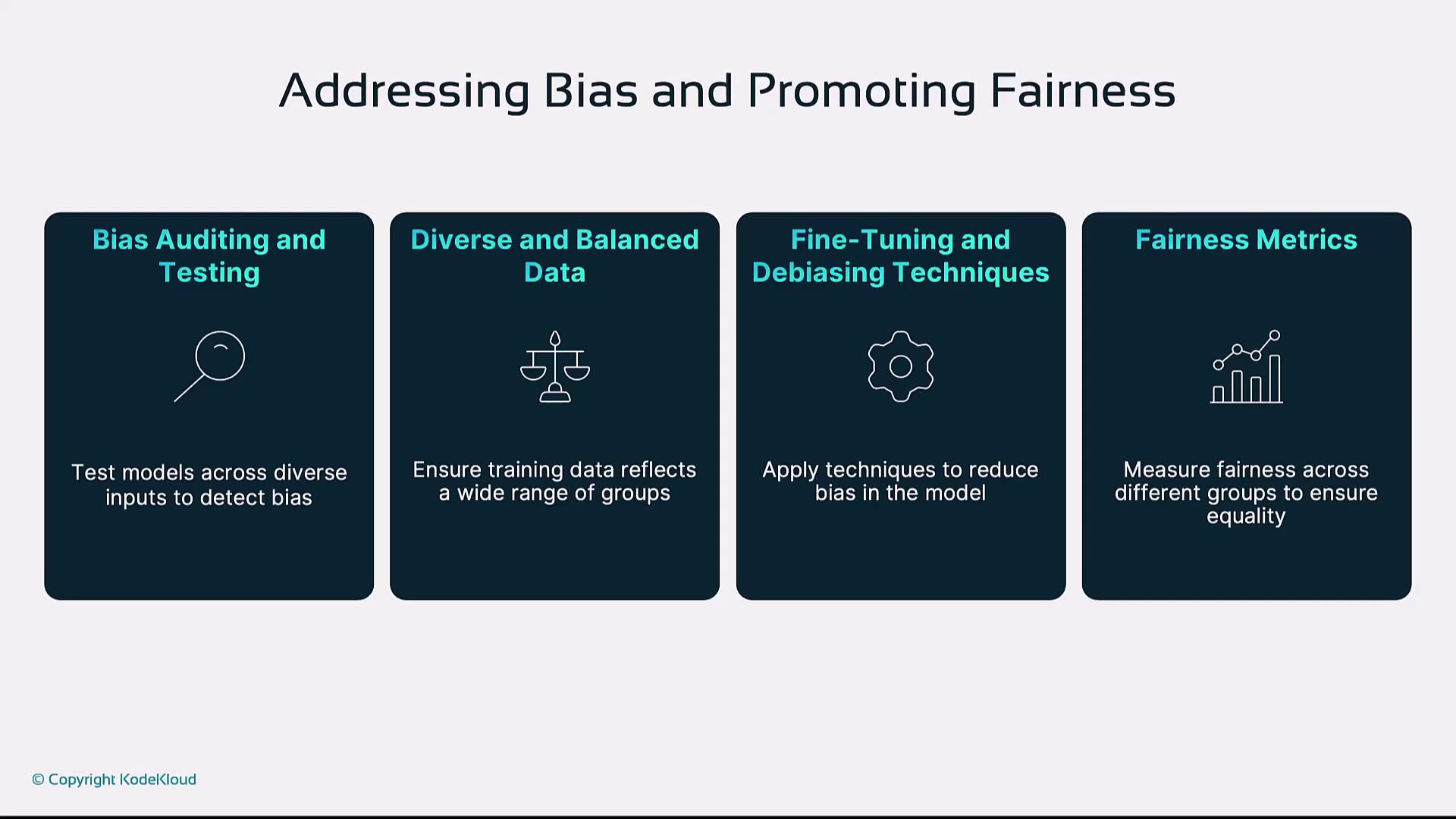

Strategies to Mitigate Bias and Enhance Fairness

- Bias Auditing: Test models with neutral, demographically varied prompts to detect skewed outputs

- Balanced Training Data: Curate datasets representing diverse regions, cultures, and socioeconomic backgrounds

- Debiasing Techniques: Apply fine-tuning, counterfactual augmentation, or adversarial training to reduce associations

- Fairness Metrics: Measure performance across groups using metrics like Equality of Opportunity

Note

Incorporating continuous monitoring and user feedback loops helps maintain fairness as models evolve.

Current Research, Tools, and Frameworks

- Fairness Indicators: Google’s Fairness Indicators for tracking disparities

- Ethical AI Frameworks: Principles from OpenAI Charter and Google AI Principles guide transparent, accountable model development

Links and References

Watch Video

Watch video content