Overview of OpenAI Vision

OpenAI Vision combines advanced computer vision and language understanding to interpret, generate, and manipulate images through the OpenAI Vision API. Whether you’re building accessibility tools, automation pipelines, or creative applications, Vision models like GPT-4 Vision and DALL·E provide powerful multimodal capabilities.

Why Vision Models Matter

Computer vision models unlock new horizons for automation, creativity, and multimodal AI interactions:

Expanding AI’s Domain

Vision brings AI into healthcare, retail, manufacturing, and creative arts, all industries where images and visual data are central. For example, radiology AI can flag anomalies in X-rays or MRIs for faster diagnosis.

Enabling Multimodal Interactions

By combining visual and textual inputs, you can generate captions, answer questions about a photo, or build richer chat experiences.

Example: A virtual assistant analyzes a product image and returns detailed descriptions or personalized recommendations.

Enhancing Automation

From cashier-less retail checkouts to autonomous vehicles, real-time image recognition powers new workflows.

Example: A self-driving car uses the Vision API to identify road signs, obstacles, and pedestrians for safe navigation.

Boosting Creativity and Content Generation

Tools like DALL·E transform text prompts into vivid images—ideal for prototyping designs, marketing visuals, or original artwork.

Example: Describe a futuristic cityscape and DALL·E generates an inspiring concept image.

Core Capabilities of the OpenAI Vision API

Note

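All examples assume access to a vision-capable GPT-4 model or the DALL·E endpoints. The model name gpt-4-vision used in the examples is a placeholder; substitute the ID of a vision-capable model available to your account (for instance, gpt-4o). Make sure your API key has the proper scopes enabled.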

Image Captioning

Generate natural language descriptions for any image—useful in accessibility, SEO, and automated photo tagging.

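import openai

def caption_image(image_url):
    # Send the image as an image_url content part. A URL pasted into the
    # text prompt is treated as plain text and the image is never loaded.
    response = openai.chat.completions.create(
        model="gpt-4-vision",  # placeholder: use a vision-capable model ID
        messages=[{
            "role": "user",
            "content": [
                {"type": "text", "text": "Describe this image."},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
        max_tokens=100,
    )
    return response.choices[0].message.content

url = "https://example.com/path/to/image.jpg"
print("Caption:", caption_image(url))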
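The Chat Completions API accepts an image either as a public URL or as a base64 data URL inside an image_url content part. For a local file, a minimal sketch of the encoding step might look like the following. The helper names to_data_url and image_part, the file name, and the sample bytes are illustrative assumptions, not part of the lesson.

```python
import base64
import mimetypes

def to_data_url(image_bytes: bytes, filename: str) -> str:
    """Encode raw image bytes as a data URL (hypothetical helper)."""
    # Guess the MIME type from the file extension; fall back to JPEG.
    mime, _ = mimetypes.guess_type(filename)
    if mime is None or not mime.startswith("image/"):
        mime = "image/jpeg"
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"

def image_part(url_or_data_url: str) -> dict:
    """Wrap a URL or data URL in the image_url content-part shape."""
    return {"type": "image_url", "image_url": {"url": url_or_data_url}}

# Illustrative bytes only; in practice read them with open(path, "rb").read().
sample = to_data_url(b"\x89PNG\r\n\x1a\n", "photo.png")
print(sample[:22])
```

The resulting part can be placed in the content list of a user message next to a text part, in the same position a public URL would occupy.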

Object Recognition and Detection

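Identify objects and describe where they appear, for analytics, surveillance, or industrial inspection. A chat model returns approximate, textual locations rather than pixel-precise bounding boxes.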

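import openai

def detect_objects(image_url):
    # The image travels as an image_url part; the text part holds the request.
    response = openai.chat.completions.create(
        model="gpt-4-vision",  # placeholder: use a vision-capable model ID
        messages=[{
            "role": "user",
            "content": [
                {"type": "text", "text": "List all objects in this image and their locations."},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
        max_tokens=150,
    )
    return response.choices[0].message.content

url = "https://example.com/path/to/image.jpg"
print("Objects Detected:", detect_objects(url))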
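If downstream code needs structured results, one option is to ask the model to reply with JSON and then parse the returned text defensively. The sketch below assumes a hypothetical reply format, a JSON array of objects with "label" and "location" keys, that you would request in the prompt yourself. The API does not enforce that shape, so the parser strips an optional Markdown code fence and falls back to an empty list.

```python
import json

FENCE = "`" * 3  # Markdown code-fence marker

def parse_detections(reply_text: str) -> list:
    """Parse a reply that was asked to be a JSON array of objects.

    The {"label", "location"} schema is an assumption set by the prompt,
    not something the API guarantees.
    """
    text = reply_text.strip()
    # Models often wrap JSON in a Markdown code fence; remove it if present.
    if text.startswith(FENCE):
        lines = text.splitlines()[1:]
        if lines and lines[-1].strip().startswith(FENCE):
            lines = lines[:-1]
        text = "\n".join(lines)
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    # Keep only entries that carry both expected keys.
    return [d for d in data
            if isinstance(d, dict) and "label" in d and "location" in d]

# Hypothetical reply text, for illustration only.
reply = FENCE + 'json\n[{"label": "cat", "location": "bottom left"}]\n' + FENCE
print(parse_detections(reply))
```

Returning an empty list on malformed output keeps the caller simple; a stricter pipeline might log the raw reply or retry instead.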

Visual Question Answering (VQA)

Ask questions about image content—ideal for customer support, education, and accessibility tools.

import openai

def visual_question_answering(image_url, question):
    # Pass the question as text and the image as a separate image_url part.
    response = openai.chat.completions.create(
        model="gpt-4-vision",  # placeholder: use a vision-capable model ID
        messages=[{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
        max_tokens=100,
    )
    return response.choices[0].message.content

image_url = "https://example.com/path/to/image.jpg"
answer = visual_question_answering(image_url, "What is this object?")
print("Answer:", answer)
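A single question can also refer to several images, and each image_url part accepts an optional detail setting ("low", "high", or "auto") that trades cost against fidelity. As a rough sketch, a small builder can assemble such a message. The function name build_vision_message and the example URLs are assumptions made for illustration.

```python
def build_vision_message(question: str, image_urls: list, detail: str = "auto") -> dict:
    """Build one user message with a text part and one part per image."""
    if detail not in {"low", "high", "auto"}:
        raise ValueError(f"unsupported detail level: {detail!r}")
    # The text part comes first, followed by the images in the given order.
    parts = [{"type": "text", "text": question}]
    for url in image_urls:
        parts.append({
            "type": "image_url",
            "image_url": {"url": url, "detail": detail},
        })
    return {"role": "user", "content": parts}

message = build_vision_message(
    "What differs between these two photos?",
    ["https://example.com/a.jpg", "https://example.com/b.jpg"],
    detail="low",
)
print(len(message["content"]))
```

The returned dictionary can be passed as an element of the messages list in a chat completion request.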

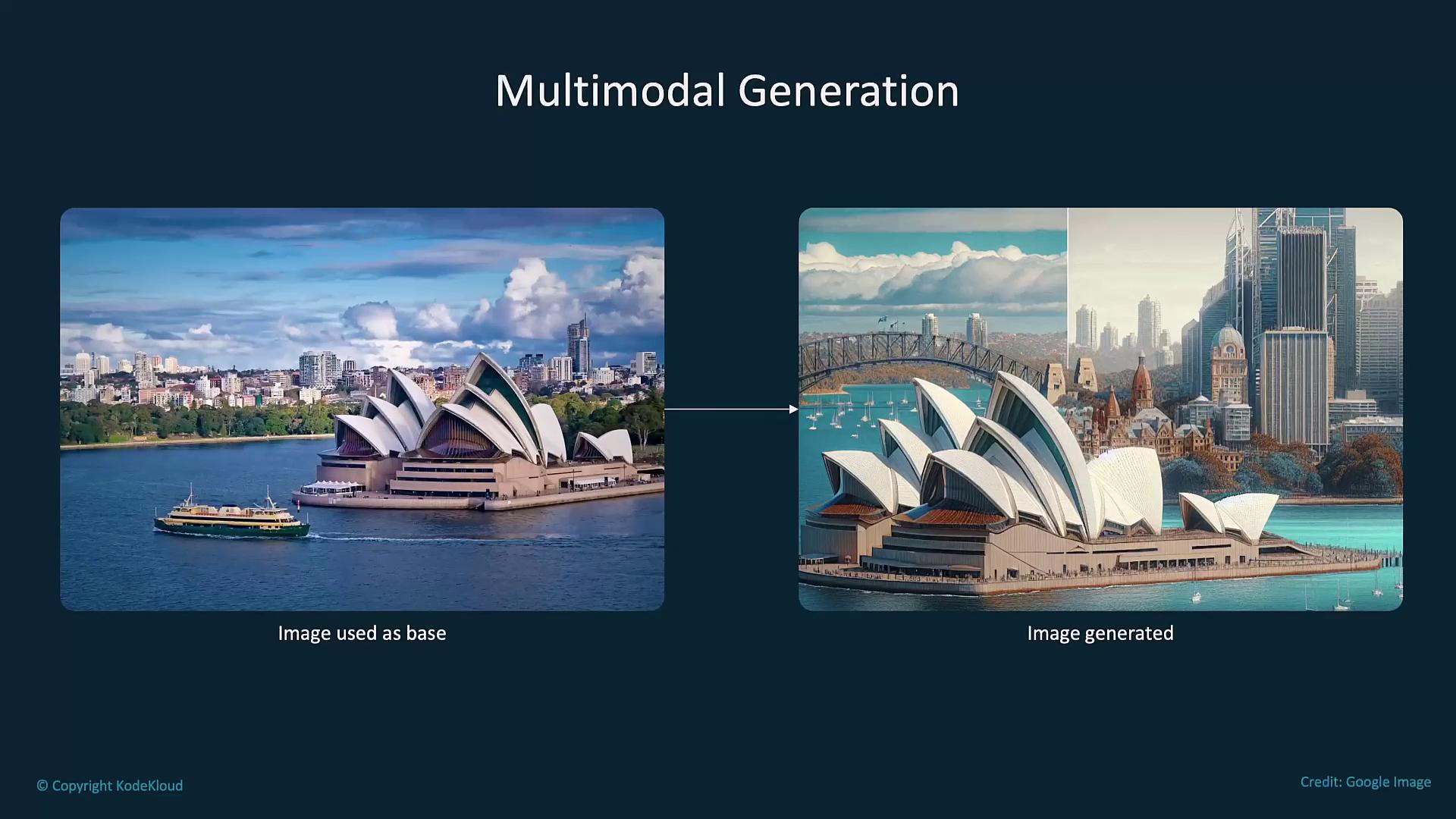

Multimodal Generation

Combine text and images for creative editing, image-to-sketch transformations, or custom visualizations.

import openai

def generate_image_from_sketch(image_url, text_description):
    # Note: images.generate accepts a text prompt only. The URL below is sent
    # as plain text; the image itself is not downloaded or used as a base.
    response = openai.images.generate(
        model="dall-e-3",
        prompt=f"Use the following image as a base: {image_url}. Add these details: {text_description}",
        size="1024x1024",
    )
    return response.data[0].url

image_url = "https://example.com/path/to/sketch.jpg"
description = "Add a bright blue sky and detailed buildings in the background."
print("Generated Image URL:", generate_image_from_sketch(image_url, description))
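Because DALL·E 3 generation works from a text prompt alone, one way to condition the output on an existing image is a two-step pipeline: first obtain a textual description of the image from a vision-capable chat model, then generate from that description plus the requested changes. The sketch below shows only the orchestration. The describe and generate callables are hypothetical stand-ins that, in real use, would wrap the chat completion and image generation calls; the stubs here exist so the example runs offline.

```python
from typing import Callable

def sketch_to_image(
    image_url: str,
    details: str,
    describe: Callable[[str], str],
    generate: Callable[[str], str],
) -> str:
    """Describe the source image, then generate from description + details."""
    description = describe(image_url)              # step 1: image -> text
    prompt = f"{description.strip()} {details.strip()}"
    return generate(prompt)                        # step 2: text -> image URL

# Offline stand-ins (assumptions, not real API calls).
def fake_describe(url: str) -> str:
    return "A pencil sketch of a city street."

def fake_generate(prompt: str) -> str:
    return f"https://example.com/generated?chars={len(prompt)}"

result = sketch_to_image(
    "https://example.com/path/to/sketch.jpg",
    "Add a bright blue sky.",
    fake_describe,
    fake_generate,
)
print(result)
```

Injecting the two steps as functions keeps the pipeline testable without network access and makes it easy to swap models later.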

Content Moderation

Automatically flag unsafe or policy-violating images before they reach end users.

Warning

Ensure you comply with OpenAI’s content policy when moderating sensitive images.

import openai

def moderate_image(image_url):
    # omni-moderation-latest accepts images as image_url input parts.
    response = openai.moderations.create(
        model="omni-moderation-latest",
        input=[{"type": "image_url", "image_url": {"url": image_url}}],
    )
    return response.results[0].flagged

url = "https://example.com/path/to/image.jpg"
print("Moderation flagged:", moderate_image(url))
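Besides the overall flagged value, each moderation result also carries per-category scores, which lets an application apply its own thresholds. A minimal sketch of that post-processing step follows, operating on a plain dictionary of scores. The function name, the threshold values, and the sample scores are all illustrative assumptions rather than recommended settings.

```python
def categories_over_threshold(category_scores: dict, thresholds: dict,
                              default: float = 0.5) -> list:
    """Return the category names whose score meets its threshold."""
    hits = []
    for name, score in category_scores.items():
        # Use a per-category threshold when given, else the default.
        if score >= thresholds.get(name, default):
            hits.append(name)
    return sorted(hits)

# Made-up scores for illustration; real values come from the API response.
sample_scores = {"violence": 0.72, "sexual": 0.03, "self-harm": 0.20}
strict = {"self-harm": 0.1}  # hypothetical stricter limit for one category
print(categories_over_threshold(sample_scores, strict))
```

Stricter thresholds for specific categories are one way to tune moderation to an audience without changing the API call itself.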

Face Recognition and Analysis

Identify or verify individuals, estimate age, gender, and emotion for security or user analytics.

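import openai

def analyze_face(image_url):
    # The image must be attached as an image_url part, not named in the text.
    response = openai.chat.completions.create(
        model="gpt-4-vision",  # placeholder: use a vision-capable model ID
        messages=[{
            "role": "user",
            "content": [
                {"type": "text", "text": "Analyze this image for age, gender, and emotion."},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
        max_tokens=100,
    )
    return response.choices[0].message.content

url = "https://example.com/path/to/face.jpg"
print("Face Analysis:", analyze_face(url))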

Image-to-Image Translation

Convert sketches to photorealistic renders, apply filters, or simulate design prototypes.

import openai

def image_to_image_translation(input_image_url, transformation_description):
    # Note: images.generate accepts a text prompt only. The URL is passed as
    # plain text, so the source image is not actually read or transformed.
    response = openai.images.generate(
        model="dall-e-3",
        prompt=f"Transform the image at {input_image_url} by {transformation_description}",
        size="1024x1024",
    )
    return response.data[0].url

input_image_url = "https://www.example.com/hi.jpg"
transformation_description = "converting this sketch into a photorealistic image."
print("Translated Image URL:", image_to_image_translation(input_image_url, transformation_description))
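To transform an actual image file rather than a description of one, the Images API also offers an edit endpoint. As documented for DALL·E 2, it expects a square PNG under 4 MB; treat those limits as an assumption to verify against the current API reference. The sketch below checks a file against them using only the PNG header, before any upload. The helper names and the synthetic header are illustrative.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MAX_BYTES = 4 * 1024 * 1024  # assumed size limit; verify in the API docs

def png_dimensions(data: bytes) -> tuple:
    """Read (width, height) from the IHDR chunk of a PNG byte string."""
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # Width and height are big-endian 32-bit integers at bytes 16..23.
    return struct.unpack(">II", data[16:24])

def is_valid_edit_input(data: bytes) -> bool:
    """Check the assumed edit-endpoint constraints: PNG, square, under 4 MB."""
    try:
        width, height = png_dimensions(data)
    except ValueError:
        return False
    return width == height and len(data) < MAX_BYTES

# Synthetic 24-byte header for a 512x512 image, for illustration only.
header = PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR" + struct.pack(">II", 512, 512)
print(png_dimensions(header), is_valid_edit_input(header))
```

Validating locally avoids a round trip for files the endpoint would reject anyway.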

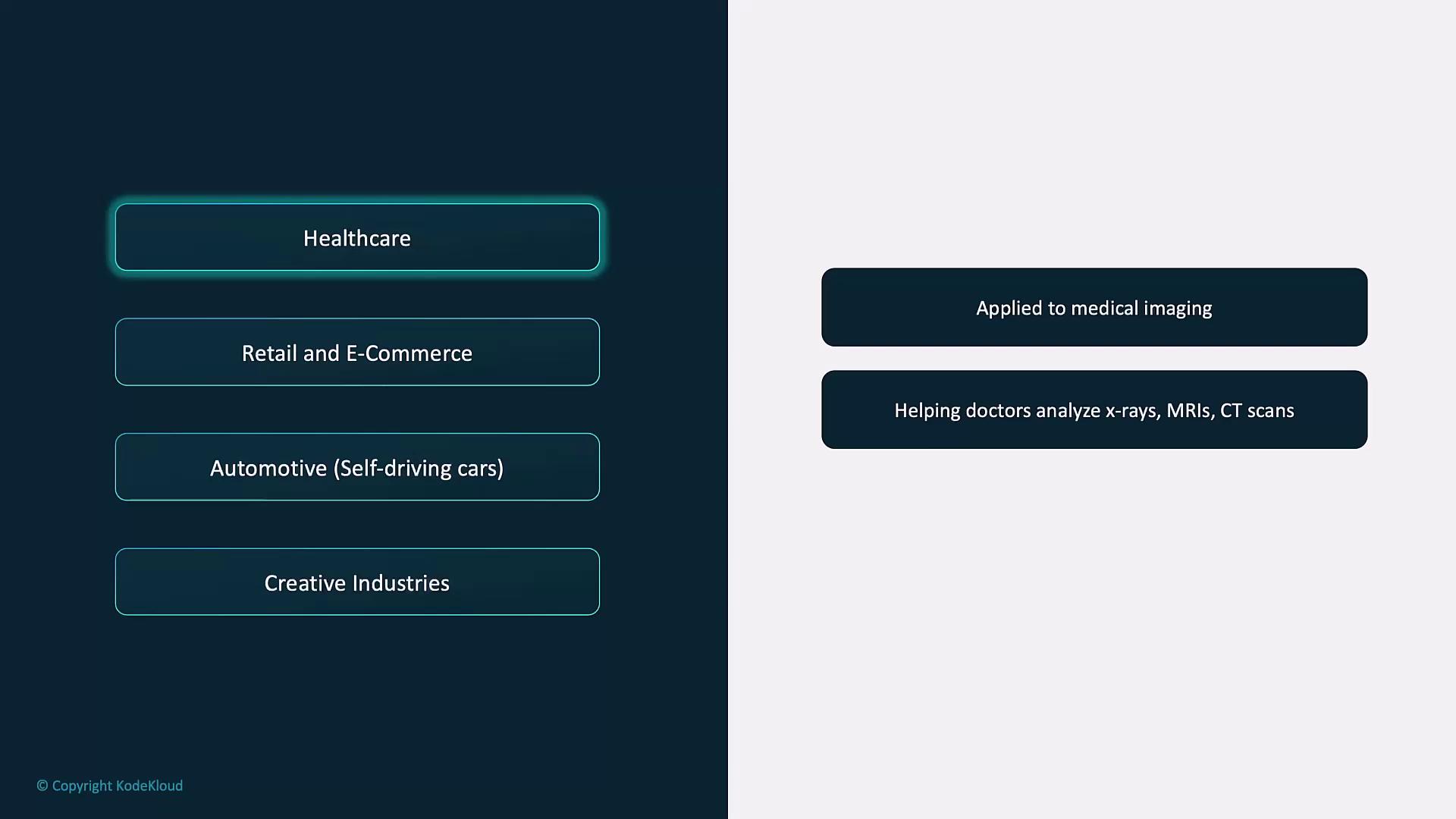

Use Cases Across Industries

| Industry | Application |
|---|---|
| Healthcare | AI-assisted radiology: analyzing X-rays, MRIs, and CT scans |
| Retail & E-Commerce | Inventory tagging, shopper behavior analysis, personalized ads |
| Automotive (Self-driving cars) | Obstacle detection, traffic-sign recognition, navigation |
| Creative Industries | Rapid concept art, marketing visuals, multimedia prototyping |
