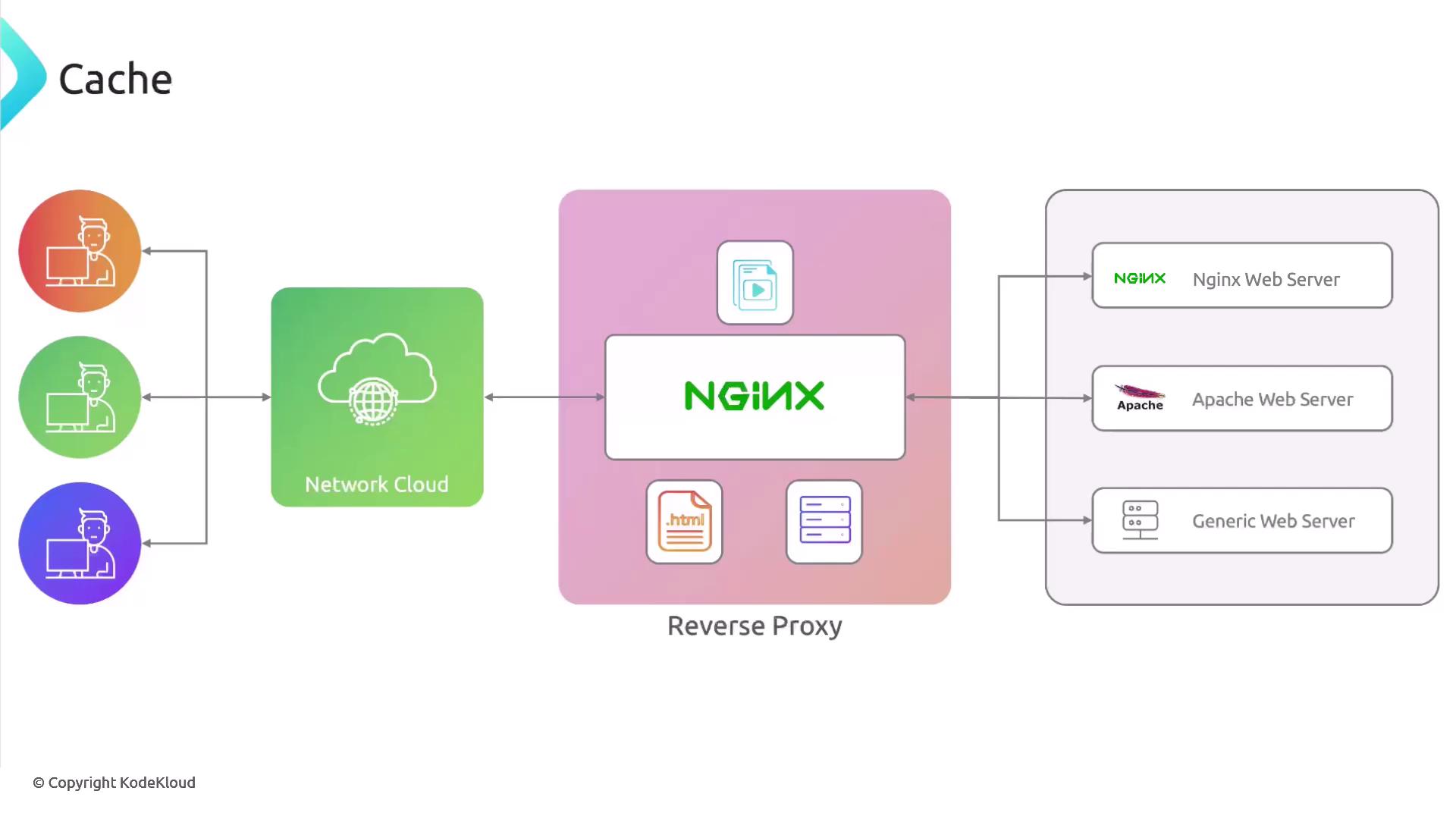

What Is a Reverse Proxy?

A reverse proxy sits between clients and one or more backend servers. It receives incoming requests, routes them to the appropriate server pool, and returns the server's response to the client. Common use cases include:

- Hiding backend server identities

- SSL/TLS offloading

- Caching static assets

- Distributing traffic across multiple application servers

A reverse proxy can improve security, performance, and scalability by centralizing request handling, encryption, and caching.

Reverse Proxy vs. Load Balancer

While both components sit in front of your servers, their primary responsibilities differ:

| Feature | Reverse Proxy | Load Balancer |
|---|---|---|
| Main Role | Hide backend details and forward traffic | Distribute traffic evenly across servers |
| SSL/TLS Offloading | Yes | Sometimes (depends on implementation) |
| Caching | Yes | Rarely |
| Application Firewall | Often integrated | Rarely |
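In practice, NGINX can fill both roles in the same configuration. The sketch below assumes three hypothetical application servers at 10.0.0.11, 10.0.0.12, and 10.0.0.13 on port 5000; the group name `app_servers` and the domain `example.com` are placeholders. Both blocks belong inside the `http` context.

```nginx
# Hypothetical pool of application servers on a private network.
upstream app_servers {
    server 10.0.0.11:5000;
    server 10.0.0.12:5000;
    server 10.0.0.13:5000;
}

server {
    listen 80;
    server_name example.com;   # placeholder domain

    location / {
        # Reverse proxy role: clients only ever talk to this server.
        # Load balancer role: each request goes to one pool member.
        proxy_pass http://app_servers;
    }
}
```

By default NGINX rotates requests round-robin across the servers in an `upstream` group; adding `least_conn;` inside the block sends each request to the server with the fewest active connections instead.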

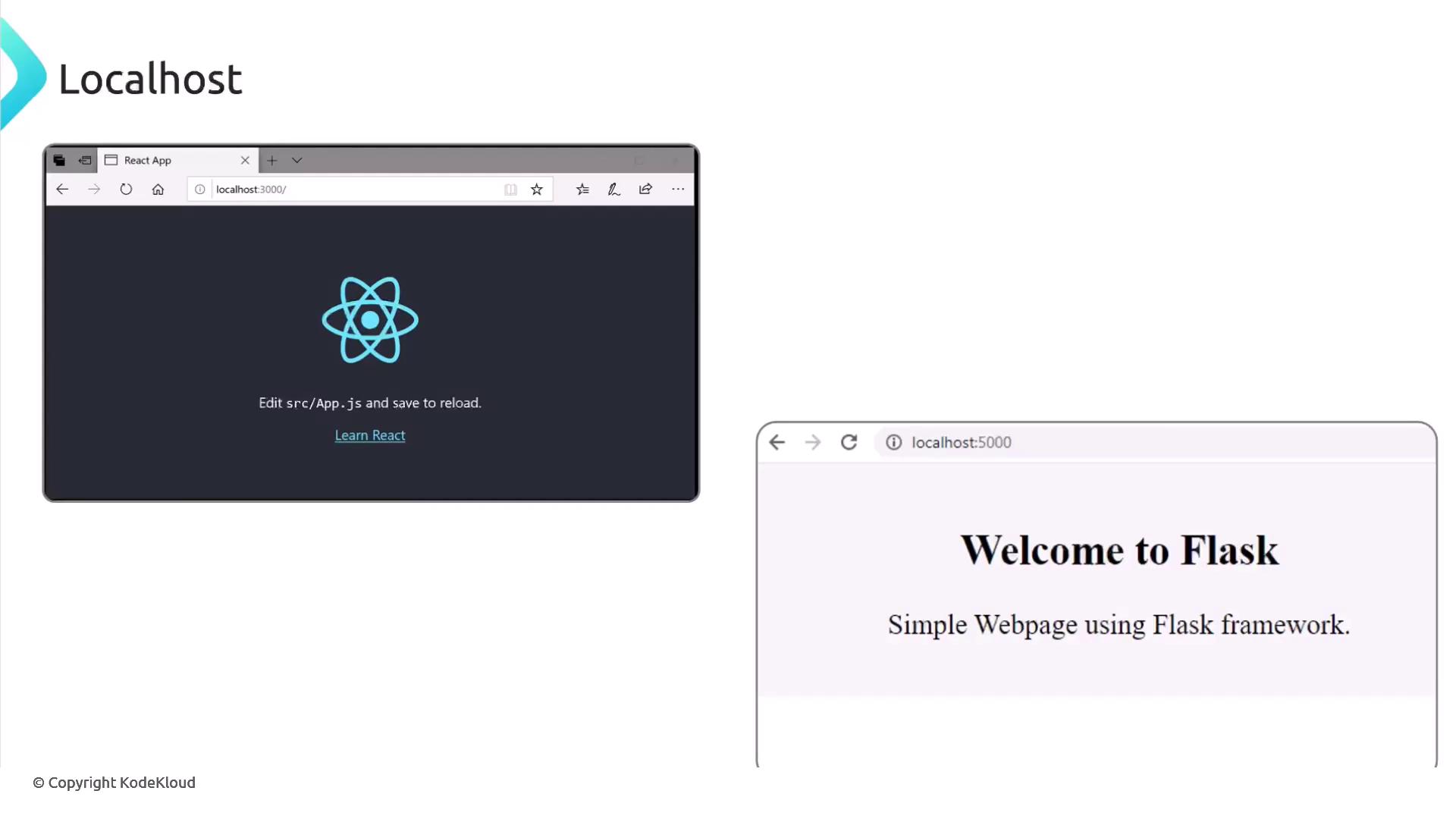

Placing Application Frameworks Behind a Reverse Proxy

Modern web apps often use frameworks like React (Node.js), Flask (Python), Rails (Ruby), or Laravel (PHP). By default, their development servers bind to local ports (e.g., 3000 for a React dev server, 5000 for Flask). In production:

- Only the reverse proxy is exposed to the Internet

- Backend servers remain isolated on private networks

Never expose application-framework ports directly to the public Internet. Always route through a hardened reverse proxy.

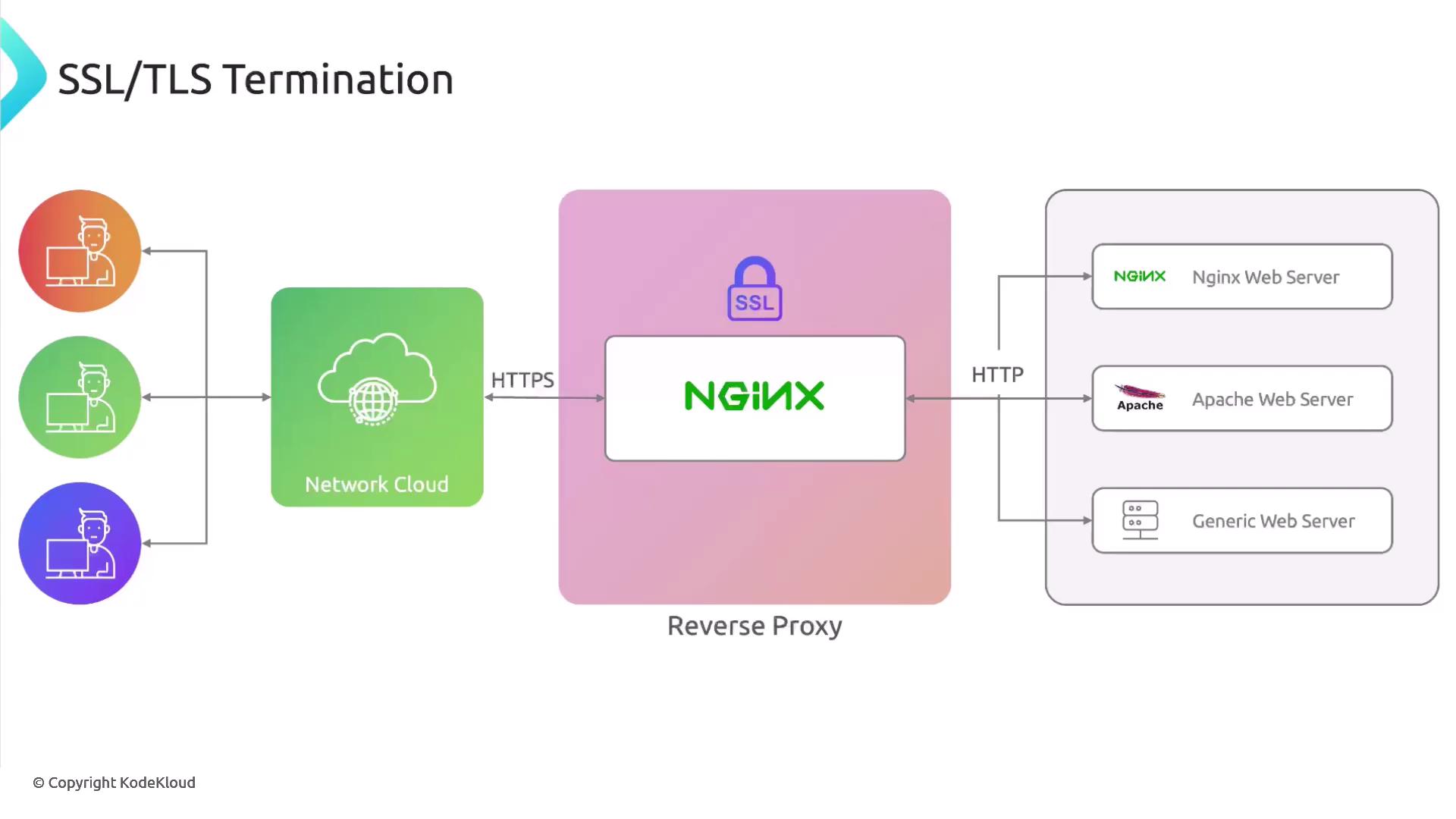

SSL/TLS Termination (Offloading)

Offloading SSL/TLS decryption to the reverse proxy reduces CPU load on your application servers. Clients connect to the proxy over HTTPS; the proxy decrypts each request, forwards it to the backends over plain HTTP, and encrypts the backend's response before returning it to the client.

1. Basic HTTP Reverse Proxy
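A minimal sketch of a plain HTTP reverse proxy, assuming a single backend (for example, a Flask app) listening on 127.0.0.1:5000 and the placeholder domain `example.com`. The `server` block goes inside the `http` context.

```nginx
server {
    listen 80;
    server_name example.com;   # placeholder domain

    location / {
        # Backend bound to the loopback interface only (assumed port 5000).
        proxy_pass http://127.0.0.1:5000;

        # Pass the original Host header and client address to the backend;
        # otherwise it sees the proxy's upstream address and IP instead.
        proxy_set_header Host              $host;
        proxy_set_header X-Real-IP         $remote_addr;
        proxy_set_header X-Forwarded-For   $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```

Binding the backend to 127.0.0.1 keeps its port unreachable from outside the host, in line with the advice above: only NGINX on port 80 faces the Internet.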

2. HTTPS Termination at the Proxy
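A sketch of HTTPS termination, assuming a certificate and private key already exist at the placeholder paths shown. The first block redirects plain HTTP clients to HTTPS; the second decrypts traffic and forwards it to the backend over plain HTTP.

```nginx
# Redirect all plain HTTP requests to HTTPS.
server {
    listen 80;
    server_name example.com;
    return 301 https://$host$request_uri;
}

server {
    listen 443 ssl;
    server_name example.com;

    # Placeholder certificate paths; substitute your own.
    ssl_certificate     /etc/ssl/certs/example.com.crt;
    ssl_certificate_key /etc/ssl/private/example.com.key;
    ssl_protocols       TLSv1.2 TLSv1.3;

    location / {
        # TLS ends here: the backend receives plain HTTP.
        proxy_pass http://127.0.0.1:5000;
        proxy_set_header Host            $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        # Tell the backend the original request arrived over HTTPS.
        proxy_set_header X-Forwarded-Proto https;
    }
}
```

Because the backend only sees plain HTTP, frameworks that build absolute URLs or enforce HTTPS usually need to be told to trust `X-Forwarded-Proto` (in Flask, for example, via Werkzeug's `ProxyFix` middleware).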

3. End-to-End TLS Encryption

When compliance requires encryption all the way to your application servers, use an `https://` upstream URL in `proxy_pass` so that NGINX re-encrypts traffic before forwarding it to the backends.
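A sketch of end-to-end TLS, assuming a hypothetical backend serving HTTPS on 10.0.0.11:8443 with a certificate issued for `app.internal` by a private CA whose certificate is stored at `/etc/nginx/ssl/backend-ca.crt` (all placeholders):

```nginx
server {
    listen 443 ssl;
    server_name example.com;

    ssl_certificate     /etc/ssl/certs/example.com.crt;
    ssl_certificate_key /etc/ssl/private/example.com.key;

    location / {
        # The https:// scheme makes NGINX open a new TLS connection upstream.
        proxy_pass https://10.0.0.11:8443;

        # Verify the backend certificate against the (assumed) private CA.
        proxy_ssl_verify              on;
        proxy_ssl_trusted_certificate /etc/nginx/ssl/backend-ca.crt;
        proxy_ssl_verify_depth        2;

        # Send SNI and check the certificate against this hypothetical name
        # instead of the bare IP address in proxy_pass.
        proxy_ssl_server_name on;
        proxy_ssl_name        app.internal;

        proxy_set_header Host              $host;
        proxy_set_header X-Forwarded-Proto https;
    }
}
```

`proxy_ssl_verify` is off by default, so without it NGINX encrypts the backend connection but does not check which server is on the other end.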

Caching to Reduce Backend Load

Caching static files and other cacheable responses (images, CSS, JSON) at the proxy layer reduces latency and backend CPU usage. NGINX can act as a cache server, serving frequently requested content directly from local storage instead of contacting the backend.
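A sketch of proxy caching, assuming a writable cache directory at `/var/cache/nginx/app`, a zone named `app_cache`, and static assets under `/static/` (all illustrative). The `proxy_cache_path` directive must sit in the `http` context, outside any `server` block.

```nginx
# 10 MB of shared memory for cache keys, up to 1 GB on disk;
# entries not accessed for 60 minutes are removed.
proxy_cache_path /var/cache/nginx/app levels=1:2 keys_zone=app_cache:10m
                 max_size=1g inactive=60m use_temp_path=off;

server {
    listen 80;
    server_name example.com;

    location /static/ {
        proxy_cache app_cache;
        # Cache 200/302 responses for 10 minutes and 404s for 1 minute.
        proxy_cache_valid 200 302 10m;
        proxy_cache_valid 404     1m;
        # Serve a stale copy if the backend errors out or times out.
        proxy_cache_use_stale error timeout updating http_500 http_502 http_503 http_504;
        # Expose the cache result (e.g. HIT, MISS, EXPIRED) for debugging.
        add_header X-Cache-Status $upstream_cache_status;

        proxy_pass http://127.0.0.1:5000;
    }
}
```

By default NGINX caches only GET and HEAD responses and skips responses that carry `Set-Cookie` or `Cache-Control: private`, `no-cache`, or `no-store`, so backends stay in control of what may be cached.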