What Is Rate Limiting?

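Imagine you’re driving in a 100 km/h zone at 90 km/h, well under the limit, while another car speeds past at 130 km/h. Rate limiting is the speed enforcement of a web server: clients that stay within the limit pass through untouched, while those that exceed it are slowed down or turned away.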

Why Rate Limiting Matters

- Protects against DDoS (Distributed Denial of Service)

- Thwarts brute-force password guessing

- Prevents large-scale web scraping

- Controls API abuse on heavily used endpoints, such as those of social networks

Brute-Force Attacks

Automated scripts try credentials repeatedly—targeting login pages until they succeed.

Web Scraping

Scripts extract valuable data from sites (e.g., copying car listings from Autotrader).

API Overuse

Endpoints (like Instagram’s post, like, follow, DM APIs) must limit calls to stay responsive.

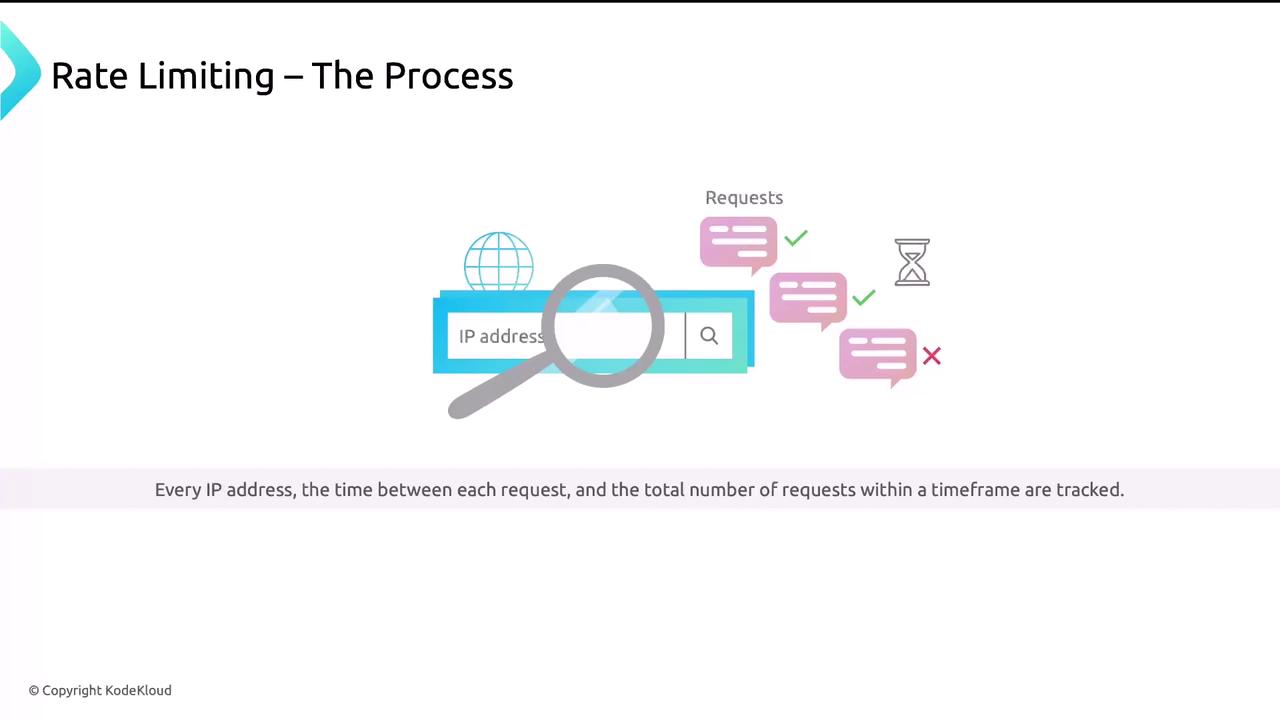

Rate-limiting rules are typically based on:

- Client IP address

- Interval between requests

- Total requests within a time window

NGINX Rate Limiting Methods

NGINX provides two core rate-limiting mechanisms:

| Method | Purpose | Core Directives |
|---|---|---|
| Request rate limiting | Limit requests per time interval | limit_req_zone, limit_req |
| Connection rate limiting | Limit simultaneous connections per IP | limit_conn_zone, limit_conn |

1. Request Rate Limiting

Implements a leaky bucket algorithm, allowing a defined number of requests per time unit. Excess requests are delayed or rejected. Rejected requests receive HTTP 503 by default; set limit_req_status to 429 to return "Too Many Requests" instead.

Adjust the rate parameter, expressed in r/s or r/m, depending on expected traffic.

http context:

server block:
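The configuration for the two contexts named above might look like the following sketch. The zone name `req_per_ip`, the `/login/` path, and the numeric values (10m, 10r/s, burst of 20) are illustrative assumptions rather than values from the original article, and the fragment omits the other parts of a complete nginx.conf.

```nginx
http {
    # http context: define a shared-memory zone keyed on the client IP.
    # "req_per_ip" is a hypothetical zone name; 10m and 10r/s are example values.
    limit_req_zone $binary_remote_addr zone=req_per_ip:10m rate=10r/s;

    server {
        listen 80;

        # server block: apply the zone to a hypothetical login endpoint.
        location /login/ {
            # Allow short bursts of up to 20 extra requests, served without delay.
            limit_req zone=req_per_ip burst=20 nodelay;

            # Return 429 instead of the default 503 for rejected requests.
            limit_req_status 429;
        }
    }
}
```

Without `nodelay`, requests within the burst are queued and released at the configured rate. With it, they are served immediately for as long as burst slots remain, and anything beyond the burst is rejected.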

2. Connection Rate Limiting

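Restricts the number of concurrent connections per client IP. This helps contain clients that hold many connections open at once, as in slow-loris style attacks, although NGINX counts a connection only once the full request header has been read. SYN floods occur at the TCP layer, before NGINX sees a request, and need kernel- or network-level mitigation.

Ensure the shared memory zone size (e.g., 10m) is sufficient for the number of tracked IPs: once the zone is exhausted, NGINX returns an error to all further requests.

http context: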

server block:
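A possible shape for the two contexts named above is sketched here. The zone name `conn_per_ip`, the `/downloads/` path, and the limit of 2 are assumptions chosen for illustration, and the fragment is not a complete nginx.conf.

```nginx
http {
    # http context: shared-memory zone tracking connections per client IP.
    # "conn_per_ip" is a hypothetical zone name; 10m is an example size.
    limit_conn_zone $binary_remote_addr zone=conn_per_ip:10m;

    server {
        listen 80;

        # server block: cap concurrent connections on a hypothetical download path.
        location /downloads/ {
            # At most 2 simultaneous connections per client IP.
            limit_conn conn_per_ip 2;

            # Return 429 instead of the default 503 when the cap is exceeded.
            limit_conn_status 429;
        }
    }
}
```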

Raise the limit_conn value (for example, above 2) to allow more parallel connections.

Implement these NGINX configurations to safeguard your web server against abuse and ensure consistent performance under load.