PyTorch

Building and Training Models

Introduction to Neural Networks

Before diving into model training with PyTorch, it is important to understand how neural networks work. These models, loosely inspired by the human brain, are at the core of many modern artificial intelligence applications, including prediction, pattern recognition, and problem-solving.

Neural networks learn by analyzing data patterns, much like the human brain improves skills with practice. They excel at identifying hidden relationships within large datasets, which makes them essential for applications ranging from image classification to natural language processing.

Overview

Neural networks consist of layers of interconnected neurons, where each neuron is a simple computational unit. As data moves through these layers, each layer transforms the output of the previous one into a more useful representation, until the final layer produces a prediction.

How Neural Networks Work

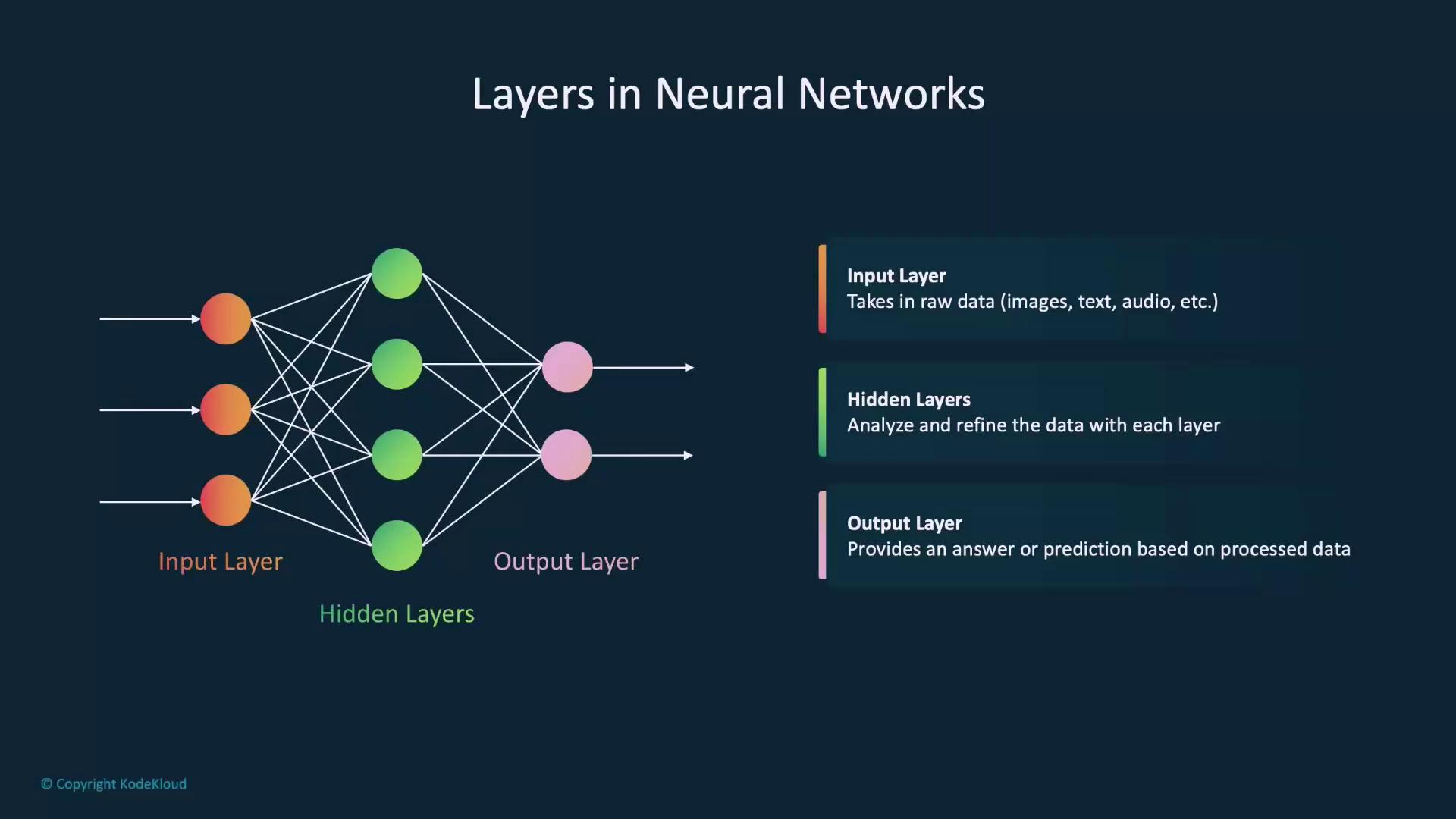

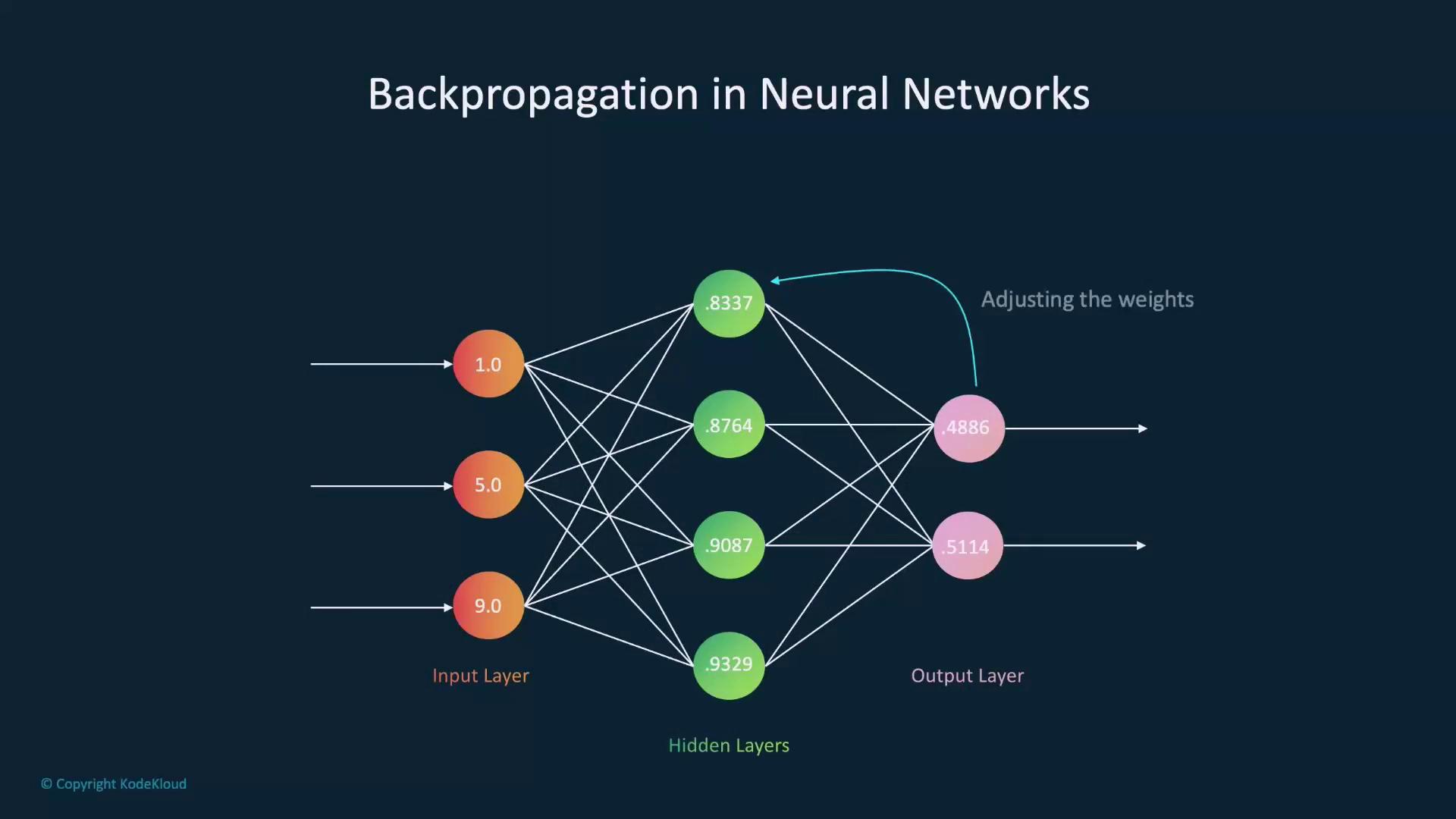

A neural network comprises multiple layers of neurons:

- Input Layer: Receives the raw data, such as images, text, or audio.

- Hidden Layers: Perform the majority of data analysis by identifying patterns and extracting features.

- Output Layer: Delivers the final predictions or decisions based on the processed information.

Each neuron receives inputs, multiplies them by weights, adds a bias, and passes the result through an activation function. The weights are not predefined rules: they are learned from data during training. By combining many of these simple operations, the network can tackle complex tasks by breaking them down into simpler, manageable steps.
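To make the neuron description concrete, here is a minimal pure-Python sketch of a single neuron and of a tiny network with two input values, a hidden layer of two neurons, and one output neuron. The function names (`neuron`, `layer`, `forward`) and all weights and biases are hypothetical, hand-picked for illustration; in a real network the weights would be learned during training.

```python
import math


def relu(x: float) -> float:
    """Pass positive values through unchanged; clamp negatives to zero."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias, activation):
    """One neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


def layer(inputs, weight_rows, biases, activation):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


def forward(x):
    # Hypothetical hand-picked parameters, not learned values.
    hidden = layer(x, [[0.5, -0.2], [0.3, 0.8]], [0.1, -0.1], relu)  # hidden layer
    output = layer(hidden, [[1.0, -1.0]], [0.0], sigmoid)            # output layer
    return output[0]


print(forward([1.0, 2.0]))  # roughly 0.168
```

Here the hidden layer produces `[0.2, 1.8]`, and the output neuron turns their weighted sum (-1.6) into a value between 0 and 1.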

A Practical Example

Consider a network that classifies images as either dogs or cats:

- Input Stage: The image is fed into the input layer.

- Feature Extraction: Hidden layers extract and refine key features.

- Decision Making: The output layer classifies the image based on the processed data.
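These three stages map directly onto a small PyTorch model. The following is a minimal sketch that assumes 32×32 RGB input images and two output classes, with index 0 meaning "cat" and index 1 meaning "dog" (a labeling chosen here purely for illustration). The layer sizes are hypothetical, and the model is untrained, so its predictions are meaningless until it is fit to labeled data.

```python
import torch
from torch import nn

# Hypothetical input size: 32x32 RGB images.
IMAGE_SHAPE = (3, 32, 32)
CLASSES = ["cat", "dog"]  # Illustrative label order.

model = nn.Sequential(
    nn.Flatten(),                  # Input stage: turn each image into a flat vector
    nn.Linear(3 * 32 * 32, 64),    # Feature extraction: hidden layer
    nn.ReLU(),
    nn.Linear(64, len(CLASSES)),   # Decision making: one score (logit) per class
)

images = torch.rand(4, *IMAGE_SHAPE)  # Random stand-in for a batch of 4 real images
logits = model(images)                # Shape: (4, 2)
probs = logits.softmax(dim=1)         # Convert scores to class probabilities
predictions = [CLASSES[i] for i in probs.argmax(dim=1).tolist()]
print(logits.shape, predictions)
```

Softmax turns the two scores for each image into probabilities that sum to 1, and `argmax` picks the more likely class.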

Activation Functions: The Neuron Gatekeepers

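Activation functions transform each neuron's weighted input into its output, controlling how strongly the neuron "activates." Their most important job is to introduce non-linearity: without them, a stack of layers would collapse into a single linear transformation and could not model complex patterns. Common functions include: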

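- Sigmoid: A smooth S-shaped curve that maps any input into the range (0, 1), which is why it is often used for binary classification outputs.

- ReLU (Rectified Linear Unit): Popular in modern networks due to its simplicity and effectiveness; it passes positive values through unchanged and outputs zero for negative values.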
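Both functions are available directly in PyTorch as `torch.relu` and `torch.sigmoid` (and as the modules `nn.ReLU` and `nn.Sigmoid` for use inside models). This minimal sketch applies them to a handful of arbitrary sample values so their behavior can be compared side by side.

```python
import torch

# Arbitrary sample inputs spanning negative, zero, and positive values.
x = torch.tensor([-2.0, -0.5, 0.0, 0.5, 2.0])

relu_out = torch.relu(x)        # Negatives become 0; positives pass through unchanged
sigmoid_out = torch.sigmoid(x)  # Every value squashed into the range (0, 1); 0 maps to 0.5

print(relu_out)
print(sigmoid_out)
```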

Learning and Training in Neural Networks

During training, a neural network makes predictions on the input data, and a loss function measures how far those predictions are from the correct answers. The network then adjusts the connections (weights) between neurons to reduce that error. Repeating this process over many iterations improves the network over time, similar to learning from mistakes.
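The predict, measure, and adjust cycle can be sketched in plain Python with a single weight. This is a minimal illustration under stated assumptions: hypothetical toy data that follows y = 2x, mean squared error as the loss, a gradient derived by hand, and a hand-picked learning rate of 0.01. In PyTorch, the gradient would be computed automatically instead.

```python
# Hypothetical toy dataset that follows y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

w = 0.0              # Start with an uninformed weight
learning_rate = 0.01

for epoch in range(200):
    # 1. Make predictions with the current weight.
    preds = [w * x for x in xs]
    # 2. Measure how wrong they are (mean squared error).
    loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)
    # 3. Gradient of the loss with respect to w: mean of 2 * (pred - y) * x.
    grad = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / len(xs)
    # 4. Nudge the weight in the direction that reduces the loss.
    w -= learning_rate * grad

print(f"learned w = {w:.4f}, final loss = {loss:.6f}")
```

With these numbers, each update shrinks the gap between `w` and the true value 2 by a constant factor of 0.85, so the weight converges to 2 well within 200 iterations.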

Backpropagation: Fine-Tuning the Model

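Backpropagation is the technique that makes this adjustment possible. Starting from the loss, it propagates the error backwards through the network and uses the chain rule to compute how much each weight contributed to it (the gradient). An optimizer such as gradient descent then uses these gradients to update the weights, refining the model with each iteration.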
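In PyTorch, backpropagation is handled by autograd: calling `loss.backward()` computes the gradient of the loss with respect to every tensor created with `requires_grad=True`, and an optimizer such as `torch.optim.SGD` then applies the update. The sketch below assumes hypothetical toy data following y = 2x, a single weight starting at 0, and a learning rate of 0.01, so the result can be checked by hand: the gradient mean(2 * (w*x - y) * x) equals -30 at w = 0.

```python
import torch

# Hypothetical toy data that follows y = 2x.
x = torch.tensor([1.0, 2.0, 3.0, 4.0])
y = torch.tensor([2.0, 4.0, 6.0, 8.0])

# requires_grad=True asks autograd to track operations involving w.
w = torch.tensor(0.0, requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.01)

# Forward pass: predictions and mean squared error loss.
loss = ((w * x - y) ** 2).mean()

# Backward pass: autograd applies the chain rule from the loss back to w.
optimizer.zero_grad()
loss.backward()
print(w.grad)    # d(loss)/dw, which is -30 at w = 0

# Update step: w <- w - lr * w.grad
optimizer.step()
print(w.item())  # approximately 0.3 (float32 rounding)
```

In a full network the same two calls, `loss.backward()` and `optimizer.step()`, update every weight at once.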

Types of Neural Networks

Neural networks come in various architectures, each tailored for specific types of tasks. Here is a quick overview:

| Type | Use Case | Description |
|---|---|---|
| Feed-Forward Neural Network | Standard processing from input to output | Data flows in one direction, without loops |
| Convolutional Neural Network (CNN) | Image analysis and pattern recognition | Excels at processing data with a grid-like topology, such as images |
| Recurrent Neural Network (RNN) | Sequential data processing (e.g., text, audio) | Ideal for time-series or other sequence-dependent data |
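Each architecture in the table has a corresponding building block in `torch.nn`. The following sketch uses arbitrary, hypothetical sizes (a batch of 8, 10 input features, 32×32 RGB images, and sequences of 12 steps with 5 features each) and simply runs random data through one of each to show the input and output shapes. A real CNN or RNN would stack several such layers and add a task-specific output layer.

```python
import torch
from torch import nn

# Feed-forward: data flows straight from input to output.
feed_forward = nn.Sequential(nn.Linear(10, 16), nn.ReLU(), nn.Linear(16, 1))
ff_out = feed_forward(torch.rand(8, 10))   # (batch=8, features=10) -> (8, 1)

# CNN building block: a convolution slides small filters over a grid such as an image.
conv = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, padding=1)
conv_out = conv(torch.rand(8, 3, 32, 32))  # (8, 3, 32, 32) -> (8, 16, 32, 32)

# RNN: processes a sequence step by step, carrying a hidden state forward.
rnn = nn.RNN(input_size=5, hidden_size=20, batch_first=True)
rnn_out, h_n = rnn(torch.rand(8, 12, 5))   # 12 time steps -> outputs of shape (8, 12, 20)

print(ff_out.shape, conv_out.shape, rnn_out.shape, h_n.shape)
```

Note that `batch_first=True` only changes the layout of the RNN's input and output sequences; the final hidden state `h_n` keeps the shape (num_layers, batch, hidden_size), here (1, 8, 20).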

Key Takeaways

- Neural networks draw inspiration from the human brain, leveraging interconnected neurons to recognize patterns and make predictions.

- The layered structure, comprising input, hidden, and output layers, lets the network process data in successive stages.

- Activation functions shape each neuron's output and introduce the non-linearity that lets networks learn complex patterns.

- Training involves iterative weight adjustments, with backpropagation playing a pivotal role in refining network accuracy.

- Various architectures like CNNs and RNNs are optimized for tasks such as image recognition and sequential data processing.

Next Steps: Implementing Neural Networks with PyTorch

Now that you have a solid understanding of neural network fundamentals, you're ready to dive into implementing these models using PyTorch. In the upcoming sections, we will walk through the process of building and training neural networks with code examples, ensuring you can leverage PyTorch effectively for your projects.

For more detailed guidance on neural network implementations and advanced techniques, be sure to explore additional resources and documentation on PyTorch's official site.

Happy coding and learning!
