PyTorch

Model Deployment and Inference

Deployment Options

Congratulations on reaching this stage of the course!

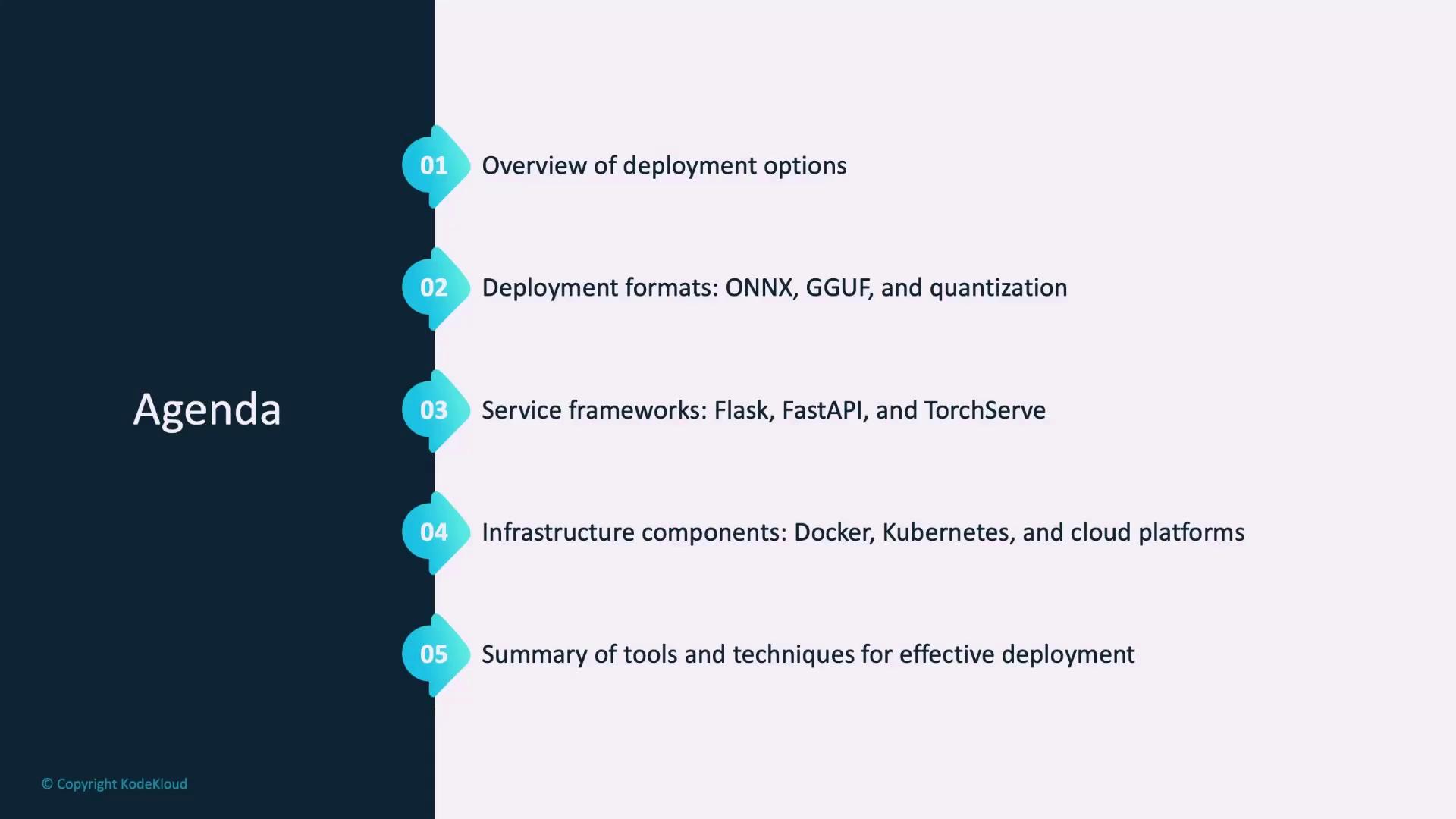

After building and evaluating three models, it's time to share your best creation with your client at Awesome AI. In this lesson, we’ll provide an overview of model deployment options for PyTorch. You’ll learn about various deployment formats, serving frameworks, and essential infrastructure components. While some topics will be explored in further detail in later lessons, this guide offers a comprehensive outline to help you get started.

Deployment Formats

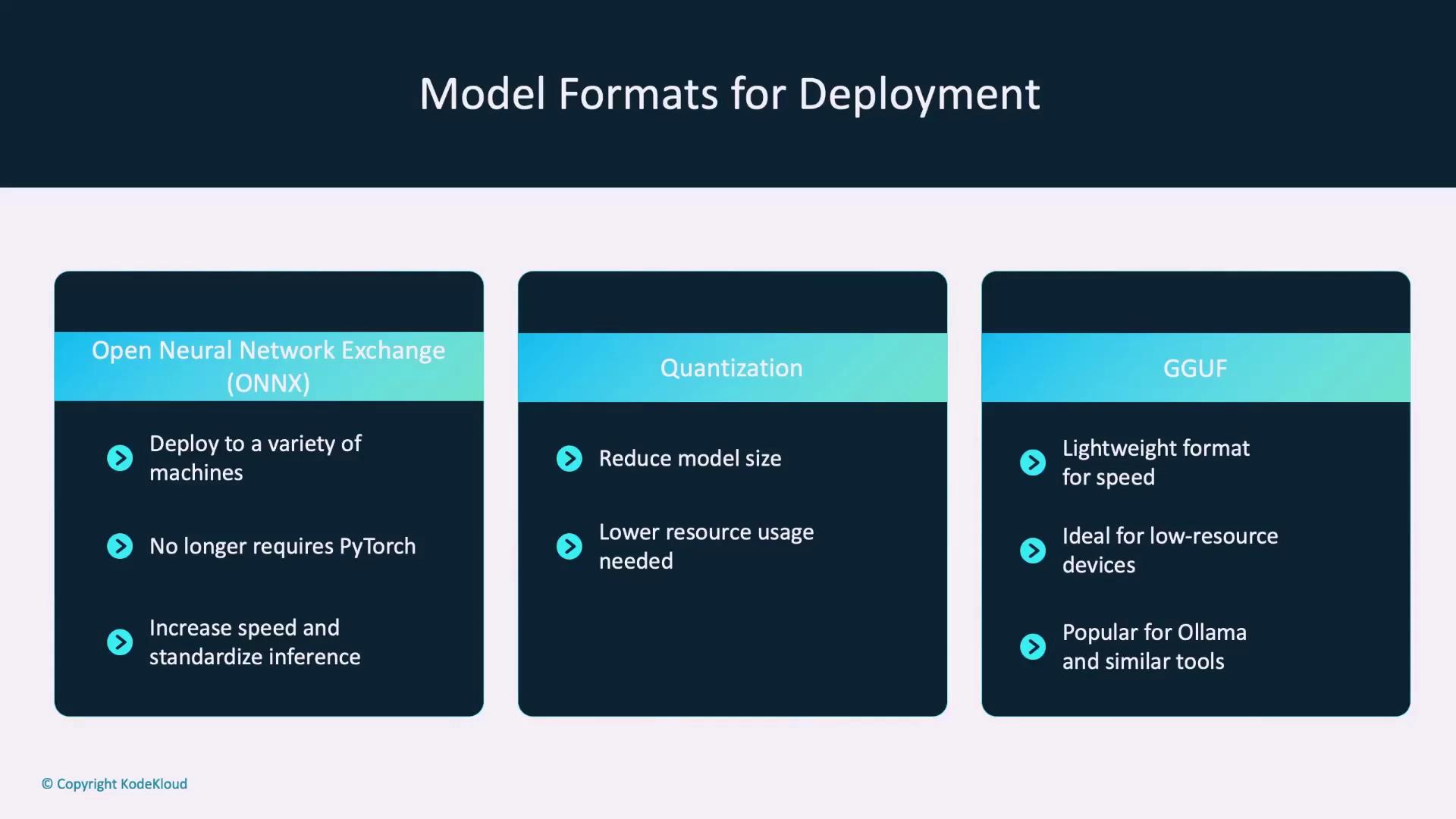

When deploying models, you don't have to stick to the native PyTorch format. Alternative formats can optimize your model's performance and extend its compatibility.

ONNX

ONNX (Open Neural Network Exchange) is a widely adopted format that enables cross-framework model usage. Converting a PyTorch model to ONNX facilitates integration into systems that do not natively support PyTorch. This conversion also standardizes model inference, making it suitable for diverse platforms—from centralized servers to edge devices.

Quantization

Quantization reduces the size and computational requirements of your model, making it especially useful for devices with limited resources such as mobile phones or IoT devices. Techniques like Int8, Dynamic, and Mixed Precision Quantization can significantly boost inference speed with minimal impact on accuracy.

Note

Quantization is a complex topic and may warrant its own dedicated lesson.

GGUF

GGUF is a lightweight format optimized for low-latency inference, ideal for edge and mobile deployments where computational resources are limited. It has recently gained popularity, especially with tools like Running Local LLMs With Ollama, which allow large language models (LLMs) to run on devices with just a CPU.

Serving Frameworks

To make your PyTorch models accessible, you need serving frameworks that expose your model via web endpoints. When a request is sent over HTTP, these endpoints return a prediction from the model. Here are three popular options:

Flask

Flask is a lightweight Python web framework known for its simplicity. It is ideal for small-scale deployments where extensive features are not necessary. More in-depth coverage of Flask will be provided in a later lesson.

FastAPI

Designed for high-performance applications, FastAPI is perfect for creating APIs that require asynchronous execution. It efficiently handles multiple requests simultaneously and offers a robust feature set, making it a favorite for serving models.

TorchServe

TorchServe is crafted specifically for serving PyTorch models. It includes useful features such as model versioning and inference batching, which facilitate managing model updates and optimizing performance. However, it may not offer the same level of flexibility as Flask or FastAPI.

Deployment Infrastructure

In addition to selecting the proper model format and serving framework, containerization and scalable deployment tools are essential for successful deployment.

Docker

Docker packages your application along with its dependencies into containers, ensuring consistent performance across your local machine, servers, or cloud environments. Key benefits of Docker include:

- Easy sharing and deployment of your model

- Simplified scaling and dependency management

Kubernetes

Kubernetes is an orchestration platform for managing containerized applications, making it indispensable for large-scale deployments. Its standout features include:

- Autoscaling containers based on traffic demands

- Rolling updates for seamless version transitions without downtime

- Resource monitoring and management to optimize performance

Cloud Platforms

Deploying models to the cloud simplifies scaling and reduces the complexity of managing infrastructure. Popular cloud platforms include:

- AWS SageMaker: A fully managed service that handles both training and deployment, eliminating the need for infrastructure management.

- Google Vertex AI: Offers versatile options including serverless hosting for efficient deployment.

- Azure ML: Known for robust MLOps support and suitability for hybrid and edge deployments.

Best Practices for Model Deployment

Deploying your PyTorch model effectively requires a strategic approach. Consider the following best practices to ensure a robust deployment.

Model Preparation

- Optimize your model by converting it to ONNX for improved cross-platform compatibility.

- Apply quantization techniques to minimize model size and reduce latency.

Testing

- Test your model thoroughly in a staging environment that closely mirrors production settings to verify its accuracy and performance.

Version Control

- Use semantic versioning to maintain and track different versions of your models. This practice enhances reproducibility and simplifies change management.

Monitoring and Maintenance

- Keep track of vital metrics such as latency, throughput, and error rates.

- Regularly monitor both input data variations and model outputs to detect any performance degradation.

Infrastructure and Scalability

- Package your model with Docker along with all its dependencies.

- Leverage Kubernetes for efficient resource management, automated scaling, and hassle-free deployment.

- Select a cloud platform (AWS, Google, or Azure) that best matches your application's requirements.

Security

- Secure your APIs using HTTPS, and implement robust authentication and authorization mechanisms.

- Adhere to data privacy standards such as GDPR, ensuring that sensitive information is encrypted during transit and at rest.

Summary

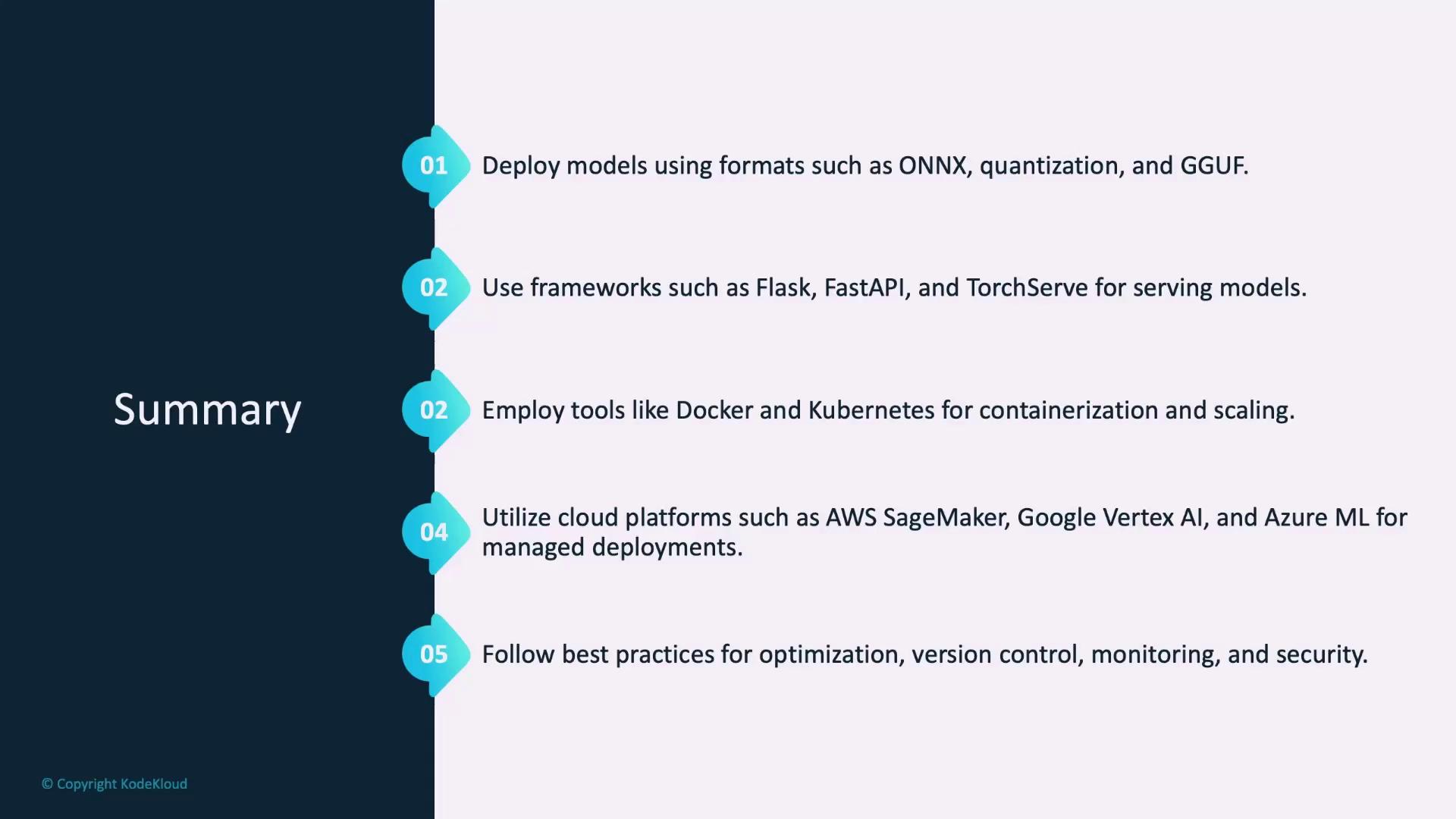

In summary, deploying PyTorch models involves multiple layers of decision-making:

- Deployment Formats: Use ONNX for cross-platform support, quantization for performance optimization, and GGUF for low-latency inference in resource-constrained environments.

- Serving Frameworks: Choose from Flask and FastAPI for flexible API-based serving or TorchServe for PyTorch-specific features like versioning and inference batching.

- Infrastructure Tools: Docker ensures consistent, containerized deployments, while Kubernetes provides scalability and seamless updates through automated resource management.

- Cloud Platforms: Platforms like AWS SageMaker, Google Cloud Vertex AI, and Azure ML simplify the deployment process with managed services that handle scaling and infrastructure.

Deploying models effectively requires a well-rounded approach that considers model optimization, infrastructure management, performance monitoring, and security. With these tools and best practices, your PyTorch models will be well-equipped for real-world applications.

Let's move on to the demo where we will see some of these concepts in action.

Watch Video

Watch video content