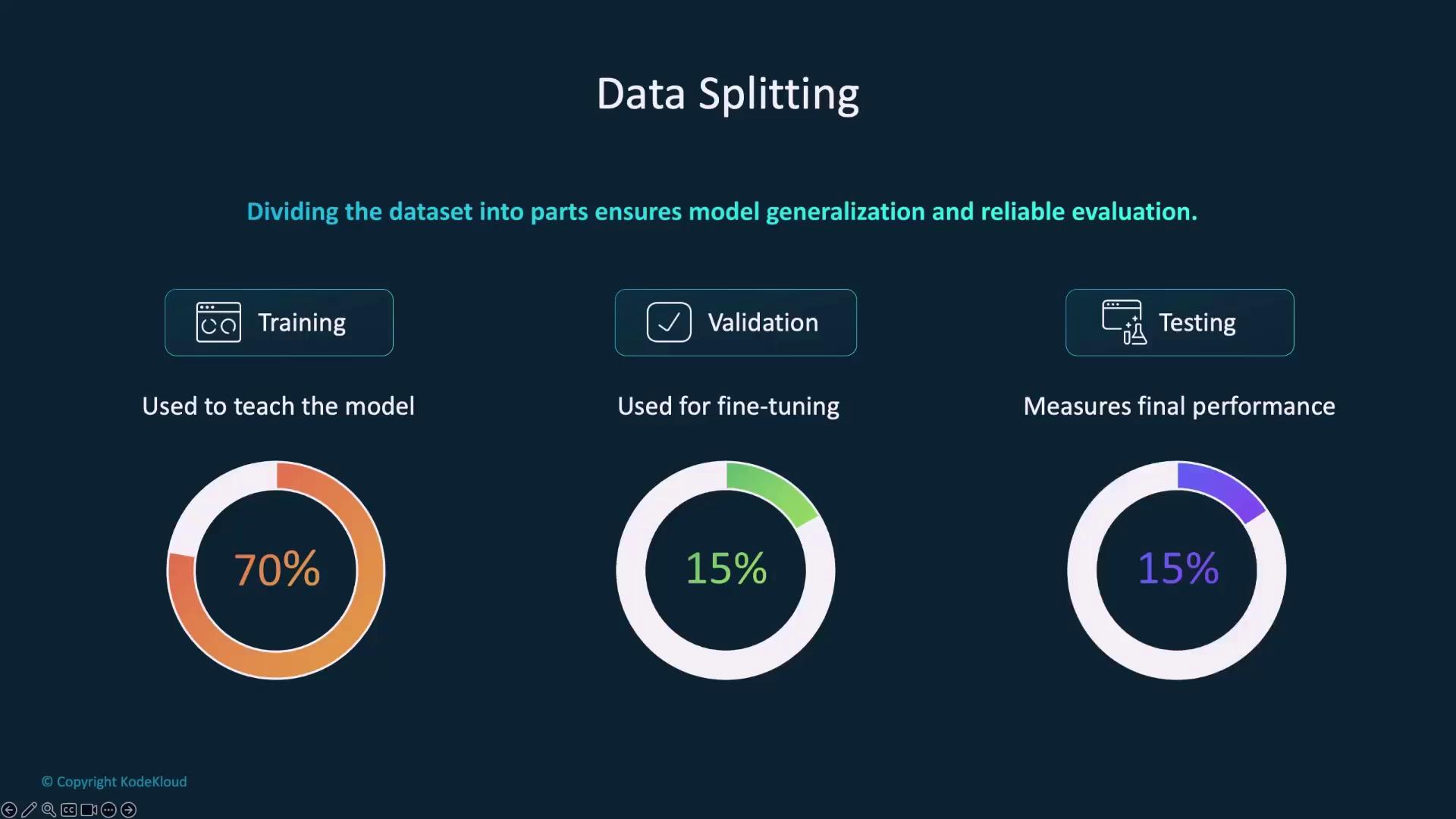

Data Splitting

Data splitting divides your dataset into training, validation, and testing subsets. This is essential for ensuring that your model generalizes well and that performance metrics are reliable. For instance, you might use a 70/15/15 ratio for training, validation, and testing respectively, although you can adjust these percentages to meet your project requirements.

RandomSplit utility from the torch.utils.data module can help automate this process by randomly dividing the dataset into the desired sizes. Here’s an example:

Keep in mind that

RandomSplit produces different splits every time it is executed. For reproducible results, manage data tracking and versioning separately.Dataset Versioning

Versioning is vital for ensuring reproducibility in model training. By recording the exact data used (for example, via a CSV annotations file), you can easily reproduce and verify your experiments—even if the underlying dataset changes. Tools like DVC or Git are commonly used for this purpose.Data Cleaning and Preprocessing

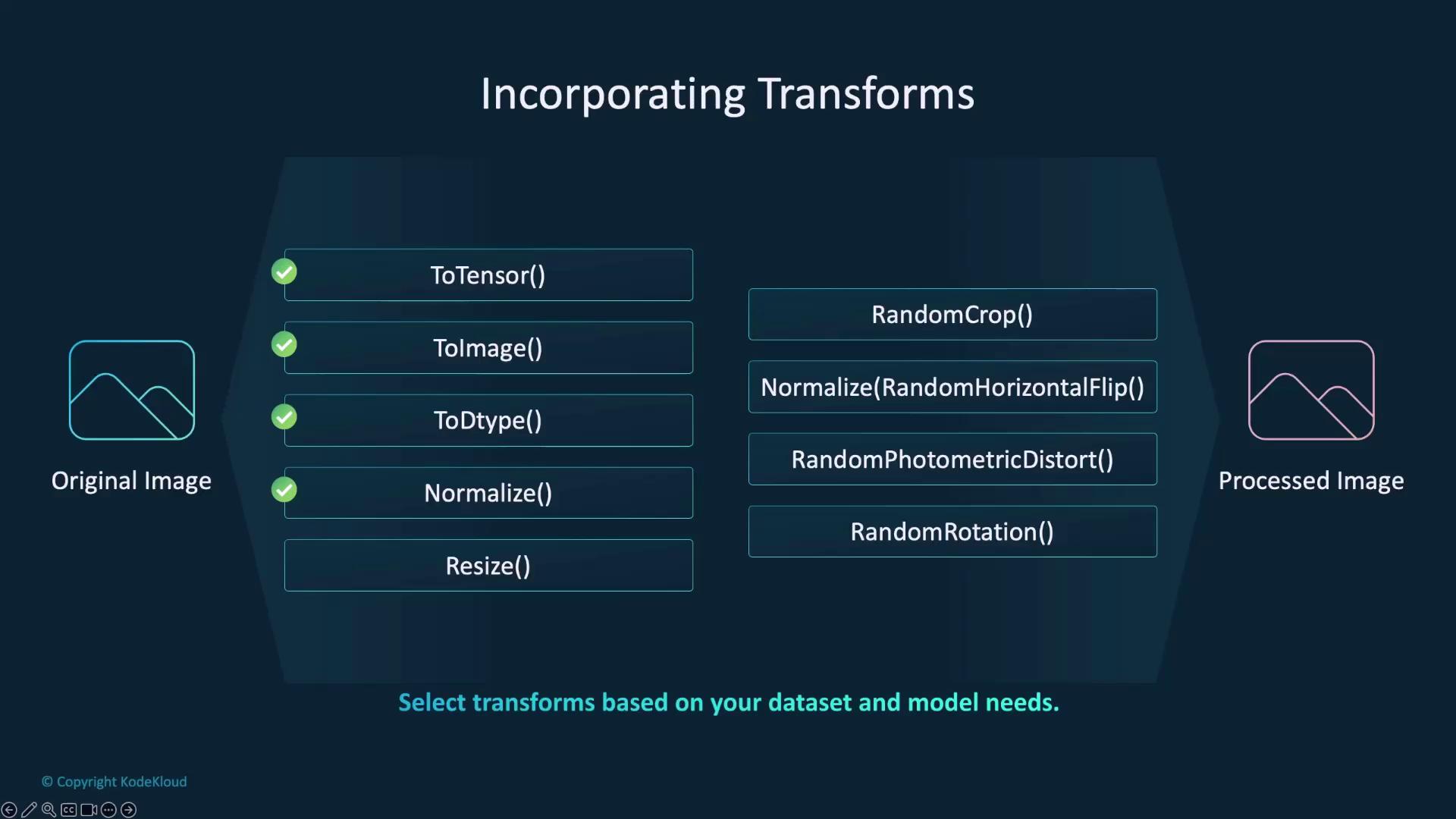

Before training your image classification model, cleaning and preprocessing the data is key. Data cleaning removes duplicate, blurry, or irrelevant images that could confuse the model, while preprocessing standardizes the data by resizing images and normalizing pixel values. Important transformations for image classification include:- Conversion to tensor using

ToTensor() - Normalization for consistent pixel value ranges

Creating a PyTorch Dataset

PyTorch supports both preloaded and custom datasets. For example, if you’re using a preloaded dataset like CIFAR10, make sure to review its documentation for details on subset flags. Here’s how you can set up CIFAR10 with basic transformations:RandomSplit.

Data Versioning and Tracking Approaches

Documenting your data is crucial. You have a couple of approaches:-

Annotations File

Use an annotations file to record each image’s path along with its corresponding label: -

Folder Organization

Organize your dataset into folders for training, validation, and testing, with subfolders for each class label:

Using an annotations file offers flexibility, as it allows managing datasets without loading all images into memory at once.

Data Transformations

Different subsets of your dataset may require unique transformations. Training transforms often include data augmentations, while validation and testing transforms remain minimal for consistency.Training Transformation

Validation Transformation

Creating DataLoaders

DataLoaders are essential for batching and efficiently feeding data into your model during training and evaluation. Below is an example of how to create DataLoaders for your custom datasets:Summary

This lesson covered essential topics for effective data preparation in PyTorch:- Data Splitting: Using

RandomSplitto create balanced training, validation, and testing subsets. - Data Cleaning and Preprocessing: Ensuring image quality and consistency through cleaning, resizing, and normalization.

- Dataset Versioning: Tracking data with annotations files or organized folder structures for reproducibility.

- Data Transformations: Customizing training and validation pipelines to include the necessary augmentations.

- Creating DataLoaders: Efficiently batching and feeding data during model training.