AWS Solutions Architect Associate Certification

Designing for Reliability

Turning up Reliability on Data Services

Welcome back! In this article, we explore how to design for reliability in data, machine learning, and AI services on AWS. We’ll cover a range of AWS services and discuss best practices to ensure that your architecture remains resilient and scalable.

Let's dive right in.

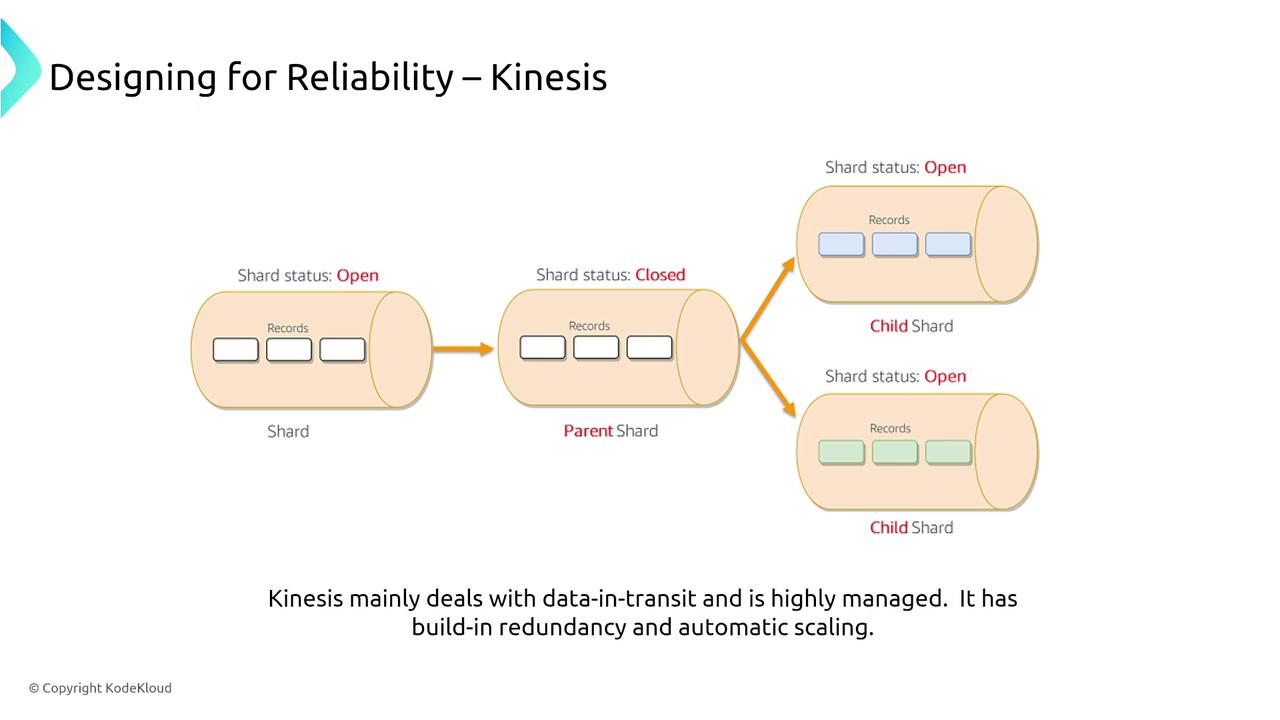

Data Ingestion with Kinesis

Amazon Kinesis Data Streams is a managed streaming service that handles data in transit with built-in redundancy and scalable capacity. It achieves scalability by splitting a stream into multiple shards, each with its own throughput capacity (1 MB per second or 1,000 records per second for writes, 2 MB per second for reads). Each record's partition key determines which shard receives it, so records can be processed independently across shards.
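To make the shard distribution concrete, here is a minimal sketch of how a partition key maps to a shard. Kinesis hashes each partition key with MD5 into a 128-bit integer and delivers the record to the shard whose hash key range contains that value. The helper names (`even_shard_ranges`, `shard_for_key`) and the device keys are hypothetical, and the even split is an assumption about a freshly created stream; real ranges shift after resharding.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def even_shard_ranges(shard_count):
    """Split the 128-bit hash key space into contiguous, near-equal ranges."""
    size = HASH_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * size
        # The last shard absorbs any remainder so the whole space is covered.
        end = HASH_SPACE - 1 if i == shard_count - 1 else start + size - 1
        ranges.append((start, end))
    return ranges


def shard_for_key(partition_key, ranges):
    """Return the index of the shard whose range contains the key's MD5 hash."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hashed = int.from_bytes(digest, "big")
    for index, (start, end) in enumerate(ranges):
        if start <= hashed <= end:
            return index
    raise ValueError("hash fell outside every range")


# Hypothetical four-shard stream and device identifiers used as partition keys.
ranges = even_shard_ranges(4)
placement = {key: shard_for_key(key, ranges)
             for key in ("device-1", "device-2", "device-3")}
```

Because the mapping depends only on the key, every record with the same partition key lands on the same shard, which preserves per-key ordering. A key with very high volume can therefore overload a single shard, so keys with many distinct values spread load more evenly.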

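When your workloads fluctuate, scaling the shard count with utilization keeps the data stream sized to demand. On-demand streams do this for you; in provisioned mode you change the shard count yourself, for example by automating resharding in response to CloudWatch metrics.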
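As a rough sizing aid, the sketch below estimates how many shards a provisioned stream needs for a given peak load, using the per-shard limits of 1 MB per second or 1,000 records per second for writes and 2 MB per second for reads. The function name and the `headroom` margin are assumptions made for illustration, not AWS settings.

```python
import math

# Per-shard limits for a provisioned Kinesis data stream.
WRITE_MB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1000
READ_MB_PER_SHARD = 2.0


def shards_needed(write_mb_s, write_records_s, read_mb_s, headroom=0.25):
    """Estimate the shard count for a peak load plus a safety margin.

    `headroom` is a hypothetical margin (25% by default) added on top of
    the measured peak so that short bursts do not cause throttling.
    """
    factor = 1.0 + headroom
    by_write_bytes = write_mb_s * factor / WRITE_MB_PER_SHARD
    by_write_records = write_records_s * factor / WRITE_RECORDS_PER_SHARD
    by_read_bytes = read_mb_s * factor / READ_MB_PER_SHARD
    # The tightest of the three limits decides the shard count.
    return max(1, math.ceil(max(by_write_bytes, by_write_records, by_read_bytes)))


# Hypothetical peak: 8 MB/s and 5,000 records/s written, 16 MB/s read.
estimate = shards_needed(write_mb_s=8, write_records_s=5000, read_mb_s=16)
```

Running a calculation like this against recent peak metrics is one way to decide when to reshard a provisioned stream.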

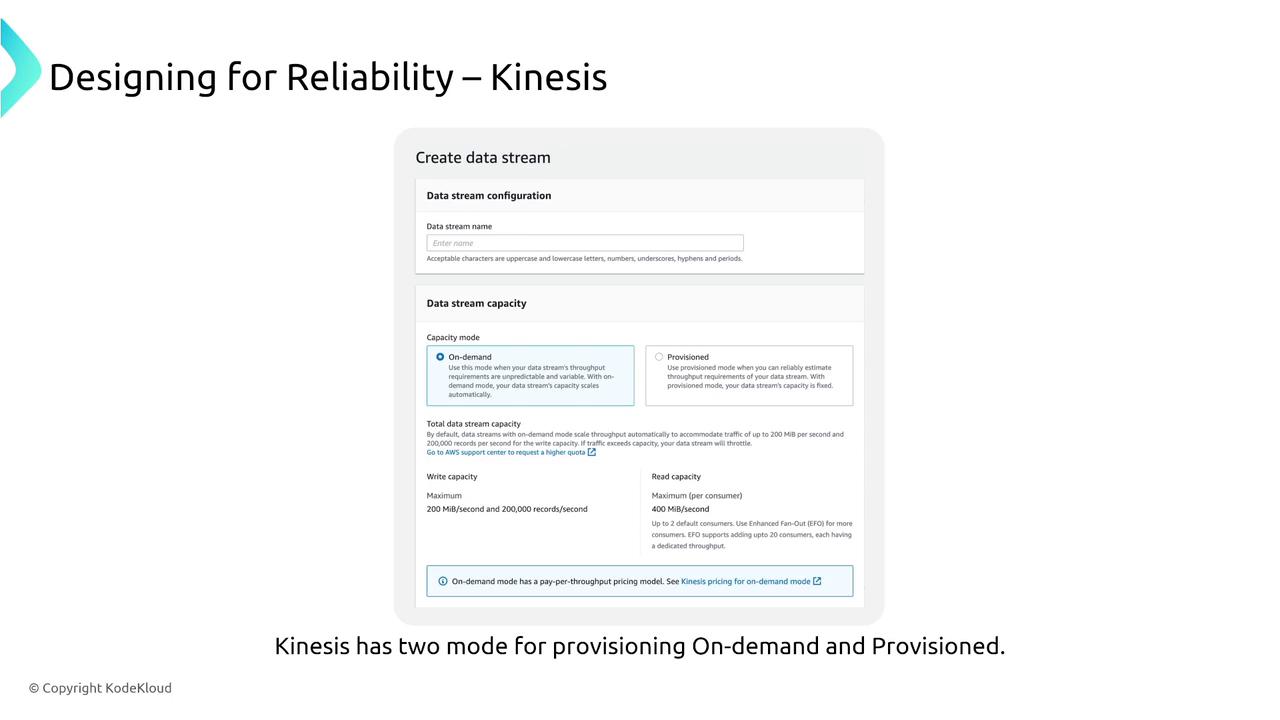

When you create a stream, you choose between provisioned mode, where you specify the number of shards, and on-demand mode, where AWS manages capacity and by default scales up to about 200 MB per second (megabytes, not megabits) or 200,000 records per second of write throughput. If your usage exceeds the available capacity, requests are throttled, and you may need to add shards or request a service quota increase.
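Throttling is usually handled on the client side by retrying with exponential backoff and jitter. The following is a minimal sketch of that pattern; `ThrottledError`, `call_with_backoff`, and `flaky_put` are hypothetical names that stand in for a real SDK exception and a real write call, and the delay values are arbitrary.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for a throttling error from a service call."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.1,
                      max_delay=2.0, sleep=time.sleep):
    """Retry `operation` on ThrottledError with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the error to the caller.
            # Double the ceiling each attempt, then pick a random delay below it.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))


# Simulated write that is throttled twice before succeeding.
attempts = {"count": 0}


def flaky_put():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ThrottledError("write capacity exceeded")
    return "ok"


result = call_with_backoff(flaky_put, sleep=lambda seconds: None)
```

The random jitter spreads retries from many producers over time, so they do not all hit the stream again at the same instant.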

On-demand mode is ideal for companies experiencing seasonal spikes because AWS manages scaling automatically. Additionally, Kinesis allows you to configure data retention periods ranging from 24 hours to 365 days to meet compliance or processing requirements.

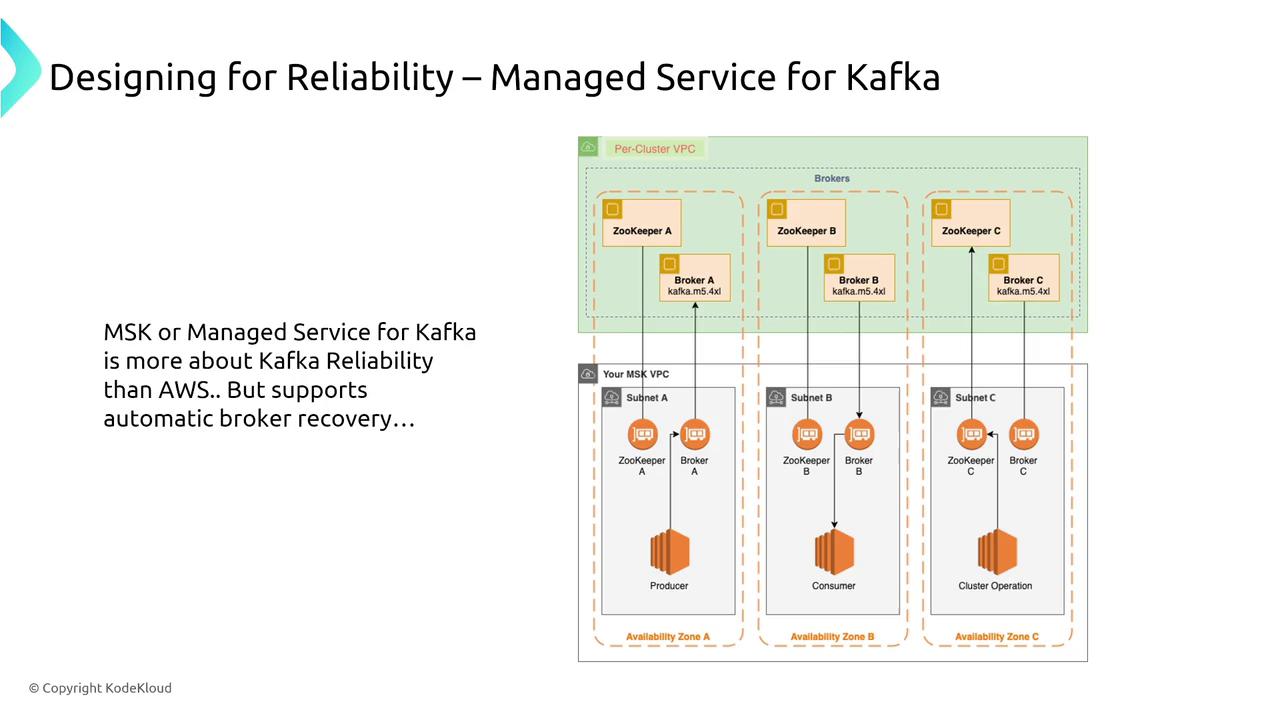

Managed Service for Kafka (MSK)

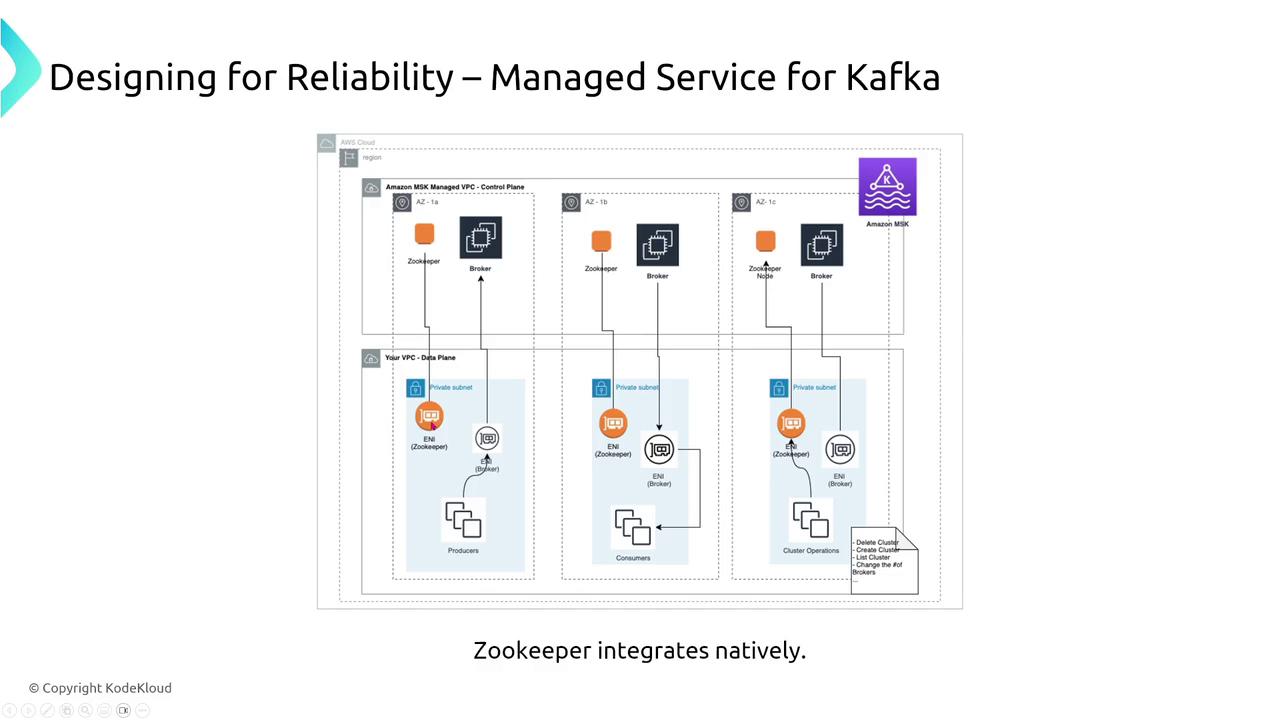

Amazon MSK provides a managed Apache Kafka experience with added insights that simplify cluster management. A typical MSK cluster works as follows:

- Producers and consumers connect directly to the broker nodes in a cluster.

- Brokers, each defined by its machine size, handle portions of the data load and replicate topic partitions among themselves.

- ZooKeeper nodes (or KRaft controllers on newer Kafka versions) hold cluster metadata; client data does not flow through them.

ZooKeeper coordinates cluster state such as broker membership and controller election, while MSK itself monitors the brokers and repairs them. MSK also features:

- Auto-detection and replacement of failed brokers (auto-healing).

- Cluster-to-cluster asynchronous replication for cross-region data synchronization.

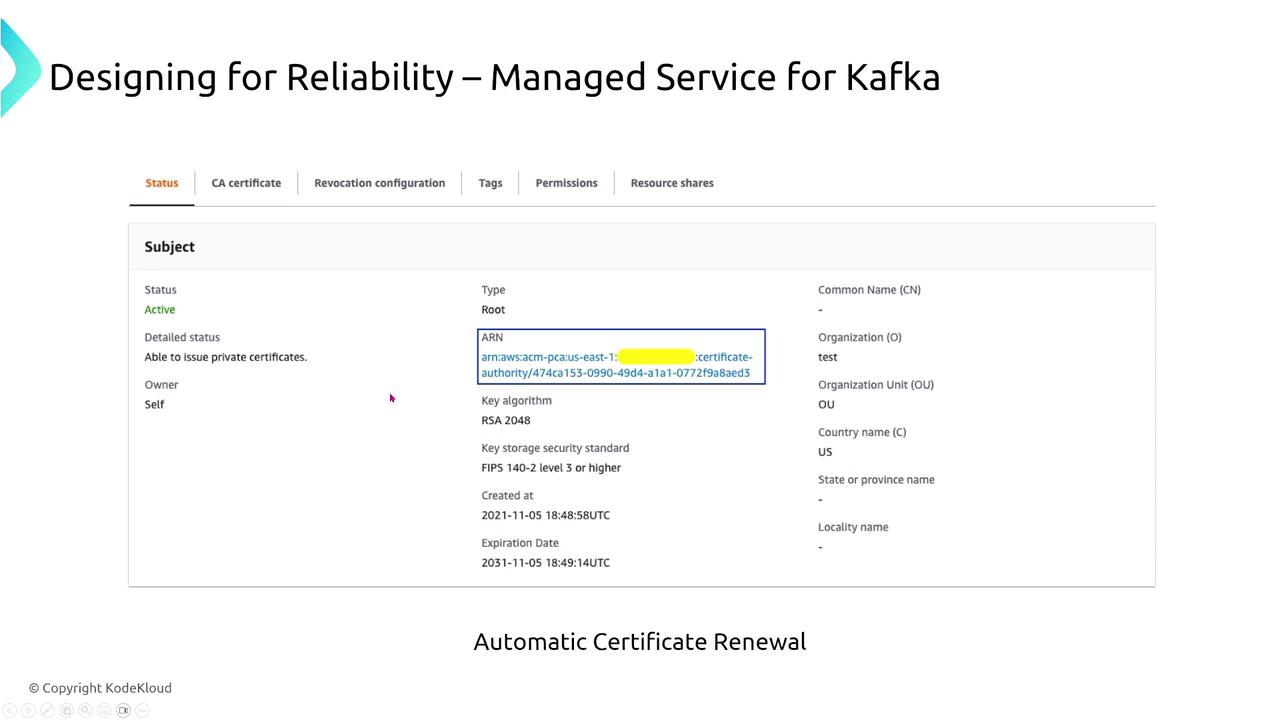

For high availability, an MSK cluster spreads its brokers across multiple Availability Zones. To cover a second region, you run a separate cluster there and replicate between the two. Tasks like certificate renewal are automated to maintain secure intra-cluster communications.
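MSK expects the broker count to be a multiple of the number of Availability Zones, so that brokers are spread evenly. The sketch below checks a planned layout and shows how many brokers remain if one zone fails. The function names and zone names are hypothetical, and the code only does arithmetic; it makes no AWS calls.

```python
def brokers_per_az(total_brokers, availability_zones):
    """Return a mapping of zone -> broker count for an evenly spread cluster."""
    if not availability_zones:
        raise ValueError("at least one Availability Zone is required")
    az_count = len(availability_zones)
    if total_brokers <= 0 or total_brokers % az_count != 0:
        raise ValueError(
            "broker count must be a positive multiple of the number of zones")
    per_az = total_brokers // az_count
    return {az: per_az for az in availability_zones}


def brokers_left_after_az_loss(layout, failed_az):
    """Count the brokers that remain when one zone becomes unavailable."""
    return sum(count for az, count in layout.items() if az != failed_az)


# Hypothetical six-broker cluster across three zones.
layout = brokers_per_az(6, ["us-east-1a", "us-east-1b", "us-east-1c"])
survivors = brokers_left_after_az_loss(layout, "us-east-1b")
```

With three zones, losing one still leaves two thirds of the brokers, which is why a topic replication factor of three pairs well with a three-zone layout.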

Separating metadata coordination (ZooKeeper) from data handling (brokers) means that the failure of a single broker does not take the cluster's coordination down with it.

Data Transformation with Glue and EMR

AWS Glue

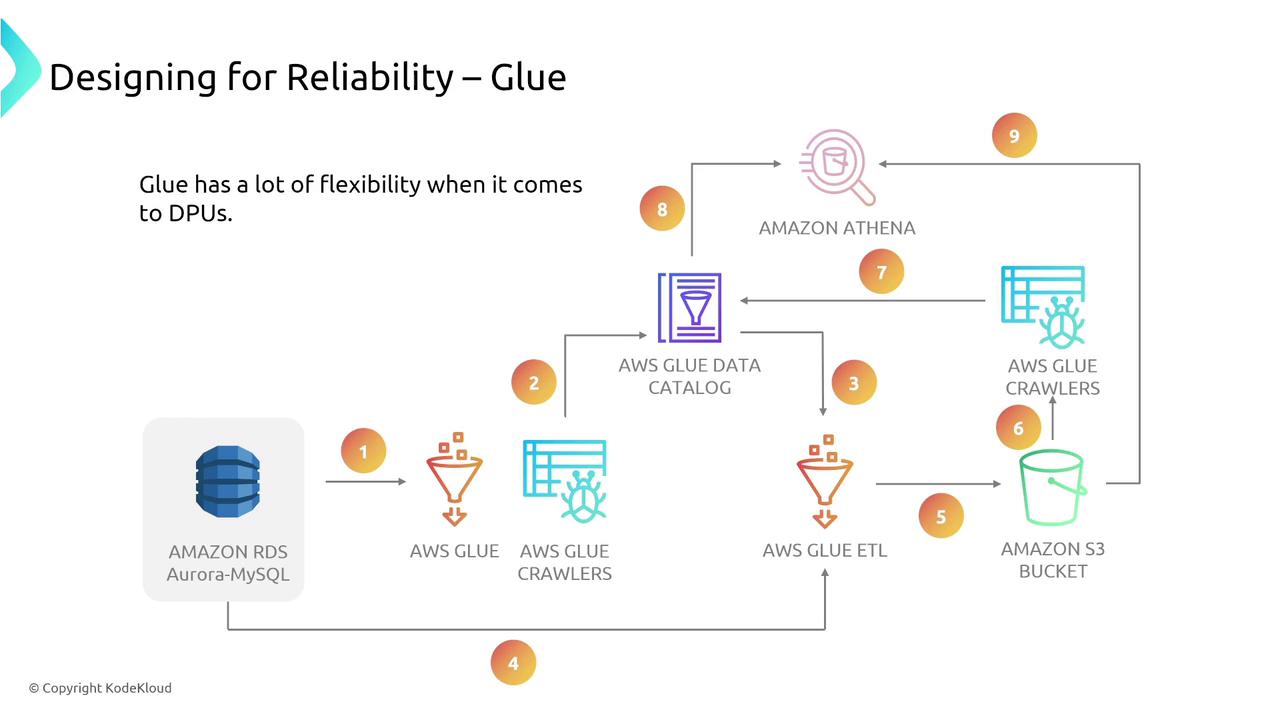

AWS Glue is a managed ETL (Extract, Transform, Load) service based on open-source Apache Spark, with jobs typically written in PySpark or Scala. Its crawlers scan your data sources and populate the Glue Data Catalog, which ETL jobs then read from. Glue integrates with S3, Athena, and QuickSight for processing and visualization.

In terms of reliability, AWS Glue:

- Supports automated retries on failed jobs.

- Scales compute with Data Processing Units (DPUs) according to job requirements.

You pay per DPU-hour consumed by each job, so it's important to size the DPU allocation to your workload.
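The billing arithmetic can be sketched as DPUs multiplied by runtime in hours multiplied by the price per DPU-hour. In the example below, the default price of 0.44 USD per DPU-hour and the 60-second minimum billing duration are assumptions; check the current Glue pricing page for your region and Glue version. The function name is hypothetical.

```python
def glue_job_cost(dpus, runtime_seconds, price_per_dpu_hour=0.44,
                  minimum_seconds=60):
    """Estimate the cost of one Glue job run in USD.

    `price_per_dpu_hour` and `minimum_seconds` are assumed values, not
    figures taken from this article.
    """
    billed_seconds = max(runtime_seconds, minimum_seconds)
    dpu_hours = dpus * billed_seconds / 3600
    return round(dpu_hours * price_per_dpu_hour, 4)


# Hypothetical job: 10 DPUs running for 30 minutes.
half_hour_run = glue_job_cost(dpus=10, runtime_seconds=1800)

# The same job with twice the DPUs costs the same only if it finishes in
# half the time, which is the trade-off to test when tuning DPU counts.
doubled_dpus = glue_job_cost(dpus=20, runtime_seconds=900)
```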

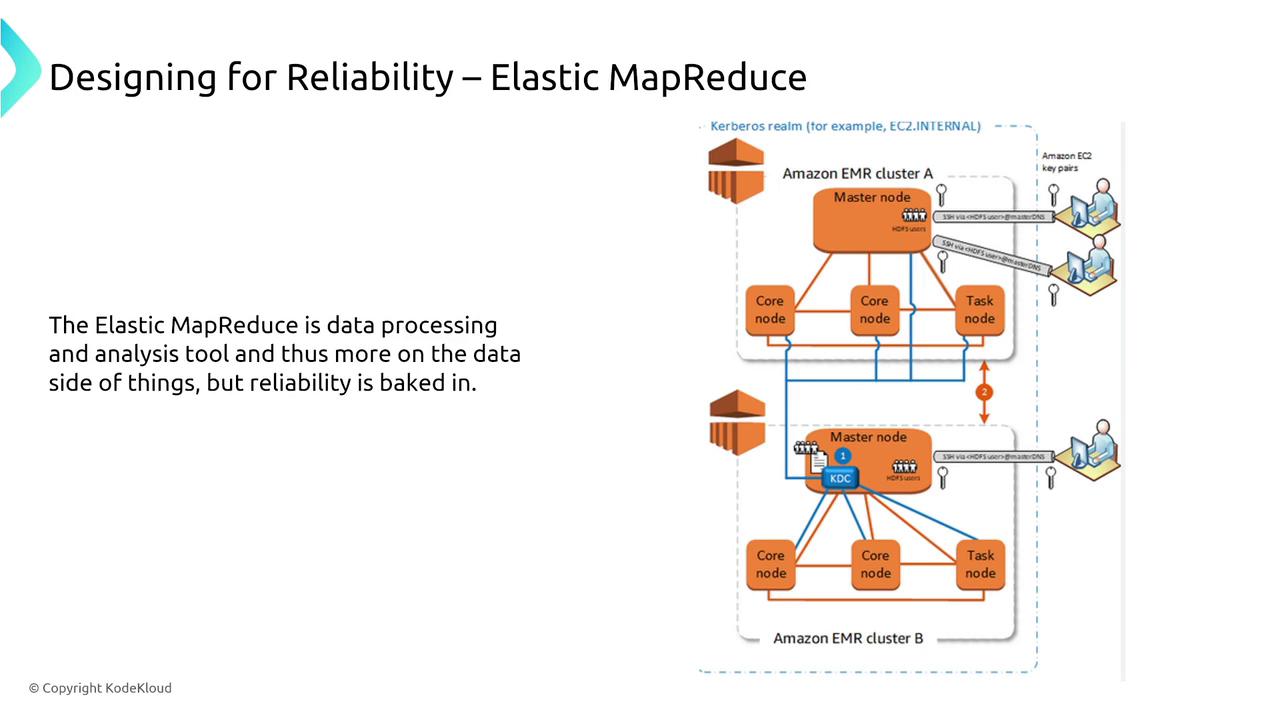

Amazon EMR

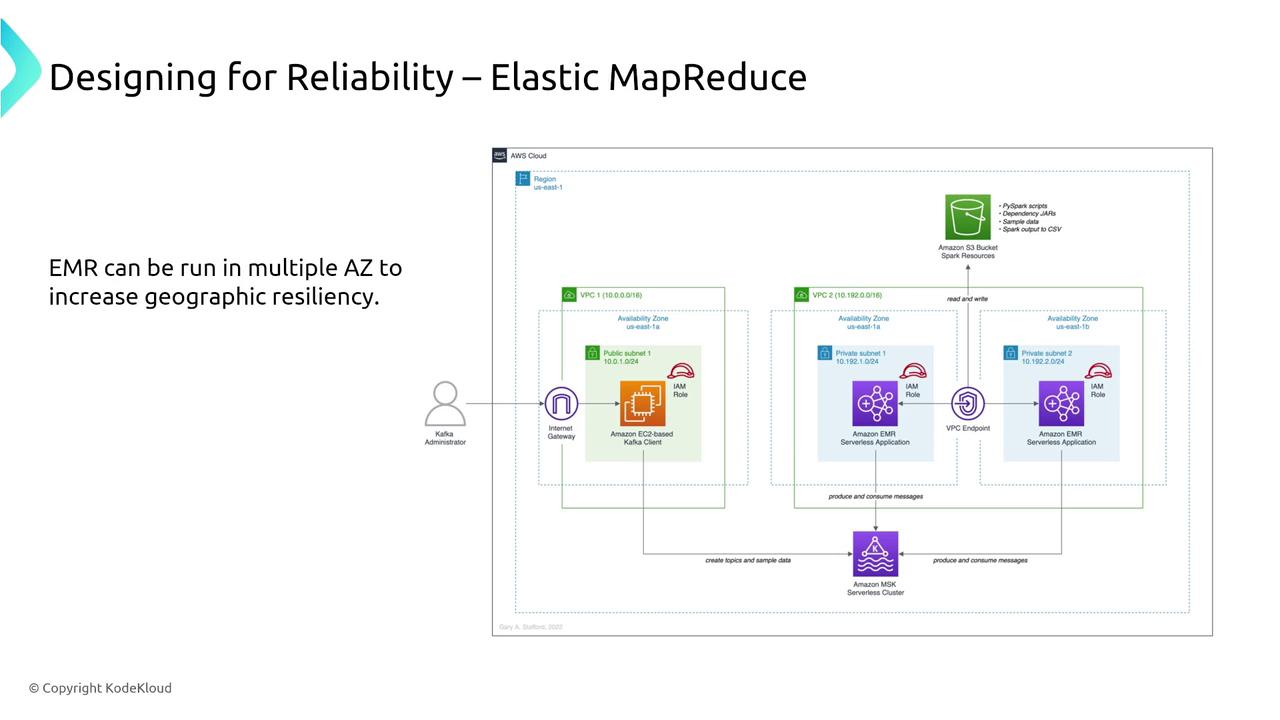

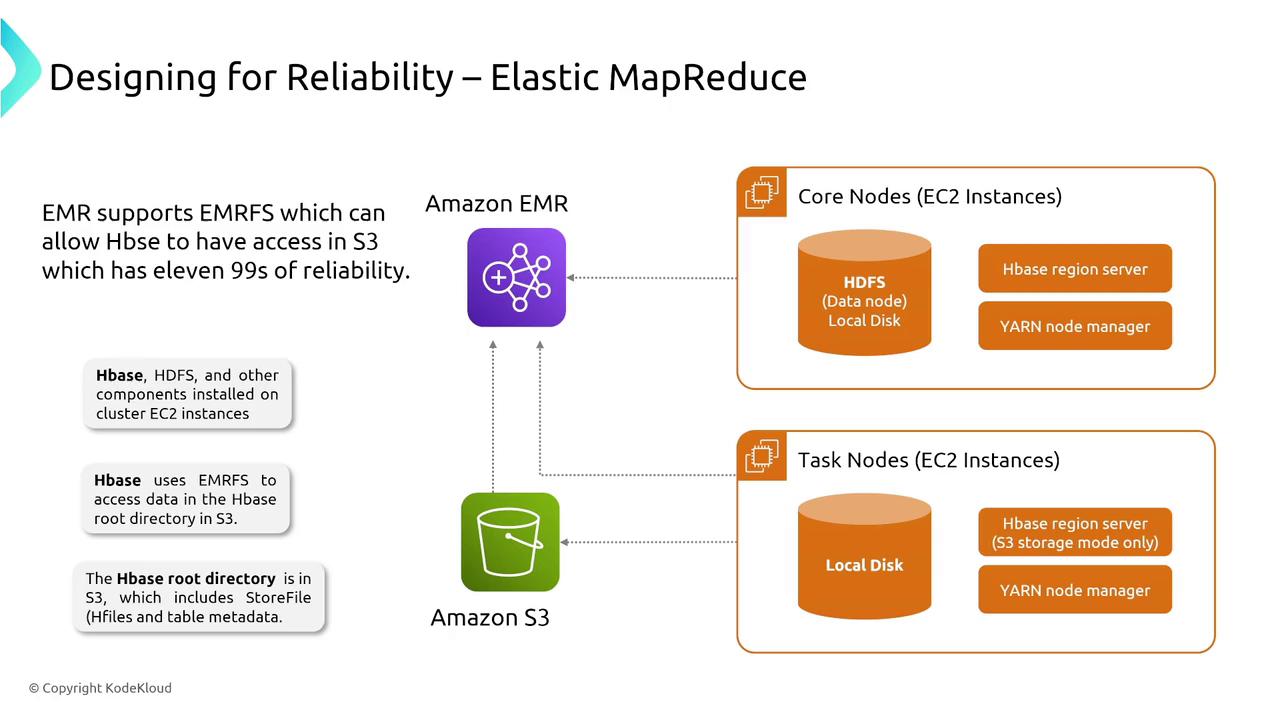

Amazon EMR is a managed cluster service that supports frameworks such as Hadoop, MapReduce, and Spark. Reliability is enhanced through:

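- Node redundancy by deploying multiple primary nodes (three instead of one).

- Resource management using YARN.

- Availability Zone selection with instance fleets, which lets EMR launch the cluster in whichever of several zones has capacity (a running EMR on EC2 cluster stays in a single zone).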
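As an illustration of the primary-node redundancy listed above, the sketch below builds an instance group list with three primary nodes. The dictionaries follow the shape of the `InstanceGroups` entries accepted by boto3's `run_job_flow`, but the block only constructs data and makes no AWS call. The function name, instance types, and counts are placeholders chosen for the example.

```python
def emr_instance_groups(primary_count=3, core_count=2,
                        primary_type="m5.xlarge", core_type="m5.xlarge"):
    """Build an InstanceGroups list for an EMR cluster request."""
    # EMR runs with either a single primary node or three for high availability.
    if primary_count not in (1, 3):
        raise ValueError("EMR clusters run with either 1 or 3 primary nodes")
    # This sketch assumes at least one core node for HDFS and task execution.
    if core_count < 1:
        raise ValueError("this sketch expects at least one core node")
    return [
        {
            "Name": "Primary",
            "InstanceRole": "MASTER",  # API name for the primary node role
            "InstanceType": primary_type,
            "InstanceCount": primary_count,
        },
        {
            "Name": "Core",
            "InstanceRole": "CORE",
            "InstanceType": core_type,
            "InstanceCount": core_count,
        },
    ]


groups = emr_instance_groups()
```

With three primary nodes, the cluster can keep running if one of them fails, at the cost of two additional instances.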

EMR also provides EMRFS for direct S3 access, so data can outlive any single cluster, and integrates with CloudTrail, CloudWatch, and logging tools to provide added visibility and resilience.

Monitoring your clusters is simple with EMR’s integration with CloudWatch, enabling you to review logs and track health across core or task nodes.

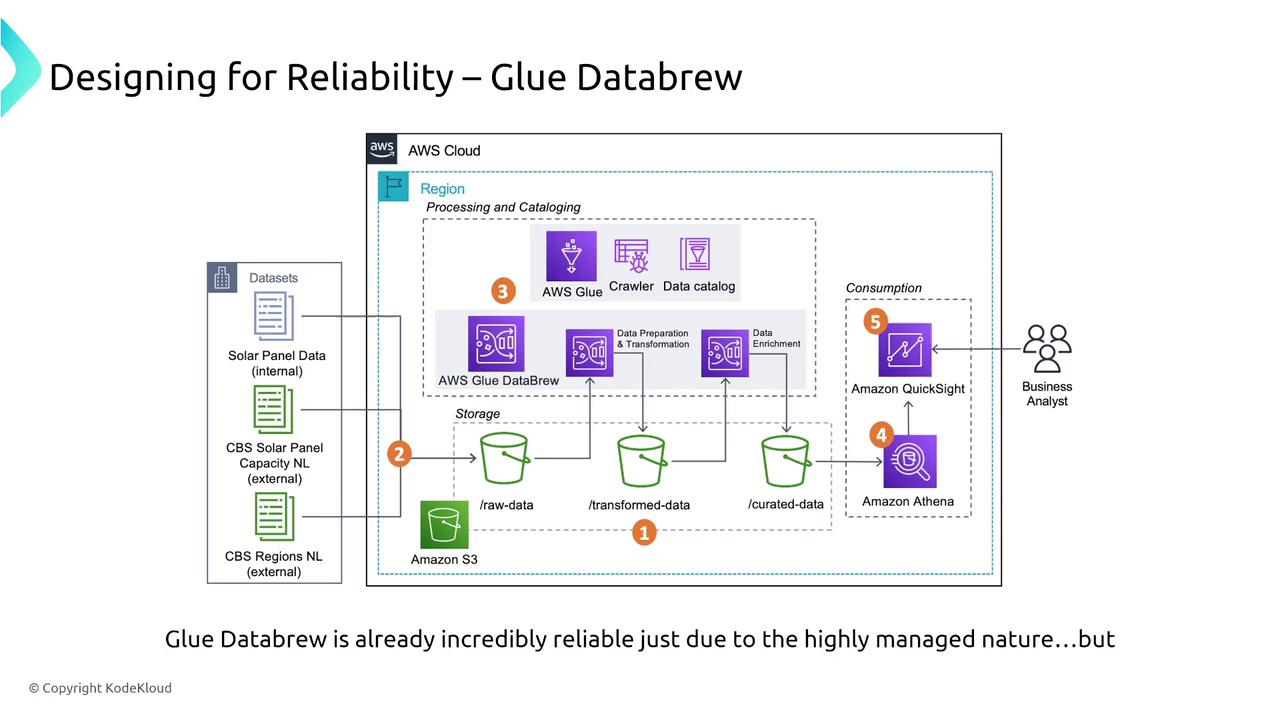

Data Preparation with Glue DataBrew

AWS Glue DataBrew is a fully managed service for data preprocessing, enrichment, and transformation. It streamlines tasks such as filling missing values and computing averages. DataBrew supports automatic job retries and otherwise exposes few configuration options, which keeps the workflow simple.
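To show what a transformation like "fill missing values with the average" does to a column, here is a plain-Python sketch. It is not DataBrew's API; DataBrew applies this kind of step through its recipes, and the function name and sample values are made up for illustration.

```python
from statistics import mean


def fill_missing_with_mean(values):
    """Replace None entries with the mean of the values that are present."""
    present = [value for value in values if value is not None]
    if not present:
        raise ValueError("cannot compute a mean from an empty column")
    average = mean(present)
    return [average if value is None else value for value in values]


# Hypothetical column with two gaps.
column = [10, None, 20, None, 30]
cleaned = fill_missing_with_mean(column)
```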

Data Storage and Presentation

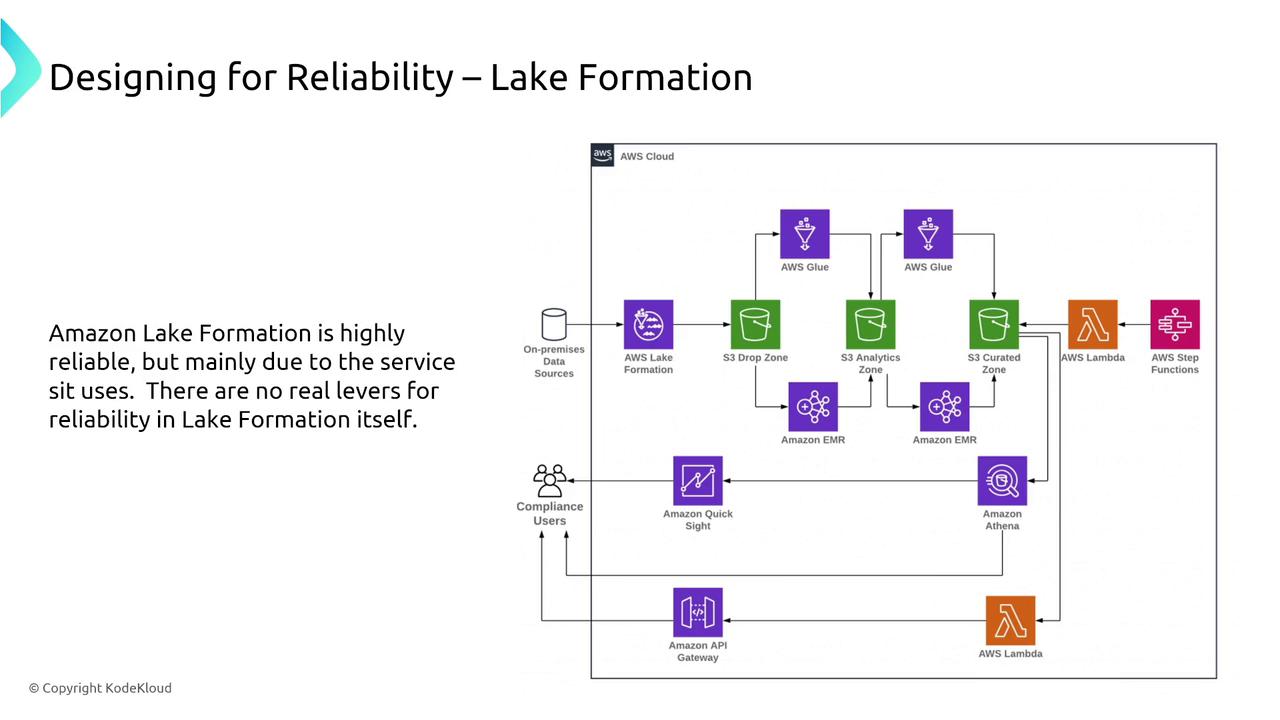

Lake Formation

AWS Lake Formation simplifies setting up a secure data lake using S3, Glue, EMR, and other services under one management pane. However, its reliability largely depends on the underlying services like S3.

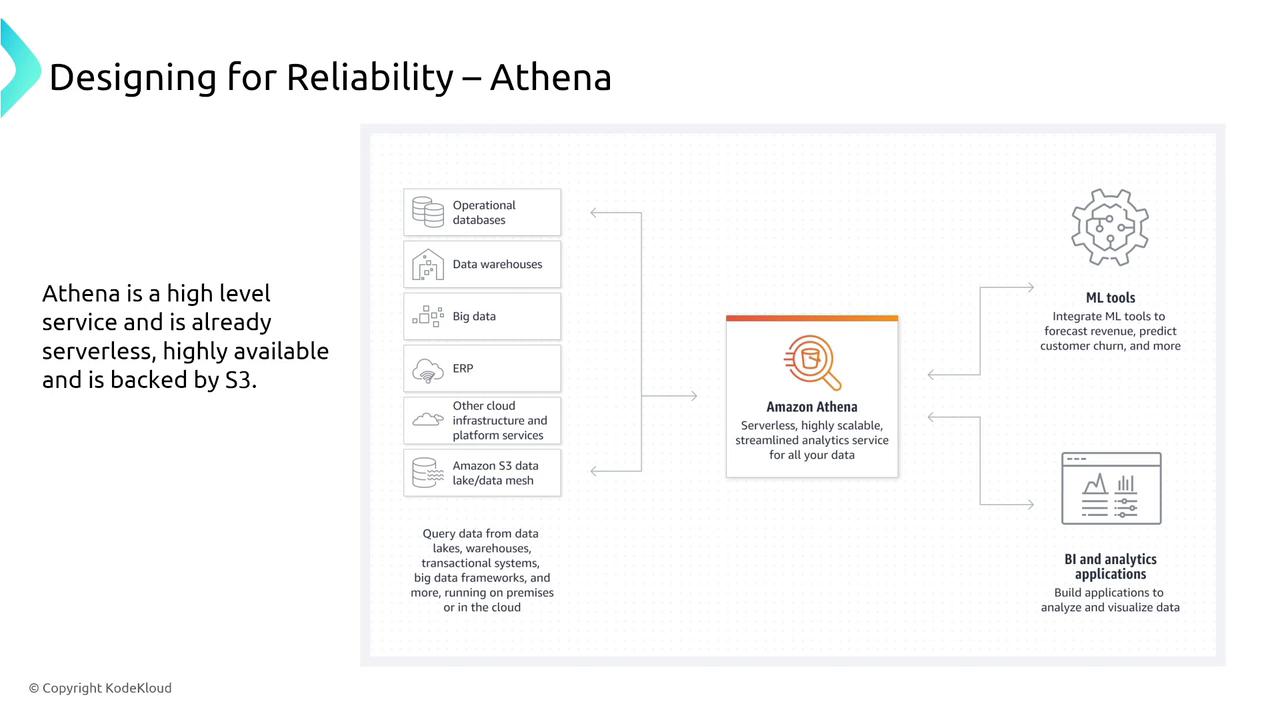

Amazon Athena

Amazon Athena is a serverless query service that operates on data stored in S3 and other sources. It provides resilient and scalable query capabilities with minimal configuration—making it ideal for querying large datasets reliably.

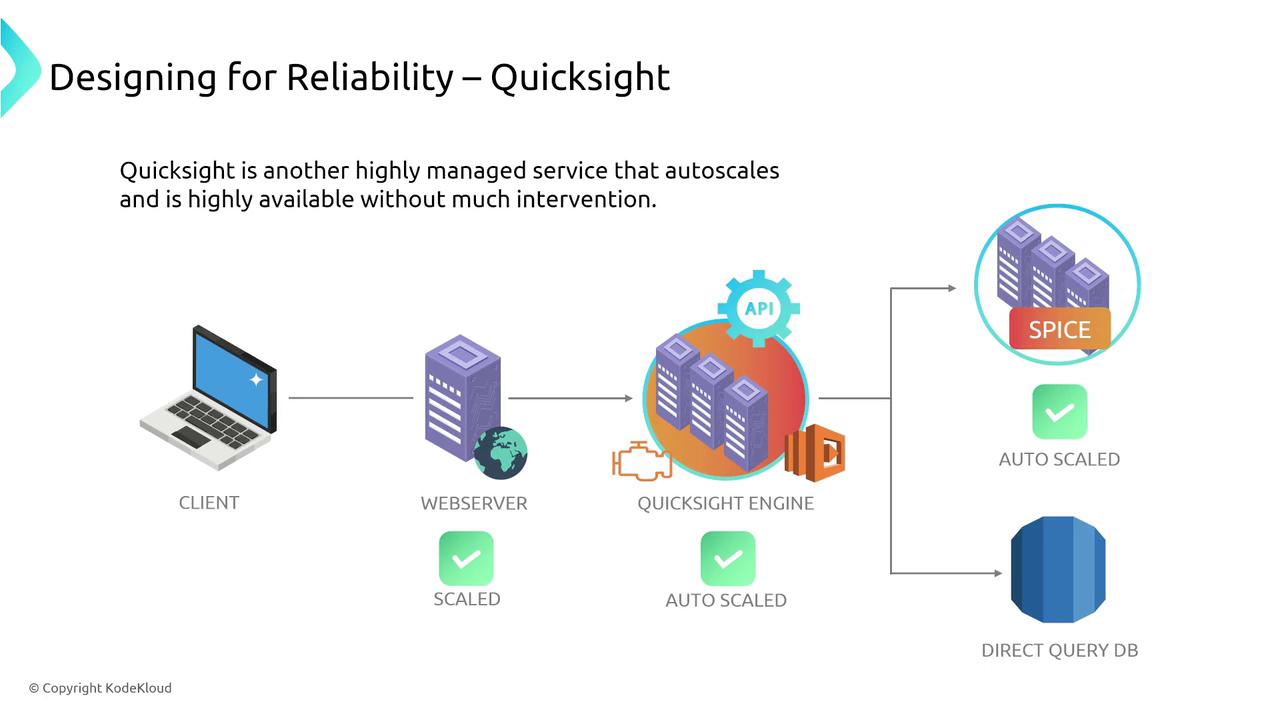

Similarly, Amazon QuickSight is an inherently scalable dashboard service that requires minimal setup for robust analytics.

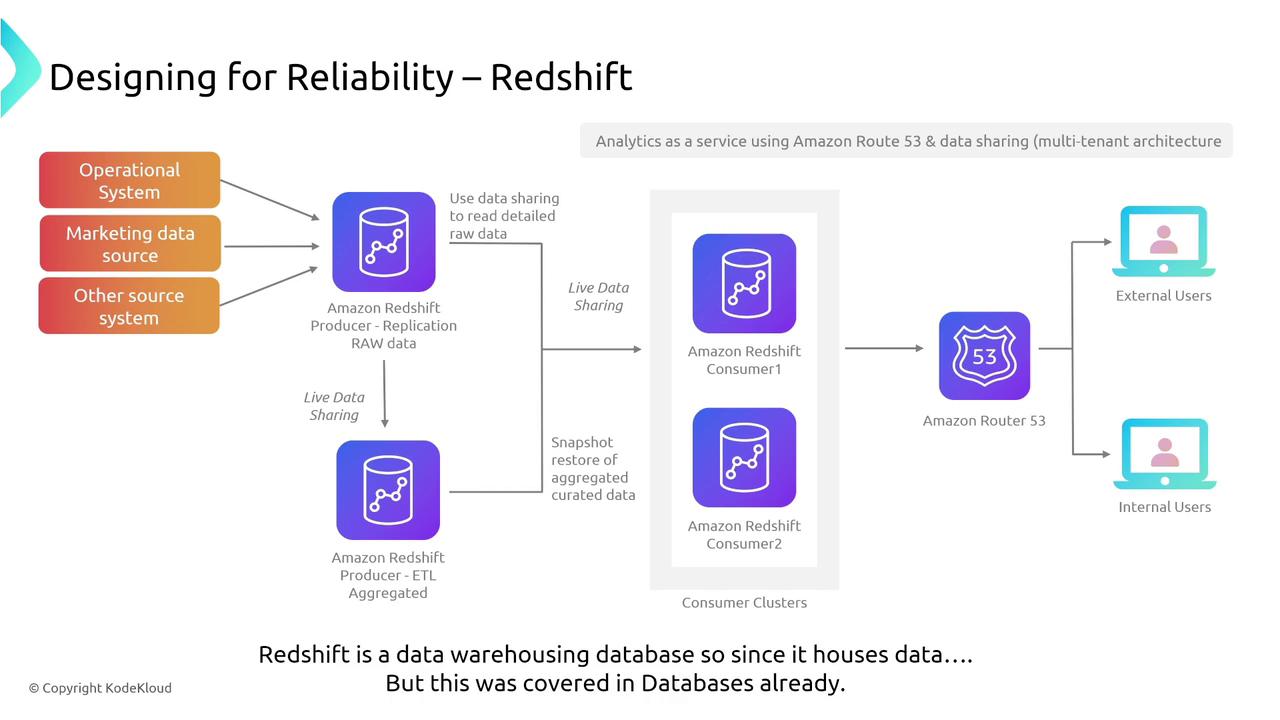

Amazon Redshift, AWS's managed data warehouse, is another reliable data store that plays an essential role in reporting and analytics.

Machine Learning and AI Services

SageMaker

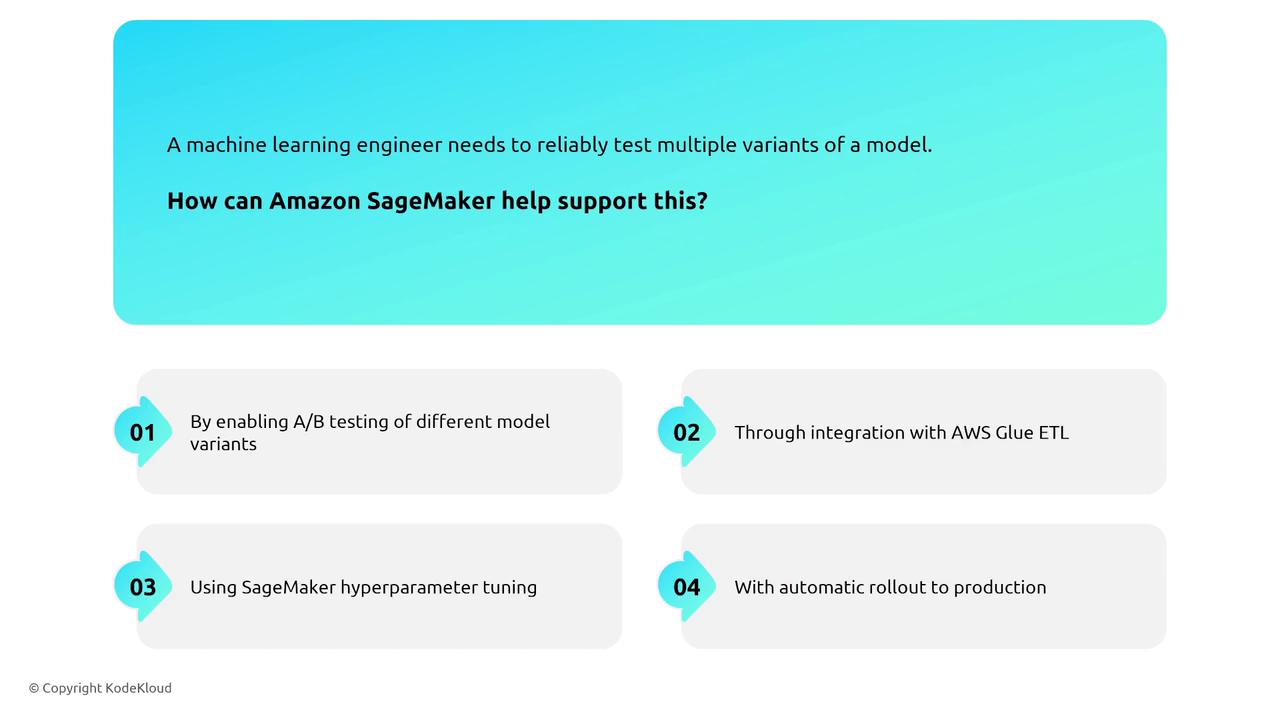

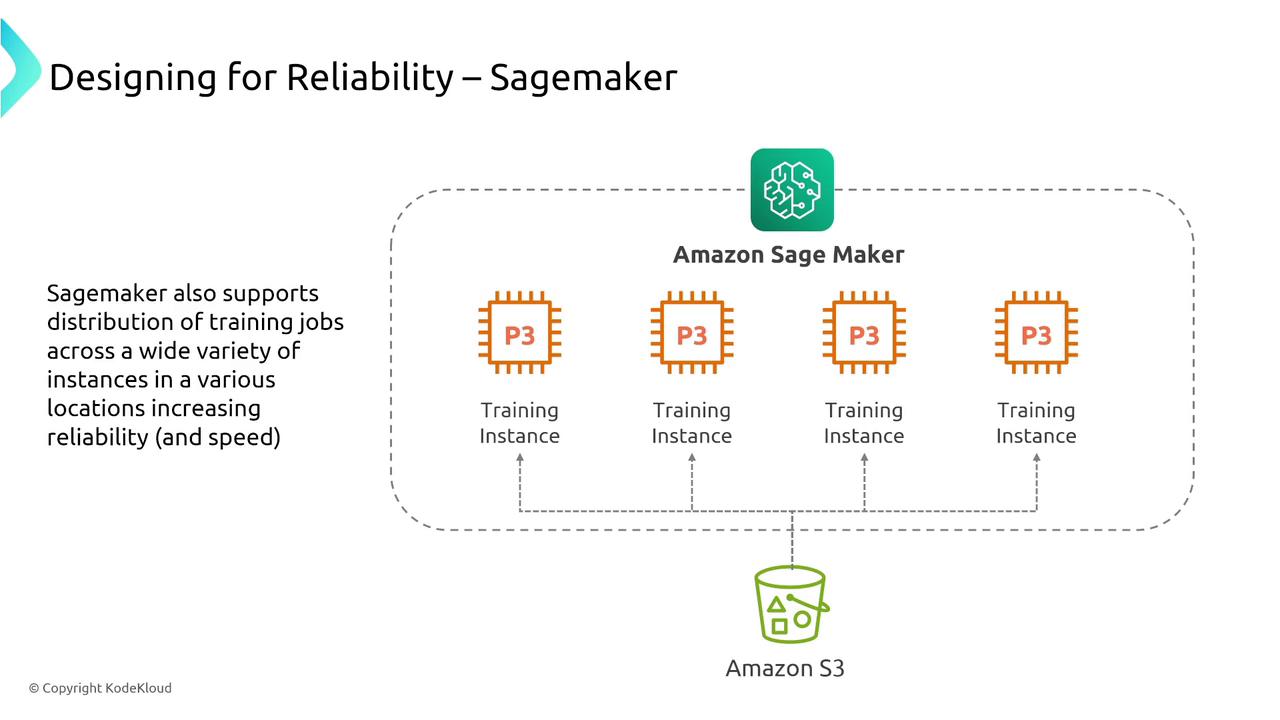

Amazon SageMaker supports model training, hosting, and testing with instance-based infrastructure. To enhance reliability, it implements strategies such as canary testing, load balancing, and multi-instance deployment. SageMaker also supports A/B testing for model evaluation and distributed training across multiple instances.
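A/B testing on a SageMaker endpoint works by assigning each production variant a weight; a variant's share of traffic is its weight divided by the sum of all weights. The sketch below reproduces that arithmetic and simulates the routing locally. The variant names, weights, and function names are hypothetical, and no SageMaker API is called.

```python
import random


def traffic_shares(variant_weights):
    """Convert variant weights into fractions of total traffic."""
    total = sum(variant_weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: weight / total for name, weight in variant_weights.items()}


def pick_variant(variant_weights, rng):
    """Choose one variant for a request, in proportion to its weight."""
    names = list(variant_weights)
    weights = [variant_weights[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


# Hypothetical canary-style split: 90% to the current model, 10% to the candidate.
weights = {"model-current": 9, "model-candidate": 1}
shares = traffic_shares(weights)

rng = random.Random(0)  # Seeded so the simulation is repeatable.
sample = [pick_variant(weights, rng) for _ in range(10000)]
candidate_fraction = sample.count("model-candidate") / len(sample)
```

Starting the candidate at a small weight and raising it as metrics hold up is the canary pattern the text mentions; setting both weights equal gives a 50/50 A/B test.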

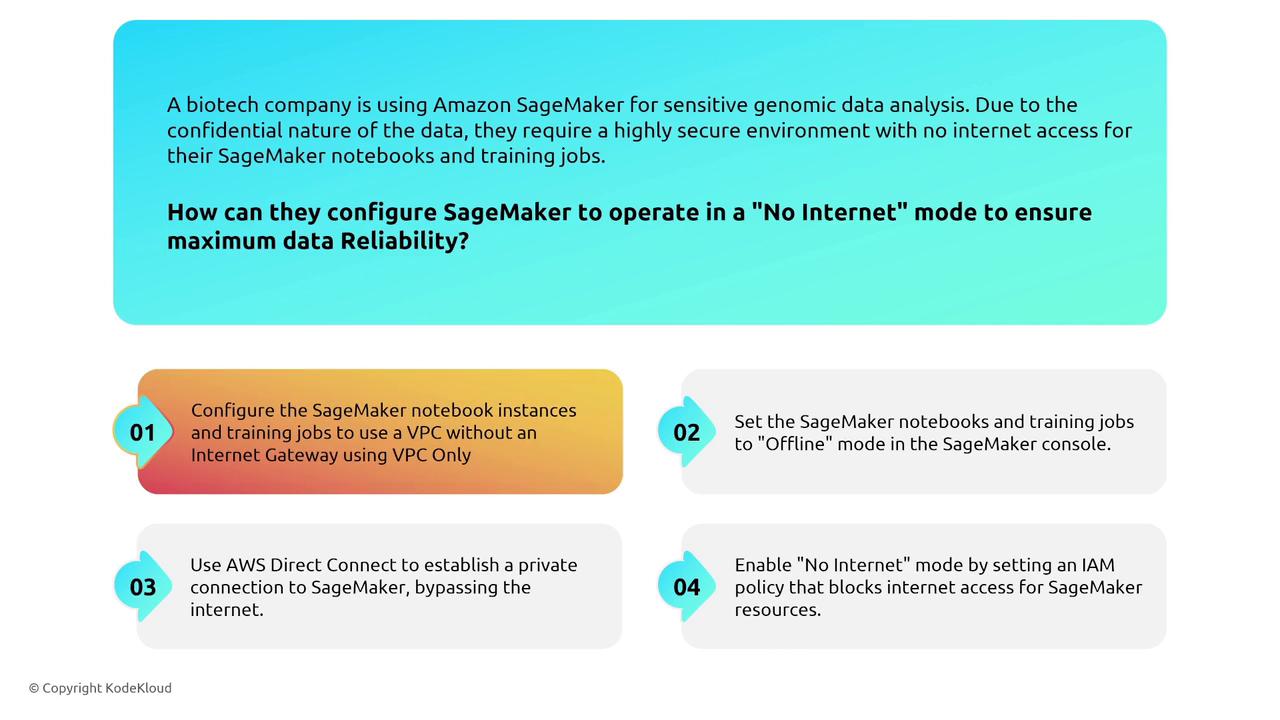

Monitoring is crucial: use CloudWatch to track SageMaker endpoint and training metrics, and CloudTrail to audit API activity. You can also run notebook instances without direct internet access by placing them in a VPC that has no internet gateway, which helps meet strict security requirements.

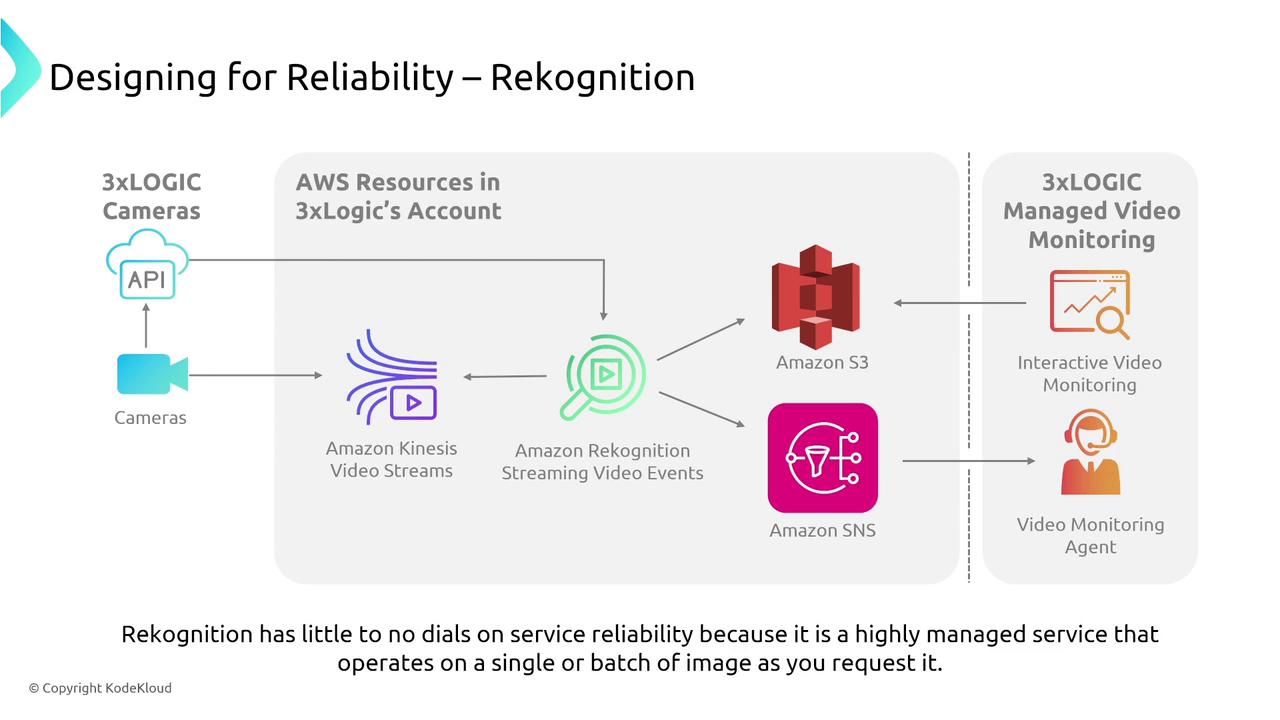

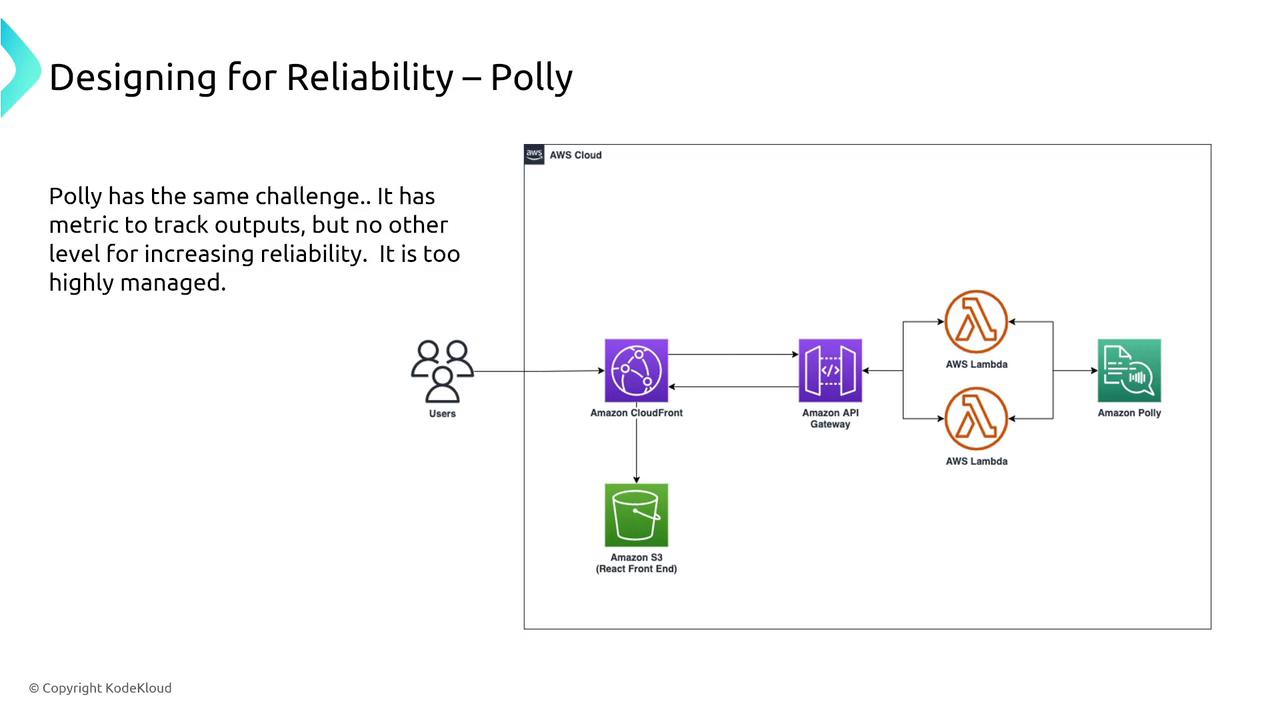

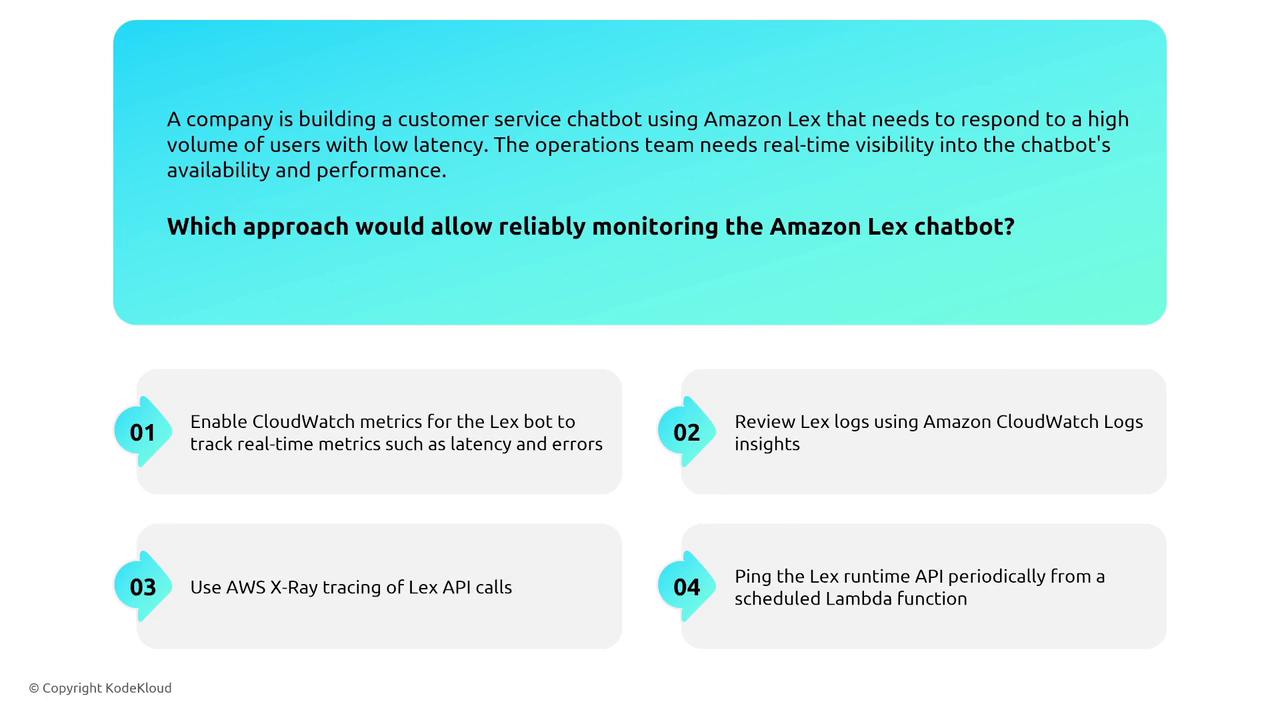

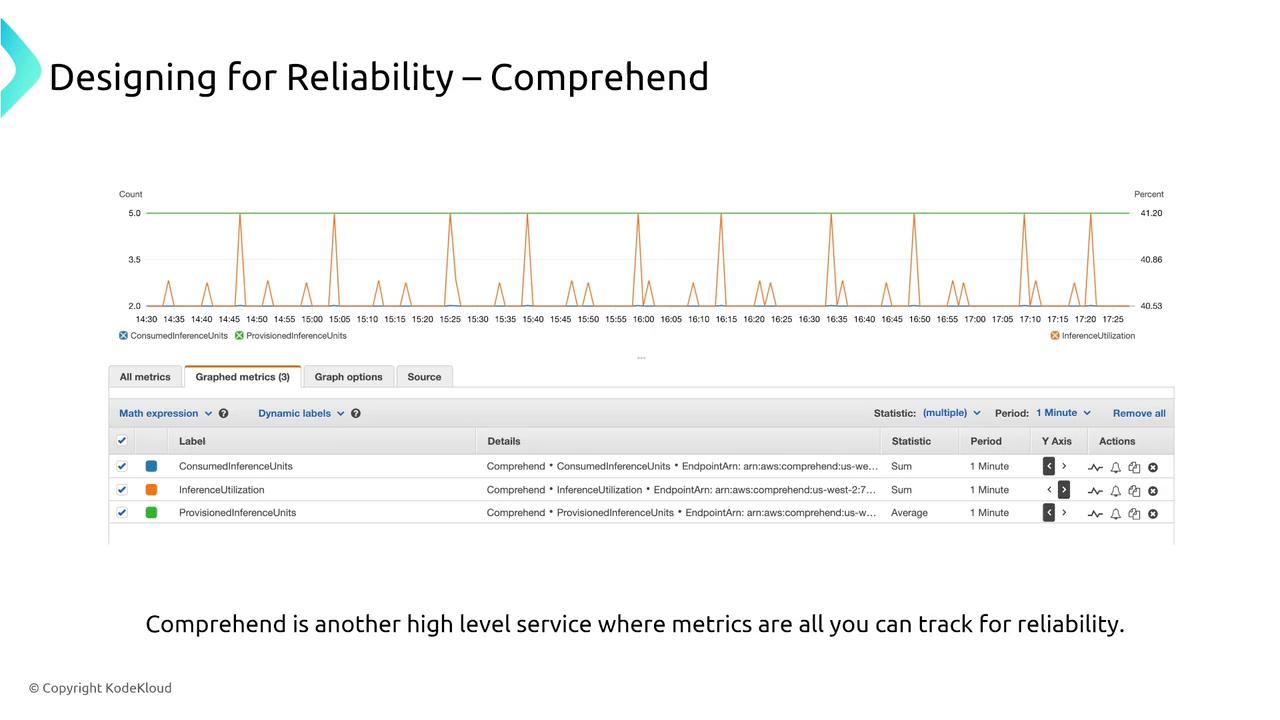

Highly Managed Services: Rekognition, Polly, Lex, and Comprehend

Highly managed AWS services like Amazon Rekognition (image and video analysis), Polly (text-to-speech), Lex (chatbots), and Comprehend (NLP) are designed to be inherently reliable. They handle auto scaling, retries, and failover internally. For monitoring:

- Use CloudWatch metrics and logs to track performance and errors.

- Enable AWS X-Ray tracing for deeper insight where needed.

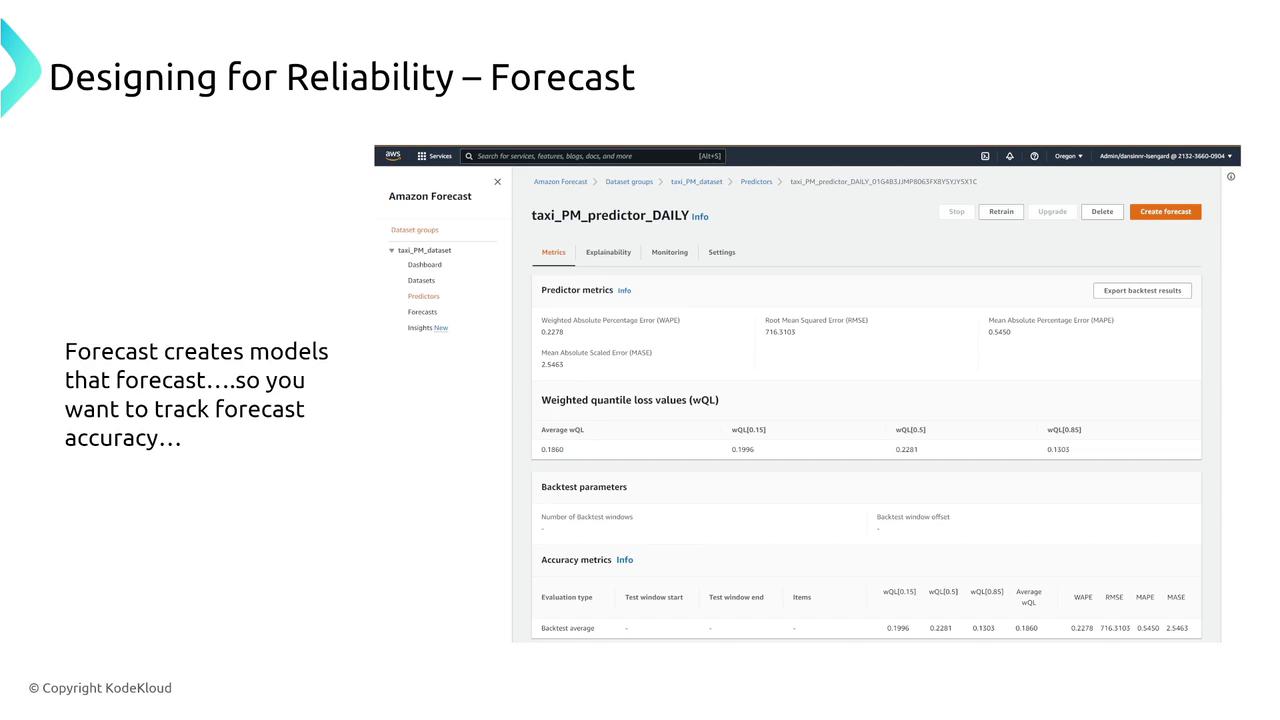

For services like Comprehend and Amazon Forecast, CloudWatch (metrics and alarms) and CloudTrail (API audit logs) remain your primary monitoring tools.

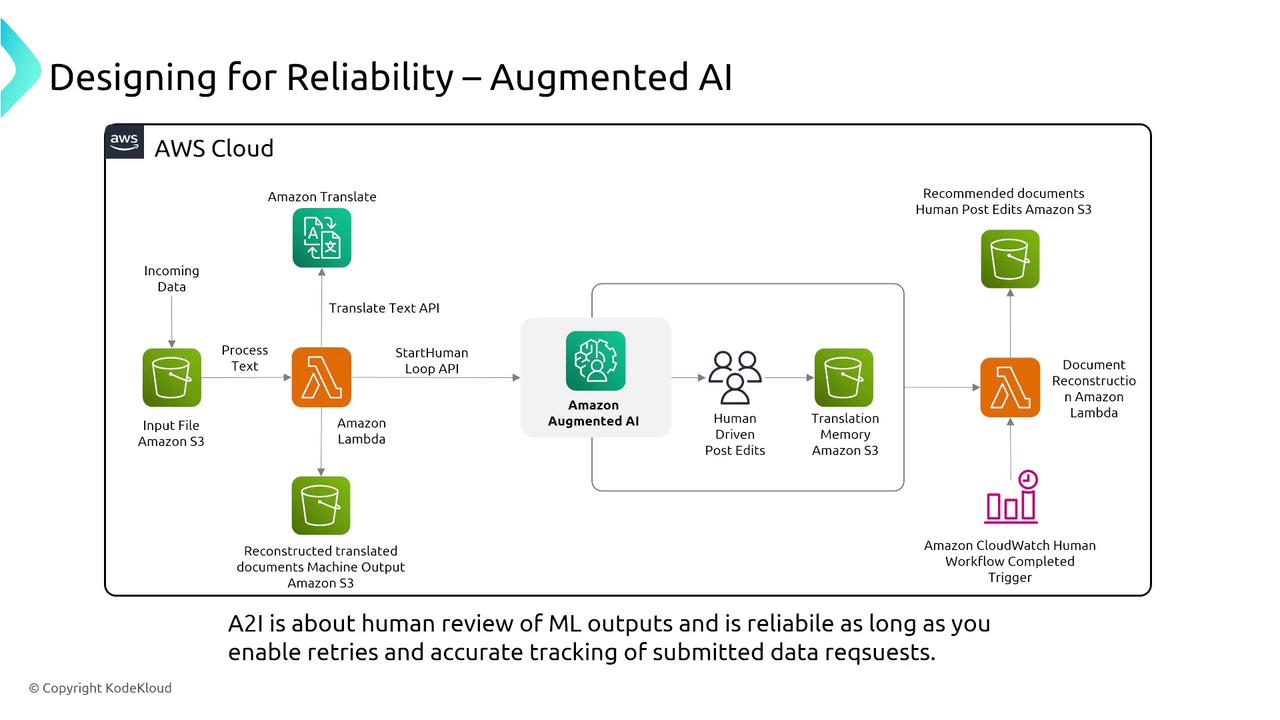

Augmented AI and Fraud Detection

Augmented AI (A2I) introduces a human review stage into machine learning outputs, adding an extra layer of reliability. This service integrates human insights with automated predictions for improved outcomes.
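The core idea of a human review stage can be sketched as confidence-based routing: predictions above a threshold are accepted automatically and the rest go to reviewers. The code below is a local illustration only and does not use the A2I API. The `Prediction` class, the 0.80 threshold, and the sample data are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # Model confidence between 0.0 and 1.0


def split_for_review(predictions, threshold=0.80):
    """Separate predictions into auto-accepted and human-review lists."""
    accepted, needs_review = [], []
    for prediction in predictions:
        if prediction.confidence >= threshold:
            accepted.append(prediction)
        else:
            needs_review.append(prediction)
    return accepted, needs_review


# Hypothetical model outputs for three documents.
batch = [
    Prediction("doc-1", "invoice", 0.97),
    Prediction("doc-2", "receipt", 0.62),
    Prediction("doc-3", "invoice", 0.80),
]
accepted, needs_review = split_for_review(batch)
```

Lowering the threshold sends fewer items to reviewers at the risk of accepting more wrong predictions, so the value is usually tuned against the cost of a missed error.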

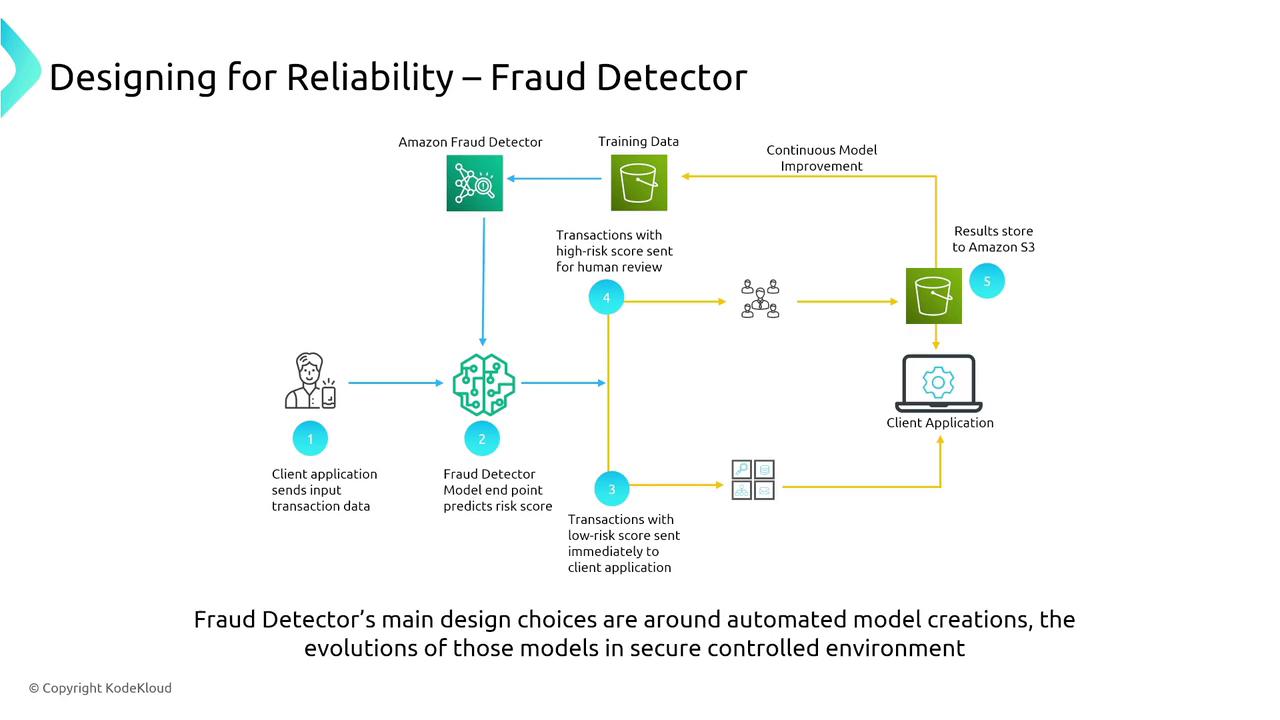

Amazon Fraud Detector identifies anomalous transactions using pre-built models and continuous improvement mechanisms.

Language Services: Transcribe, Translate, and Textract

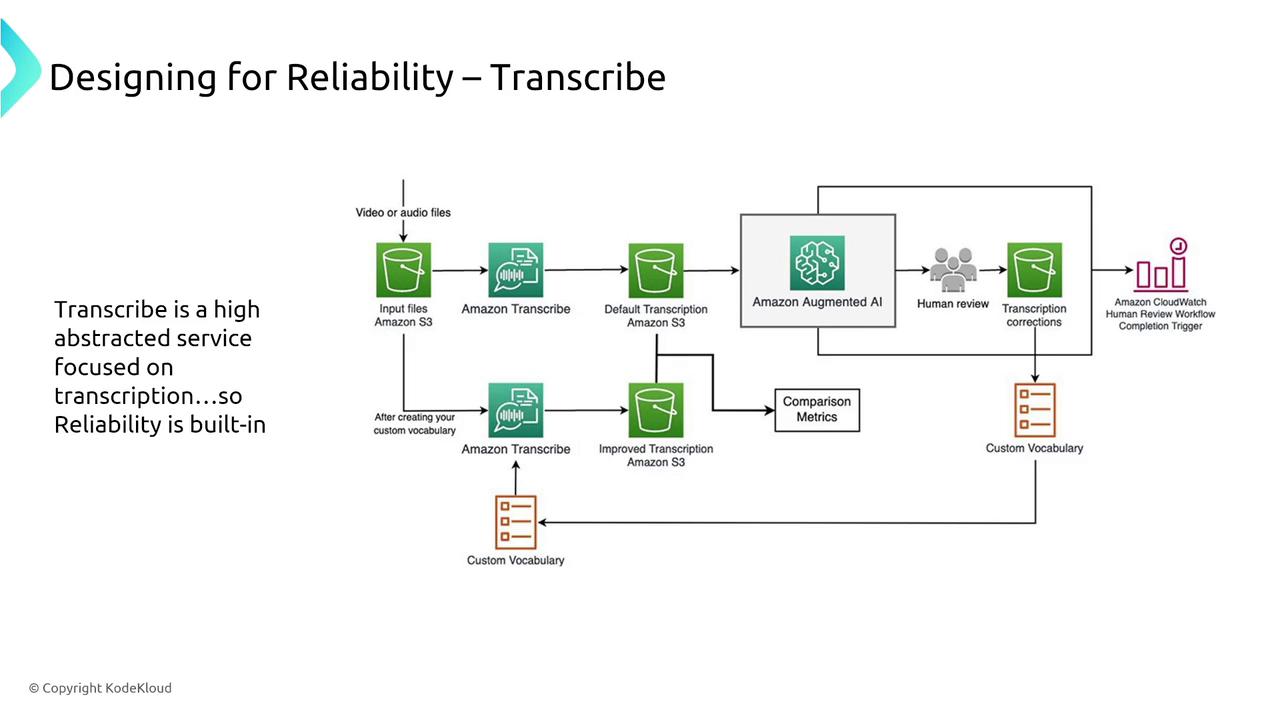

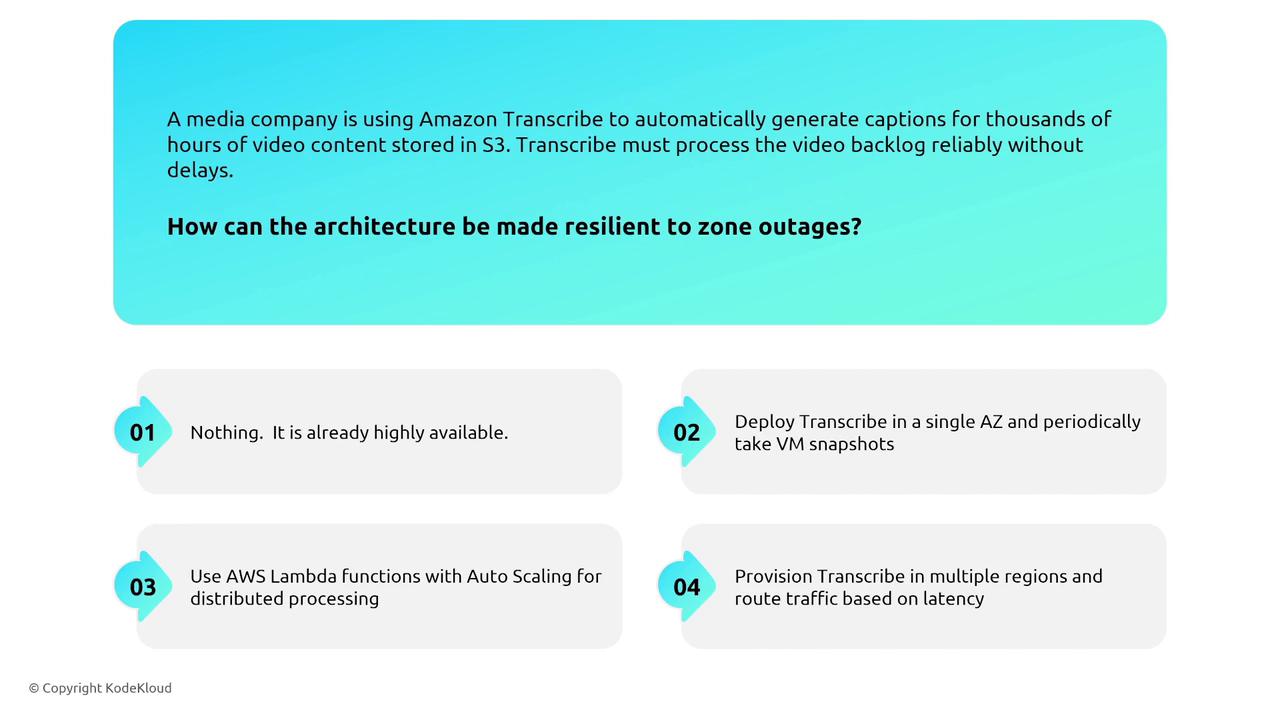

Amazon Transcribe converts audio to text at scale with built-in reliability features. Using Transcribe for tasks like video captioning leverages its API-driven, resilient design.

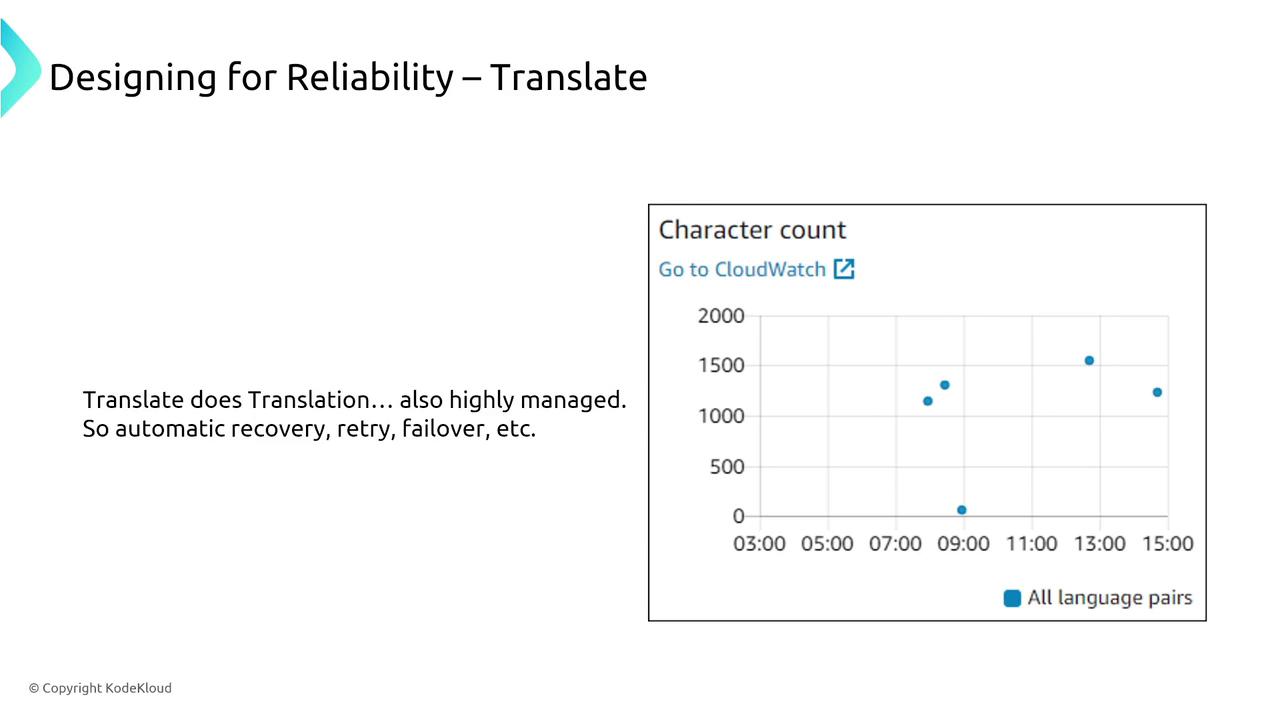

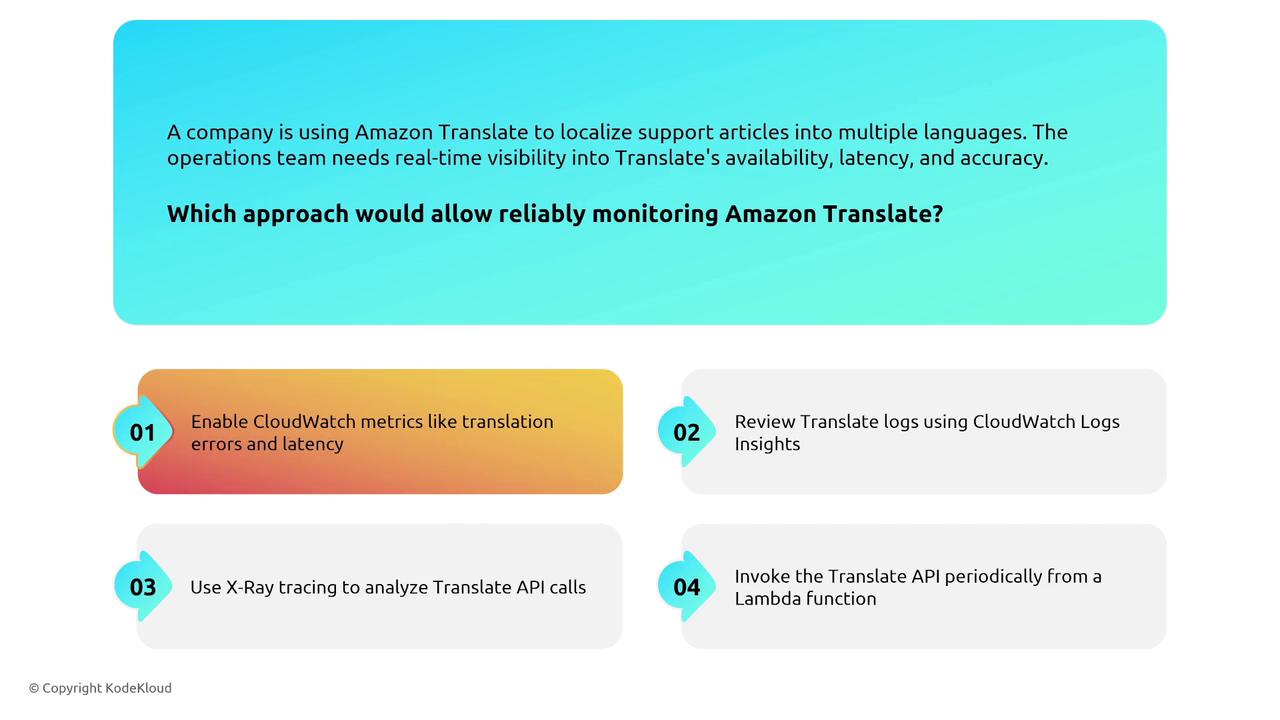

Amazon Translate provides robust language translation with automatic recovery, retries, and failover capabilities.

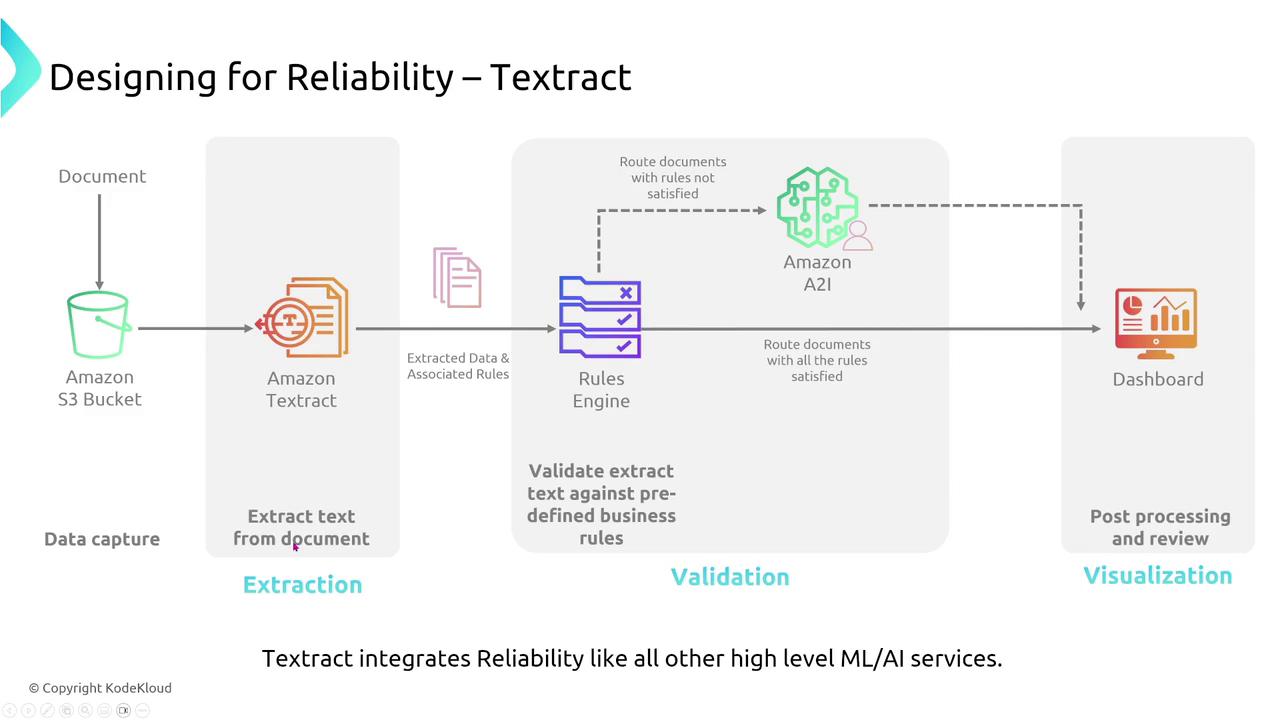

Amazon Textract extracts text from documents and images with high accuracy and reliability. Integrated monitoring with CloudWatch and AWS X-Ray (if needed) provides further operational visibility.

Summary

This article covered a broad range of AWS services—from data ingestion with Kinesis and MSK, through transformation with Glue and EMR, to advanced machine learning and AI services like SageMaker, Rekognition, Polly, Lex, Comprehend, Transcribe, Translate, and Textract. Key reliability features include:

- Auto scaling and automatic recovery.

- Cross-AZ and multi-region deployments.

- Integrated monitoring using CloudWatch and CloudTrail.

Highly managed services such as QuickSight, Lake Formation, and the language services are designed to be resilient by default, reducing the operational overhead required to maintain continuous availability and reliability.

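In conclusion, many AWS services scale and recover automatically, while others, such as Kinesis in provisioned mode, MSK, EMR, and SageMaker, still need deliberate sizing, multi-AZ placement, and monitoring to keep your data and AI architectures robust under diverse workloads.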

Thank you for reading, and we hope you now have a better understanding of how to design and maintain reliability across various AWS data and AI services.
