Generative AI in Practice: Advanced Insights and Operations

Prompting Techniques in LLMs

Chain of Thought Prompting

Chain-of-thought prompting is a powerful approach that instructs models to break down complex tasks into smaller, manageable steps before reaching a final answer. This technique encourages a deliberate reasoning process, making it particularly beneficial for problems that involve multiple sub-tasks or require detailed reasoning.

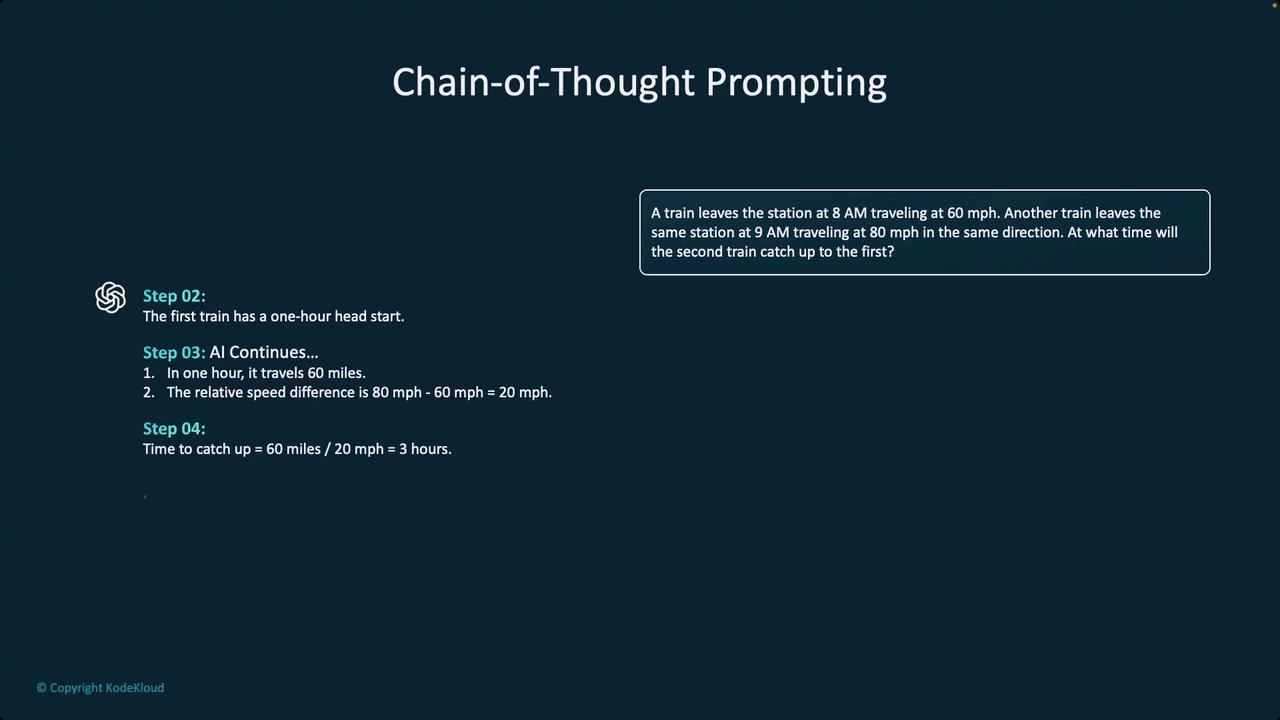

With chain-of-thought prompting, a model can tackle problems such as working out when a faster train will catch up to a slower one. The problem is split into smaller questions about departure times, speeds, and distances. Each step is worked through in order, and the intermediate results are combined to produce the final answer.

Key Benefit

Chain-of-thought prompting helps models produce higher-quality responses by breaking down complex problems into clear, sequential steps.
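To make the technique concrete, here is a minimal sketch of how a chain-of-thought prompt differs from a direct (zero-shot) prompt. It only builds prompt strings and parses a completion; it does not call any model. The helper names (`build_direct_prompt`, `build_cot_prompt`, `extract_final_answer`), the instruction wording, and the `Answer:` marker convention are all hypothetical choices for illustration, not part of any library or of the source.

```python
from typing import Optional

# Hypothetical instruction text; the exact wording is an assumption.
COT_INSTRUCTION = (
    "Break the problem into smaller steps, solve each step in order, "
    "and then state the final answer on a line starting with 'Answer:'."
)


def build_direct_prompt(question: str) -> str:
    """Zero-shot prompt: ask for the answer with no reasoning instruction."""
    return f"Question: {question}\nAnswer:"


def build_cot_prompt(question: str) -> str:
    """Chain-of-thought prompt: ask the model to reason step by step first."""
    return f"Question: {question}\n{COT_INSTRUCTION}\nLet's think step by step."


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if absent."""
    for line in reversed(completion.splitlines()):
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            return stripped[len("Answer:"):].strip()
    return None


if __name__ == "__main__":
    q = "A train leaves at 8 a.m.; a faster one leaves later. When does it catch up?"
    print(build_direct_prompt(q))
    print()
    print(build_cot_prompt(q))
    # Hand-written sample completion, not real model output.
    sample = "Step 1: find the head start.\nStep 2: find the closing speed.\nAnswer: 11 a.m."
    print(extract_final_answer(sample))
```

Asking for the final answer on a marked line keeps the step-by-step reasoning readable while still letting a program pick out the result.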

A notable extension of this strategy appears in OpenAI's o1 models, which reason through a chain of thought before answering. Early tests with o1 showed that models using chain-of-thought reasoning often outperform similarly sized models that answer zero-shot. The effectiveness of this approach still depends on the task, however; it is not a one-size-fits-all solution.

Chain-of-thought prompting is especially useful for tasks that require multi-step reasoning. Take the problem: "A train leaves the station at 8 a.m. and travels at a set speed; when will another train, departing later at a different speed, catch up?" Solving it means breaking it into parts that address time calculations, the speed differential, and distance evaluations.
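The decomposition can be sketched as a short calculation where each step mirrors one reasoning step. The source gives no numbers, so the values used here (first train at 8:00 and 60 km/h, second train at 9:00 and 90 km/h) are assumed purely for illustration, and `catch_up_time` is a hypothetical helper name.

```python
from datetime import datetime, timedelta


def catch_up_time(depart_slow: datetime, speed_slow_kmh: float,
                  depart_fast: datetime, speed_fast_kmh: float) -> datetime:
    """Return when the later, faster train reaches the earlier, slower one."""
    # Step 1 (time): how long the slow train travels alone.
    head_start_h = (depart_fast - depart_slow).total_seconds() / 3600
    # Step 2 (distance): the gap the fast train has to close.
    gap_km = speed_slow_kmh * head_start_h
    # Step 3 (speed differential): how quickly the gap shrinks.
    closing_speed_kmh = speed_fast_kmh - speed_slow_kmh
    if closing_speed_kmh <= 0:
        raise ValueError("the later train must be faster to catch up")
    # Step 4 (combine): time to close the gap, added to the second departure.
    hours_to_close = gap_km / closing_speed_kmh
    return depart_fast + timedelta(hours=hours_to_close)


# Assumed example values: 1 h head start -> 60 km gap, 30 km/h closing speed -> 2 h.
t = catch_up_time(datetime(2024, 1, 1, 8, 0), 60,
                  datetime(2024, 1, 1, 9, 0), 90)
print(t.strftime("%H:%M"))  # prints "11:00"
```

Each intermediate value (head start, gap, closing speed) is the kind of partial result a chain-of-thought response would state explicitly before giving the final time.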

While larger models tend to perform well even without explicit chain-of-thought prompting, this structured, step-by-step reasoning is particularly advantageous for midsize models. By following a deliberate analytical process, midsize models can often deliver better results without requiring a significant increase in model size.

Overall, chain-of-thought prompting is an essential technique for problems that decompose naturally into smaller components. It improves the accuracy and quality of responses and gives AI models a more systematic approach to problem-solving in complex scenarios.
